Tutorial on Adversarial Machine Learning - Part 1

The Good, The Bad, The Ugly

The Good

(a.k.a. The Age of Deep Neural Networks)

Deep Neural Networks (DNNs) have revolutionized various domains, showcasing remarkable achievements in computer vision.

The Bad

(a.k.a. The Dark Side of DNNs)

While DNNs have achieved unprecedented success and widespread adoption, their reliability and security remain a concern. DNNs are known as black-box models, meaning that their internal workings are not transparent to users, or even to their creators. This lack of transparency makes it difficult to understand their behavior and trust their decisions.

For some low-stakes applications, such as fraud transaction detection or movie recommendation, it is not a big deal if the model makes a mistake: the consequences of incorrect predictions are not severe. However, for high-stakes applications such as autonomous driving, clinical diagnostics, or auto-trading bots, where the model’s decisions can lead to life-threatening conditions or economic collapse, it is crucial to understand the model’s behavior and trust its decisions. Just imagine that you have a serious disease and a machine learning model recommends a specific medicine: would you trust the model’s decision and take it?

| AI’s application | AI’s risk | Consequence |
|---|---|---|
| Commercial ads recommendation | Matching user interests incorrectly | Seeing irrelevant ads |
| Auto-trading bot | Triggering a wrong signal | Financial loss |
| Autopilot in Tesla | Misclassifying a stop sign | Fatal crash |
| Autonomous drone swarms | Wrong targeting/attacking | Fatal mistake, many deaths |

Some real-life examples of catastrophic failures of unreliable DNNs:

- Tesla behind eight-vehicle crash was in ‘full self-driving’ mode

- IBM’s Watson supercomputer recommended ‘unsafe and incorrect’ cancer treatments

- A fake AI photo of a Pentagon blast wiped billions off Wall Street

Conclusion: The more autonomous the AI system is, the more important it is to understand the model’s behavior and trust its decisions.

The Ugly

(a.k.a. Adversarial Examples)

In addition to their lack of transparency, DNNs are also vulnerable to adversarial attacks, including backdoor attacks, poisoning attacks, and adversarial examples.

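A notable work from Szegedy et al. (2014) showed that DNNs can be fooled by adding small, carefully crafted perturbations, imperceptible to humans, to their inputs.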

The above example illustrates an adversarial example generated against a pre-trained ResNet50 model. The image on the left is the original image of a koala, which is correctly classified as a koala with nearly 50% confidence. The image in the middle is the adversarial perturbation, which is imperceptible to the human eye. The image on the right is the adversarial example generated from the original image on the left. It is misclassified as a balloon with nearly 100% confidence.
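To make the idea concrete, here is a minimal sketch of how an adversarial example can be crafted. It swaps in the Fast Gradient Sign Method (FGSM, Goodfellow et al., 2015) and a hypothetical toy logistic-regression model written in NumPy instead of ResNet50, so it is not the procedure used to produce the koala figure. The helper names (`input_gradient`, `fgsm`) and the toy weights are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(x, w, b):
    """P(class 1 | x) under a toy logistic-regression model."""
    return sigmoid(x @ w + b)

def input_gradient(x, y, w, b):
    """Gradient of the binary cross-entropy loss w.r.t. the input x.
    For logistic regression this is (p - y) * w."""
    return (predict_proba(x, w, b) - y) * w

def fgsm(x, y, w, b, eps):
    """FGSM: one step of size eps along the sign of the input gradient,
    clipped back to the valid pixel range [0, 1]."""
    x_adv = x + eps * np.sign(input_gradient(x, y, w, b))
    return np.clip(x_adv, 0.0, 1.0)

# Hypothetical toy setup: 1000 "pixels" at 0.5, weights alternating +/-0.1.
d = 1000
w = np.where(np.arange(d) % 2 == 0, 0.1, -0.1)
b = 1.0
x = np.full(d, 0.5)   # clean input, true label y = 1
y = 1.0
eps = 0.02            # per-pixel change of about 5/255

x_adv = fgsm(x, y, w, b, eps)
print(f"clean p(y=1) = {predict_proba(x, w, b):.3f}")            # 0.731
print(f"adversarial p(y=1) = {predict_proba(x_adv, w, b):.3f}")  # 0.269
print(f"max pixel change = {np.max(np.abs(x_adv - x)):.3f}")     # 0.020
```

Each pixel moves by only 0.02, yet because all 1000 small changes push the logit in the same direction, the logit drops from 1 to -1 and the prediction flips. This accumulation over many input dimensions is the linearity intuition Goodfellow et al. used to explain adversarial examples.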

The Efforts

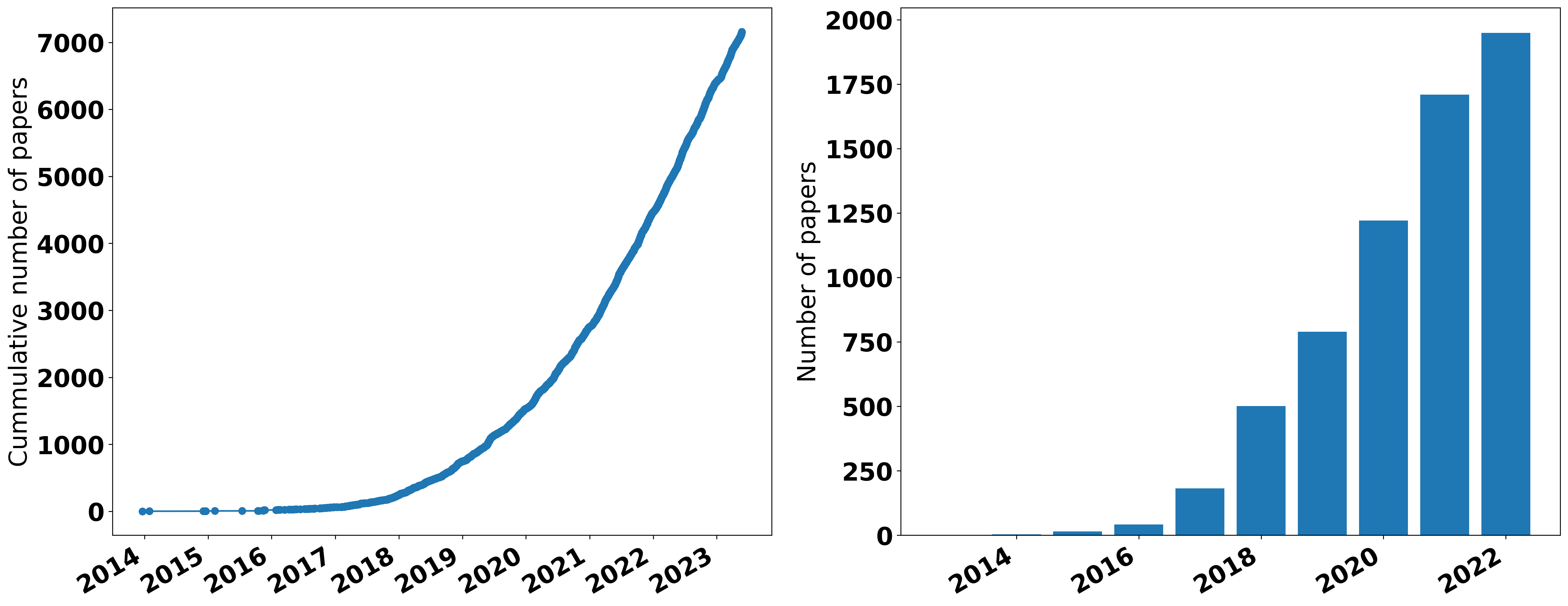

Since the discovery of adversarial examples [4], the topic has become an extensive research area, with the number of papers increasing exponentially, as shown in the figure above.

On the one hand, various attack methods have been proposed to enhance attack effectiveness.

On the other hand, there is also an extremely large number of defense methods proposed to mitigate adversarial attacks, from all aspects of the machine learning pipeline.

- Architecture-based defenses: ensemble models, distillation, quantization, model pruning, smooth activation functions, etc.

- Preprocessing-based defenses: transformations that remove adversarial perturbations, such as JPEG compression, etc.

- Postprocessing-based defenses: detecting adversarial examples, etc.

- Training-based defenses: adversarial training, regularization, etc.
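Of these, adversarial training is the most direct to sketch: it solves a min-max problem, training on worst-case perturbed inputs instead of clean ones. Below is a minimal NumPy sketch, assuming a hypothetical toy logistic-regression model, synthetic Gaussian data, and a single FGSM step as the inner maximization (PGD-based training, as in Madry et al., 2018, would use several steps). All names and hyperparameters are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(X, y, w, b, eps):
    """One FGSM step per example; the BCE input-gradient of logistic
    regression is (p - y) * w for each row of X."""
    p = sigmoid(X @ w + b)
    grad_X = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_X)

def adversarial_training(X, y, eps=0.1, lr=0.1, epochs=200):
    """Minimize the loss on perturbed inputs, with the inner maximization
    approximated by a single FGSM step (full-batch gradient descent)."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        X_adv = fgsm_perturb(X, y, w, b, eps)   # inner maximization
        p = sigmoid(X_adv @ w + b)
        w -= lr * (X_adv.T @ (p - y)) / n       # outer minimization step
        b -= lr * np.mean(p - y)
    return w, b

# Hypothetical toy data: two well-separated 2-D Gaussian blobs.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, (100, 2)), rng.normal(2.0, 0.5, (100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, b = adversarial_training(X, y, eps=0.1)
clean_acc = np.mean((sigmoid(X @ w + b) > 0.5) == y)
adv_acc = np.mean((sigmoid(fgsm_perturb(X, y, w, b, 0.1) @ w + b) > 0.5) == y)
print(f"clean accuracy: {clean_acc:.2f}, FGSM accuracy: {adv_acc:.2f}")
```

The only change from standard training is the `fgsm_perturb` call inside the loop: the model never sees clean inputs during training, only inputs pushed toward its own decision boundary.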

Despite numerous defense strategies being proposed to counter adversarial attacks, no method has yet provided comprehensive protection or completely illuminated the vulnerabilities of DNNs.

The Difficulties

(of Evaluating Adversarial Robustness)

Evaluating robustness against adversarial examples is a lot trickier than your usual machine learning model evaluation. This is mainly because adversarial examples don’t just pop up naturally: you have to create them using an adversary, i.e., an attack algorithm. And let’s just say, crafting these examples so that they honestly reflect the threat model takes a lot of genuine effort.

Now, there’s this thing called gradient masking that folks often use to prevent gradient information from being used to craft adversarial examples. Attacks like PGD, for instance, need to compute the gradients of the model’s loss function with respect to the input to create adversarial examples. But sometimes, due to the way the model is built or trained, you might not be able to get the precise gradients you need. This throws a wrench in the works of gradient-based attacks and can make a model look more robust than it really is.
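Below is a minimal sketch of gradient masking, assuming a hypothetical toy "defense" that quantizes inputs to a 1/8 grid before a linear classifier. Because rounding has zero derivative almost everywhere, the exact input gradient is zero and a gradient-sign attack does nothing, so the model looks robust. Treating the rounding as the identity on the backward pass (the straight-through idea behind BPDA, Athalye et al., 2018) recovers a useful gradient, and the same attack then succeeds at the same budget. The model, `LEVELS`, and the helper names are illustrative assumptions.

```python
import numpy as np

LEVELS = 8  # hypothetical "defense": snap every pixel to a multiple of 1/8

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def quantize(x):
    return np.round(x * LEVELS) / LEVELS

def predict_proba(x, w, b):
    """P(class 1 | x) for a linear classifier applied to quantized inputs."""
    return sigmoid(quantize(x) @ w + b)

def exact_input_gradient(x, y, w, b):
    # Chain rule through quantize(): its derivative is 0 almost everywhere,
    # so the true input gradient vanishes (the masked gradient).
    p = predict_proba(x, w, b)
    dq_dx = np.zeros_like(x)
    return (p - y) * w * dq_dx

def bpda_input_gradient(x, y, w, b):
    # Straight-through / BPDA-style approximation: keep quantize() on the
    # forward pass, but treat it as the identity (dq/dx = 1) on the backward pass.
    p = predict_proba(x, w, b)
    return (p - y) * w

def fgsm(x, y, w, b, eps, grad_fn):
    return np.clip(x + eps * np.sign(grad_fn(x, y, w, b)), 0.0, 1.0)

d = 1000
w = np.where(np.arange(d) % 2 == 0, 0.1, -0.1)
b, x, y = 1.0, np.full(d, 0.5), 1.0
eps = 0.07  # larger than half a quantization step (1/16), so it survives rounding

x_masked = fgsm(x, y, w, b, eps, exact_input_gradient)
x_bpda = fgsm(x, y, w, b, eps, bpda_input_gradient)
print(predict_proba(x_masked, w, b) > 0.5)  # True: attack "fails", model looks robust
print(predict_proba(x_bpda, w, b) > 0.5)    # False: same model and budget, now fooled
```

The model is exactly as vulnerable in both runs; only the gradient the attacker uses differs. That is why a failed gradient-based attack alone is weak evidence of robustness.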

Also, adversarial attacks can be quite picky about specific settings. Like, a PGD attack might work great with a certain step size, number of iterations, and scale of logits, but not so well with others. Transfer attacks, on the other hand, depend heavily on the model you choose as a substitute. So, you’ve got to make sure you’re evaluating robustness under lots of different settings.
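The sensitivity to settings can be illustrated with a minimal sketch, assuming a hypothetical toy linear model in NumPy: the same L-infinity PGD attack is run under three configurations, one of which only rescales the logits (this never changes predictions, but it saturates the sigmoid so the gradient becomes exactly zero). An input counts as robust only if it survives every configuration, i.e., a per-example worst-case evaluation. The configurations and the `pgd_linf`/`is_fooled` helpers are illustrative assumptions, not a standard protocol.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def pgd_linf(x, y, w, b, eps, step, iters, logit_scale=1.0):
    """L-infinity PGD against a toy logistic-regression model whose logits are
    multiplied by logit_scale (this rescaling never changes its predictions)."""
    x_adv = x.copy()
    for _ in range(iters):
        p = sigmoid(logit_scale * (x_adv @ w + b))
        grad = (p - y) * logit_scale * w            # d(BCE)/dx
        x_adv = x_adv + step * np.sign(grad)        # gradient-sign ascent step
        x_adv = np.clip(x_adv, x - eps, x + eps)    # project onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)            # keep pixels valid
    return x_adv

def is_fooled(x_adv, y, w, b):
    predicted = int(x_adv @ w + b > 0)
    return predicted != y

d = 1000
w = np.where(np.arange(d) % 2 == 0, 0.1, -0.1)
b, x, y, eps = 1.0, np.full(d, 0.5), 1, 0.03

configs = [
    dict(step=0.001, iters=5, logit_scale=1.0),     # too few/small steps: budget unused
    dict(step=0.01, iters=10, logit_scale=1.0),     # reaches the eps boundary
    dict(step=0.01, iters=10, logit_scale=1000.0),  # sigmoid saturates, gradient is 0
]
results = []
for cfg in configs:
    fooled = is_fooled(pgd_linf(x, y, w, b, eps, **cfg), y, w, b)
    results.append(fooled)
    print(cfg, "fooled" if fooled else "survived")

# Worst-case verdict: robust only if the input survives every configuration.
robust_under_worst_case = not any(results)
print("robust:", robust_under_worst_case)
```

The model is equally vulnerable in all three runs; only the attack settings differ. Reporting just the first or third run would suggest robustness that is not there, which is why the worst case across configurations is the safer number to report.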

For a thorough discussion of these pitfalls, see On Evaluating Adversarial Robustness by Carlini et al. (2019).

The Privilege

(a.k.a. AML never dies and AML’s researchers are never unemployed)

This is my humble and fun opinion, so please don’t take it seriously. IMHO, researchers in AML have several privileges that researchers in other fields don’t have:

- Privilege 1: Police-check job. Whenever a new architecture or model is proposed, we can always ask some straightforward questions that are worth asking precisely because nobody has answered them yet: “Is this architecture/model robust to adversarial attacks?” or “Is it biased toward specific features, such as texture?” Then we can run extensive empirical studies to answer them. Examples include Vision Transformers, multimodal models, and, more recently, diffusion models and LLMs. With new architectures and models appearing so rapidly, it is safe to say that we will never run out of these police-check tasks.

- Privilege 2: We create our own jobs. Our Attack vs. Defend game can create a lot of jobs. Even with a single ConvNet architecture, we have seen a thousand papers on adversarial attacks and defenses, and many of them follow the same two strategies: “our adversary can break your defense” or “our defense can defend against your adversary”. While the game is still on, some milestone papers have set higher bars for both sides, such as On Evaluating Adversarial Robustness by Carlini et al. (2019) and RobustBench by Croce et al. (2021).

- Privilege 3: Easy ideas, or the interchange between AML and other topics. People from other fields can bring their knowledge to solve AML problems, or study adversarial robustness problems in their own fields. For example, long-tailed distributions, continual learning, knowledge distillation, and model compression are topics where I have seen several papers in this spirit.

- Privilege 4: The security assumption makes life easier. The standard assumption in security research is that you never know how strong the adversary is, or will be. Your system may be safe today against current technology, but you cannot guarantee 100% that it will still be safe in the future, when the adversary has more powerful tools, or simply because an insider leaks your secrets. Therefore, you have to assume, and defend against, the worst-case scenario: a white-box attack. This assumption makes life easier for us because we can always assume that the adversary has full knowledge of our system and can do whatever they want to break it. From there, with some creativity, we can come up with a lot of ideas and scenarios on both the attack and defense sides. However, while this convenient assumption is sound in academia, it is not practical in real life (and it makes it harder for AML researchers like me to find an industry job). By restricting the adversary’s power, we can arrive at more practical and useful solutions.