Erasing Undesired Concepts from Diffusion Models

Resources

- Conditional Diffusion Models: https://tuananhbui89.github.io/blog/2023/conditional-diffusion/

Introduction

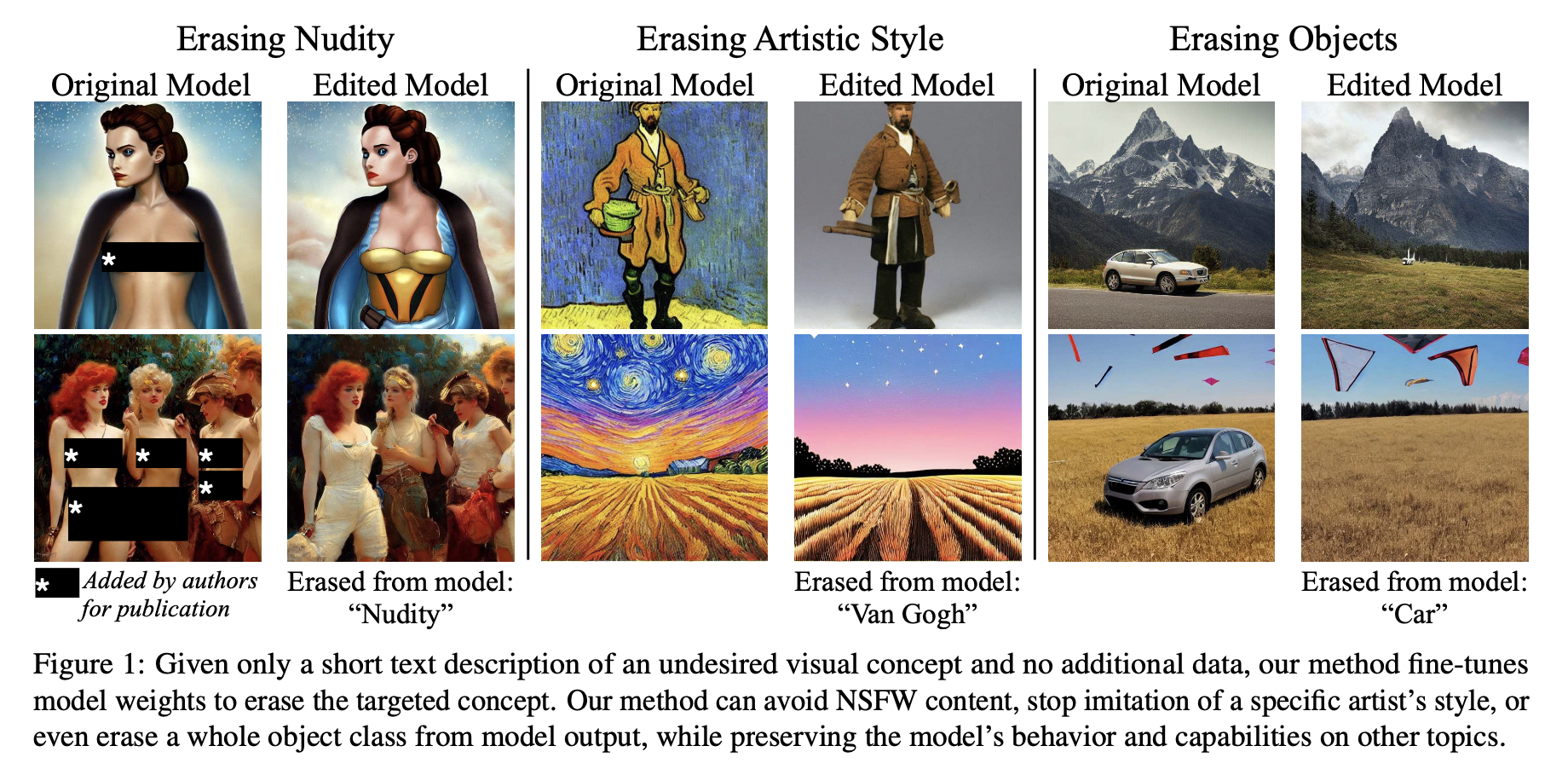

Given a pre-trained generative model such as Stable Diffusion, we aim to remove the model's ability to generate a specific concept or keyword, for example “Barack Obama”. After unlearning that concept, the model should no longer produce a meaningful image for any prompt containing this keyword, while retaining its capability for everything else.

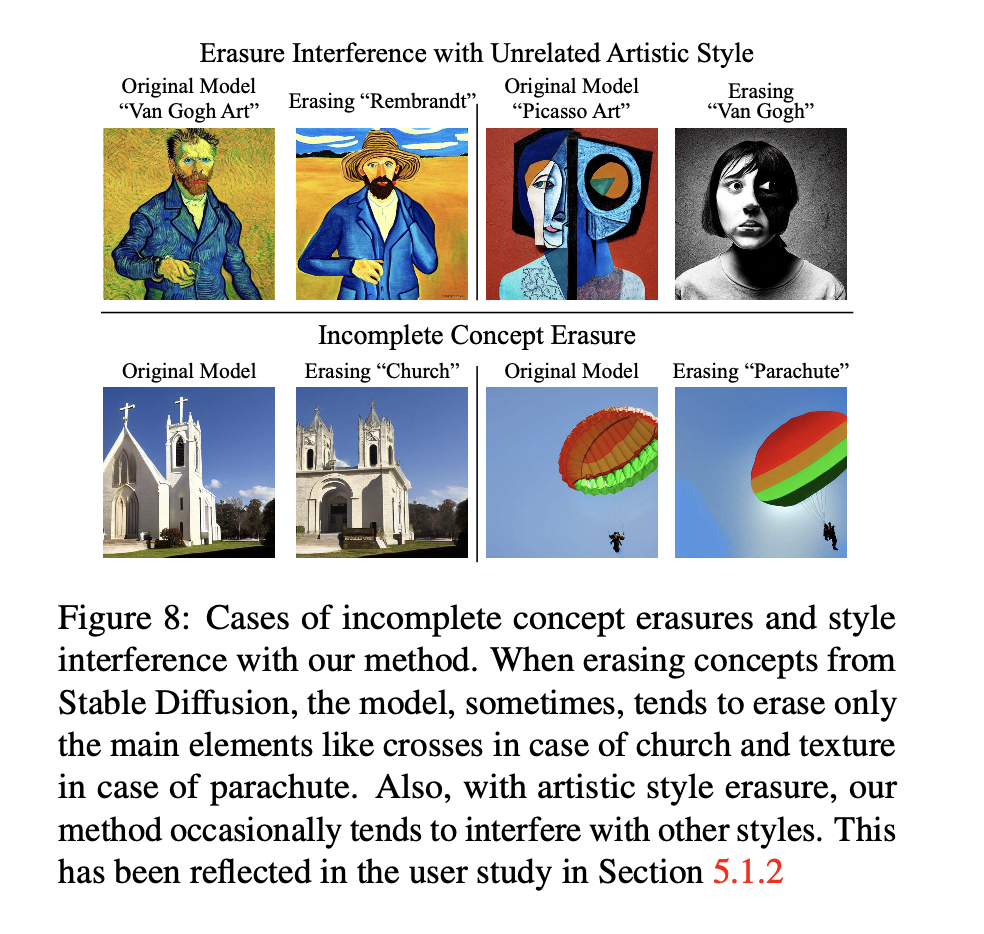

This idea has been proposed in the paper “Erasing Concepts from Diffusion Models”. However, there are some limitations of this paper:

- The concept is not erased completely. The method only reduces the probability of generating an image according to the likelihood described by the concept, scaled by a power factor \(\eta\).

- The concept to be erased needs to be associated with a specific keyword (e.g., “Barack Obama” or “nudity” or “gun”). However, a concept can be described in many different ways, for example, “Barack Obama” can be described as “the 44th president of the United States” or “the first African American president of the United States”. Therefore, erasing the keyword “Barack Obama” alone cannot erase every description of the concept.

- When erasing a specific concept (e.g., “Barack Obama”), related concepts (e.g., “Donald Trump”) can also be erased.

- The erased concept can be recovered by an adversary who crawls images of this concept from the Internet and uses Textual Inversion on them.

Therefore, we propose a new idea to erase a specific concept completely, without affecting other related concepts. The idea is to utilize an auxiliary classifier to classify the concept (or set of concepts) and then use the gradient of the classifier to guide the unlearning process in the diffusion model.

Terminology

- \(\theta\): the parameters of the fine-tuned generative model from which the undesired concept \(c\) is erased.

- \(\theta^*\): the parameters of the base generative model.

- \(\psi^*\): the parameters of the base classifier to guide the generation process of the base generative model to generate images with a specific concept \(c\).

- \(\phi\): the parameters of the auxiliary classifier to serve as the classifier to distinguish between the images with the concept \(c\) and the images without the concept \(c\).

- \(x_t^n\): the image generated at the time step \(t\) with the null concept \(n\). Sometimes, we use \(x_t\) instead of \(x_t^n\) for simplicity.

- \(x_t^c\): the image generated at the time step \(t\) with the undesired concept \(c\).

- \(\epsilon_\theta (x_t, t)\): the predicted noise at the time step \(t\) of the fine-tuned diffusion model \(\theta\) with input image \(x_t\).

- \(\epsilon_\theta (x_t, c, t)\): the predicted conditional noise at the time step \(t\) of the fine-tuned diffusion model \(\theta\) with input image \(x_t\) and concept \(c\).

Review of the paper “Erasing Concepts from Diffusion Models”

The paper aims to reduce the probability of generating an image \(x\) according to the likelihood that is described by the concept, scaled by a power factor \(\eta\).

\[P_{\theta} (x) \propto \frac{P_{\theta^*} (x)}{P_{\theta^*} (c \mid x)^\eta}\]where \(P_{\theta^*} (x)\) is the likelihood of the image \(x\) under the original model, \(P_{\theta^*} (c \mid x)\) is the likelihood of the concept \(c\) given the image \(x\) under the original model, and \(\theta\) is the parameters of the model after unlearning the concept \(c\).

It can be interpreted as follows: if the concept \(c\) is present in the image \(x\), so that \(P_{\theta^*} (c \mid x)\) is high, then the likelihood of the image \(x\) under the new model \(P_{\theta} (x)\) is reduced. If the concept \(c\) is not present in the image \(x\), so that \(P_{\theta^*} (c \mid x)\) is low, then the likelihood of the image \(x\) under the new model \(P_{\theta} (x)\) is increased.
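To make the reweighting concrete, here is a minimal sketch on a toy discrete set of three "images". The probabilities and the helper name `erase_reweight` are illustrative assumptions, not values from the paper.

```python
import numpy as np

def erase_reweight(p_x, p_c_given_x, eta):
    """Return P_theta(x) proportional to P*(x) / P*(c|x)^eta over a finite set."""
    unnorm = p_x / np.power(p_c_given_x, eta)
    return unnorm / unnorm.sum()

# Hypothetical toy numbers: three images with equal probability under the base model.
p_x = np.array([1 / 3, 1 / 3, 1 / 3])
# Image 0 strongly shows the concept, image 2 barely shows it.
p_c_given_x = np.array([0.9, 0.5, 0.1])

p_new = erase_reweight(p_x, p_c_given_x, eta=1.0)
print(p_new)  # mass moves from image 0 toward image 2
```

Note that the image showing the concept keeps a nonzero probability for any finite \(\eta\), which is the first limitation listed in the introduction.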

By Bayes' rule:

\[P_{\theta^*} (c \mid x) = \frac{P_{\theta^*} (x \mid c) P_{\theta^*} (c)}{P_{\theta^*} (x)}\]Therefore, taking the gradient of the logarithm of the above equation w.r.t. \(x\) gives:

\[\nabla_{x} \log P_{\theta} (x) \propto \nabla_{x} \log P_{\theta^*} (x) - \eta \nabla_{x} \log P_{\theta^*} (c \mid x)\]

\[\nabla_{x} \log P_{\theta} (x) \propto \nabla_{x} \log P_{\theta^*} (x) - \eta (\nabla_{x} \log P_{\theta^*} (x \mid c) + \nabla_{x} \log P_{\theta^*} (c) - \nabla_{x} \log P_{\theta^*} (x))\]

\[\nabla_{x} \log P_{\theta} (x) \propto \nabla_{x} \log P_{\theta^*} (x) - \eta (\nabla_{x} \log P_{\theta^*} (x \mid c) - \nabla_{x} \log P_{\theta^*} (x))\]

The last step holds because \(P_{\theta^*} (c)\) does not depend on \(x\), so its gradient vanishes. Because each step of the diffusion model is approximated by a Gaussian distribution, the gradient of the log-likelihood is computed as follows:

\[\nabla_{x} \log P_{\theta^*} (x) = -\frac{1}{\sigma^2} (x - \mu)\]where \(\mu\) is the mean and \(\sigma\) is the standard deviation of the Gaussian at that step. Based on the reparameterization trick \(x = \mu + \sigma \epsilon\), this gradient is proportional to the negated noise \(\epsilon\) at each step. Since the relation above is linear in the gradients, the common factor cancels and the same relation holds between the noise predictions:

\[\epsilon_{\theta}(x_t,t) \propto \epsilon_{\theta^*} (x_t,t) - \eta (\epsilon_{\theta^*}(x_t,c,t) - \epsilon_{\theta^*} (x_t,t))\]where \(\epsilon_{\theta}(x_t,t)\) is the noise at step \(t\) of the diffusion model after unlearning the concept \(c\). Finally, to fine-tune the diffusion model from pretrained model \(\theta^*\) to new cleaned model \(\theta\), the authors proposed to minimize the following loss function:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_0 \sim \mathcal{D}} \left[ \sum_{t=0}^{T-1} \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_t,t) + \eta (\epsilon_{\theta^*}(x_t,c,t) - \epsilon_{\theta^*} (x_t,t)) \right\|^2 \right]\]where \(x_0\) is the input image sampled from data distribution \(\mathcal{D}\), \(T\) is the number of steps of the diffusion model.

Instead of summing over every step, we can sample a time step \(t \sim \mathcal{U}(0, T-1)\) and evaluate the noise prediction \(\epsilon_{\theta}(x_t,t)\) only at that time step, which estimates the sum up to the constant factor \(T\). Therefore, the loss function can be rewritten as follows:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_0 \sim \mathcal{D}} \left[ \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_t,t) + \eta (\epsilon_{\theta^*}(x_t,c,t) - \epsilon_{\theta^*} (x_t,t)) \right\|^2 \right]\]where \(t \sim \mathcal{U}(0, T-1)\).
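The following sketch checks numerically that sampling \(t\) uniformly recovers the sum over all steps up to the factor \(T\). The per-step losses are random stand-ins (an assumption for illustration), not outputs of a real diffusion model.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 50

# Hypothetical per-step losses standing in for the squared-norm term at each t.
per_step_loss = rng.uniform(0.0, 1.0, size=T)

# Loss with the explicit sum over t = 0, ..., T-1.
full_sum = per_step_loss.sum()

# Monte Carlo version: sample t ~ U{0, ..., T-1}, average, and rescale by T.
t_samples = rng.integers(0, T, size=200_000)
mc_estimate = T * per_step_loss[t_samples].mean()

print(full_sum, mc_estimate)
```

Dropping the factor \(T\), as the sampled loss does, only rescales the gradient and can be absorbed into the learning rate.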

However, in the paper, instead of using the above loss function, the authors use the following loss function:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_0 \sim \mathcal{D}} \left[ \left\| \epsilon_{\theta}(x_t,c,t) - \epsilon_{\theta^*} (x_t,t) + \eta (\epsilon_{\theta^*}(x_t,c,t) - \epsilon_{\theta^*} (x_t,t)) \right\|^2 \right]\]where \(t \sim \mathcal{U}(0, T-1)\).

The difference between the two loss functions is that the first loss function is computed based on the unconditional noise \(\epsilon_{\theta}(x_t,t)\) at the time step \(t\) while the second loss function is computed based on the noise \(\epsilon_{\theta}(x_t,c,t)\) at the time step \(t\) conditioned on the concept \(c\).

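Interpretation of the loss function: By minimizing the above loss function, we force the conditional noise \(\epsilon_{\theta}(x_t,c,t)\) of the fine-tuned model toward the unconditional noise \(\epsilon_{\theta^*} (x_t,t)\) of the original model, shifted by \(\eta\) times the difference away from the original conditional noise \(\epsilon_{\theta^*}(x_t,c,t)\).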
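A minimal sketch of this loss, with random arrays standing in for the noise predictions of the original model. The function names, the image shape, and \(\eta = 1\) are assumptions made for illustration.

```python
import numpy as np

def esd_target(eps_uncond, eps_cond, eta):
    """Target noise: eps*(x_t, t) - eta * (eps*(x_t, c, t) - eps*(x_t, t))."""
    return eps_uncond - eta * (eps_cond - eps_uncond)

def esd_loss(eps_student_cond, eps_uncond, eps_cond, eta):
    """Squared L2 distance between eps_theta(x_t, c, t) and the target noise."""
    diff = eps_student_cond - esd_target(eps_uncond, eps_cond, eta)
    return float(np.sum(diff ** 2))

rng = np.random.default_rng(0)
shape = (3, 8, 8)  # hypothetical image shape
eps_uncond = rng.standard_normal(shape)  # stand-in for eps*(x_t, t)
eps_cond = rng.standard_normal(shape)    # stand-in for eps*(x_t, c, t)

# At initialization theta = theta*, so the student's conditional output is eps_cond.
loss_at_start = esd_loss(eps_cond, eps_uncond, eps_cond, eta=1.0)

# The loss is zero exactly when the student reproduces the target.
target = esd_target(eps_uncond, eps_cond, eta=1.0)
loss_at_target = esd_loss(target, eps_uncond, eps_cond, eta=1.0)
```

With \(\eta = 0\) the target reduces to the unconditional noise of the original model. At initialization the loss equals \((1 + \eta)^2\) times the squared distance between the conditional and unconditional noise.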

Our idea

The idea is to utilize an auxiliary classifier to classify the concept (or set of concepts) and then use the gradient of the classifier to guide the unlearning process in the diffusion model.

The central formulation is as follows:

\[P_{\theta} (x) \propto \frac{P_{\theta^*} (x)}{P_{\phi} (c \mid x)^\eta}\]where \(P_{\phi} (c \mid x)\) is the likelihood of the concept \(c\) given the image \(x\) under the auxiliary classifier.

Similarly, the derivative w.r.t. \(x\) is computed as follows:

\[\nabla_{x} \log P_{\theta} (x) \propto \nabla_{x} \log P_{\theta^*} (x) - \eta \nabla_{x} \log P_{\phi} (c \mid x)\]The loss function of the diffusion model is as follows:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_0 \sim \mathcal{D}} \left[ \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_t,t) + \eta \nabla_{x} \log P_{\phi} (c \mid x_t) \right\|^2 \right]\]where \(t \sim \mathcal{U}(0, T-1)\).
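The term \(\nabla_{x} \log P_{\phi} (c \mid x_t)\) is the gradient of the classifier's log-probability with respect to its input. The sketch below computes it in closed form for a toy linear softmax classifier and checks it against finite differences. The linear classifier, its sizes, and the omission of the time-step input are simplifying assumptions; with a real network this gradient would come from automatic differentiation.

```python
import numpy as np

def log_prob(x, W, b, c):
    """log P(c | x) for a linear softmax classifier with logits W @ x + b."""
    logits = W @ x + b
    m = logits.max()
    return logits[c] - (m + np.log(np.exp(logits - m).sum()))

def grad_log_prob(x, W, b, c):
    """Closed-form gradient of log P(c | x) w.r.t. x: W[c] - softmax(logits) @ W."""
    logits = W @ x + b
    p = np.exp(logits - logits.max())
    p /= p.sum()
    return W[c] - p @ W

rng = np.random.default_rng(0)
num_classes, dim = 4, 6  # hypothetical sizes
W = rng.standard_normal((num_classes, dim))
b = rng.standard_normal(num_classes)
x = rng.standard_normal(dim)
c = 2

g = grad_log_prob(x, W, b, c)

# Central finite differences as an independent check.
h = 1e-6
g_fd = np.array([
    (log_prob(x + h * e, W, b, c) - log_prob(x - h * e, W, b, c)) / (2 * h)
    for e in np.eye(dim)
])
```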

The loss function of the classifier is as follows:

\[\mathcal{L}(\phi) = \mathbb{E}_{x \sim \mathcal{D}} \left[ \log P_{\phi} (c \mid x) \right] - \mathbb{E}_{z \sim \mathcal{N} } \left[ \log P_{\phi} (c \mid G(z,c)) \right] + \mathbb{E}_{z \sim \mathcal{N} } \left[ \log P_{\phi} (c \mid G(z)) \right]\]where \(G(z,c)\) is the image generated from the noise \(z\) and the concept \(c\), and \(G(z)\) is the image generated from the noise \(z\) with the null concept.

The loss function of the classifier can be interpreted as follows: the classifier tries to distinguish between two sets of images: the set with concept \(c\), which is generated by the diffusion model, and the set without concept \(c\), which combines the training set \(\mathcal{D}\) and the images generated by the diffusion model with the null concept.

It is worth noting that the objective of the classifier can also be formulated as a contrastive learning problem.

The loss function of the diffusion model can be interpreted as follows: the diffusion model tries to generate images that are similar to the images generated by the original model, but that are unlikely to be classified as the concept \(c\) by the classifier.
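As a rough sketch, the classifier loss can be estimated from three batches of log-probabilities. The probability values below are made up for illustration, and `classifier_loss` is a hypothetical helper name.

```python
import numpy as np

def classifier_loss(logp_data, logp_gen_c, logp_gen_null):
    """Monte Carlo estimate of L(phi) from three batches of log P_phi(c | .).

    logp_data:     log-probs on training images x ~ D
    logp_gen_c:    log-probs on images G(z, c) generated with concept c
    logp_gen_null: log-probs on images G(z) generated with the null concept
    """
    return float(np.mean(logp_data) - np.mean(logp_gen_c) + np.mean(logp_gen_null))

# A classifier that separates the two sets well (hypothetical probabilities).
good = classifier_loss(
    np.log([0.05, 0.10]),  # training images: low P(c | x)
    np.log([0.90, 0.95]),  # images generated with c: high P(c | x)
    np.log([0.10, 0.05]),  # null-concept images: low P(c | x)
)

# An uninformative classifier that always outputs 0.5.
uninformative = classifier_loss(np.log([0.5, 0.5]), np.log([0.5, 0.5]), np.log([0.5, 0.5]))

print(good, uninformative)  # the separating classifier attains the lower loss
```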

Note

In the above equation, when calculating the loss function, the additional term is independent of the diffusion parameters \(\theta\) and serves as a bias term.

Implementation and Experimental Results

Base generative model

We use the classifier guidance model released by OpenAI as the base generative model. Link to the model can be found here.

In this repository, there are two models provided: the diffusion model and the classifier to guide the generation process. Both models are trained on the ImageNet dataset with 1000 classes.

There are several pretrained models associated with different resolutions and settings, including: 64x64, 128x128, 256x256, and 512x512. Because of limited computational resources, we only use the 64x64 and 128x128 models.

Choosing the concept to be erased

We need to choose several of the 1000 ImageNet classes as the target concepts to be erased. However, many ImageNet classes are quite similar to each other, for example, “dog” breeds or “car” types. These classes are challenging and conceptually ambiguous. Therefore, we use the ImageNet hierarchy provided by Mike Bostock to choose classes that are conceptually clear and distinct from each other. In the end, we choose the following classes:

- traffic light: 920

- toilet tissue: 999

- street sign: 919

- pretzel: 932

- menu: 922

- french loaf: 930

- carbonara: 959

- bubble: 971
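For convenience, the list above can be kept as a small lookup table. The names `ERASE_CANDIDATES` and `class_ids` are hypothetical; the indices are the ones listed above.

```python
# Candidate ImageNet classes for erasure, copied from the list above.
ERASE_CANDIDATES = {
    "traffic light": 920,
    "toilet tissue": 999,
    "street sign": 919,
    "pretzel": 932,
    "menu": 922,
    "french loaf": 930,
    "carbonara": 959,
    "bubble": 971,
}

def class_ids(names):
    """Map class names to ImageNet class indices, failing loudly on unknown names."""
    return [ERASE_CANDIDATES[name] for name in names]

erased = class_ids(["street sign", "traffic light"])
```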

A more advanced algorithm that can erase a specific concept even when it is highly entangled with other concepts might be proposed in the future. However, we believe that using an auxiliary classifier to learn and distinguish between these concepts is an appropriate direction to solve this problem.

Overall framework

The framework includes four components as follows:

- The base/original generative model \(\theta^*\). This model was trained unconditionally, therefore, it can generate images with any concept.

- The base/original classifier \(\psi^*\) to guide the generation process of the base generative model to generate images with a specific concept \(c\). This classifier is specific to the classifier guidance model, and should really be considered part of the generative model. A different conditional generative model such as Stable Diffusion has no guiding classifier; instead, it uses a text encoder to encode the concept \(c\) into the latent space.

- The auxiliary classifier \(\phi\) to serve as the classifier to distinguish between the images with the concept \(c\) and the images without the concept \(c\).

- The fine-tuned generative model \(\theta\) from which the undesired concept \(c\) is erased.

In this framework, the base classifier \(\psi^*\) is a classifier based on the U-Net encoder, which requires two inputs: the image \(x\) and the time step \(t\).

Version 1

For the auxiliary classifier \(\phi\), we use the same architecture as the base classifier \(\psi^*\), and initialize its parameters with the parameters of the base classifier \(\psi^*\). This means that the auxiliary classifier is a multi-class classifier with 1000 classes, the same as the base classifier \(\psi^*\).

We also do not fine-tune the auxiliary classifier \(\phi\) during the training of the fine-tuned generative model \(\theta\); therefore, \(\phi = \psi^*\) at all times.

The loss function of the diffusion model is as follows:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_t \sim P_{\theta}(. \mid c)} \left[ \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_t,t) + \eta \nabla_{x} \log P_{\phi} (c \mid x_t) \right\|^2 \right]\]where \(t \sim \mathcal{U}(0, T-1)\). \(x_t\) is the image generated at the time step \(t\) from the base diffusion model with the concept \(c\).

\(\epsilon_{\theta}(x_t,t)\) is the predicted noise at the time step \(t\) of the fine-tuned diffusion model \(\theta\). In the implementation of the diffusion model, this is modeled as a Gaussian distribution with the mean \(\mu\) and the standard deviation \(\sigma\) by a U-Net model. The U-Net also requires the concept \(c\) as an input; therefore, the correct formulation should be \(\epsilon_{\theta}(x_t,c,t)\) (similarly with null concept \(n\), \(\epsilon_{\theta}(x_n,t) = \epsilon_{\theta}(x_n,n,t)\)).

In this version, we use the mean \(\mu\) only.

Experiment results:

- Our approach with the 64x64 setting seems to work. The generated images are all meaningless and do not contain the concept \(c\).

Results at the time step \(t = 1\):

Null concepts:

Erased concepts (label 919: street sign, label 920: traffic light):

Results at the time step \(t = 5\):

Null concepts:

Erased concepts (label 919: street sign, label 920: traffic light):

Results at the time step \(t = 10\):

Null concepts:

Erased concepts (label 919: street sign, label 920: traffic light):

Results at the time step \(t = 22\):

Null concepts:

Erased concepts (label 919: street sign, label 920: traffic light):

Results at the time step \(t = 50\):

Null concepts:

Erased concepts (label 919: street sign, label 920: traffic light):

Version 2

In this version, we still use the same procedure on the auxiliary classifier \(\phi\).

However, inspired by the loss of the ESD model (the method from “Erasing Concepts from Diffusion Models”), we change the loss function of the diffusion model as follows:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_t \sim P_{\theta}(. \mid c), x_n \sim P_{\theta}(.)} \left[ \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_n,t) + \eta \nabla_{x} \log P_{\phi} (c \mid x_t) \right\|^2 \right]\]where \(x_n\) is the image generated at the time step \(t\) from the base diffusion model with the null concept.

Experiment results:

- Our approach with the 128x128 resolution does not seem to work. The generated images still contain the concept \(c\), which is unexpected.

Version 3

In this version, we change the loss function of the diffusion model as follows:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_t \sim P_{\theta}(. \mid c), x_n \sim P_{\theta}(.)} \left[ \left\| \epsilon_{\theta}(x_t,t) - \epsilon_{\theta^*} (x_n,t) \right\|^2 + \eta \left\| \nabla_{x} \log P_{\phi} (c \mid x_t) \right\|^2 \right]\]

Version 4

In this version, we add a term that helps the model remember the concepts we do not want to erase:

\[\mathcal{L}(\theta) = \mathbb{E}_{x_c \sim P_{\theta}(. \mid c), x_n \sim P_{\theta}(.)} \left[ \eta_{\text{forget}} \left\| \epsilon_{\theta}(x_c,t) - \epsilon_{\theta^*} (x_n,t) \right\|^2 + \eta_{\text{rem}} \left\| \epsilon_{\theta}(x_n,t) - \epsilon_{\theta^*} (x_n,t) \right\|^2 + \eta_{\text{bias}} \left\| \nabla_{x} \log P_{\phi} (c \mid x_c) \right\|^2 \right]\]where \(\eta_{\text{forget}}\), \(\eta_{\text{rem}}\), and \(\eta_{\text{bias}}\) are the weights of the forget term, the remember term, and the bias term, respectively.
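The three terms can be sketched as follows for a single sample. The arrays are toy stand-ins for the network outputs and the classifier gradient, and `version4_loss` is a hypothetical helper. The value \(\eta_{\text{rem}} = 1\) is an assumption, since only \(\eta_{\text{forget}}\) and \(\eta_{\text{bias}}\) are reported here.

```python
import numpy as np

def sq_norm(v):
    """Squared L2 norm of an array."""
    return float(np.sum(v ** 2))

def version4_loss(eps_theta_xc, eps_theta_xn, eps_base_xn, grad_logp_xc,
                  eta_forget, eta_rem, eta_bias):
    """Weighted sum of the forget, remember, and bias terms for one sample."""
    forget = sq_norm(eps_theta_xc - eps_base_xn)    # pull concept noise toward null noise
    remember = sq_norm(eps_theta_xn - eps_base_xn)  # keep null-concept behaviour unchanged
    bias = sq_norm(grad_logp_xc)                    # classifier-gradient term
    return eta_forget * forget + eta_rem * remember + eta_bias * bias

# Toy stand-ins chosen so that each term is easy to check by hand.
eps_base_xn = np.zeros(4)        # eps_{theta*}(x_n, t)
eps_theta_xc = np.ones(4)        # eps_theta(x_c, t)  -> forget term = 4
eps_theta_xn = 0.5 * np.ones(4)  # eps_theta(x_n, t)  -> remember term = 1
grad_logp_xc = 2.0 * np.ones(4)  # gradient of log P_phi(c | x_c) -> bias term = 16

loss = version4_loss(eps_theta_xc, eps_theta_xn, eps_base_xn, grad_logp_xc,
                     eta_forget=0.01, eta_rem=1.0, eta_bias=0.0)
```

At initialization, where \(\theta = \theta^*\), the remember term is exactly zero, so only the forget and bias terms drive the first update.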

Results at the time step t = 10, \(\eta_{\text{forget}} = 0.01\) and \(\eta_{\text{bias}} = 0\)

Sub-version 4.2 is the same as version 4, except that in the forget term we force the noise of the generated image \(x_c\) with concept \(c\) to be close to the noise of the generated image \(x_a\) with a specific alternative target concept \(a\).

\[\mathcal{L}(\theta) = \mathbb{E}_{x_c \sim P_{\theta}(. \mid c), x_a \sim P_{\theta}(. \mid a), x_n \sim P_{\theta}(.)} \left[ \eta_{\text{forget}} \left\| \epsilon_{\theta}(x_c,t) - \epsilon_{\theta^*} (x_a,t) \right\|^2 + \eta_{\text{rem}} \left\| \epsilon_{\theta}(x_n,t) - \epsilon_{\theta^*} (x_n,t) \right\|^2 + \eta_{\text{bias}} \left\| \nabla_{x} \log P_{\phi} (c \mid x_c) \right\|^2 \right]\]

Moreover, we also change the time step schedule from the uniform distribution \(t \sim \mathcal{U}(1, T)\) to a specific range \(t \sim \mathcal{U}(t_{\text{min}}, t_{\text{max}})\), for example, \(t_{\text{min}} = 160\) and \(t_{\text{max}} = 250\) where \(T = 250\).
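A minimal sketch of the restricted time-step sampling, assuming integer time steps with both endpoints included. The helper name and the batch size are hypothetical.

```python
import numpy as np

def sample_timesteps(rng, batch_size, t_min, t_max):
    """Draw integer time steps uniformly from [t_min, t_max], both inclusive."""
    # Generator.integers excludes the upper bound by default, hence t_max + 1.
    return rng.integers(t_min, t_max + 1, size=batch_size)

rng = np.random.default_rng(0)
T = 250

t_full = sample_timesteps(rng, 1000, 1, T)       # original schedule U(1, T)
t_range = sample_timesteps(rng, 1000, 160, 250)  # restricted schedule from the example
```

If the implementation indexes time steps from 0 to \(T - 1\), the bounds would need to be shifted down by one.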

Results at the time step t = 7, \(\eta_{\text{forget}} = 0.01\) and \(\eta_{\text{bias}} = 0\)

Results at the time step t = 8, \(\eta_{\text{forget}} = 0.01\) and \(\eta_{\text{bias}} = 0\)

Version 5

Given \(x_t\) from the (unconditional/standard) forward diffusion process, there are two approaches to find the noise \(\epsilon_t\):

- the unconditional (standard) \(\epsilon_\theta(x_t,t)\)

- the conditional \(\hat{\epsilon}_{\theta}(x_t,t,y,\phi) = \epsilon_\theta(x_t,t) - \sqrt{1 - \bar{\alpha}_t} \nabla_{x_t} \log p_\phi (y \mid x_t)\), as in the classifier guidance model

There are likewise two approaches to obtain the projected image \(\tilde{x}_0\):

- the unconditional (standard) \(\tilde{x}_0 = \frac{x_t - \sqrt{1 - \bar{\alpha}_t} \epsilon_\theta(x_t,t)}{\sqrt{\bar{\alpha}_t}}\)

- the conditional \(\tilde{x}_0 = \frac{x_t - \sqrt{1 - \bar{\alpha}_t} \hat{\epsilon}_{\theta}(x_t,t,y,\phi)}{\sqrt{\bar{\alpha}_t}}\)

Because we do not touch the classifier \(\phi\) and only fine-tune the diffusion model \(\theta\), in this version we use the second (conditional) approach to obtain \(\epsilon_t\) and \(\tilde{x}_0\) for the final objective loss.
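The two formulas can be sketched with NumPy as follows. The true noise stands in for \(\epsilon_\theta(x_t,t)\) and a random array stands in for the classifier gradient; both, along with the value of \(\bar{\alpha}_t\), are assumptions for illustration.

```python
import numpy as np

def guided_eps(eps, grad_logp, alpha_bar_t):
    """Classifier-guided noise: eps - sqrt(1 - alpha_bar_t) * grad log p_phi(y | x_t)."""
    return eps - np.sqrt(1.0 - alpha_bar_t) * grad_logp

def predict_x0(x_t, eps, alpha_bar_t):
    """Projected image: (x_t - sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_bar_t)."""
    return (x_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)

rng = np.random.default_rng(0)
shape = (3, 8, 8)   # hypothetical image shape
alpha_bar_t = 0.7   # hypothetical cumulative noise-schedule value

x0 = rng.standard_normal(shape)
noise = rng.standard_normal(shape)
# Forward diffusion: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.
x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise

# Unconditional projection: exact when the predicted noise equals the true noise.
x0_uncond = predict_x0(x_t, noise, alpha_bar_t)

# Conditional projection, with a random stand-in for the classifier gradient.
grad = rng.standard_normal(shape)
eps_hat = guided_eps(noise, grad, alpha_bar_t)
x0_cond = predict_x0(x_t, eps_hat, alpha_bar_t)
```

Substituting one formula into the other shows that the conditional projection differs from the unconditional one by \(\frac{1 - \bar{\alpha}_t}{\sqrt{\bar{\alpha}_t}} \nabla_{x_t} \log p_\phi (y \mid x_t)\).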

Version 6

Based on the Flow Matching idea, we have two follow-up ideas:

- At any arbitrary time step \(t\), we find an adversarial latent that is close to the latent of the erased image \(x_t^f\) but belongs to a different concept. We can then use this adversarial latent to guide the unlearning process. To find it, we can search for the most similar latent in other concepts with a CLIP-based model or with the auxiliary classifier, or generate it with an adversarial attack. Another option is to use the concept alignment loss, as in the paper “”, to find the most similar image in another concept.

- We use a transformation (e.g., blurring) on the image with concept \(c\) so that the transformed image looks close to the original image but the concept \(c\) can no longer be recognized. This transformed image can make the training more stable, because the change is smaller than making the image meaningless.

The main principle of the two ideas above is to make the training smoother and more stable, either by finding the most similar image in another concept or by transforming the image.
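As an illustration of the second idea, here is a minimal box blur written with NumPy. The kernel size, the edge padding, and the random test image are assumptions; whether a given blur strength actually removes a concept would have to be checked with the classifier.

```python
import numpy as np

def box_blur(image, k=3):
    """Mean filter with a k x k window (k odd) and edge padding; image has shape (H, W)."""
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.zeros_like(image, dtype=float)
    h, w = image.shape
    # Sum the k * k shifted copies of the padded image, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(16, 16))  # hypothetical grayscale image
blurred = box_blur(image, k=3)
```

A larger `k` removes more detail, moving the result further from the original image.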