Anti-Personalized Generative Models

Introduction

- Defender’s goal: generate a perturbation that can be added to the input images to prevent these images from then being learned by a personalized method.

- Attacker’s goal: get the perturbed images and use them to personalize the model.

What are the differences between this idea and the Anti-Dreambooth idea?

- We focus on a specific set of keywords or concepts.

- The perturbed images can still be learned by a personalized method, but the personalized model cannot generate meaningful images if a prohibited keyword/concept appears in the prompt.

Setup

Data to learn:

- Just 4 images, collected from Hugging Face

Version 2

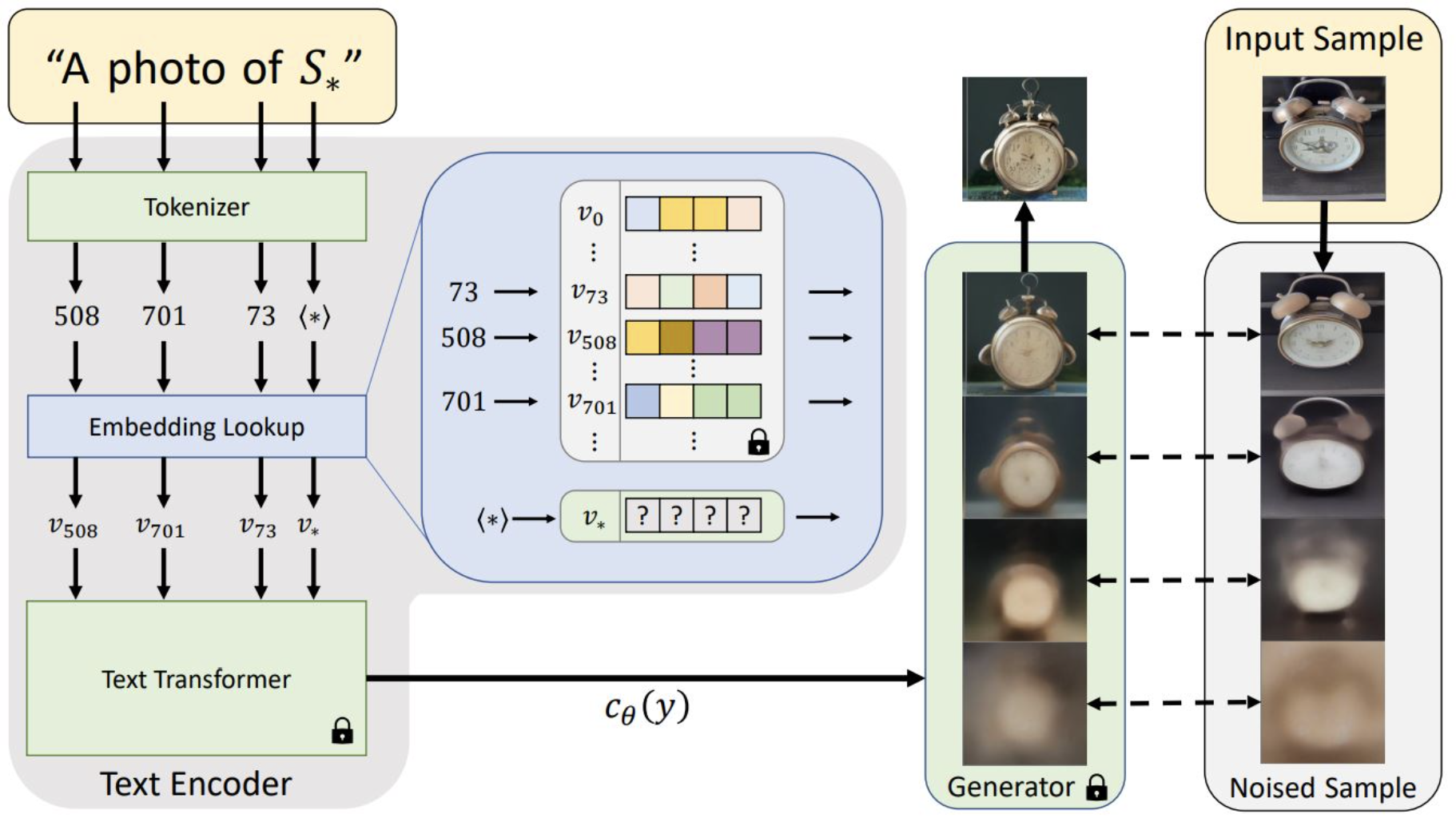

Recap: Textual Inversion

Tokenization and Text Embedding

Given a prompt \(y\), we tokenize it into a sequence of tokens \(y = (y_1, y_2, ..., y_n)\), where \(y_i\) is the \(i\)-th token in the sequence.

Then, we use an embedding lookup table (i.e., an embedding matrix) to get the embedding vector \(v_i\) of each token \(y_i\) in the sequence. \(v = T(y) = (v_1, v_2, ..., v_n)\).

Finally, we use a contextualized text encoder (e.g., the CLIP text encoder) to get the text embedding \(\tau(T(y))\) of the prompt \(y\), denoted by \(c_\theta (y)\) in the figure above.
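A minimal pure-Python sketch of the pipeline \(y \to (y_1, \dots, y_n) \to T(y) \to \tau(T(y))\). Everything here is a hypothetical toy: a whitespace tokenizer, a four-word vocabulary, 3-dimensional embeddings, and a mean-mixing function standing in for the CLIP text encoder (the real tokenizer is BPE-based and the real encoder is a Transformer).

```python
# Toy sketch of y -> token ids -> T(y) -> tau(T(y)).
# Hypothetical: whitespace tokenizer, tiny vocabulary, 3-dim embeddings,
# and a mean-mixing "encoder" standing in for the CLIP text encoder.

VOCAB = {"a": 0, "photo": 1, "of": 2, "sks": 3}

# Embedding lookup table (embedding matrix): one row per token id.
EMBEDDING = [
    [0.1, 0.0, 0.2],
    [0.5, 0.3, 0.0],
    [0.0, 0.1, 0.1],
    [0.9, -0.2, 0.4],  # row for the placeholder token "sks"
]


def tokenize(prompt):
    """Split a prompt into token ids (a real tokenizer uses BPE)."""
    return [VOCAB[word] for word in prompt.lower().split()]


def lookup(token_ids):
    """T(y): replace each token id by a copy of its embedding row."""
    return [list(EMBEDDING[i]) for i in token_ids]


def toy_encoder(vectors):
    """Stand-in for tau: mixes each vector with the sequence mean, so every
    output vector depends on the whole prompt (i.e., it is contextualized)."""
    n = len(vectors)
    mean = [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]
    return [[0.5 * x + 0.5 * m for x, m in zip(v, mean)] for v in vectors]


ids = tokenize("a photo of sks")
c = toy_encoder(lookup(ids))  # one contextualized vector per token
```

Only the lookup step \(T\) touches the embedding matrix; the encoder \(\tau\) is a separate function, which is what makes it possible to freeze one and train the other.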

In textual inversion, we freeze the contextualized text encoder \(\tau\) and learn only the embedding lookup table; more precisely, we update only the row of the embedding matrix that corresponds to a specific placeholder token (e.g., \(sks\)).
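A hypothetical pure-Python sketch of "update only the placeholder row": the gradient may reach every row of the lookup table, but the step is applied to a single row. The function name `masked_update` and the toy numbers are illustrative only. In PyTorch code the same effect is often achieved differently; as far as we know, the diffusers textual inversion example script restores the original rows of all non-placeholder tokens after each optimizer step.

```python
# Hypothetical sketch: one textual-inversion update step on the lookup table.
# Only the placeholder row is trainable; every other row is kept frozen.


def masked_update(embedding, grad, placeholder_id, lr=0.1):
    """Return a copy of `embedding` in which only row `placeholder_id`
    takes a gradient-descent step; all other rows are copied unchanged."""
    new_embedding = [list(row) for row in embedding]
    new_embedding[placeholder_id] = [
        w - lr * g for w, g in zip(embedding[placeholder_id], grad[placeholder_id])
    ]
    return new_embedding


embedding = [[0.1, 0.0], [0.5, 0.3], [0.9, -0.2]]  # row 2 = placeholder "sks"
grad = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]        # gradient reaches every row...
updated = masked_update(embedding, grad, placeholder_id=2)
# ...but only row 2 changes: updated[2] is approximately [0.8, -0.3]
```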

Textual Inversion

High-level idea: We will learn an embedding vector \(v^*\) that can represent the visual concept of a given set of images \(X\).

The objective function:

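\[v^* = \underset{v}{\text{argmin}} \; \mathbb{E}_{x \sim X, z \sim \mathcal{N}, c \sim C} \; \mathcal{L}(G(z, \tau( T(c)+v)), x)\]

where \(G\) is the (frozen) generator, \(z\) is the latent code, \(c\) is a prompt sampled from a set of templates \(C\) (e.g., "a photo of sks"), \(x\) is an input image, and \(v\) is the embedding vector of the placeholder token. \(T(c)+v\) denotes the token embeddings of \(c\) with the placeholder token's embedding set to \(v\).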
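To make the objective concrete, here is a toy sketch in which every component is a hypothetical stand-in: \(T(c)\) is a fixed 2-dim vector per template and \(T(c)+v\) is read literally as vector addition, the frozen encoder is \(\tau(u) = 2u\), the frozen generator is \(G(z, h) = h + 0.1z\), and \(\mathcal{L}\) is squared error. For this toy the minimizer has the closed form \(v^* = x/2 - \text{mean}_c\, T(c) = (0.4, 0.2)\), and gradient descent on \(v\) alone recovers it approximately.

```python
import random

# Hypothetical toy setup (not the real Stable Diffusion pipeline):
#   T(c)     -> a fixed 2-dim vector per prompt template
#   tau(u)   -> 2 * u                (frozen "text encoder")
#   G(z, h)  -> h + 0.1 * z          (frozen "generator")
#   L(a, b)  -> squared error ||a - b||^2
TEMPLATES = {
    "a photo of {}": [0.2, -0.1],
    "a rendering of {}": [0.0, 0.3],
}
X = [1.0, 0.6]  # the target "image", flattened to 2 numbers


def tau(u):
    return [2.0 * ui for ui in u]


def generator(z, h):
    return [hi + 0.1 * zi for hi, zi in zip(h, z)]


def textual_inversion(steps=500, lr=0.01, seed=0):
    rng = random.Random(seed)
    v = [0.0, 0.0]  # the only trainable parameters
    for _ in range(steps):
        grad = [0.0, 0.0]
        for t_c in TEMPLATES.values():  # expectation over c ~ C
            z = [rng.gauss(0.0, 1.0) for _ in v]  # z ~ N(0, I)
            out = generator(z, tau([a + b for a, b in zip(t_c, v)]))
            # d/dv ||out - x||^2 = 2 * (out - x) * d(out)/dv, with d(out)/dv = 2
            for d in range(len(v)):
                grad[d] += 4.0 * (out[d] - X[d]) / len(TEMPLATES)
        v = [vi - lr * gi for vi, gi in zip(v, grad)]  # descent step on v only
    return v


v_star = textual_inversion()  # close to [0.4, 0.2]
```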

Version 2.1

Generate perturbed images by maximizing the reconstruction loss.

\[\delta^* = \underset{\delta}{\text{argmax}} \; \mathbb{E}_{z \sim \mathcal{N}, c \sim C_d} \; \mathcal{L}(G(z, \tau( T(c) + v_d)), (x+\delta))\]

where \(G\) is the generator, \(z\) is the latent code, \(c\) is a prompt sampled from the defender's set of templates \(C_d\), \(v_d\) is the embedding of the defender's placeholder token, \(x\) is the input image, and \(\delta\) is the perturbation. \(C_d\) is a list of neutral prompts, e.g., "a photo of sks", where sks is the placeholder token.
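A toy sketch of the defender, reusing hypothetical stand-ins (\(\tau(u) = 2u\), \(G(z, h) = h + 0.1z\), squared-error \(\mathcal{L}\), 2-dim "images"). The objective above leaves \(\delta\) unconstrained, but a squared error grows without bound as \(\delta\) grows, so this sketch assumes an \(L_\infty\) budget \(\varepsilon = 8/255\) and uses projected sign-gradient ascent (PGD). The budget, step size, and method are common choices in adversarial-perturbation work, not something these notes specify.

```python
import random

# Hypothetical toy stand-ins for the frozen diffusion pipeline.
TEMPLATES_D = [[0.2, -0.1], [0.0, 0.3]]  # T(c) for the defender's templates C_d
V_D = [0.0, 0.0]                         # embedding of the defender's token
X = [1.0, 0.6]                           # clean image (flattened toy)
EPS = 8 / 255                            # ASSUMED L-inf budget, not from the notes
ALPHA = 2 / 255                          # ASSUMED step size


def generator(z, t_c, v):
    # G(z, tau(T(c) + v)) with tau(u) = 2u and G(z, h) = h + 0.1 z
    return [2.0 * (a + b) + 0.1 * zi for a, b, zi in zip(t_c, v, z)]


def sign(g):
    return (g > 0) - (g < 0)


def defend(steps=20, seed=0):
    rng = random.Random(seed)
    delta = [0.0] * len(X)
    for _ in range(steps):
        grad = [0.0] * len(X)
        for t_c in TEMPLATES_D:  # expectation over c ~ C_d
            z = [rng.gauss(0.0, 1.0) for _ in X]
            out = generator(z, t_c, V_D)
            # L = ||out - (x + delta)||^2  =>  dL/d delta = -2 (out - x - delta)
            for d in range(len(X)):
                grad[d] += -2.0 * (out[d] - X[d] - delta[d]) / len(TEMPLATES_D)
        # Gradient ASCENT step, then projection back onto the L-inf ball.
        delta = [max(-EPS, min(EPS, dl + ALPHA * sign(g))) for dl, g in zip(delta, grad)]
    return delta


delta_star = defend()
perturbed = [xi + di for xi, di in zip(X, delta_star)]
```

Because the toy generator's outputs lie below \(x\) in both coordinates, the maximizer pushes every coordinate of \(\delta\) to \(+\varepsilon\); with a real model the sign pattern is image-dependent.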

In this particular setting:

- The defender uses the same prompts as the attacker (i.e., the same placeholder token and templates, \(C_a = C_d\), where \(C_a\) denotes the attacker's templates), which is a rather unrealistic assumption.

Results: the attacker cannot use the perturbed images to personalize the model; the generated images are not meaningful.

Version 2.2

Generate perturbed images by maximizing the same reconstruction loss as in Version 2.1:

\[\delta^* = \underset{\delta}{\text{argmax}} \; \mathbb{E}_{z \sim \mathcal{N}, c \sim C_d} \; \mathcal{L}(G(z, \tau( T(c) + v_d)), (x+\delta))\]

with the same notation as in Version 2.1.

In this setting:

- The defender uses different prompts from the attacker (i.e., a different placeholder token and different templates, \(C_a \neq C_d\)). For example, defender_token=person while placeholder_token=advmersh_diff.

Results: the attacker cannot use the perturbed images to personalize the model; the generated images are not meaningful.

Next step:

- Develop a better attack method that can still use the perturbed images to personalize the model, e.g., by trying different input transformations. One existing tool, Adverse Cleaner, uses computer vision filtering to remove adversarial perturbations. The Anti-Dreambooth paper already tested this tool (as well as Gaussian filtering and JPEG compression), and their method showed some robustness to these filters.
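Of the transformations listed above, Gaussian filtering is the simplest to sketch. Below is a minimal pure-Python version for a grayscale image stored as a list of rows; the default \(\sigma = 1\), the \(3\sigma\) kernel radius, and border clamping are assumptions, and this is not Adverse Cleaner itself. As a low-pass filter it attenuates high-frequency perturbations, but it also blurs genuine image detail.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Normalized 1-D Gaussian weights; radius defaults to ceil(3 * sigma)."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(img, kernel):
    """Convolve every row with `kernel`, clamping indices at the borders."""
    r = len(kernel) // 2
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for k, wk in enumerate(kernel):
                xx = min(max(x + k - r, 0), w - 1)  # clamp at the border
                acc += wk * img[y][xx]
            row.append(acc)
        out.append(row)
    return out


def transpose(img):
    return [list(col) for col in zip(*img)]


def gaussian_blur(img, sigma=1.0):
    """Separable 2-D Gaussian blur: filter rows, then columns."""
    k = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(img, k)), k))
```

For example, a one-pixel checkerboard (the highest-frequency pattern) is flattened toward its mean of 0.5, while a constant image is left unchanged.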

Version 2.3

Three parties: defender, attacker, and adversarial cleaner.

- Defender: generate perturbed images by maximizing the reconstruction loss.

- Attacker: use the perturbed images to personalize the model.

- Adversarial cleaner: clean the perturbed images before the attacker can use them to personalize the model.

In this version:

- Develop an adversarial cleaner to clean the perturbed images. The cleaner tries to remove the adversarial perturbation from the input image by applying another perturbation, whose loss minimizes the distance between the input image (the perturbed image) and the images generated from a prompt.

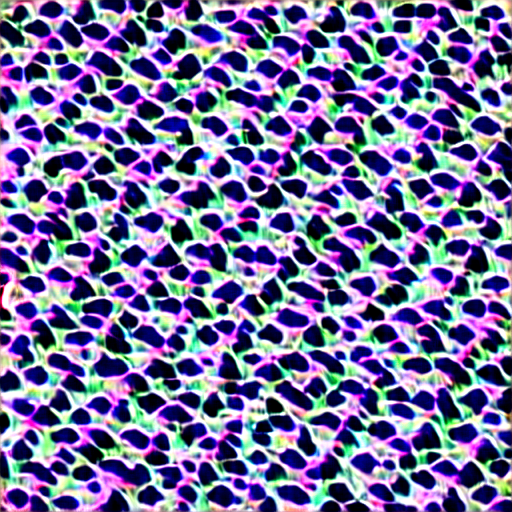

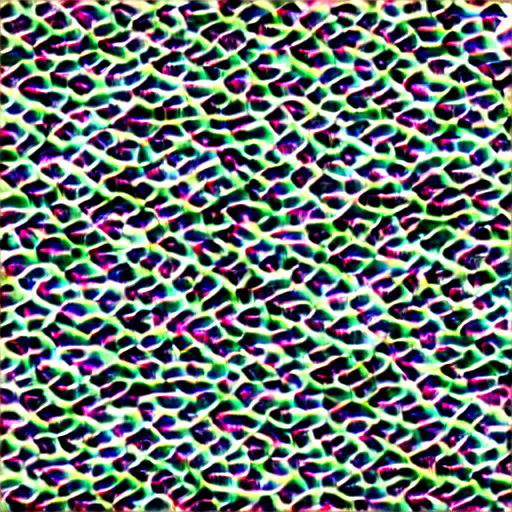
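The cleaner can be read as the mirror image of the Version 2.1 objective: a second perturbation \(\eta\) that minimizes, rather than maximizes, the loss, i.e. \(\eta^* = \text{argmin}_\eta \, \mathbb{E}_{z, c \sim C_c} \, \mathcal{L}(G(z, \tau(T(c) + v_c)), x + \delta + \eta)\), where \(C_c\) and \(v_c\) are hypothetical names for the cleaner's templates and token embedding. The toy sketch below uses the same stand-ins as a pure-Python illustration (\(\tau(u) = 2u\), \(G(z, h) = h + 0.1z\), squared error) and assumes an \(L_\infty\) budget and PGD, neither of which the notes specify.

```python
import random

# Hypothetical toy stand-ins, mirroring the defender sketch.
TEMPLATES_CLEAN = [[0.1, 0.1], [0.3, -0.1]]  # T(c) for the cleaner's templates
V_CLEAN = [0.0, 0.0]                         # embedding of the cleaner's token
PERTURBED = [1.0 + 8 / 255, 0.6 + 8 / 255]   # x + delta received by the cleaner
EPS = 8 / 255                                # ASSUMED budget for eta
ALPHA = 2 / 255                              # ASSUMED step size


def generator(z, t_c, v):
    # G(z, tau(T(c) + v)) with tau(u) = 2u and G(z, h) = h + 0.1 z
    return [2.0 * (a + b) + 0.1 * zi for a, b, zi in zip(t_c, v, z)]


def sign(g):
    return (g > 0) - (g < 0)


def clean(steps=20, seed=0):
    rng = random.Random(seed)
    eta = [0.0] * len(PERTURBED)
    for _ in range(steps):
        grad = [0.0] * len(PERTURBED)
        for t_c in TEMPLATES_CLEAN:
            z = [rng.gauss(0.0, 1.0) for _ in PERTURBED]
            out = generator(z, t_c, V_CLEAN)
            # L = ||out - (x_adv + eta)||^2  =>  dL/d eta = -2 (out - x_adv - eta)
            for d in range(len(PERTURBED)):
                grad[d] += -2.0 * (out[d] - PERTURBED[d] - eta[d]) / len(TEMPLATES_CLEAN)
        # Gradient DESCENT step (the cleaner minimizes), then projection.
        eta = [max(-EPS, min(EPS, e - ALPHA * sign(g))) for e, g in zip(eta, grad)]
    return eta


eta_star = clean()
cleaned = [p + e for p, e in zip(PERTURBED, eta_star)]
```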

Results: there are two versions of the adversarial cleaner. In advmersh_clean, the prompt templates of the defender and the cleaner are the same and only the placeholder token differs (person and human). In advmersh_clean2, both the prompt templates and the placeholder token differ. In the first version, the attacker still cannot use the cleaned perturbed images to personalize the model; however, the original person is still recognizable in one generated sample. In the second version, the attacker cannot generate any meaningful images at all.

advmerch_clean

advmerch_clean2

Conclusion

- A simple perturbation method can work well.

- Surprisingly, even when the defender and the attacker use different placeholder tokens (Version 2.2), the attacker cannot use the perturbed images to personalize the model.

- The adversarial cleaner can partially reduce the adversarial effect, but it is not strong enough.

Next step

- Develop a stronger adversarial cleaner that can clean the perturbed images completely.

- Generate perturbed images by maximizing the distance between the embeddings of the perturbed images and the text embeddings of the prompts.
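One possible reading of the embedding-distance idea, sketched under loud assumptions: a hypothetical frozen linear "image encoder" \(W\) replaces the CLIP image encoder, a fixed vector replaces the CLIP text embedding of a prompt, cosine distance \(1 - \cos\) is used as the embedding distance (the notes do not name one), and the perturbation is found by PGD under an assumed \(L_\infty\) budget. The gradient of the cosine similarity is computed analytically and pushed through \(W\) by the chain rule.

```python
import math

# Hypothetical toy: a frozen linear "image encoder" W and a fixed text embedding.
W = [[1.0, 0.5], [0.0, 1.0]]
TEXT_EMB = [1.0, 0.0]  # stand-in for the CLIP text embedding of a prompt
X = [0.8, 0.3]         # clean image (flattened toy)
EPS = 8 / 255          # ASSUMED L-inf budget
ALPHA = 2 / 255        # ASSUMED step size


def encode(img):
    return [sum(w * p for w, p in zip(row, img)) for row in W]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cosine_grad_wrt_img(img, t):
    """Analytic gradient of cos(W img, t) with respect to img."""
    a = encode(img)
    na = math.sqrt(sum(x * x for x in a))
    nt = math.sqrt(sum(y * y for y in t))
    dot = sum(x * y for x, y in zip(a, t))
    # d cos / d a = t / (|a||t|) - (a.t) a / (|a|^3 |t|)
    g_a = [ti / (na * nt) - dot * ai / (na ** 3 * nt) for ai, ti in zip(a, t)]
    # Chain rule through a = W img: d cos / d img = W^T g_a
    return [sum(W[i][j] * g_a[i] for i in range(len(W))) for j in range(len(img))]


def embedding_attack(steps=10):
    delta = [0.0] * len(X)
    for _ in range(steps):
        g = cosine_grad_wrt_img([x + d for x, d in zip(X, delta)], TEXT_EMB)
        # Maximizing the distance 1 - cos means stepping against grad(cos).
        delta = [max(-EPS, min(EPS, d - ALPHA * ((gi > 0) - (gi < 0)))) for d, gi in zip(delta, g)]
    return delta


delta_emb = embedding_attack()
before = cosine(encode(X), TEXT_EMB)
after = cosine(encode([x + d for x, d in zip(X, delta_emb)]), TEXT_EMB)  # lower than before
```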

Version 3

Questions

- How can we completely remove the adversarial perturbation from the input image, so that the attacker can still use these images to personalize the model?

References

- https://huggingface.co/spaces/multimodalart/latentdiffusion/blame/5ea000aef2990e60c6cf2ebed428268cff8ae465/app.py

- Code that gets text embeddings and image embeddings from CLIP-based models and calculates SSIM to detect NSFW data