Foundations of Natural Language Processing

Word Meaning and Word Sense Disambiguation

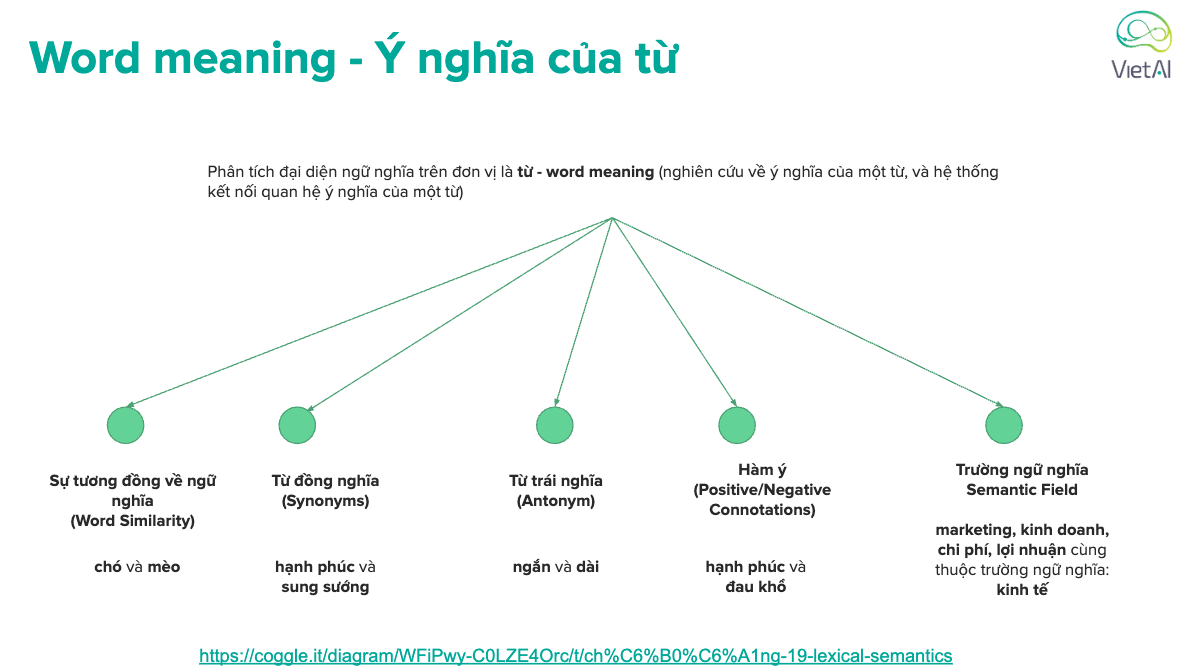

- Word meaning: The meaning of a word is the concept it refers to. For example, the word “dog” refers to the concept of a four-legged animal that barks.

- Word sense: The sense of a word is the particular meaning it has in a specific context. For example, the word “bank” can refer to a financial institution or the side of a river.

- Word sense disambiguation: The task of determining which sense of a word is used in a particular context.

- Lexical semantics: The study of word meaning. Word senses and their disambiguation are among its core topics.
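To make the disambiguation task concrete, here is a minimal sketch of a simplified Lesk-style heuristic: pick the sense whose dictionary gloss shares the most content words with the sentence. The sense inventory, the glosses, and the stopword list are hypothetical and written only for this illustration; a real system would draw glosses from a resource such as WordNet.

```python
# Toy sense inventory: the glosses are hypothetical, written for this sketch.
SENSES = {
    "bank": {
        "financial_institution": "an institution that accepts deposits and lends money",
        "river_side": "the sloping land beside a river or stream of water",
    }
}

# Small hand-picked stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "and", "at", "the", "of", "or", "that", "to", "in", "on", "i", "we"}


def content_words(text):
    """Lowercase, split on whitespace, strip punctuation, drop stopwords."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def simplified_lesk(word, sentence):
    """Return the sense whose gloss shares the most content words with the sentence."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I went to the bank to deposit money"))  # financial_institution
print(simplified_lesk("bank", "We sat on the bank of the river"))      # river_side
```

Word overlap is a weak signal (here "deposit" does not even match "deposits"), which is one reason embedding-based methods are usually preferred.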

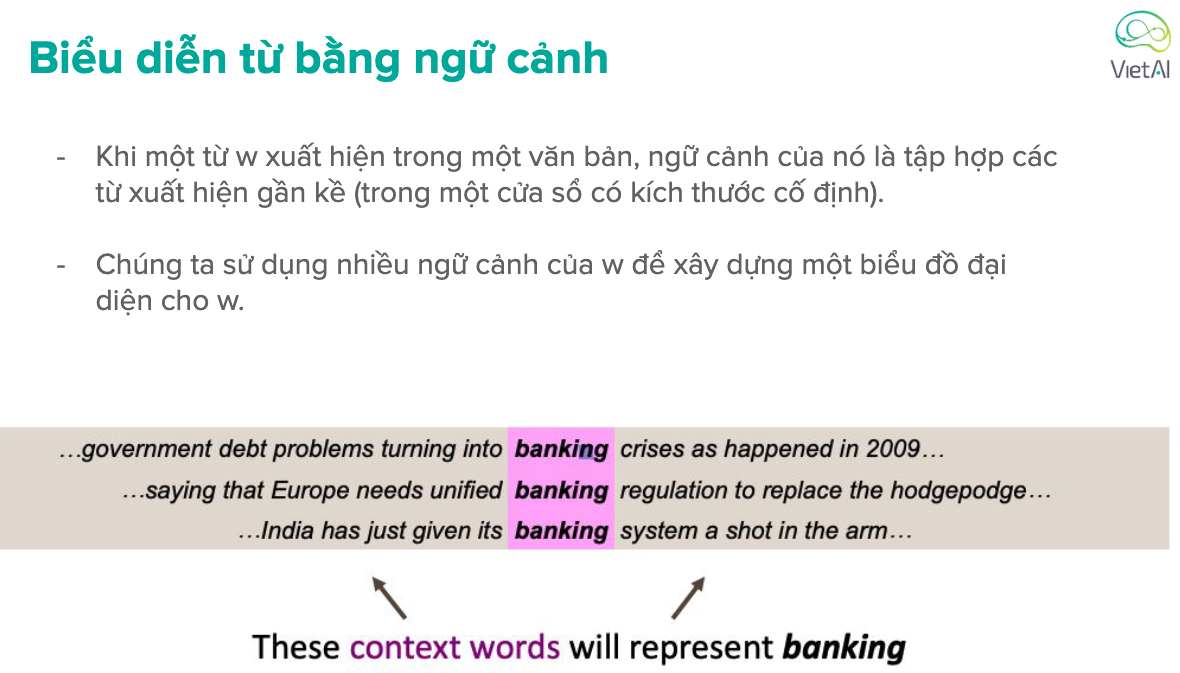

- Distributional semantics: The study of word meaning based on the distributional properties of words in text.

- Word embeddings: Dense vector representations of words that capture their meaning based on the distributional properties of words in text.

- Word similarity: How close two words are in meaning, often measured as the cosine similarity between their word embeddings.

- Word analogy: A relation that holds between two pairs of words, such as “man” is to “woman” as “king” is to “queen”, often captured as a vector offset between word embeddings.

- Word sense induction: The task of automatically identifying the different senses of a word in a corpus of text.

Distributional semantics is based on the idea that words that occur in similar contexts tend to have similar meanings.
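The distributional idea can be sketched with simple co-occurrence counts: represent each word by the words that appear near it, then compare words by cosine similarity. The toy corpus, the window size, and the function names below are assumptions made for this illustration. Real embeddings are dense and learned from far larger corpora.

```python
import math
from collections import Counter, defaultdict

# Toy corpus invented for this sketch.
CORPUS = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the cat",
    "the car needs fuel",
]


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


vectors = cooccurrence_vectors(CORPUS)
print("cat vs dog:", cosine(vectors["cat"], vectors["dog"]))
print("cat vs car:", cosine(vectors["cat"], vectors["car"]))
```

On this corpus "cat" comes out closer to "dog" than to "car", because "cat" and "dog" share contexts such as "drinks" and "chases".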

Tokenization and Word Embeddings

- Tokenization: The process of splitting a text into tokens, which may be words, subword units, or characters.

Common subword tokenization methods:

- Byte pair encoding (BPE): A tokenization algorithm that starts from individual characters and iteratively merges the most frequent pair of adjacent symbols in a corpus of text to create a vocabulary of subword units.

- WordPiece: A tokenization algorithm similar to BPE. Instead of merging the most frequent pair, it merges the pair of subword units that most increases the likelihood of the training corpus under a language model.
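The BPE merge loop can be sketched in a few lines. This is a minimal illustration, assuming the corpus has already been reduced to a word-frequency dictionary; the function name `learn_bpe` and the toy frequencies are made up for this example, and ties between equally frequent pairs are broken by first-seen order.

```python
from collections import Counter


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` BPE merge rules from a {word: frequency} dict."""
    # Represent each word as a tuple of symbols, starting from single characters.
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Rewrite every word with the chosen pair fused into one symbol.
        new_vocab = {}
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_vocab[key] = new_vocab.get(key, 0) + freq
        vocab = new_vocab
    return merges, vocab


word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=3)
print(merges)
print(vocab)
```

On this toy input the frequent suffix "est" is assembled first, from "e" + "s" and then "es" + "t", which shows how BPE discovers reusable subword units.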

What is NLTK?

- Natural Language Toolkit (NLTK): A Python library for building programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning.

BERT

Tokenization in BERT

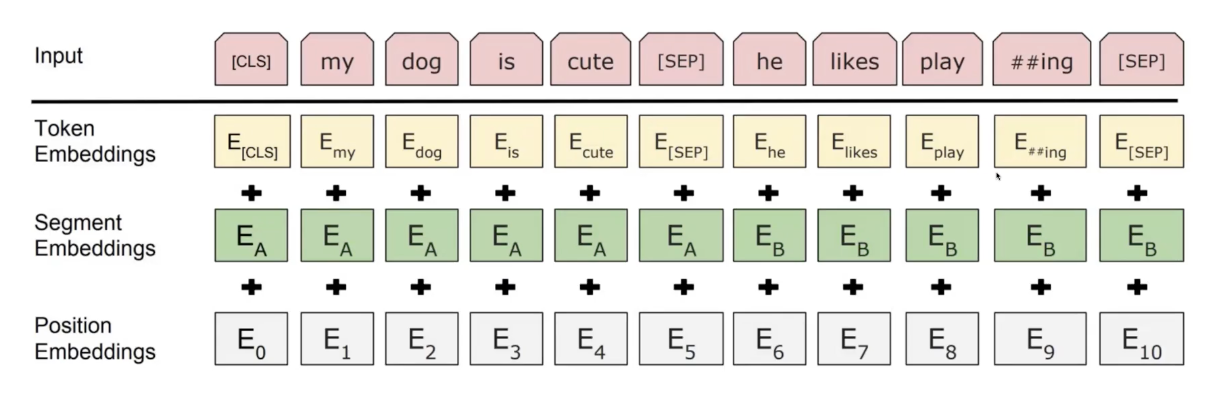

- WordPiece: BERT tokenizes its input with WordPiece, the subword algorithm described above. At tokenization time, each word is split greedily into the longest subword units found in the vocabulary.

- Example: The word “university” might be split into the subword units “un”, “##iver”, and “##sity”. The “##” prefix indicates that a subword unit continues a word rather than starting one. This allows the model to represent out-of-vocabulary words by combining subword units that it has seen during training. It also keeps the vocabulary small and reduces the number of out-of-vocabulary words.
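The split in the example can be reproduced with a greedy longest-match-first procedure, which is how WordPiece vocabularies are commonly applied at tokenization time. The sketch below handles a single word; the toy vocabulary is hypothetical and chosen so that “university” splits as in the example.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first split of one word into WordPiece units."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation of a word
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No prefix of the remainder is in the vocabulary.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary for this sketch.
TOY_VOCAB = {"un", "##iver", "##sity", "play", "##ing"}

print(wordpiece_tokenize("university", TOY_VOCAB))  # ['un', '##iver', '##sity']
print(wordpiece_tokenize("playing", TOY_VOCAB))     # ['play', '##ing']
print(wordpiece_tokenize("xyz", TOY_VOCAB))         # ['[UNK]']
```

A word maps to [UNK] only when no sequence of vocabulary units covers it, which is rare when the vocabulary contains the individual characters.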

Special tokens in BERT:

- [CLS]: A special token that is added to the beginning of each input sequence in BERT. Its final hidden state is used as a representation of the entire input sequence for classification tasks.

- [SEP]: A special token that is added at the end of each sentence in BERT. It separates the two sentences of a pair and marks the end of the input.

- [MASK]: A special token that is used to mask a word in the input sequence during the pretraining of BERT.

- [UNK]: A special token that is used in BERT to represent a word that cannot be split into subword units from the vocabulary.

- [PAD]: A special token that is used to pad input sequences to the same length in BERT.
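The following sketch shows how [CLS], [SEP], and [PAD] fit together when one or two already-tokenized sentences are packed into a fixed-length input. The function name `pack_inputs` and the `max_len` value are assumptions for this illustration. The attention mask (1 for real tokens, 0 for padding) is the usual way to tell the model to ignore [PAD] positions.

```python
def pack_inputs(tokens_a, tokens_b=None, max_len=12):
    """Add [CLS]/[SEP] around the sentence(s), then pad with [PAD] up to max_len."""
    tokens = ["[CLS]"] + list(tokens_a) + ["[SEP]"]
    if tokens_b is not None:
        tokens += list(tokens_b) + ["[SEP]"]
    if len(tokens) > max_len:
        raise ValueError("sequence longer than max_len; truncate the inputs first")
    attention_mask = [1] * len(tokens)
    padding = max_len - len(tokens)
    tokens += ["[PAD]"] * padding
    attention_mask += [0] * padding
    return tokens, attention_mask


tokens, mask = pack_inputs(["the", "dog", "barks"], ["it", "is", "loud"])
print(tokens)
# ['[CLS]', 'the', 'dog', 'barks', '[SEP]', 'it', 'is', 'loud', '[SEP]', '[PAD]', '[PAD]', '[PAD]']
print(mask)
# [1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
```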

Learnable position embeddings: In BERT, the position embeddings are learned during the training process: each absolute position in the input sequence, up to a maximum length of 512, gets its own trainable vector. This is in contrast to the fixed sinusoidal position encodings of the original Transformer, which do not change during training.

Segment embeddings: In BERT, each token is associated with a segment embedding that indicates whether it belongs to the first sentence or the second sentence in a pair of sentences. This allows the model to distinguish between the two sentences in the input sequence. There are only two segment IDs, 0 and 1, each with its own learned embedding vector.
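BERT's input representation for a token is the sum of its token, segment, and position embeddings. The sketch below illustrates that sum with small random tables standing in for learned parameters. The sizes, the toy vocabulary, and the helper names are assumptions for this example, and the layer normalization and dropout that follow in the real model are omitted.

```python
import random

random.seed(0)
HIDDEN = 4          # toy hidden size (BERT-base uses 768)
MAX_POSITIONS = 16  # toy limit (BERT uses 512)
VOCAB = ["[PAD]", "[CLS]", "[SEP]", "the", "dog", "barks", "it", "is", "loud"]


def random_table(rows, cols):
    """Stand-in for a learned embedding table: one random vector per row."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


token_table = random_table(len(VOCAB), HIDDEN)
segment_table = random_table(2, HIDDEN)            # one vector per segment ID
position_table = random_table(MAX_POSITIONS, HIDDEN)


def segment_ids(tokens):
    """0 up to and including the first [SEP], 1 afterwards (unpadded input)."""
    ids, current = [], 0
    for tok in tokens:
        ids.append(current)
        if tok == "[SEP]":
            current = 1
    return ids


def embed(tokens):
    """Sum token, segment, and position embeddings for each input position."""
    out = []
    for pos, (tok, seg) in enumerate(zip(tokens, segment_ids(tokens))):
        tok_vec = token_table[VOCAB.index(tok)]
        seg_vec = segment_table[seg]
        pos_vec = position_table[pos]
        out.append([tok_vec[d] + seg_vec[d] + pos_vec[d] for d in range(HIDDEN)])
    return out


tokens = ["[CLS]", "the", "dog", "barks", "[SEP]", "it", "is", "loud", "[SEP]"]
print(segment_ids(tokens))  # [0, 0, 0, 0, 0, 1, 1, 1, 1]
print(len(embed(tokens)), "vectors of size", HIDDEN)
```

Because the position vector differs at every index, the same word receives a different input vector at different positions.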

Masked Prediction in BERT

- Masked Language Model (MLM): A pretraining objective for BERT that involves predicting masked words in the input sequence. About 15% of the tokens are selected for prediction. Of these, 80% are replaced with the [MASK] token, 10% are replaced with a random token, and 10% are left unchanged. The model is trained to predict the original token at each selected position.

- Next Sentence Prediction (NSP): A pretraining objective for BERT that involves predicting whether two sentences are consecutive in the original text. The sentences are concatenated together with the [SEP] token, and the model is trained to predict whether the second sentence actually follows the first. In the training data, half of the pairs are consecutive, and in the other half the second sentence is chosen at random.
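As an illustration of the MLM objective, here is a minimal sketch of how masked training inputs can be built. It follows the corruption scheme from the BERT paper (select about 15% of tokens; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged), but it selects each token independently, which is a simplification. The function name `mask_tokens` and the toy vocabulary are assumptions.

```python
import random

SPECIAL_TOKENS = {"[CLS]", "[SEP]", "[PAD]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (corrupted tokens, labels); labels are None where nothing is predicted."""
    rng = rng if rng is not None else random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Never select special tokens; select other tokens with probability mask_prob.
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"           # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


toy_vocab = ["the", "dog", "barks", "cat", "sleeps"]  # hypothetical vocabulary
tokens = ["[CLS]", "the", "dog", "barks", "[SEP]"]
corrupted, labels = mask_tokens(tokens, toy_vocab, rng=random.Random(0))
print(corrupted)
print(labels)
```

The loss is computed only at positions where the label is not None. Keeping some selected tokens unchanged or random means the model cannot rely on seeing [MASK] at every position it has to predict.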

Pre-training BERT

- Masked Language Model (MLM) and Next Sentence Prediction (NSP): BERT is pre-trained on both objectives, described above, at the same time. The training loss is the sum of the MLM loss and the NSP loss.
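Training pairs for NSP can be generated directly from raw documents. The sketch below assumes a corpus given as a list of documents, each a list of sentences, and draws negative examples from a different document. The function name `make_nsp_examples` and the toy documents are hypothetical.

```python
import random


def make_nsp_examples(documents, rng=None):
    """Build (sentence_a, sentence_b, is_next) triples from a list of documents.

    Each document is a list of sentences. At least two documents are required,
    because negative examples are drawn from a different document.
    """
    rng = rng if rng is not None else random.Random()
    examples = []
    for doc_idx, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            if rng.random() < 0.5:
                # Positive example: the sentence that really follows.
                examples.append((doc[i], doc[i + 1], True))
            else:
                # Negative example: a random sentence from another document.
                others = [j for j in range(len(documents)) if j != doc_idx]
                other_doc = documents[rng.choice(others)]
                examples.append((doc[i], rng.choice(other_doc), False))
    return examples


# Hypothetical toy documents for this sketch.
documents = [
    ["The dog barks.", "It is loud.", "The neighbors complain."],
    ["Banks lend money.", "Interest rates vary."],
]
for a, b, is_next in make_nsp_examples(documents, rng=random.Random(0)):
    print(f"[CLS] {a} [SEP] {b} [SEP] -> {'IsNext' if is_next else 'NotNext'}")
```

Each pair would then be tokenized and packed as a single input, with the classifier for this objective reading the final hidden state of [CLS].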