AI Interview Helper

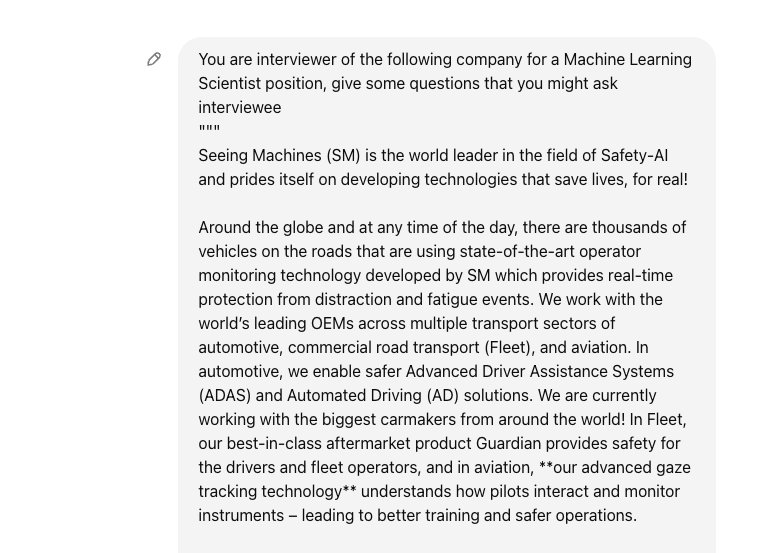

I was looking for a new position and had some interviews lined up. I usually paste the job description into ChatGPT and ask for some questions that might be asked about the role (:D yes, sounds dumb but it’s quite helpful). For example, ChatGPT suggested a set of questions for a Machine Learning Scientist role, several of which were quite relevant and helpful.

But there are some drawbacks to using the ChatGPT web interface:

- It’s not easy to keep track of my answers to questions I have asked before. For example, I have answered some questions for previous interviews, and I want to reuse those answers for the next ones.

- It’s not easy to paste/input my CV or past experiences to get more relevant answers/suggestions.

- It’s not a code editor

Actually, the main motivation for this project is to learn how to use the OpenAI API and maybe build a simple web application to support my needs. Below is a brief description of the project:

- Main features: Given a personal CV (PDF file) and a job description (text), the application suggests questions that might be asked about the role and how to answer them based on your experience.

- A Personal Wiki:

  - Meta data: Users can add meta data such as past projects, blog posts, announcements, working notes, even meeting notes, etc. to the wiki. The ultimate goal is to build a personal knowledge base, a first step towards building not only an interview helper like this project but also a personal agent.

- Database operations: These cannot be done with the ChatGPT web interface and are an important part of building a personal wiki.

  - Import/export data: Users can import/export data from/to the wiki. Data can be personal data or data from the companies that the user has applied to.

  - Search: Users can search for data in the wiki.

  - Edit suggestions and save for future use: Users can edit the suggestions from ChatGPT and save them for future use. This is also useful for personalization.

- Data privacy:

  - Data masking: The application is inspired by Salesforce’s Einstein Trust Layer, which acts as an intermediary between the user and the AI model. The user’s data is masked/encrypted before being sent to the AI model, so the model only sees the masked data. The model returns masked data, and the application de-masks it before showing it to the user. This way, the user’s data is protected from the AI model. Sounds fancy, right? :D But it can be as simple as using a dictionary to map the user’s data to predefined strings and vice versa.

- Others:

  - Choose source of LLMs: Users can choose the source of LLMs (e.g., ChatGPT, Claude or MistralAI) to generate the questions.
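The dictionary-based data masking described in the feature list can be sketched roughly as follows. This is only a minimal illustration, not the Einstein Trust Layer: the `MASKS` mapping, the placeholder format, and the `mask`/`unmask` helpers are all hypothetical names, and the model reply is faked so that no API call is needed.

```python
# Hypothetical mapping from sensitive strings to placeholder tokens.
# A real application would build this from the user's CV and notes.
MASKS = {
    "Jane Doe": "[PERSON_1]",
    "Acme Corp": "[COMPANY_1]",
    "jane.doe@example.com": "[EMAIL_1]",
}


def mask(text: str, masks: dict[str, str]) -> str:
    """Replace every sensitive string with its placeholder."""
    # Longest strings first, so a short entry never clobbers part of a longer one.
    for original in sorted(masks, key=len, reverse=True):
        text = text.replace(original, masks[original])
    return text


def unmask(text: str, masks: dict[str, str]) -> str:
    """Restore the original strings in text that came back from the model."""
    for original, placeholder in masks.items():
        text = text.replace(placeholder, original)
    return text


cv_snippet = "Jane Doe (jane.doe@example.com) led the ML team at Acme Corp."

# This masked string is what would be sent to the LLM.
masked = mask(cv_snippet, MASKS)

# Stand-in for the model's answer, which only ever contains placeholders.
fake_reply = "Ask [PERSON_1] about leading a team at [COMPANY_1]."
restored = unmask(fake_reply, MASKS)

print(masked)
print(restored)
```

Plain string replacement is case-sensitive and will miss variants such as "J. Doe", so a real version would probably need fuzzier matching; the sketch only shows the round trip.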

Similar Tools

It’s definitely not a new idea, especially since the core technology is the OpenAI API, which anyone can access. Here are some similar tools that I found:

FinalRoundAI: https://www.finalroundai.com/

- Interview Copilot: Real-time AI suggestions

- Specialization: Domain-specific knowledge to provide more relevant suggestions, e.g., Frontend, Backend, Data Science, etc.

- AI Resume Builder, AI resume revision and cover letter generation.

- AI Mock Interview

- Questions Bank

- Unlimited 5-minute free trial sessions

Aptico: https://www.aptico.xyz/ (found on Reddit :D)

InterviewPro: https://interviewpro.ai/

- Provides sample answers for some common behavioral questions: https://interviewpro.ai/blog

RightJoin: https://rightjoin.co/

An interesting opposite direction :D Instead of helping interviewees, it helps interviewers.

Modules

- Read and extract text from a PDF file.

- Refine the extracted text
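A rough sketch of these two modules is shown below. It assumes the third-party `pypdf` package for reading the PDF (the post does not name a library, so that choice is an assumption), and the cleanup rules in `refine_text` are illustrative guesses at what "refine" could mean: re-joining hyphenated words and tidying whitespace.

```python
import re


def extract_pdf_text(path: str) -> str:
    """Return the text of every page in the PDF, joined by newlines.

    Assumes `pypdf` is installed; it is imported lazily so that
    `refine_text` below can be used without it.
    """
    from pypdf import PdfReader

    reader = PdfReader(path)
    # extract_text() may give nothing for image-only pages, hence the `or ""`.
    return "\n".join(page.extract_text() or "" for page in reader.pages)


def refine_text(raw: str) -> str:
    """Clean up common PDF-extraction artifacts."""
    text = raw.replace("\r\n", "\n")
    # Re-join words hyphenated across a line break: "experi-\nence" -> "experience".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Collapse runs of spaces and tabs into a single space.
    text = re.sub(r"[ \t]+", " ", text)
    # Strip leading/trailing spaces on every line.
    text = "\n".join(line.strip() for line in text.split("\n"))
    # Keep at most one blank line between paragraphs.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


sample = "Machine  learning experi-\nence\n\n\n\nSkills:\tPython "
print(refine_text(sample))
```

With a real CV, the two functions would be chained as `refine_text(extract_pdf_text("cv.pdf"))`, where `cv.pdf` is a placeholder path.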

Data Structure

- User: A user can have multiple CVs, job descriptions, and meta data.

- CV: A CV can have multiple fields such as education, work experience, skills, etc.

- Job Description: A job description can have multiple fields such as job title, job description, requirements, etc.

- Meta Data: A meta data record can hold items such as projects, blog posts, announcements, working notes, meeting notes, etc.
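One possible way to express these entities is with plain dataclasses. The class and field names below are assumptions derived from the list above, not a final schema.

```python
from dataclasses import dataclass, field


@dataclass
class CV:
    education: list[str] = field(default_factory=list)
    work_experience: list[str] = field(default_factory=list)
    skills: list[str] = field(default_factory=list)


@dataclass
class JobDescription:
    job_title: str
    description: str = ""
    requirements: list[str] = field(default_factory=list)


@dataclass
class MetaData:
    kind: str  # e.g. "project", "blog post", "meeting note"
    content: str


@dataclass
class User:
    name: str
    # One user can own many CVs, job descriptions, and meta data records.
    cvs: list[CV] = field(default_factory=list)
    job_descriptions: list[JobDescription] = field(default_factory=list)
    meta_data: list[MetaData] = field(default_factory=list)


user = User(name="example user")
user.cvs.append(CV(skills=["Python", "Machine Learning"]))
user.job_descriptions.append(
    JobDescription(job_title="Machine Learning Scientist", requirements=["Python"])
)
user.meta_data.append(MetaData(kind="project", content="AI Interview Helper"))
print(user)
```

Using `default_factory=list` gives every instance its own list, so two users never share the same CV list by accident.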

Each document should carry a chain-of-thought template/flow so that it can be reused when building the personal wiki. The data structure should support this, and more specifically the following operations:

- Data masking/privacy protection: Create a deep copy of the entire raw/original data and apply the masking to the copied data.

- Version control for each document: Users can see the history of each document, including (1) the raw/original data, (2) the version refined by LLMs or other models, (3) the version refined by the user, with mandatory comments/reasons for the changes, and (4) the final version.

- Interface for users to upload documents, display the documents, and edit the documents: Future feature.

- Structure of the current database: Users can see how many documents are in the database, a summary of the documents, etc.
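The operations above (excluding the upload/edit interface, which is a future feature) could be sketched like this. `Document`, `Version`, `STAGES`, and `summarize` are hypothetical names, and the in-memory list stands in for whatever database is eventually used.

```python
import copy
from dataclasses import dataclass, field

# The four stages of a document's history, in the order listed above.
STAGES = ("raw", "llm_refined", "user_refined", "final")


@dataclass
class Version:
    stage: str
    text: str
    comment: str = ""


@dataclass
class Document:
    title: str
    history: list[Version] = field(default_factory=list)

    def add_version(self, stage: str, text: str, comment: str = "") -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        # User edits must explain why the text was changed.
        if stage == "user_refined" and not comment.strip():
            raise ValueError("user edits need a comment")
        self.history.append(Version(stage, text, comment))

    def masked_copy(self, masks: dict[str, str]) -> "Document":
        """Deep-copy the document and mask the copy; the original is untouched."""
        clone = copy.deepcopy(self)
        for version in clone.history:
            for original, placeholder in masks.items():
                version.text = version.text.replace(original, placeholder)
        return clone


def summarize(documents: list[Document]) -> dict:
    """Small overview of what is stored."""
    return {
        "count": len(documents),
        "titles": [d.title for d in documents],
        "versions": sum(len(d.history) for d in documents),
    }


doc = Document("CV")
doc.add_version("raw", "Jane Doe, ML engineer")
doc.add_version("llm_refined", "Jane Doe is an ML engineer.")
doc.add_version("user_refined", "Jane Doe is a senior ML engineer.", comment="fix title")

safe = doc.masked_copy({"Jane Doe": "[PERSON_1]"})
print(safe.history[0].text)
print(summarize([doc]))
```

Because `masked_copy` works on a deep copy, the raw history stays available for the user while only the masked clone would be sent to a model.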