CS336 Lecture 13 - Data

- Data is the primary determinant of language model behavior

- Pre-training, mid-training, and post-training form distinct stages of model training

- Example training mixes illustrate the pre/mid/post pipeline and token scales

- There is no simple formalism for dataset selection; curated practices are inductive and empirical

- BERT demonstrated early pre-training on books and Wikipedia and revealed issues like data poisoning risk

- WebText used link popularity heuristics to extract higher-quality web pages

- Common Crawl provides large monthly web snapshots but requires extensive processing

- Common Crawl contains offensive and copyrighted material and respects robots.txt policies variably

- Two principal approaches to web filtering are model-based scoring and rule-based heuristics

- GPT-3 used a mixture of Common Crawl, filtered web text, books, and Wikipedia with a learned quality classifier

- The Pile aggregated diverse high-quality domains to maximize open-source training material

- Stack Exchange and GitHub provide structurally rich, application-relevant corpora but require careful licensing and processing

- Gopher and other research-era large datasets emphasized curated filters and manual heuristics

- LLaMA, RedPajama reproductions, and RedPajama v2 illustrate dataset reproduction, link-aware quality, and large-scale signal computation

- RefinedWeb and FineWeb provide minimally processed web datasets intended for further curation

- DataComp defined a standard benchmarking and competition framework and demonstrated aggressive model-based filtering gains

- Nemotron-CC ensembles model-based scorers and rewrites to increase usable token volume while preserving quality

- Copyright law and licensing are central constraints for dataset collection and sharing

- Training models on copyrighted content raises complex legal and technical issues including memorization and transformative use arguments

- Mid-training and post-training use targeted corpora and synthetic data to instill capabilities such as long context and instruction following

- Dataset engineering is heuristic, consequential, and a primary axis for improving language models

Data is the primary determinant of language model behavior

Data quality, composition, and curation materially determine the capabilities and risks of foundation models.

High-level system choices like architecture, optimization, and tokenization matter, but the empirical performance and emergent behaviors of large language models are primarily driven by what they are trained on:

- data sources (web crawls, books, code, papers, etc.)

- filtering heuristics (rule-based or model-based)

- deduplication strategies

- downstream instructional tuning (instruction data, RLHF)

Commercial disclosure about training data is often limited for competitive or legal reasons, which complicates reproducibility and independent evaluation.

Organizing and staffing data efforts is a distinct, highly scalable operational function separate from architecture development, enabling specialized teams to produce targeted corpora for capabilities like multilinguality, code, or multimodal learning.

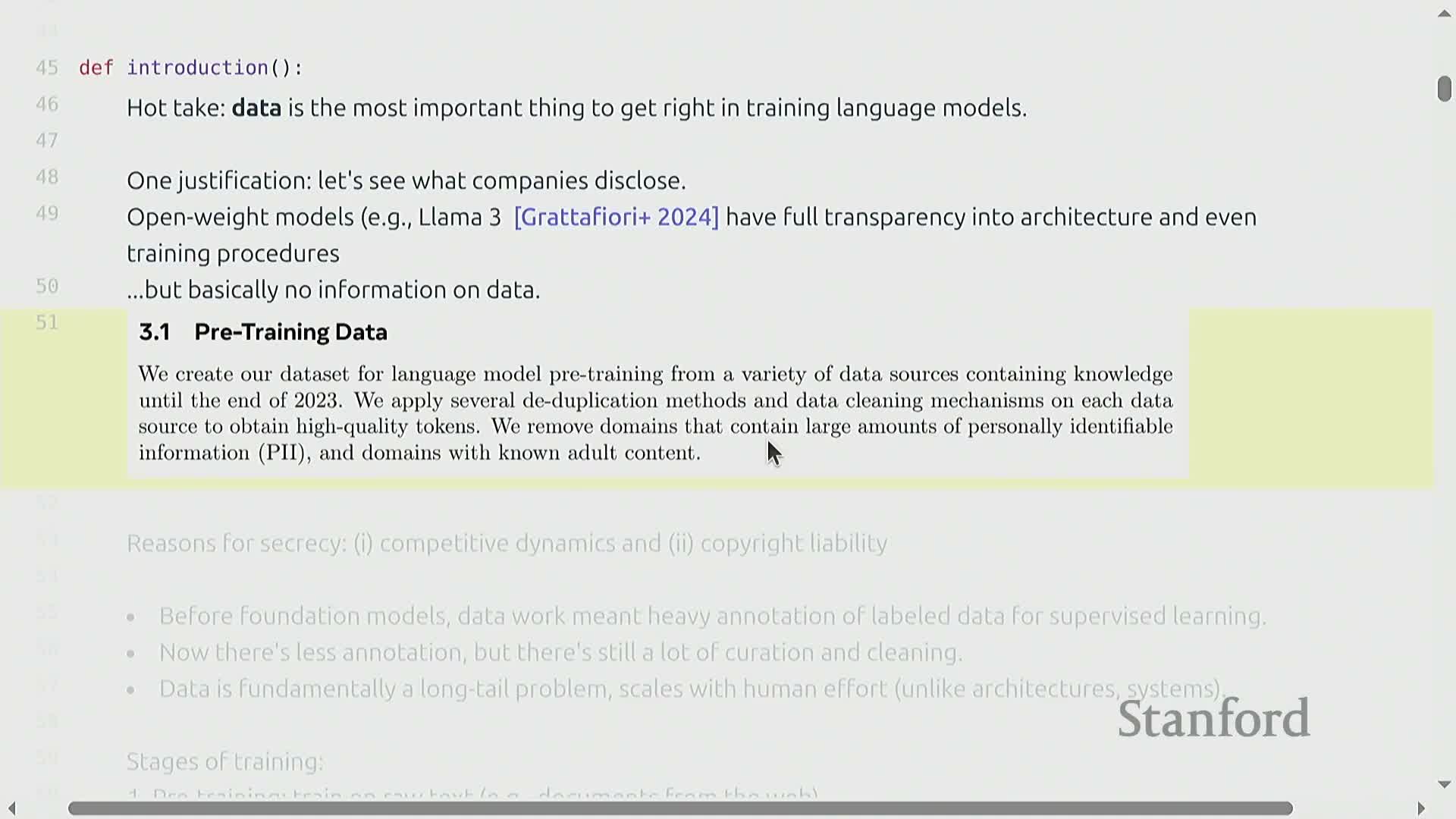

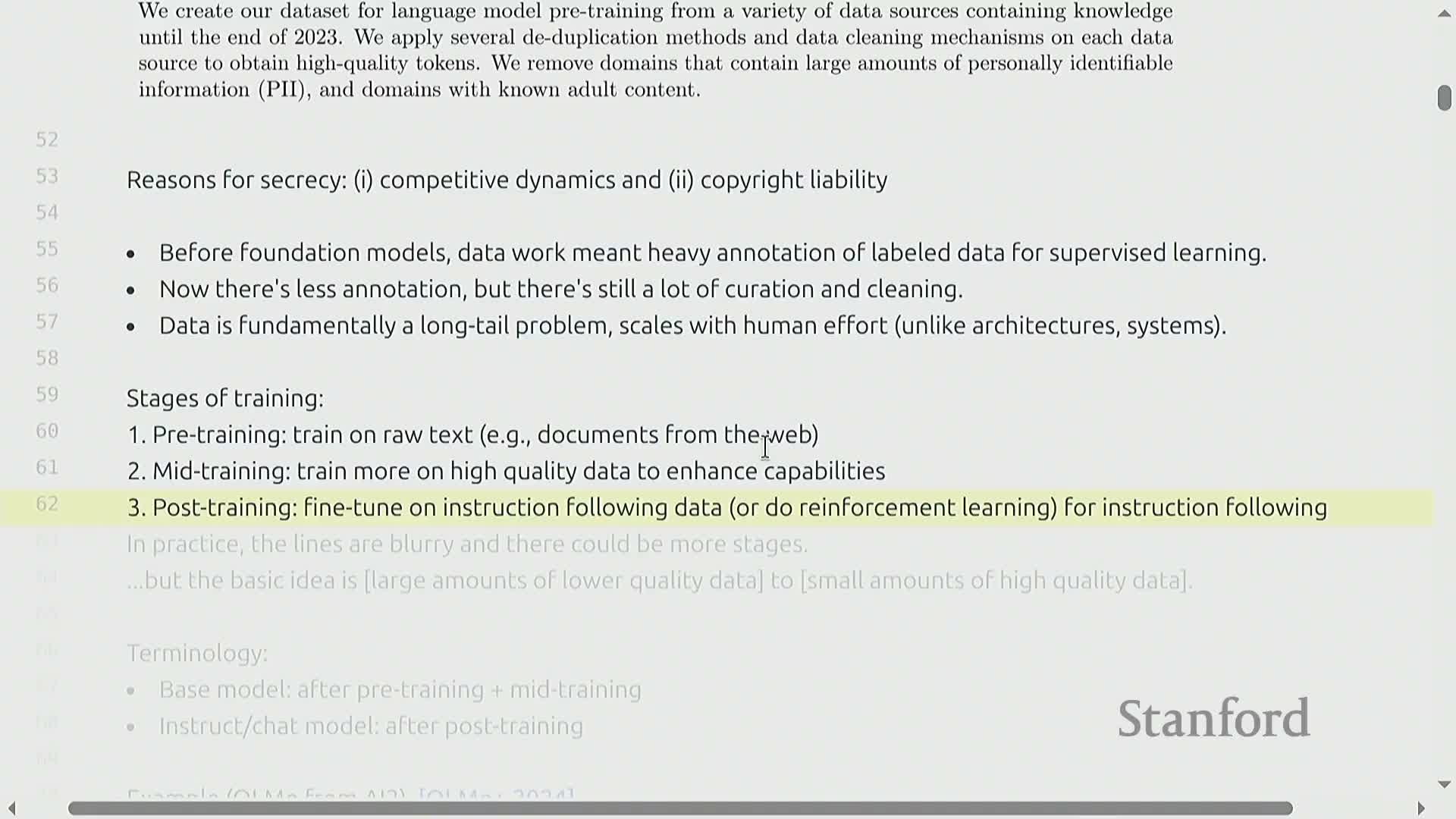

Pre-training, mid-training, and post-training form distinct stages of model training

Model training is commonly organized into three phases:

- Pre-training: large, raw corpora to learn general language priors.

- Mid-training: smaller, curated corpora to develop targeted capabilities (e.g., math, code, long-context).

- Post-training: instruction tuning and reinforcement methods to align models for safe, useful interaction.

Checkpoints after pre‑ and mid‑training are often called base models; models after post‑training are production or instruction‑tuned checkpoints.

In practice, these boundaries blur: organizations use multiple iterative stages and mixtures of data to reach desired behaviors.

Each stage imposes different curation and filtering trade-offs because early stages prioritize scale, while later stages emphasize higher signal‑to‑noise and specific task formats.

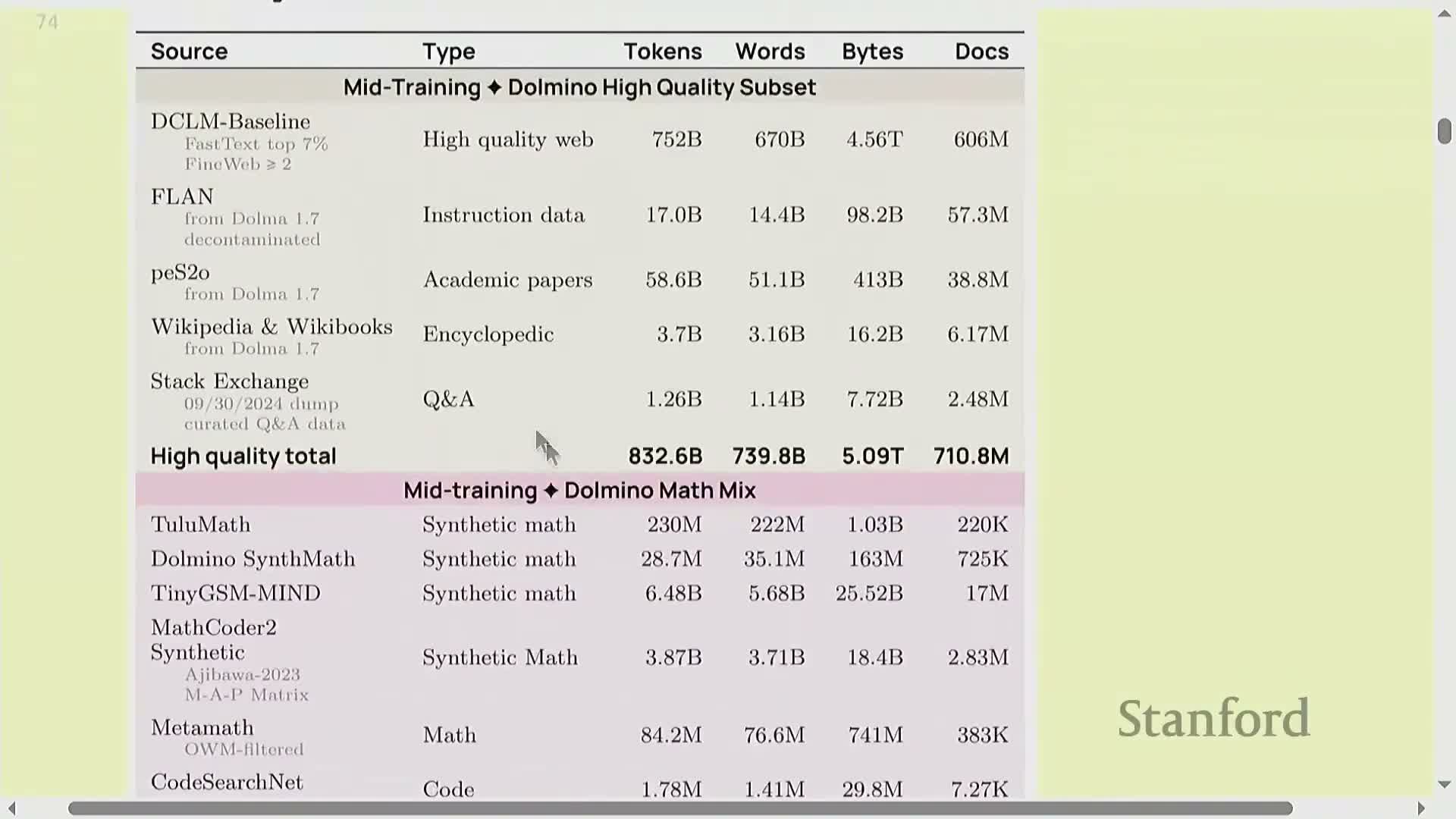

Example training mixes illustrate the pre/mid/post pipeline and token scales

Released open‑source examples show a common pipeline:

- Massive pre‑training mixes dominated by web crawls plus books, code, academic papers, math, and Wikipedia.

- Mid‑training on filtered subsets and synthetic data to focus capabilities.

- Post‑training on chat and instruction‑like data for alignment.

Token counts in public reproductions typically scale down across stages:

- Trillions of tokens in pre‑training

- Tens–hundreds of billions in mid‑training

- Billions in post‑training

This illustrates a progressive focusing from scale → quality.

Synthetic generation is increasingly used at mid and post stages to produce targeted instruction or reasoning traces, and dataset composition choices plus token budgets materially affect which capabilities improve at each stage.

There is no simple formalism for dataset selection; curated practices are inductive and empirical

There is no established theory prescribing exact dataset composition for optimal model behavior; choices remain empirical, heuristic, and context‑dependent.

Data preparation is therefore an inductive process: study historical dataset designs, observe how they map to capabilities, and form principled heuristics for:

- filtering

- deduplication

- augmentation

Practical dataset engineering combines domain knowledge (e.g., including math or code sources) with scalable collection and quality pipelines.

The lack of formal principles motivates reproducibility efforts, ablation studies, and benchmarks that compare filtering strategies and data mixes.

BERT demonstrated early pre-training on books and Wikipedia and revealed issues like data poisoning risk

Early transformer pre‑training relied on curated, high‑quality corpora such as books and Wikipedia to learn robust language representations and long‑context structure.

Public book collections (e.g., Smashwords‑derived corpora, Project Gutenberg) and Wikipedia provide coherent long‑form and factual content but do not cover informal or opinionated genres like recipes or personal blogs.

Public datasets that mirror live services can be vulnerable to data poisoning, where adversarial edits are timed to appear in dumps—showing that training data from live services contains manipulation risks and requires pipeline‑level safeguards.

Adversarial or erroneous content in foundational sources can produce undesirable model behaviors if not detected and removed.

WebText used link popularity heuristics to extract higher-quality web pages

WebText (the GPT-2 corpus) used a pragmatic heuristic to get a diverse but higher-quality subset of the web: extract pages linked from Reddit posts that received at least 3 karma.

This produced a compact set of higher-signal documents (about 8 million pages and roughly 40 GB of text in the original work) compared to undifferentiated web crawls.

The approach highlights the value of using social signals or other external proxies to select content likely to be high quality and human‑valued.

However, proprietary crawls and heuristics used by many developers are often not fully disclosed, limiting reproducibility.
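The karma heuristic described above can be sketched in a few lines. This is a minimal illustration, not the original WebText code: the `Submission` record and the `select_links` function are hypothetical names, and the default threshold of 3 is the value reported in the GPT-2 paper.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Submission:
    url: str    # outbound link posted to the forum
    karma: int  # net votes the post received


def select_links(submissions, min_karma=3):
    """Return deduplicated http(s) URLs from posts with karma >= min_karma."""
    seen = set()
    kept = []
    for sub in submissions:
        if sub.karma < min_karma:
            continue  # too few votes to count as a quality signal
        if urlparse(sub.url).scheme not in ("http", "https"):
            continue  # skip non-web links
        if sub.url not in seen:
            seen.add(sub.url)
            kept.append(sub.url)
    return kept


posts = [
    Submission("https://example.com/essay", 12),
    Submission("https://example.com/spam", 0),
    Submission("https://example.com/essay", 5),  # same link posted twice
    Submission("ftp://example.com/file", 40),
]
print(select_links(posts))
```

The selected URLs would then be fetched and converted to text; the social signal only decides which pages are worth fetching.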

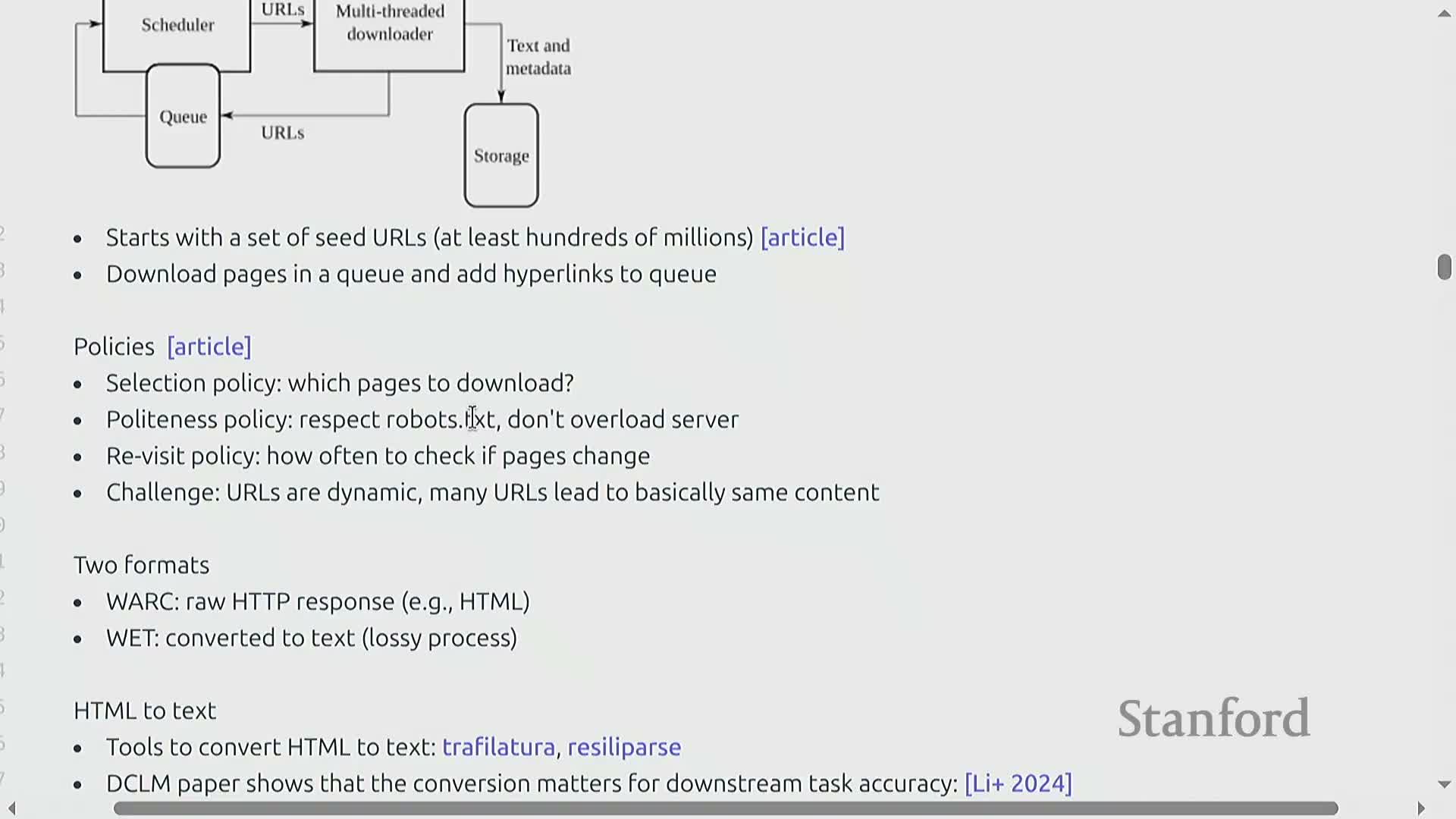

Common Crawl provides large monthly web snapshots but requires extensive processing

Common Crawl is an open, monthly web crawl that provides raw HTTP responses and WARC archives for billions of pages—an academic approximation of the web.

Raw data requires heavy preprocessing before training, including:

- HTML→text conversion (WET files or custom parsers)

- deduplication

- language identification

- filtering for boilerplate, dynamic URLs, and near-duplicates

The HTML‑to‑text toolchain materially affects downstream model quality: different extractors yield measurable performance differences in ablations.
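To make the extraction step concrete, here is a minimal sketch of an HTML-to-text converter built only on Python's standard-library `html.parser`. It is not the extractor used by any dataset mentioned here; the set of block-level tags and the skip list are assumptions chosen for illustration, and production extractors handle far more cases (navigation, ads, tables).

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    SKIP = {"script", "style", "noscript"}  # content that is never visible text
    BLOCK = {"p", "div", "br", "li", "tr", "title",
             "h1", "h2", "h3", "h4", "h5", "h6"}  # assumed line-breaking tags

    def __init__(self):
        super().__init__()
        self.parts = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag in self.BLOCK:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag in self.BLOCK:
            self.parts.append("\n")

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html):
    """Return visible text, one line per block element, whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    lines = (" ".join(line.split()) for line in "".join(parser.parts).split("\n"))
    return "\n".join(line for line in lines if line)


page = ("<html><head><style>p{color:red}</style></head><body>"
        "<h1>Title</h1><p>Hello   <b>world</b>.</p>"
        "<script>track()</script></body></html>")
print(html_to_text(page))
```

Even in this toy version, the choice of which tags break lines and which are skipped changes the output text, which is the kind of difference that shows up in extractor ablations.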

Common Crawl is deliberately conservative in crawling behavior and is not a comprehensive mirror of the full web, so downstream datasets often supplement it with targeted crawls or third‑party sources.

Common Crawl contains offensive and copyrighted material and respects robots.txt policies variably

Common Crawl does not perform aggressive semantic filtering by default, so raw dumps include offensive, toxic, and copyrighted content, and handling of illegal or disallowed pages is limited.

- Web hosts can request exclusion via robots.txt or other crawler rules, but adherence varies and is partly heuristic.

- Major model developers often run bespoke crawlers beyond Common Crawl.

- Media (images, etc.) may appear in raw responses but are frequently ignored by text pipelines unless explicit media crawlers are used.

The prevalence of copyrighted material complicates legal usage; teams typically address this with licensing agreements or fair‑use arguments, while content owners may restrict downstream uses via terms of service.

Two principal approaches to web filtering are model-based scoring and rule-based heuristics

Filtering large web crawls into high‑quality corpora has been implemented via two main paradigms:

- Model-based approaches (e.g., CCNet) train a classifier or language model to score documents against curated positive examples (Wikipedia, books). These enable language-aware quality judgments but can concentrate content toward the positive example distribution.

- Rule-based heuristics (e.g., C4) apply deterministic filters (punctuation counts, sentence thresholds, blacklists). They preserve broader linguistic diversity but can admit well-formed spam or boilerplate.

Both paradigms have trade‑offs: model‑based selection amplifies similarity to positives; rule‑based selection preserves diversity at the cost of admitting noise.
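As a rough illustration of the rule-based paradigm, the sketch below applies the kinds of deterministic checks described above: keep only lines that end in terminal punctuation, require a minimum number of sentences, and reject documents containing blocklisted phrases. The thresholds, the blocklist, and the function name `clean_document` are assumptions for illustration and are not the exact C4 rules.

```python
import re

TERMINAL = (".", "!", "?", '"')
BLOCKLIST = {"lorem ipsum"}  # illustrative placeholder, not a real blocklist


def clean_document(text, min_words_per_line=3, min_sentences=3):
    """Return the cleaned text, or None if the document is rejected."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in BLOCKLIST):
        return None
    kept = []
    for line in text.splitlines():
        line = line.strip()
        # Keep only lines that look like prose: terminal punctuation, enough words.
        if line.endswith(TERMINAL) and len(line.split()) >= min_words_per_line:
            kept.append(line)
    cleaned = "\n".join(kept)
    # Crude sentence count: runs of sentence-ending punctuation.
    if len(re.findall(r"[.!?]+", cleaned)) < min_sentences:
        return None
    return cleaned


doc = (
    "Home | About | Contact\n"
    "The crawler fetched the page on Monday.\n"
    "It parsed the links. Then it stored the text.\n"
    "Click here"
)
print(clean_document(doc))
```

Note that well-formed spam passes every one of these checks, which is the weakness of rule-based selection noted above.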

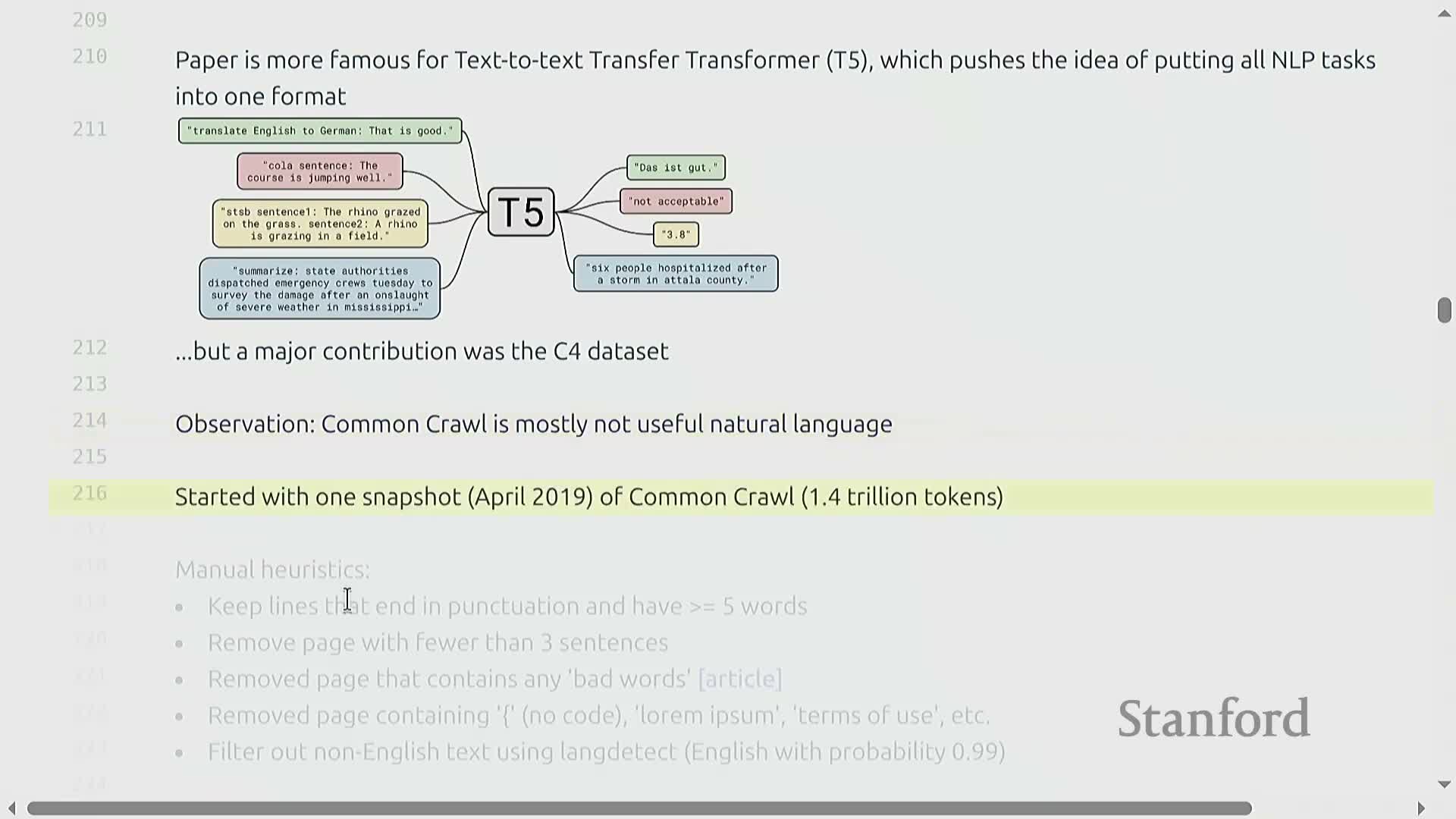

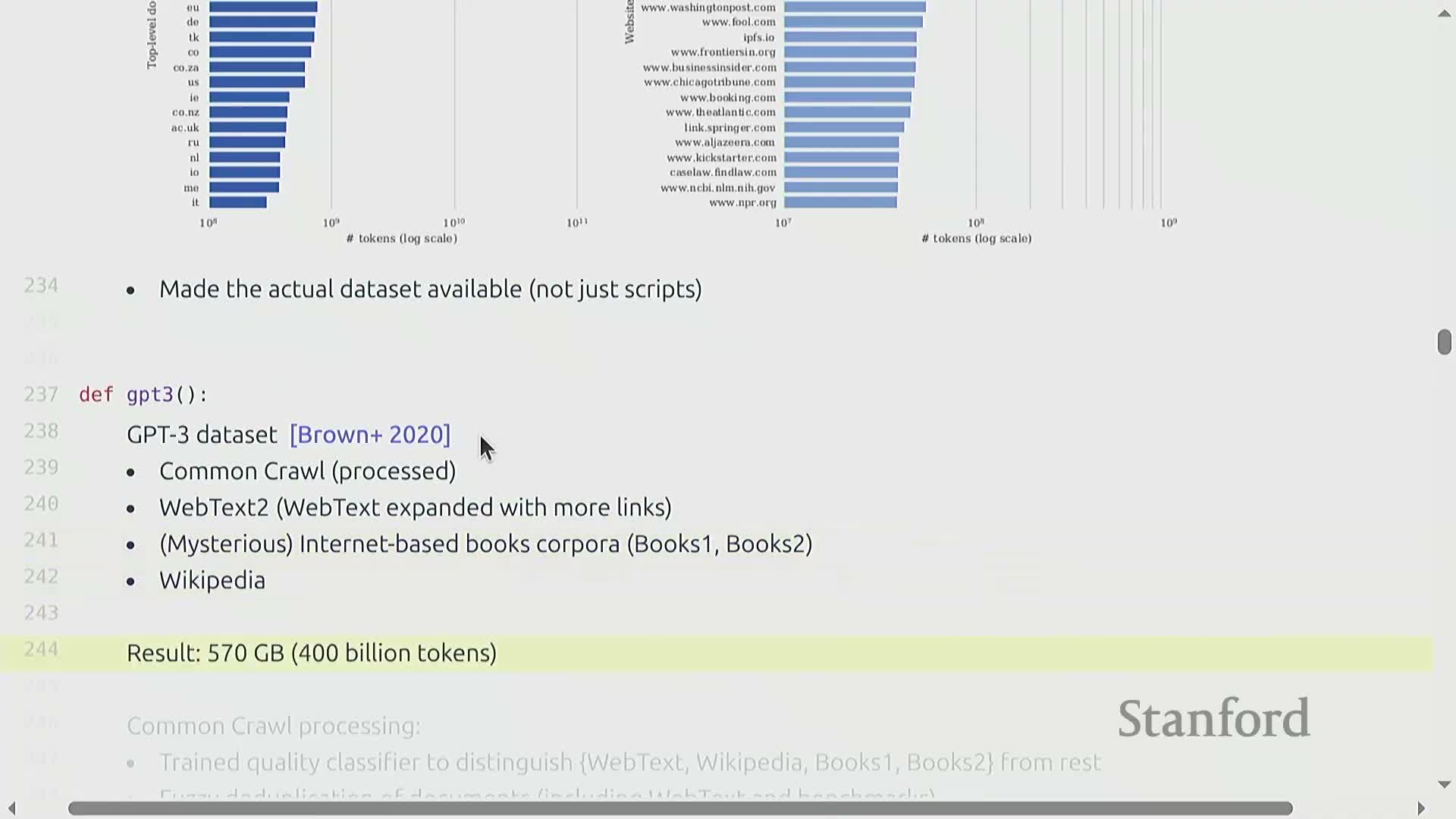

GPT-3 used a mixture of Common Crawl, filtered web text, books, and Wikipedia with a learned quality classifier

The GPT-3 training mix combined filtered Common Crawl, WebText2, curated book corpora (Books1/Books2), and Wikipedia; a quality classifier was applied to the Common Crawl portion to surface high-signal documents.

Key aspects of the classifier‑based pipeline:

- Select positive examples representative of desired quality.

- Train a classifier to find similar material across a much larger pool.

- Produce a focused ~400B‑token training mix by amplifying scarce high‑quality sources.

This demonstrates the utility of supervised quality scoring to extract high‑signal content from massive raw pools, but proprietary training mixes and classifier details are often incompletely disclosed, limiting reproducibility and independent evaluation.
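The classifier-based steps above (pick positives, train, score a larger pool) can be sketched with a tiny unigram naive Bayes model. This is a stand-in chosen because it needs only the standard library; it is not GPT-3's actual classifier or feature set, and `QualityClassifier` is a hypothetical name.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class QualityClassifier:
    """Unigram naive Bayes with add-one smoothing: positives vs. raw-pool text."""

    def fit(self, positives, negatives):
        self.pos = Counter(t for d in positives for t in tokenize(d))
        self.neg = Counter(t for d in negatives for t in tokenize(d))
        self.vocab = set(self.pos) | set(self.neg)
        self.prior = math.log(len(positives) / len(negatives))
        return self

    def score(self, doc):
        """Log-odds that doc resembles the positive set (higher is better)."""
        v = len(self.vocab)
        pos_total = sum(self.pos.values())
        neg_total = sum(self.neg.values())
        s = self.prior
        for tok in tokenize(doc):
            if tok not in self.vocab:
                continue  # tokens never seen in training carry no evidence
            s += math.log((self.pos[tok] + 1) / (pos_total + v))
            s -= math.log((self.neg[tok] + 1) / (neg_total + v))
        return s


positives = ["the theorem follows from the lemma",
             "the experiment measured the reaction rate"]
negatives = ["click here to win free prizes",
             "buy cheap pills click now"]
clf = QualityClassifier().fit(positives, negatives)

for candidate in ["the lemma implies the theorem", "click to win cheap prizes"]:
    print(round(clf.score(candidate), 2), candidate)
```

Because the score measures similarity to the positives, the choice of positive examples largely decides what the filtered corpus looks like.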

The Pile aggregated diverse high-quality domains to maximize open-source training material

The Pile is an open, community‑assembled dataset aggregating multiple high‑quality domains: Common Crawl subsets, OpenWebText, arXiv, PubMed Central, Stack Exchange, GitHub, Project Gutenberg, and curated book collections.

Goals and practices:

- Maximize domain diversity and include permissively licensed material where possible.

- Perform licensing checks, deduplication, and conversion choices to preserve code and long‑form attributes.

The Pile shows how curated open datasets can replicate—and sometimes exceed—proprietary mixes when volunteers and institutions coordinate collection and processing.

Stack Exchange and GitHub provide structurally rich, application-relevant corpora but require careful licensing and processing

Stack Exchange dumps and GitHub repositories contribute QA‑style conversational data and code, respectively—both valuable for instruction‑following and reasoning behaviors.

Important considerations:

- These sources include metadata (votes, comments, commit history) that support fine‑grained filtering and high‑signal selection.

- They often carry licensing constraints and commercial‑use caveats (license‑aware inclusion policies are required).

- Converting repo snapshots into tokenizable training data needs careful handling of non‑code files, deduplication, and licensing; permissive licenses facilitate open redistribution (e.g., The Stack).

Random sampling reveals large heterogeneity in quality and content distribution, so preprocessing choices strongly affect final dataset composition.
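A minimal sketch of metadata-driven selection follows, assuming each record already carries a license identifier or vote count. The record types, the license allowlist, the extension list, and the vote threshold are all illustrative assumptions rather than the policy of any dataset named above.

```python
from dataclasses import dataclass

PERMISSIVE_LICENSES = {"mit", "apache-2.0", "bsd-3-clause"}  # example allowlist
CODE_EXTENSIONS = (".py", ".js", ".go", ".rs")               # example extensions


@dataclass
class RepoFile:
    path: str
    license: str  # SPDX-style identifier; empty string if unknown
    content: str


@dataclass
class Answer:
    body: str
    votes: int
    accepted: bool


def select_code(files):
    """Keep permissively licensed source files, dropping exact duplicates."""
    kept, seen = [], set()
    for f in files:
        if f.license.lower() not in PERMISSIVE_LICENSES:
            continue
        if not f.path.endswith(CODE_EXTENSIONS):
            continue  # non-code file such as an image or a binary
        if f.content in seen:
            continue  # exact copy, for example from a fork
        seen.add(f.content)
        kept.append(f)
    return kept


def select_answers(answers, min_votes=2):
    """Keep answers that were accepted or reached the vote threshold."""
    return [a for a in answers if a.accepted or a.votes >= min_votes]


files = [
    RepoFile("src/app.py", "MIT", "print('hi')"),
    RepoFile("fork/app.py", "MIT", "print('hi')"),
    RepoFile("lib/core.py", "GPL-3.0", "x = 1"),
    RepoFile("logo.png", "MIT", "<binary>"),
]
answers = [
    Answer("Use a set.", 5, False),
    Answer("idk", 0, False),
    Answer("Try sorted().", 1, True),
]
print([f.path for f in select_code(files)])
print([a.body for a in select_answers(answers)])
```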

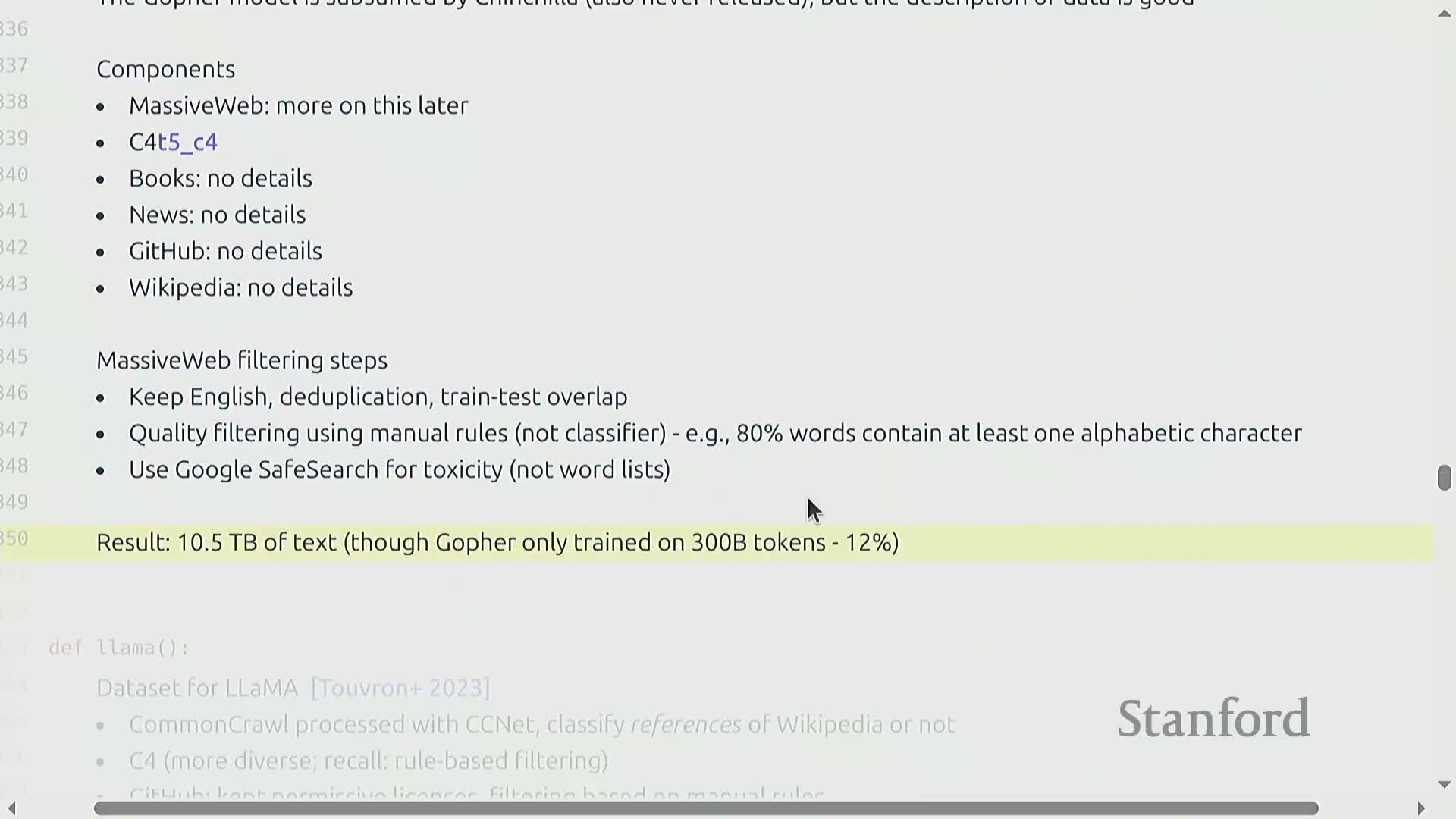

Gopher and other research-era large datasets emphasized curated filters and manual heuristics

Research models such as DeepMind’s Gopher collected massive text sources and applied language‑specific filters, rule‑based heuristics, and toxicity checks to improve signal‑to‑noise in the pre‑training pool.

These efforts favored manual rule-based filters partly because the quality classifiers of the time were weak, and partly because simple, conservative rules were considered less likely than a learned classifier to exclude marginalized or non-standard linguistic forms.

Massive curated pools were often larger than required by training runs, enabling selective training on the best subsets and iterative dataset design.

Design choices in these datasets influenced later model‑based filtering approaches and the community trade‑offs between coverage and quality.

LLaMA, RedPajama reproductions, and RedPajama v2 illustrate dataset reproduction, link-aware quality, and large-scale signal computation

LLaMA combined Common Crawl processed with CCNet-style filters, C4, permissively licensed GitHub, Wikipedia, and multiple book sources to produce a training mix of more than one trillion tokens; the exact proprietary mix was not released but has been materially reproduced (e.g., RedPajama).

Variants like RedPajama v2 process multiple Common Crawl snapshots and compute many quality signals at scale to produce candidate corpora for research into filtering strategies.

Alternative signals include link‑structure classifiers that predict whether pages are cited by Wikipedia, leveraging web link graphs as proxies for quality.

These reproductions highlight challenges of exact replication and the benefits of publishing both data and filtering code for the research community.

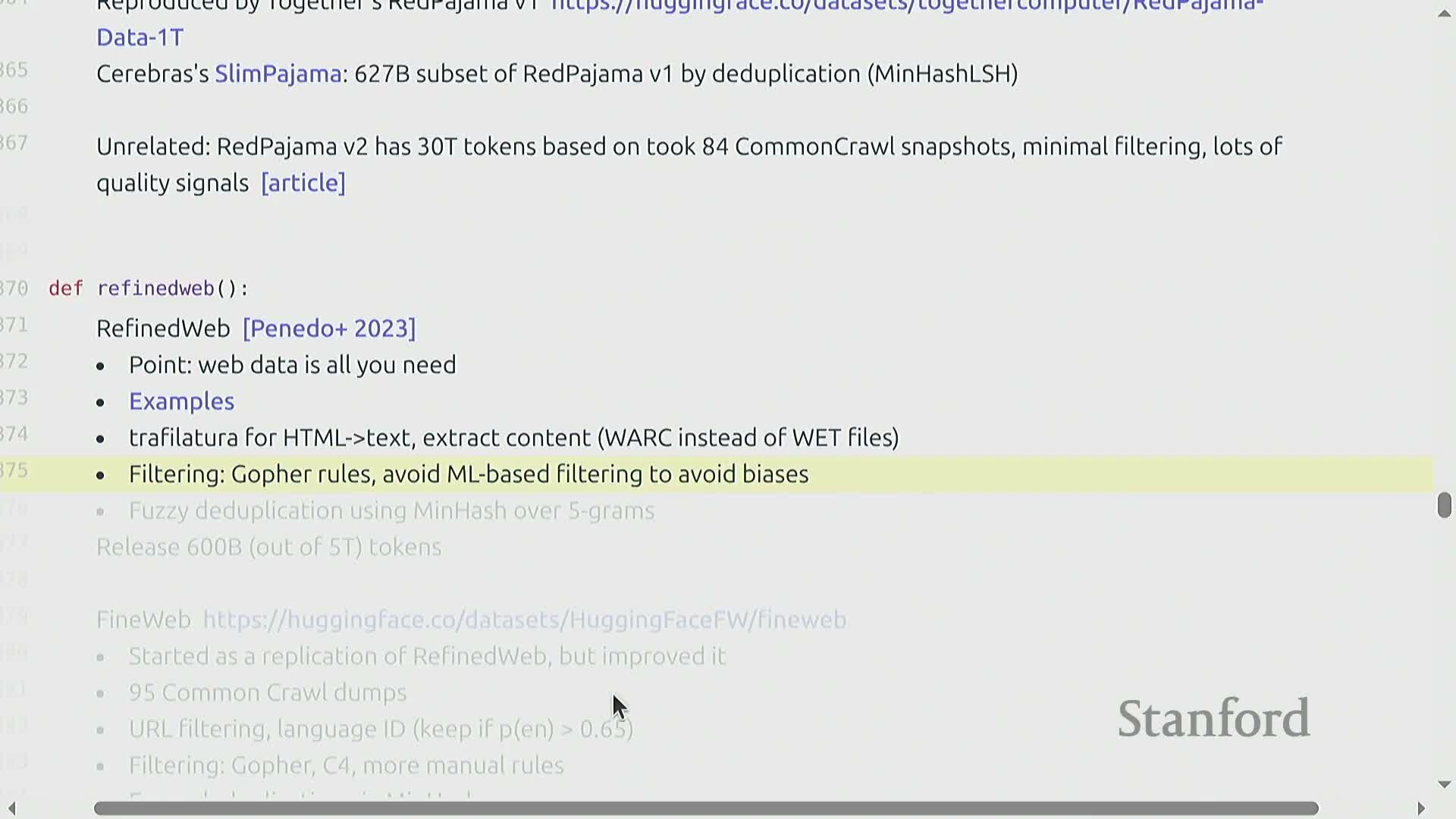

RefinedWeb and FineWeb provide minimally processed web datasets intended for further curation

RefinedWeb and FineWeb produce lightly filtered, deduplicated, and extract‑cleaned Common Crawl derivatives that preserve broad coverage while removing obvious noise.

Typical priorities:

- High‑quality HTML→text extraction

- Fuzzy deduplication

- Conservative rule‑based removal of low‑signal documents

These datasets yield multi‑trillion‑token pools with released subsets intended as researcher‑friendly starting points for subsequent model‑based filtering or task‑specific selection, preserving linguistic diversity that Wikipedia‑centric filters might eliminate.
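Fuzzy deduplication is commonly implemented with MinHash signatures, which estimate the Jaccard similarity between documents' shingle sets. The sketch below is a small, unoptimized version for illustration: the shingle size, number of hashes, and threshold are assumptions, and it compares every pair of documents, whereas large pipelines typically add locality-sensitive hashing to avoid that quadratic comparison.

```python
import hashlib


def shingles(text, n=3):
    """Set of lowercase word n-grams; short texts yield a single shingle."""
    words = text.lower().split()
    return {" ".join(words[i:i + n]) for i in range(max(1, len(words) - n + 1))}


def minhash(text, num_hashes=64):
    """Signature: for each salted hash function, the minimum hash over shingles."""
    sh = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "big",
            )
            for s in sh
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching positions approximates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def dedup(docs, threshold=0.7):
    """Keep the first document of each near-duplicate group."""
    kept, signatures = [], []
    for doc in docs:
        sig = minhash(doc)
        if all(estimated_jaccard(sig, other) < threshold for other in signatures):
            kept.append(doc)
            signatures.append(sig)
    return kept


docs = [
    "The quick brown fox jumps over the lazy dog",
    "the quick  brown fox jumps over the lazy dog",  # differs only in case and spacing
    "Completely different text about web crawling pipelines",
]
print(dedup(docs))
```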

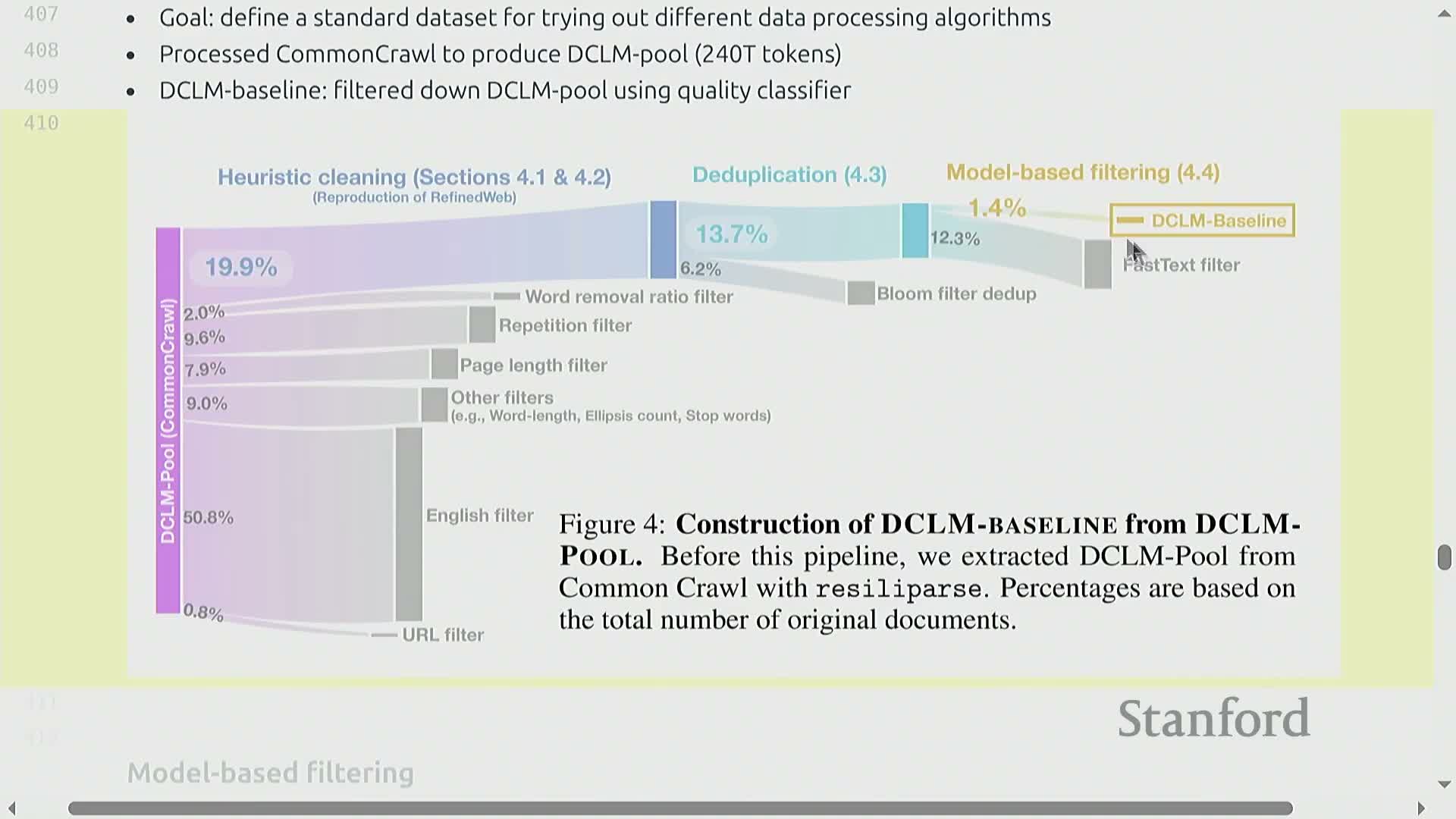

DataComp defined a standard benchmarking and competition framework and demonstrated aggressive model-based filtering gains

DataComp-LM (DCLM) built standardized infrastructure to process all Common Crawl dumps into a massive pool (DCLM-Pool), then applied a staged recipe that filters it down to DCLM-Baseline using rule-based filters plus a learned quality classifier.

Key elements:

- Label positive examples (e.g., instruction-like data from OpenHermes, ELI5).

- Train a fastText classifier to score candidates.

- Aggressively filter the large raw pool to a small high‑quality subset.

Aggressive model‑in‑the‑loop filtering delivered substantial downstream gains on language modeling benchmarks and catalyzed reproducible comparisons of filtering strategies through open artifacts and baselines.
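The final filtering step above amounts to ranking the pool by classifier score and keeping only a small top fraction. A minimal sketch, assuming a scoring function is already available; `toy_score` is a hypothetical stand-in for a trained classifier, and the fraction used here is chosen only to make the toy example readable.

```python
CUES = {"explain", "why", "how", "because", "step"}  # toy "instruction-like" cues


def toy_score(doc):
    """Stand-in for a learned classifier: share of tokens that are cue words."""
    tokens = doc.lower().split()
    return sum(t.strip(".,?!:") in CUES for t in tokens) / max(1, len(tokens))


def keep_top_fraction(docs, score_fn, fraction=0.1):
    """Rank by score (highest first) and keep the top fraction, at least one doc."""
    ranked = sorted(docs, key=score_fn, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return ranked[:k]


pool = [
    "How does a crawler work? Explain step by step.",
    "Win free prizes now",
    "Because links decay, archives matter.",
    "lol",
]
print(keep_top_fraction(pool, toy_score, fraction=0.5))
```

With a real pool the kept fraction is a tunable knob: smaller values raise average quality but shrink the number of usable tokens.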

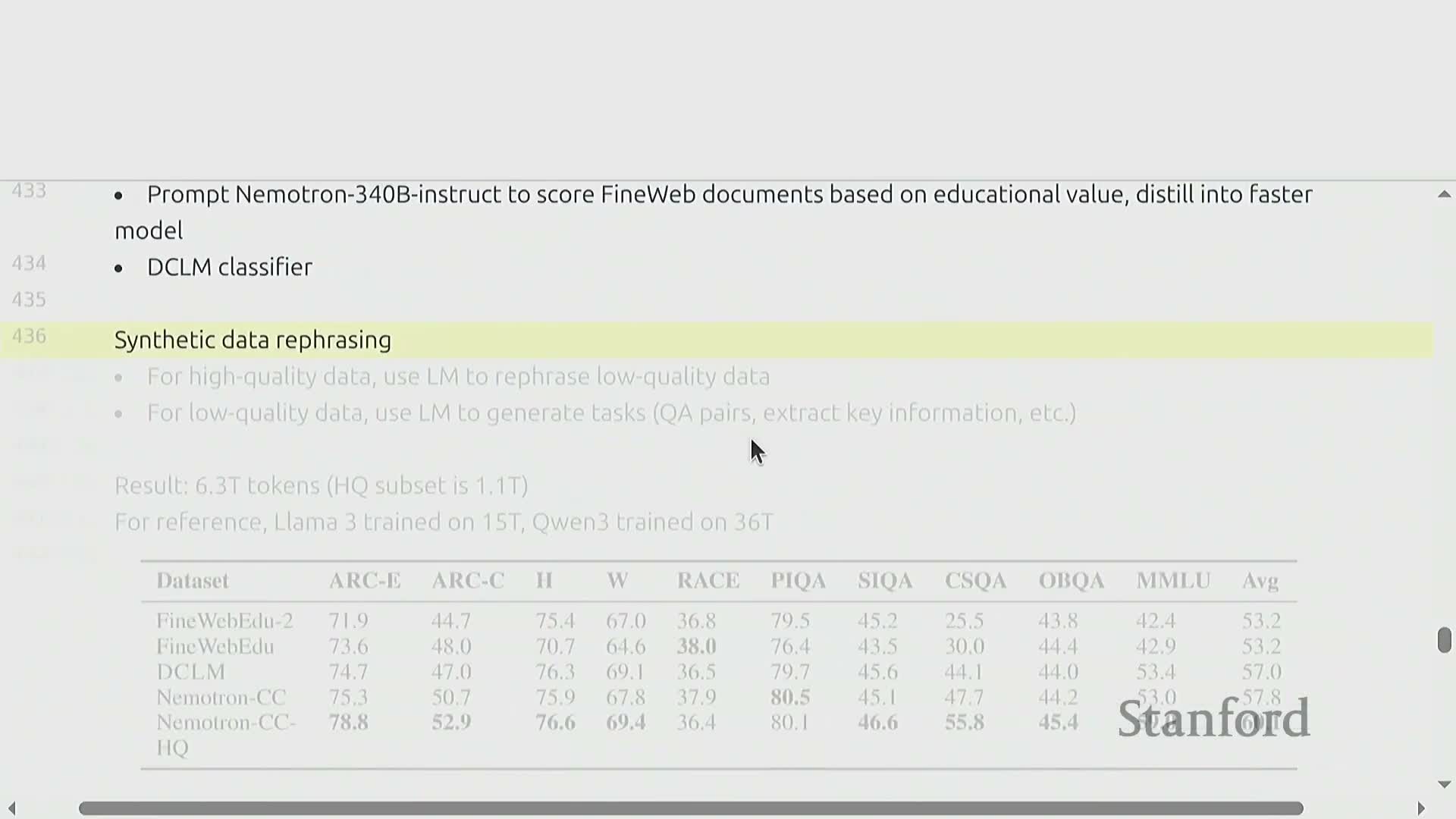

Nemotron-CC ensembles model-based scorers and rewrites to increase usable token volume while preserving quality

Nemotron-CC addressed the scale-quality trade-off by combining multiple scoring models, bucket-based sampling, and token-preserving extraction to recover more usable tokens from Common Crawl while maintaining benchmark performance.

Techniques used:

- Use large models to score documents for educational value.

- Ensemble scores with DCLM classifiers and sample from score buckets to preserve coverage.

- In some cases, use models to rewrite lower‑quality texts into higher‑quality forms or generate task‑like input‑output pairs from high‑quality documents.

This increased the available high‑quality token pool to several trillion tokens and produced subsets that outperformed aggressively pruned baselines on standard benchmarks—illustrating ensemble and rewrite techniques as practical mechanisms to expand training budgets without sacrificing curated signal.
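The ensemble-and-bucket idea can be sketched as follows. Combining scorers by taking the maximum, the number of buckets, and the per-bucket keep rates are all assumptions made for illustration, and the two toy scorers stand in for real quality models.

```python
import random


def ensemble_score(doc, scorers):
    """Combine scorers by max, so a doc rated highly by any one scorer ranks high."""
    return max(scorer(doc) for scorer in scorers)


def bucketize(docs, scorers, num_buckets=4):
    """Assign each doc to a bucket by ensemble score (scores assumed in [0, 1])."""
    buckets = [[] for _ in range(num_buckets)]
    for doc in docs:
        score = ensemble_score(doc, scorers)
        index = min(int(score * num_buckets), num_buckets - 1)
        buckets[index].append(doc)
    return buckets


def sample_from_buckets(buckets, keep_rates, seed=0):
    """Keep each doc with its bucket's rate, instead of a single hard cutoff."""
    rng = random.Random(seed)
    sampled = []
    for bucket, rate in zip(buckets, keep_rates):
        sampled.extend(doc for doc in bucket if rng.random() < rate)
    return sampled


# Toy scorers standing in for real quality models.
def length_score(doc):
    return min(len(doc.split()) / 10, 1.0)


def punctuation_score(doc):
    return 1.0 if doc.strip().endswith(".") else 0.0


docs = [
    "spam",
    "Short.",
    "no punctuation here at all",
    "A much longer sentence with more than ten words in it overall.",
]
buckets = bucketize(docs, [length_score, punctuation_score])
print(sample_from_buckets(buckets, keep_rates=[0.0, 0.5, 1.0, 1.0]))
```

Fractional keep rates for the middle buckets are what preserve coverage: some mid-scoring documents survive rather than being cut entirely.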

Copyright law and licensing are central constraints for dataset collection and sharing

Copyright protects original expressive works fixed in a tangible medium, and most internet text is copyrighted even without registration.

Legal pathways for dataset use include:

- Licenses from rights holders

- Fair use defenses (assessed by purpose, nature, amount used, and market effect)

Platform licensing agreements and terms of service (YouTube, Reddit, GitHub, etc.) can restrict automated crawling and downstream model training even when content is publicly accessible.

For research and production datasets, explicit licensing choices or risk assessments about fair use and market effects are essential to determine lawful inclusion and redistribution strategies.

Training models on copyrighted content raises complex legal and technical issues including memorization and transformative use arguments

Using copyrighted material for training can implicate reproduction rights because training copies data into storage and models can sometimes memorize and regurgitate verbatim excerpts.

Common legal and technical considerations:

- Defenses include arguing training is transformative—extracting abstract patterns rather than reproducing expression—but the legal status is unsettled and fact‑specific.

- Platform terms of service may independently prohibit automated extraction, so datasets must account for contractual constraints as well as copyright law.

- Technical mitigations: deduplication, removal of verbatim copyrighted passages, and auditing to limit extractable memorized content.
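One simple form of the auditing mentioned above is to measure how many of a model output's word n-grams appear verbatim in a set of protected documents. The sketch below is a minimal version under that assumption; the function names and the n-gram length are illustrative, and real audits work at much larger scale with indexed lookups.

```python
def ngrams(tokens, n):
    """Set of contiguous n-token tuples; empty if the text is shorter than n."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(output, protected_docs, n=8):
    """Fraction of the output's n-grams found verbatim in any protected doc."""
    output_grams = ngrams(output.split(), n)
    if not output_grams:
        return 0.0
    protected = set()
    for doc in protected_docs:
        protected |= ngrams(doc.split(), n)
    return len(output_grams & protected) / len(output_grams)


protected_docs = ["it was the best of times it was the worst of times"]

copied = "it was the best of times it was the worst of times"
fresh = "a completely unrelated sentence about data pipelines"
print(verbatim_overlap(copied, protected_docs, n=4))
print(verbatim_overlap(fresh, protected_docs, n=4))
```

Outputs with a high overlap score can be flagged for review, and the matching source passages become candidates for removal or deduplication.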

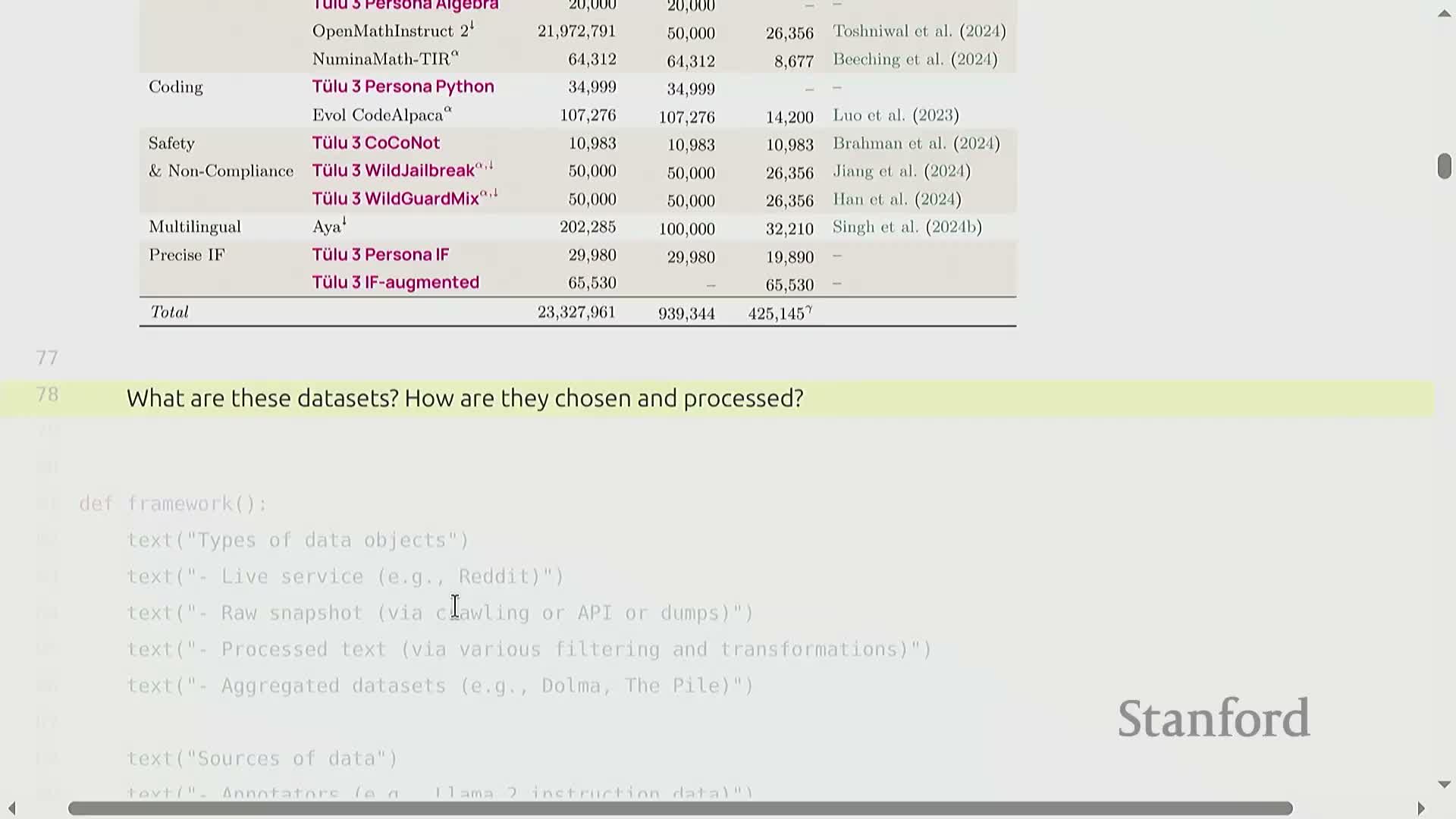

Mid-training and post-training use targeted corpora and synthetic data to instill capabilities such as long context and instruction following

Mid-training often focuses on capability extension, for example training on longer documents (books, mathematical text) to enable long-context modeling. Because the cost of self-attention grows quadratically with sequence length, long-context training is expensive and is usually deferred until the model is already capable.

Instruction tuning and post‑training exploit task‑structured datasets by converting benchmarks into unified prompt‑response formats (e.g., FLAN, SuperNaturalInstructions) or by synthesizing instruction data using existing models (self‑instruct, Alpaca, Vicuna).
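Converting a labeled benchmark into a unified prompt-response format is mostly a matter of templating. The sketch below shows the idea with two made-up templates; the wording of the templates, the task names, and the `to_instruction` helper are illustrative assumptions, not the templates used by FLAN or any other collection.

```python
TEMPLATES = {
    "nli": (
        "Premise: {premise}\n"
        "Hypothesis: {hypothesis}\n"
        "Does the premise entail the hypothesis? Answer yes, no, or maybe."
    ),
    "sentiment": "Review: {text}\nIs this review positive or negative?",
}


def to_instruction(task, example):
    """Render one labeled example as a prompt/response pair."""
    fields = {key: value for key, value in example.items() if key != "label"}
    return {
        "prompt": TEMPLATES[task].format(**fields),
        "response": example["label"],
    }


nli_example = {
    "premise": "A dog runs.",
    "hypothesis": "An animal moves.",
    "label": "yes",
}
sentiment_example = {"text": "Great battery life.", "label": "positive"}

for task, example in [("nli", nli_example), ("sentiment", sentiment_example)]:
    pair = to_instruction(task, example)
    print(pair["prompt"])
    print("->", pair["response"])
```

Using several paraphrased templates per task is a common way to keep the model from overfitting to one prompt wording.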

Notes on synthetic pipelines and human alignment:

- Synthetic generation can produce large amounts of conversational or reasoning‑trace data by prompting stronger models, but generator license terms may restrict their use.

- Human annotation and RLHF remain important for alignment, though they are more expensive and slower than synthetic methods.

Dataset engineering is heuristic, consequential, and a primary axis for improving language models

Effective language model development requires an end‑to‑end data pipeline that includes:

- Acquiring live service snapshots.

- Converting raw responses to tokenizable text.

- Language identification.

- Deduplication.

- Quality filtering and license checking.

- Constructing staged mixes for pre‑, mid‑, and post‑training.

Small implementation choices—HTML extractor, deduplication thresholds, positive example selection for classifiers, sampling from score buckets—have measurable downstream impacts on model behavior and benchmarks.
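Since each stage's settings affect what survives, it helps to run the stages through a small harness that reports how many documents each one removes. The sketch below is one possible shape for such a harness; the stage names and the three toy stages are assumptions for illustration, standing in for real extraction, filtering, and deduplication steps.

```python
def run_pipeline(docs, stages):
    """Apply (name, fn) stages in order; fn returns a doc, or None to drop it.

    Returns the surviving docs and a report of (name, docs_in, docs_out).
    """
    report = []
    for name, fn in stages:
        survivors = []
        for doc in docs:
            result = fn(doc)
            if result is not None:
                survivors.append(result)
        report.append((name, len(docs), len(survivors)))
        docs = survivors
    return docs, report


def make_exact_dedup():
    """Stateful stage: drop any doc whose exact text was already seen."""
    seen = set()

    def stage(doc):
        if doc in seen:
            return None
        seen.add(doc)
        return doc

    return stage


stages = [
    ("normalize", lambda d: " ".join(d.split()) or None),  # drops empty docs
    ("min_length", lambda d: d if len(d.split()) >= 3 else None),
    ("exact_dedup", make_exact_dedup()),
]

raw = ["  A page about  crawling. ", "A page about crawling.", "ok", ""]
final_docs, report = run_pipeline(raw, stages)
for name, docs_in, docs_out in report:
    print(f"{name}: {docs_in} -> {docs_out}")
print(final_docs)
```

Tracking per-stage counts makes it easier to see which choice (a threshold, an extractor, a dedup rule) is responsible for a change in the final corpus.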

Because many decisions remain heuristic, there are substantial opportunities for systematic research on:

- Principled filtering

- Fairness‑aware curation

- Copyright‑safe pipelines

- Reproducible dataset benchmarks

Investing in dataset engineering and open reproducible pipelines often yields outsized returns relative to marginal architectural changes when scale and compute are sufficient.
