Karpathy Series - Deep Dive into LLMs like ChatGPT

- Video purpose and structure

- Pre-training stage and web-scale text acquisition

- Common Crawl and raw-data preprocessing pipeline

- Tokenization and vocabulary design (bytes, BPE, vocabulary size)

- Practical tokenization illustration and dataset scale

- Neural network training objective (next-token prediction)

- Transformer architecture and internal representations

- Inference and sampling from the trained model

- GPT-2 as a concrete historical example and reproducibility

- Compute infrastructure and GPU scaling for training

- Base model releases: code plus parameters

- Interacting with base models and in-context learning

- Post-training (supervised fine-tuning) and conversation datasets

- Conversation encoding and token protocols for assistant training

- Model hallucination causes and detection via model interrogation

- Tool use and web search to refresh working memory

- Model identity and system messages

- Models need tokens to think: distributed, stepwise reasoning and tool use

- Reinforcement learning as a final training stage and its motivation

Video purpose and structure

This segment defines the video’s objective as providing accessible mental models for understanding large language models (LLMs) and outlines a multi-stage pipeline for building systems like ChatGPT.

Key framing points:

- The pipeline is described as pre-training, post-training, and inference stages.

- LLMs are powerful but have distinct strengths, weaknesses, and sharp edges that users should understand.

- The presentation will cover both engineering and cognitive implications across pipeline stages.

- The narrative prepares you to follow a sequential explanation of:

  - data acquisition,

  - model architectures,

  - training procedures,

  - deployment, and

  - human-in-the-loop refinement workflows.

The goal is to give accessible mental models that preserve technical nuance while making the end-to-end system understandable.

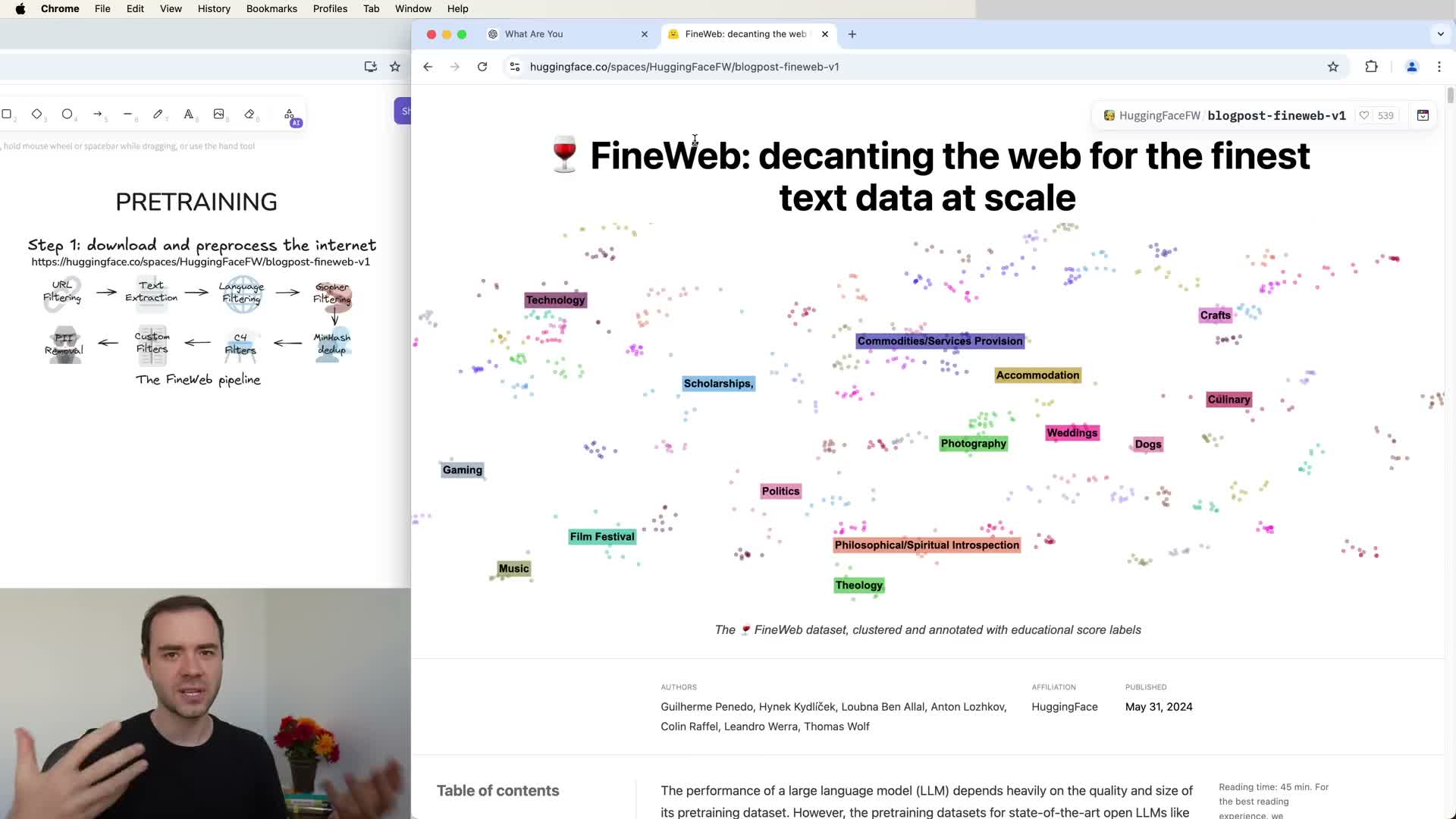

Pre-training stage and web-scale text acquisition

Pre-training is the initial stage in which massive amounts of text are collected and processed to build a base LLM.

Highlights and goals:

- LLM developers typically construct large curated corpora (a public example is Hugging Face's “FineWeb” dataset) by downloading public internet content and filtering for quality and diversity.

- The objective is to expose the model to extremely large quantities of high-quality, diverse documents so the network can internalize broad statistical patterns of natural language.

- Practical dataset sizes after aggressive filtering are on the order of tens of terabytes and trillions of tokens.

- This stage is computationally dominant and sets the knowledge base that later training and fine-tuning will leverage.

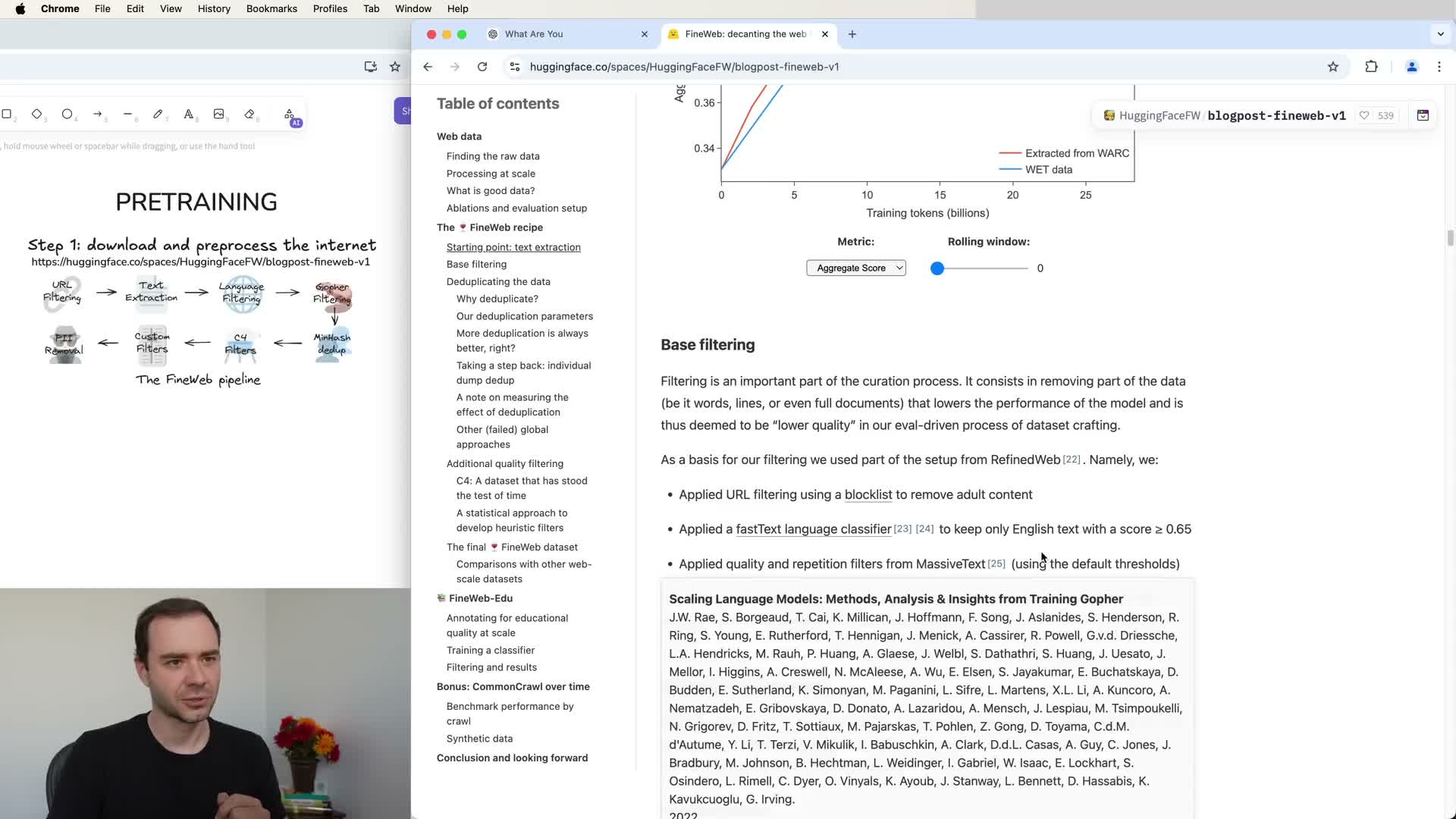

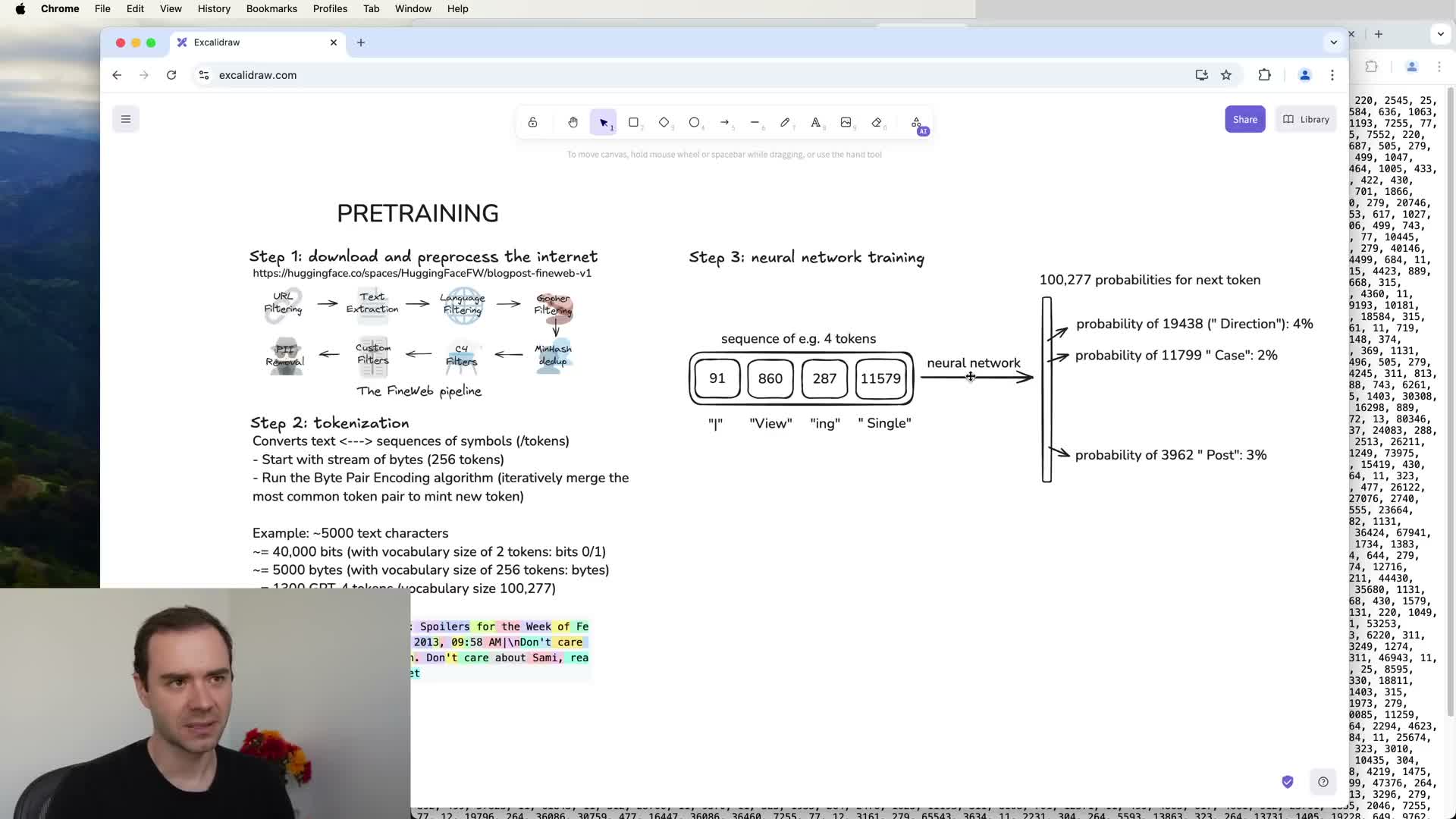

Common Crawl and raw-data preprocessing pipeline

Internet-scale text used for pre-training commonly originates from web crawls such as Common Crawl and undergoes multiple preprocessing steps.

Typical preprocessing pipeline (each step uses heuristics and classifiers):

- URL/domain filtering to exclude malware, spam, or adult content.

- HTML text extraction to remove markup and navigation boilerplate.

- Language classification to select documents in target languages.

- Deduplication to remove repeated content.

- PII removal to strip personally identifiable information.

Why this matters:

- These steps transform raw HTML into cleaned text documents suitable for tokenization and strongly shape model capabilities (for example, multilingual coverage) and corpus size.

- The end result is a large but curated text corpus that balances quantity, diversity, and quality before tokenization and model training.
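
The pipeline stages above can be sketched as a chain of small filters. This is a toy illustration using only the Python standard library, not the pipeline any real dataset uses: the blocklist, the word-frequency "language classifier", the exact-hash deduplication, and the email-only PII scrubber are all simplifying assumptions standing in for much heavier heuristics and trained classifiers.

```python
import hashlib
import re
from html.parser import HTMLParser

# Hypothetical blocklist; real pipelines use large curated domain lists.
BLOCKED_DOMAINS = {"spam.example", "malware.example"}


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script>, <style>, and <nav> content."""
    SKIP = {"script", "style", "nav"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def extract_text(html):
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def looks_english(text):
    """Crude stand-in for a language classifier: share of common English words."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return False
    common = {"the", "and", "is", "of", "to", "a", "in", "that", "it", "for"}
    return sum(w in common for w in words) / len(words) >= 0.1


EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def scrub_pii(text):
    """Toy PII removal: only masks email addresses."""
    return EMAIL_RE.sub("<EMAIL>", text)


def preprocess(pages):
    """pages: iterable of (url, html). Returns cleaned, deduplicated documents."""
    seen = set()
    docs = []
    for url, html in pages:
        domain = re.sub(r"^https?://", "", url).split("/")[0]
        if domain in BLOCKED_DOMAINS:          # 1. URL/domain filtering
            continue
        text = extract_text(html)              # 2. HTML text extraction
        if not looks_english(text):            # 3. language classification
            continue
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:                     # 4. exact deduplication
            continue
        seen.add(digest)
        docs.append(scrub_pii(text))           # 5. PII removal
    return docs
```

Each stage only ever removes or rewrites text, so the order mostly affects cost: cheap URL checks run first, and deduplication happens on extracted text so that the same article served under different page templates can still collide.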

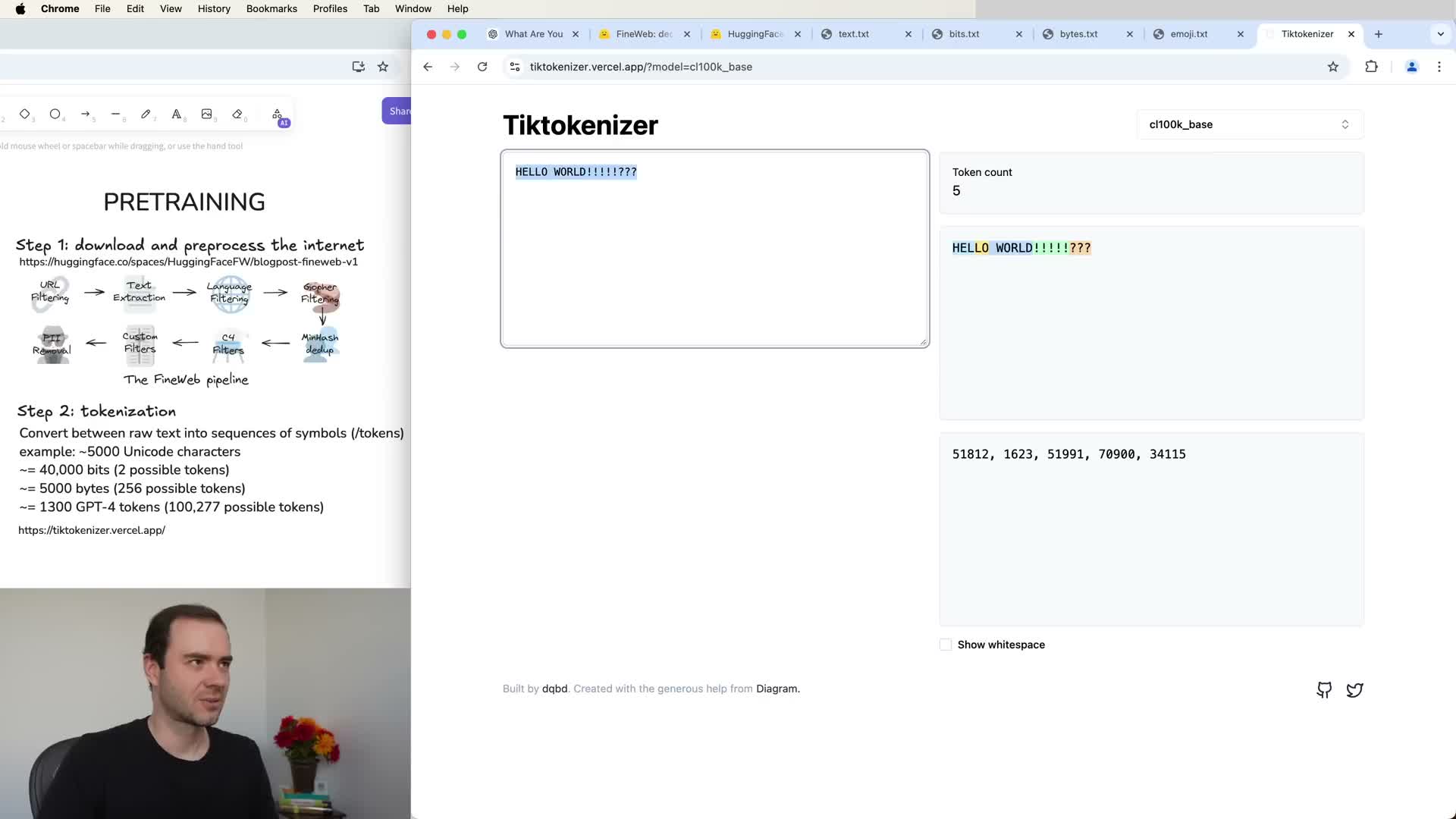

Tokenization and vocabulary design (bytes, BPE, vocabulary size)

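Tokenization maps raw text to a sequence of discrete symbols drawn from a finite vocabulary, the form that neural sequence models require. The design trade-off is between sequence length and vocabulary size: more symbols in the vocabulary means fewer symbols per document.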

Key concepts:

- Start from raw bytes (256 symbols) and group common byte sequences using algorithms like Byte Pair Encoding (BPE) to form higher-level tokens.

- Contemporary production LLMs use vocabularies on the order of 100k tokens (for example, GPT‑4's tokenizer has 100,277 tokens).

- Larger vocabularies substantially shorten sequence lengths while still representing text efficiently.

- Tokenizer behavior is case-sensitive and spacing-sensitive, so tokenization decisions affect downstream model behavior and token budgets.

Practical takeaway:

- Tokenization is an explicit compression step that determines model input length, token IDs, and how textual variations are represented internally.
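
To make the byte-level BPE idea concrete, here is a minimal sketch of the merge loop: start from the 256 byte values and repeatedly replace the most frequent adjacent pair with a new token ID. The function names are illustrative, and production tokenizers add details (pre-splitting rules, special tokens) that are left out here.

```python
from collections import Counter


def most_frequent_pair(ids):
    """Return the most common adjacent pair of token IDs, or None."""
    pairs = Counter(zip(ids, ids[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges on top of the 256 raw byte symbols."""
    ids = list(text.encode("utf-8"))
    merges = {}
    for step in range(num_merges):
        pair = most_frequent_pair(ids)
        if pair is None:
            break
        new_id = 256 + step            # new tokens are numbered after the bytes
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


def decode(ids, merges):
    """Expand token IDs back into text by replaying merges in learned order."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():   # dicts preserve insertion order
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8")
```

Every merge adds one entry to the vocabulary and shortens the sequence wherever that pair occurs, which is the length-for-vocabulary trade described above.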

Practical tokenization illustration and dataset scale

This segment demonstrates tokenization in practice using an online tokenizer viewer and explains scaling implications.

Demonstration points:

- A single line of text is converted into a sequence of token IDs; tokens are abstract IDs, not semantically meaningful numbers.

- Tokenization choices (spacing, case, merged pairs) change token counts and thus affect cost and context usage.

- Large text corpora (tens of terabytes) map to enormous token sequences, on the order of trillions to tens of trillions of tokens for pre-training corpora.

Why this matters:

- Understanding corpus token counts and tokenization idiosyncrasies is important for resource planning, context window configuration, and training cost estimation.

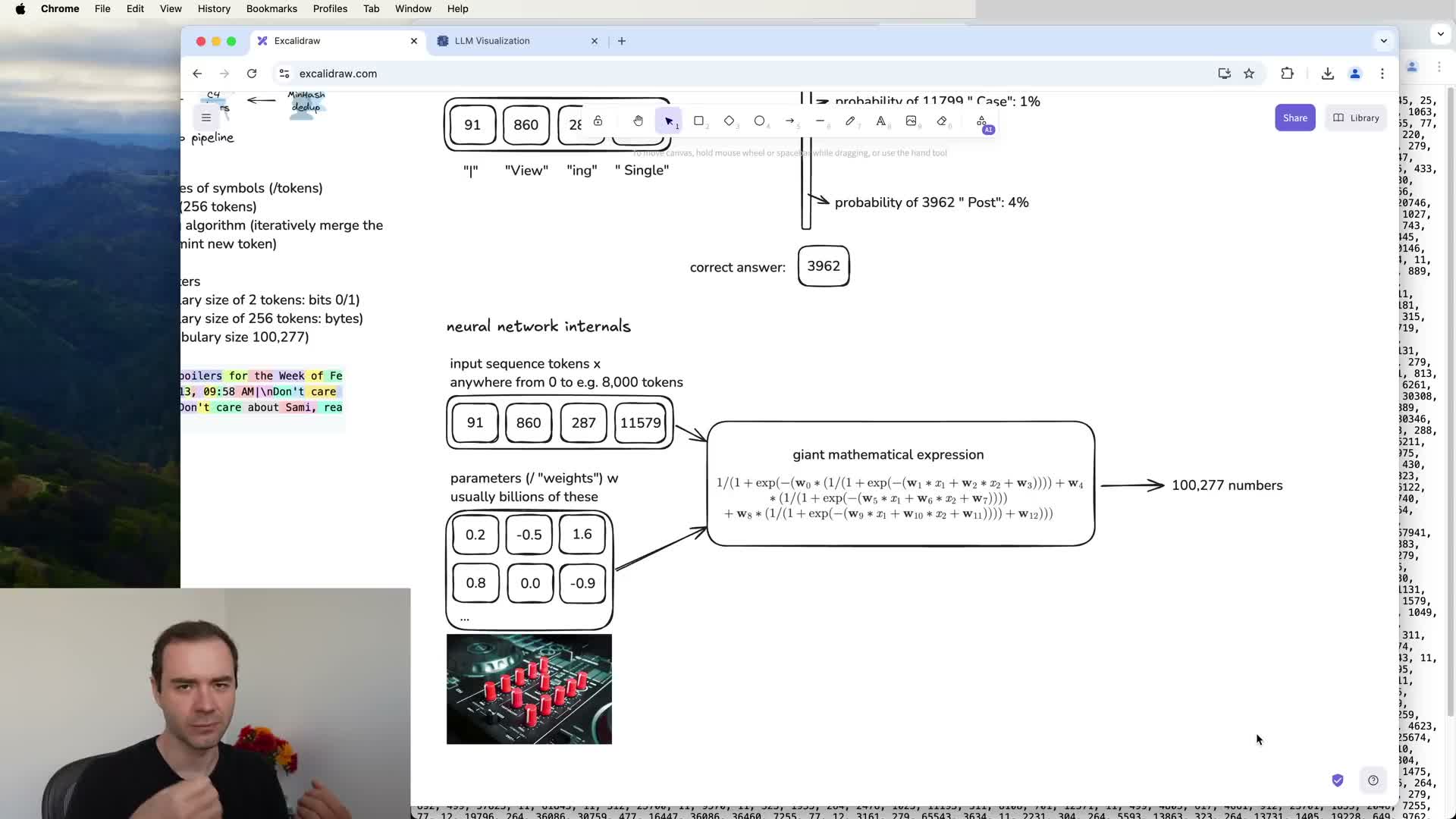

Neural network training objective (next-token prediction)

Neural network pre-training optimizes a next-token prediction objective.

Process (repeated during training):

- Randomly sample windows of tokens from the preprocessed corpus.

- Feed each window into the network as context.

- The network outputs a probability distribution over the entire vocabulary (a vector with dimension = vocabulary size).

- Measure prediction quality with a loss function (e.g., cross-entropy), which is low when the actual next token receives high probability.

- Update the model's billions of parameters via gradient-based optimization so that the probability of the actual next token increases.

Outcome:

- The iterative process converges to parameter settings that capture statistical relationships among tokens over the training distribution, producing a high-quality next-token predictor.
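
The loss computation for a single prediction can be written in a few lines. The sketch below assumes the network has already produced a vector of logits; `sgd_step` updates the logits directly as a stand-in for updating the weights that produce them, which is a simplification for illustration only.

```python
import math


def softmax(logits):
    """Turn raw scores into a probability distribution over the vocabulary."""
    m = max(logits)                       # subtract max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_id):
    """Negative log-probability assigned to the actual next token."""
    return -math.log(softmax(logits)[target_id])


def sgd_step(logits, target_id, lr=1.0):
    """One gradient step on the logits.

    The gradient of cross-entropy with respect to the logits is
    softmax(logits) - one_hot(target_id).
    """
    probs = softmax(logits)
    return [x - lr * (p - (1.0 if i == target_id else 0.0))
            for i, (x, p) in enumerate(zip(logits, probs))]
```

With a uniform distribution over a four-token vocabulary the loss is log(4), and a single step toward the correct token lowers it. Real training applies the same signal through backpropagation to every weight in the network.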

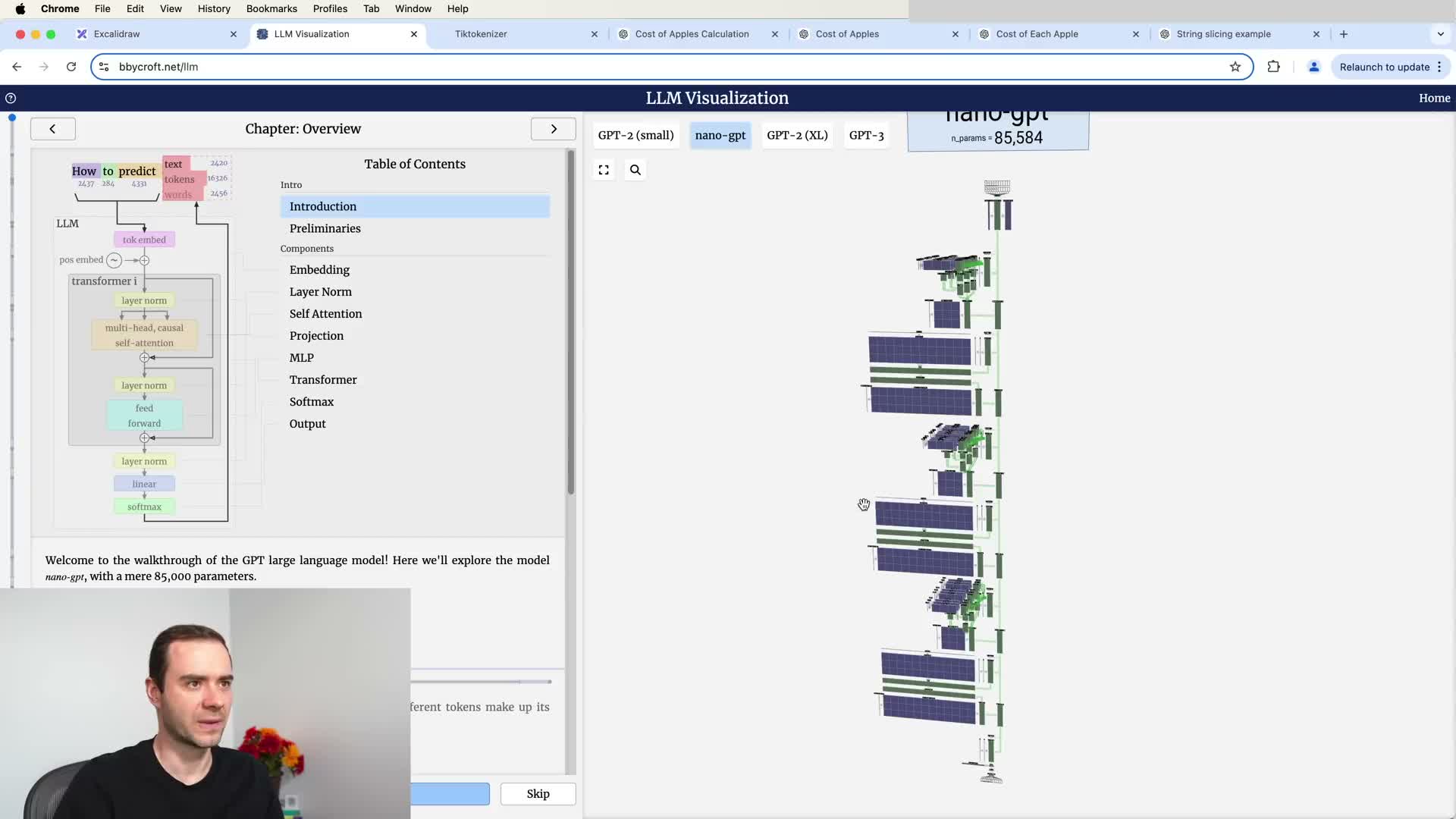

Transformer architecture and internal representations

Modern LLMs implement the next-token predictor using Transformer architectures.

Core mechanics:

- Each token is embedded into a distributed vector space, positional information is added, and vectors propagate through repeated attention and MLP (feed-forward) blocks.

- The architecture mixes token embeddings with parameterized layers (attention, feed-forward networks, layer norms) to compute logits and probabilities for the next token.

- Intermediate activations represent distributed features that the model uses to compute output probabilities.

- The Transformer is a large differentiable mathematical function parameterized by billions of weights; training adjusts those weights so the function maps input token sequences to probability distributions aligned with observed data statistics.
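
As a rough illustration of the core mechanics, the following sketch implements token plus positional embedding and a single causal self-attention head in plain Python. It omits multiple heads, the MLP block, layer norms, residual connections, and the output projection, and the random weights are placeholders for trained parameters.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def embed(token_ids, tok_table, pos_table):
    """Token embedding plus positional embedding for each position."""
    return [[a + b for a, b in zip(tok_table[tok], pos_table[pos])]
            for pos, tok in enumerate(token_ids)]


def causal_self_attention(X, Wq, Wk, Wv):
    """Single attention head; position t attends only to positions 0..t."""
    Q = [matvec(Wq, x) for x in X]
    K = [matvec(Wk, x) for x in X]
    V = [matvec(Wv, x) for x in X]
    d = len(Q[0])
    out = []
    for t in range(len(X)):
        scores = [sum(q * k for q, k in zip(Q[t], K[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        weights = softmax(scores)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights))
                    for j in range(d)])
    return out


def random_matrix(rows, cols, rng):
    """Placeholder weights; a trained model would load learned values."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]
```

The causal mask is what makes next-token training possible on a whole window at once: the output at each position depends only on earlier tokens, so changing the last token of the input leaves all earlier outputs unchanged.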

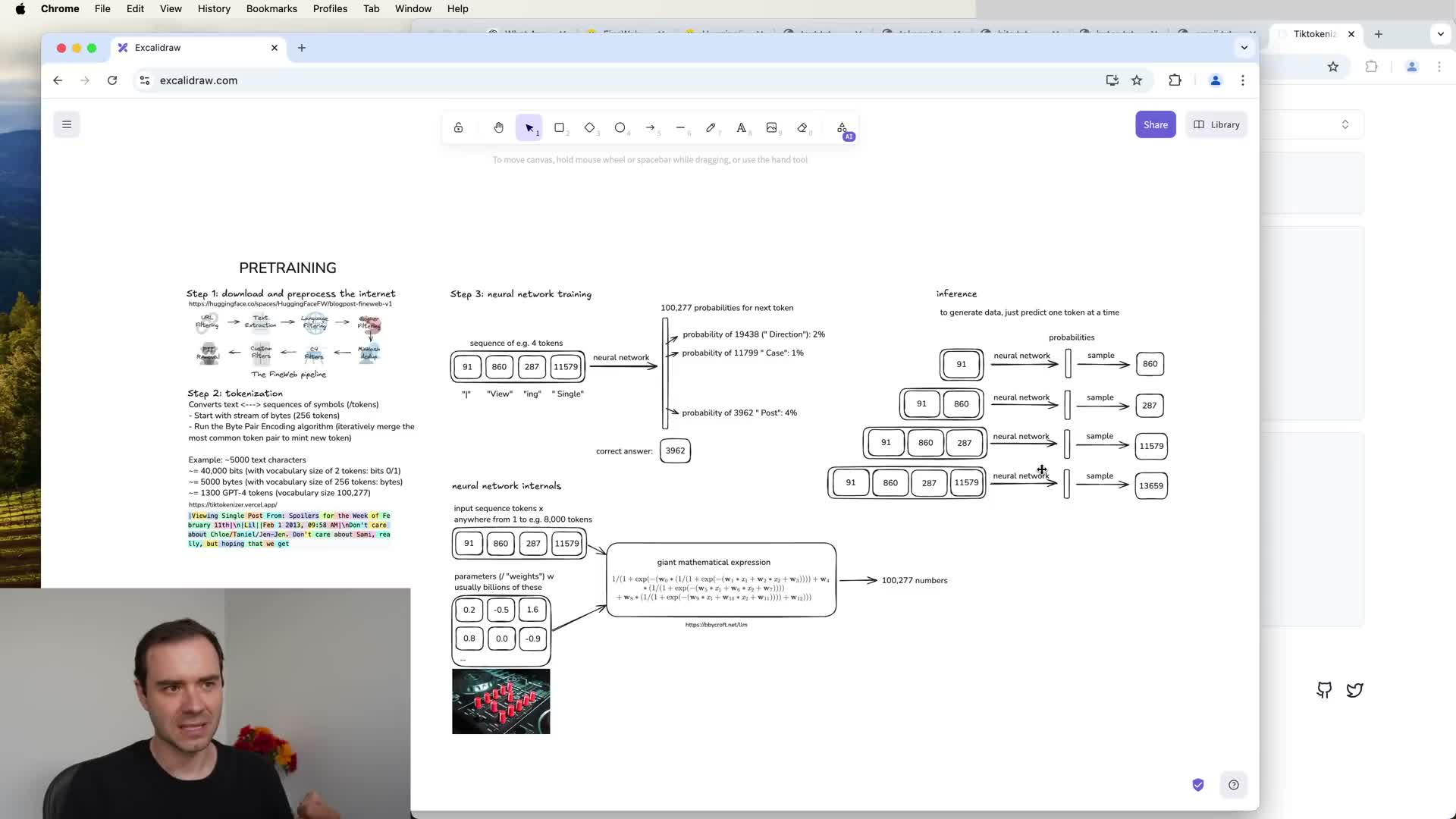

Inference and sampling from the trained model

Inference generates new token sequences by repeatedly querying the trained model for the next-token distribution given a prefix.

Generation loop:

- Query the model for the next-token probability distribution conditioned on the current prefix.

- Sample (or take the argmax) from that distribution and append the sampled token to the prefix.

- Repeat until termination criteria are met (end-of-sequence token, length limit, etc.).

Practical notes:

- Because sampling is stochastic, repeated generations from the same prefix usually yield different continuations; outputs are statistically similar to the training texts but not identical to them.

- Sampling hyperparameters (temperature, top-k, top-p) control diversity.

- Production systems typically freeze model weights after training and perform inference only, optionally applying post-processing, filtering, or tool invocation policies during generation.
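
The generation loop can be sketched as follows. Here `model` is assumed to be any callable that maps a list of token IDs to a list of logits, and `toy_model` is a hypothetical stand-in for a trained network. Only temperature and top-k are implemented; top-p is left out to keep the example short.

```python
import math
import random


def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Pick a token ID from logits using temperature and optional top-k."""
    if temperature <= 0:
        # Treat non-positive temperature as greedy decoding (argmax).
        return max(range(len(logits)), key=lambda i: logits[i])
    ids = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k is not None:
        ids = ids[:top_k]                  # keep only the k most likely tokens
    scaled = [logits[i] / temperature for i in ids]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(ids, weights=weights, k=1)[0]


def generate(model, prefix, max_new_tokens, eos_id, **sampling):
    """Append sampled tokens to the prefix until EOS or the length limit."""
    tokens = list(prefix)
    for _ in range(max_new_tokens):
        next_id = sample_next(model(tokens), **sampling)
        tokens.append(next_id)
        if next_id == eos_id:
            break
    return tokens


def toy_model(tokens):
    """Hypothetical 5-token 'network' that favors (last token + 1) mod 5."""
    logits = [0.0] * 5
    logits[(tokens[-1] + 1) % 5] = 3.0
    return logits
```

Lower temperatures and smaller `top_k` values concentrate probability on the most likely tokens, which makes outputs more repeatable; higher values spread it out and increase diversity.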

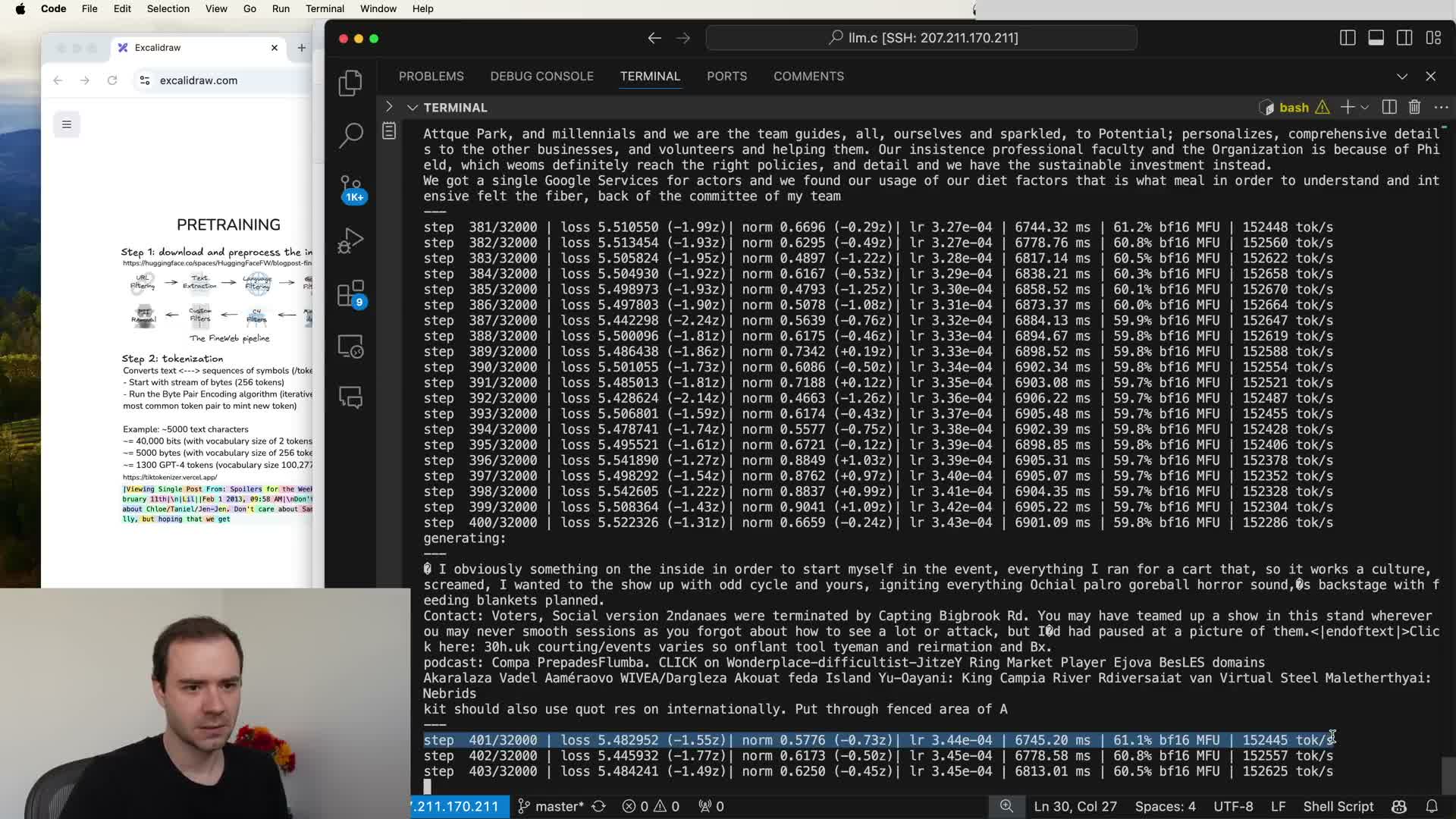

GPT-2 as a concrete historical example and reproducibility

GPT‑2 is a widely cited pre-training milestone and a reproducible benchmark for modern stacks.

Key facts about GPT‑2:

- Transformer-based model with 1.6B parameters.

- Maximum context length: 1,024 tokens.

- Trained on approximately 100B tokens.

Why it matters:

- At the time it was state-of-the-art and provided a canonical, reproducible reference point.

- Reproducing GPT‑2 today is far cheaper due to improved datasets, faster hardware, and optimized software, though the overall training paradigm remains the same: dataset construction, tokenization, next-token objective, and Transformer architecture.

- Smaller reproductions of GPT‑2 illustrate the training workflow, loss monitoring, and practicalities of multi-step optimization until convergence.

Compute infrastructure and GPU scaling for training

Large-scale LLM training requires specialized hardware and distributed systems.

Typical infrastructure:

- Data-center scale clusters composed of high-performance accelerators such as NVIDIA H100 GPUs.

- Multi‑GPU nodes (for example, 8× H100 per node) and high-bandwidth interconnects to enable parallelism across many devices and machines.

- Training throughput scales with the number of accelerators, directly affecting wall-clock time and cost.

Operational implications:

- Cloud providers rent out GPU nodes on demand; hyperscalers host dense GPU farms.

- Large GPU fleets drive demand for specialized software stacks for parallel linear algebra and memory management, plus significant capital and operational expenditure for state-of-the-art training.

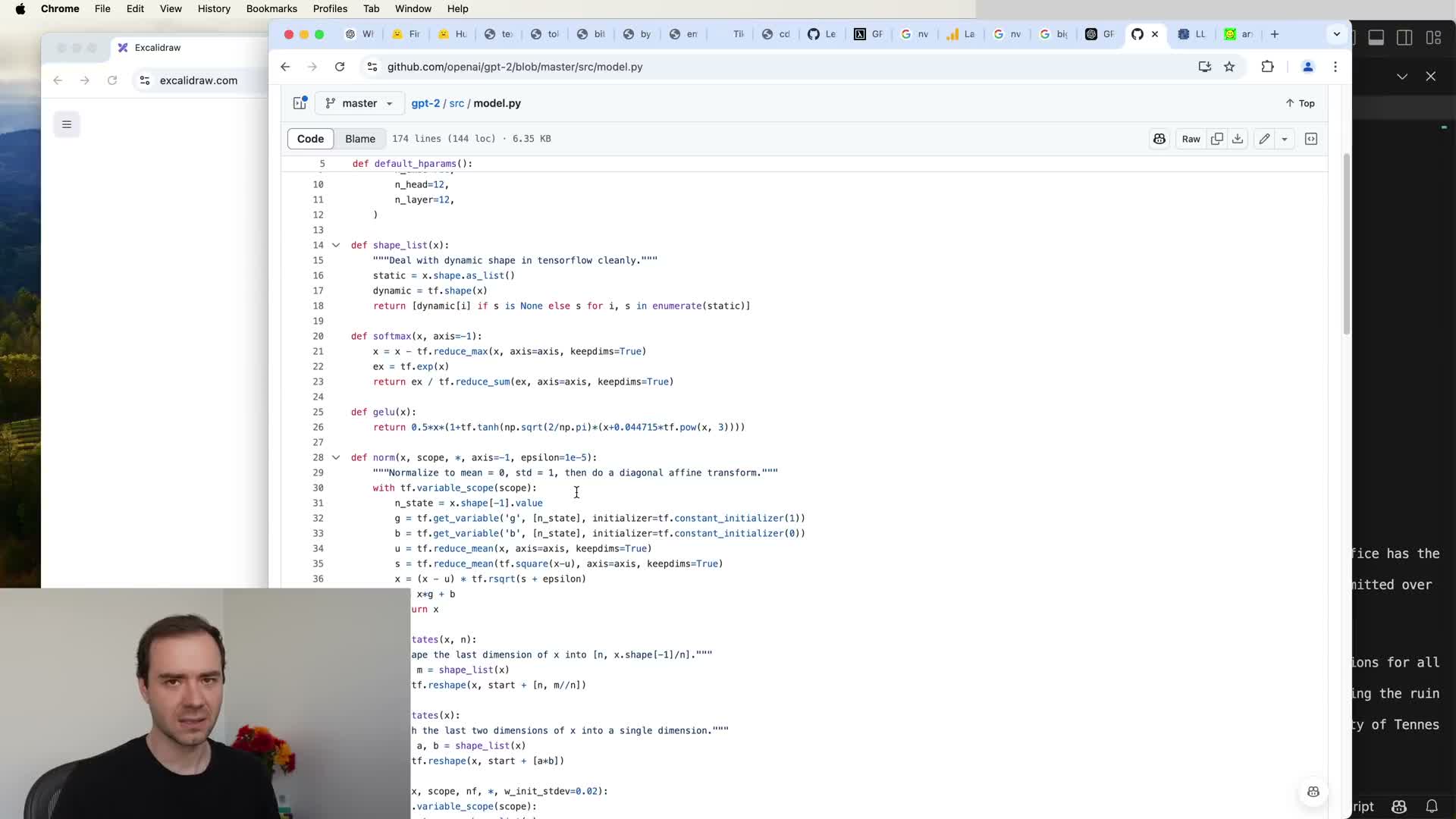

Base model releases: code plus parameters

A base model release comprises two deliverables:

- Model implementation: compact code (often Python) that defines the forward pass and architectural wiring for Transformer blocks.

- Trained parameter values: a large binary artifact containing billions of floating-point numbers encoding the learned statistical compression of the training text.

Practical effects:

- Releasing base models enables downstream researchers and engineers to inspect, serve, or further fine-tune the token simulator.

- A base model alone is not an “assistant” until it undergoes additional post-training steps to shape behavior and persona.

Interacting with base models and in-context learning

Base models act as powerful token autocompleters and can be used directly via interfaces or APIs, but they are not purpose-built assistants until post-training creates an instruction-following persona.

How base models are useful before post-training:

- Prompt engineering and in-context learning (few-shot prompting) let you steer behavior by supplying example input–output pairs or role-play conversational prefixes in the context window.

- Because base models are stochastic, identical prompts can produce different outputs; carefully constructed prompts often yield more reliable behavior.

- You can bootstrap prompt-driven assistants by constructing conversational scaffolds that emulate a human–assistant exchange and letting the base model continue the sequence.
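
A few-shot prompt is just text laid out so that the most likely continuation is the answer you want. The helper below is an illustrative sketch; the labels and the translation task are assumptions chosen for the example, not a required format.

```python
def build_few_shot_prompt(examples, query,
                          input_label="English", output_label="French"):
    """Lay out input/output pairs, then leave the final output blank."""
    lines = []
    for src, dst in examples:
        lines.append(f"{input_label}: {src}")
        lines.append(f"{output_label}: {dst}")
        lines.append("")                      # blank line between examples
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")          # the base model continues here
    return "\n".join(lines)


prompt = build_few_shot_prompt([("cat", "chat"), ("dog", "chien")], "bird")
print(prompt)
```

The base model is never told to translate. It sees a document whose pattern is obvious and continues it, which is why the prompt ends exactly where the answer should begin.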

Post-training (supervised fine-tuning) and conversation datasets

Post-training converts a base token simulator into an instruction-following assistant by continuing training on curated datasets of human-generated conversations.

Key elements:

- Supervised fine-tuning (SFT) uses datasets where labelers provide prompts and ideal assistant responses, guided by explicit labeling instructions (e.g., be helpful, honest, harmless).

- Human contractors following detailed documentation compose multi-turn conversations and write exemplar answers.

- Modern SFT datasets often blend human-authored and model-assisted synthetic examples to scale dataset creation.

- Different SFT mixtures and sources materially shape assistant personality, refusal behavior, and domain strengths.

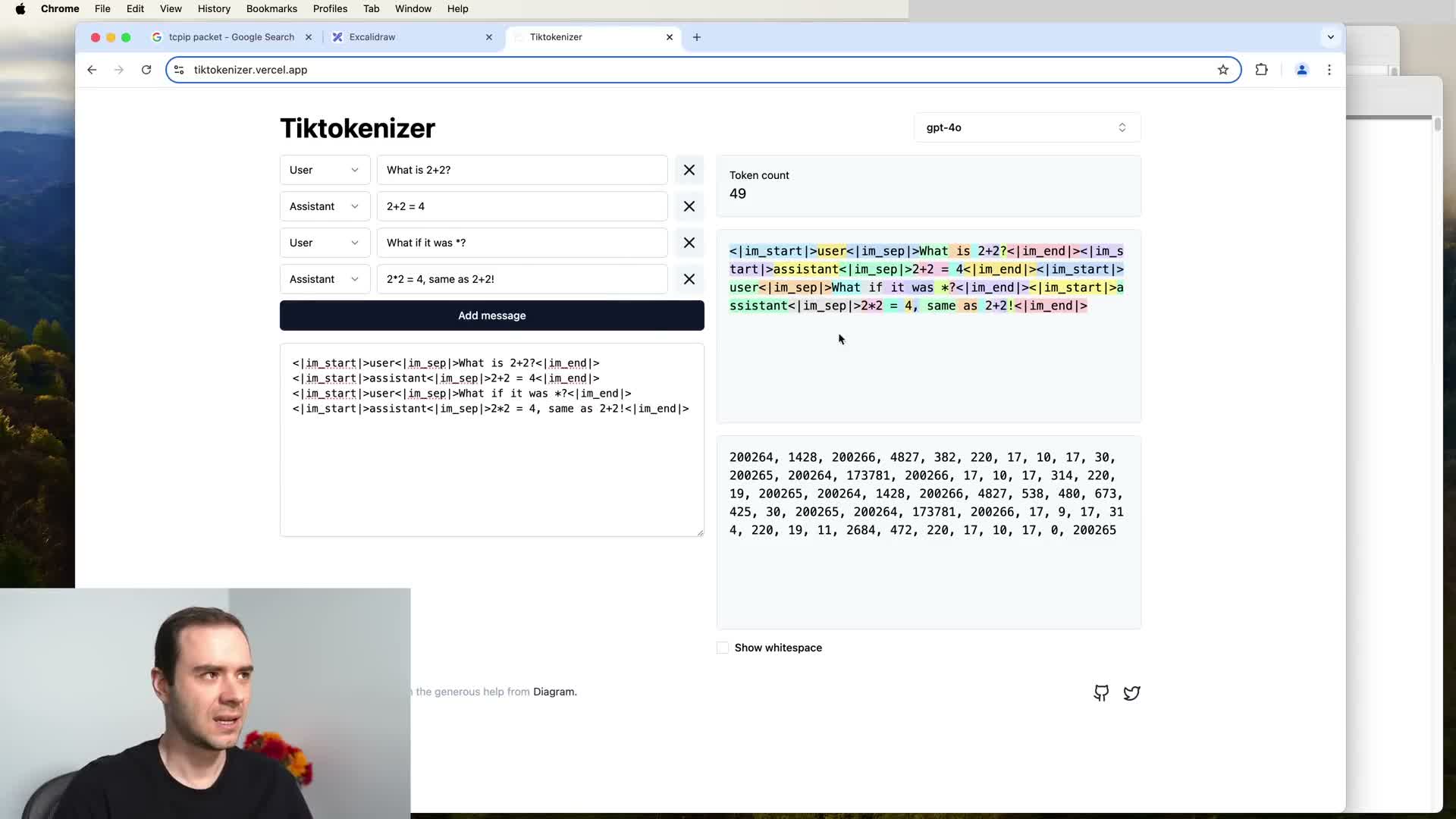

Conversation encoding and token protocols for assistant training

Conversations are converted into one-dimensional token sequences by defining an encoding protocol that serializes structured turns for Transformer inputs.

Encoding practices:

- Use special tokens, separators, and role markers to serialize system/user/assistant turns into a linear token stream.

- Examples include tokens to mark the start/end of a monologue, role tokens indicating whose turn follows, and separators to delimit content.

- These tokens are introduced during post-training so models learn the semantics of turns.

Why this works:

- By encoding structured dialogues as token sequences, the same next-token training objective can teach the model multi-turn conversational dynamics, role adherence, and response formatting.
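
One way such a protocol might look is sketched below. The marker strings are hypothetical placeholders: each model family defines its own special tokens and layout, and in a real tokenizer each marker maps to a single reserved token ID rather than ordinary text.

```python
# Hypothetical marker strings; every model family defines its own.
IM_START, IM_SEP, IM_END = "<|im_start|>", "<|im_sep|>", "<|im_end|>"


def render_conversation(messages, add_generation_prompt=True):
    """Serialize a list of {"role", "content"} turns into one flat string."""
    parts = []
    for msg in messages:
        parts.append(f"{IM_START}{msg['role']}{IM_SEP}{msg['content']}{IM_END}")
    if add_generation_prompt:
        # Open an assistant turn so the model's continuation is the reply.
        parts.append(f"{IM_START}assistant{IM_SEP}")
    return "".join(parts)


conversation = [
    {"role": "user", "content": "What is 2+2?"},
    {"role": "assistant", "content": "4"},
    {"role": "user", "content": "And times 3?"},
]
print(render_conversation(conversation))
```

At inference time the serving code renders the conversation so far, opens an assistant turn, and samples tokens until the model emits the end-of-turn marker.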

Model hallucination causes and detection via model interrogation

Hallucination — generation of false factual statements — is a systematic consequence of next-token sampling from a statistical model that favors confident-seeming continuations when knowledge is lacking.

Mitigation strategies:

- Empirical probing: automatically generate factual questions from documents, query the model repeatedly, and compare answers to ground truth to identify areas of ignorance.

- Calibrated refusal examples: include SFT/post-training examples where the correct assistant response is an explicit, calibrated refusal (“I don’t know”) so the model learns to map internal uncertainty to explicit uncertainty responses.

Result:

- These techniques reduce confident hallucinations in unknown cases by teaching the model to recognize and verbalize uncertainty.
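
The probing loop can be sketched like this. `model` is assumed to be any callable from a question string to an answer string, and the exact-match comparison is a simplification; a real pipeline would more likely use a judge model or fuzzy matching to compare answers with ground truth.

```python
def probe_knowledge(model, qa_pairs, n_samples=3):
    """Ask each question several times; it counts as known only if
    every sampled answer matches the ground truth."""
    known, unknown = [], []
    for question, truth in qa_pairs:
        answers = [model(question) for _ in range(n_samples)]
        if all(a.strip().lower() == truth.strip().lower() for a in answers):
            known.append(question)
        else:
            unknown.append(question)
    return known, unknown


def build_refusal_examples(unknown_questions,
                           refusal="I'm sorry, I don't know."):
    """Turn questions the model cannot answer into SFT conversations
    whose ideal assistant response is an explicit refusal."""
    return [
        [{"role": "user", "content": q},
         {"role": "assistant", "content": refusal}]
        for q in unknown_questions
    ]
```

Sampling each question more than once matters because a model may guess correctly by chance; requiring agreement across samples is a rough proxy for the model actually knowing the answer.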

Tool use and web search to refresh working memory

When model parameters encode only a vague recollection of facts, external tools (web search, code execution, databases) refresh working memory.

Tool-use pattern:

- The model emits special tool-invocation tokens that a runtime intercepts to call external services.

- Retrieved text is inserted into the context window and becomes directly accessible to the model as explicit tokens (working memory).

- This supplements vague parameter knowledge (long-term memory) with explicit context tokens (working memory), enabling up-to-date answers, citations, and corrective retrieval.

Training to use tools:

- Requires annotated examples that show how to format queries, when to call tools, and how to integrate retrieved material into responses so the model learns a reliable protocol for tool invocation.
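
A minimal runtime for this pattern might look like the sketch below. The `<|search_start|>` and `<|search_end|>` markers, the result delimiters, and the `model` and `search` callables are all hypothetical; real systems define their own special tokens and tool interfaces.

```python
import re

# Hypothetical tool-call markers; real systems define their own special tokens.
CALL_RE = re.compile(r"<\|search_start\|>(.*?)<\|search_end\|>", re.DOTALL)


def run_with_tools(model, search, prompt, max_rounds=3):
    """Generate text, pausing to run a search whenever the model asks for one.

    model:  callable mapping the context string to generated text.
    search: callable mapping a query string to result text.
    """
    context = prompt
    for _ in range(max_rounds):
        output = model(context)
        match = CALL_RE.search(output)
        if match is None:
            return context + output           # no tool call: final answer
        query = match.group(1).strip()
        # Keep the text up to and including the call, discard anything after
        # it, and paste the results into the context window.
        context += output[:match.end()]
        context += f"\n[search results]\n{search(query)}\n[/search results]\n"
    return context
```

The model never executes anything itself. It only emits tokens, and the surrounding program decides what those tokens trigger and what text is placed back in front of the model.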

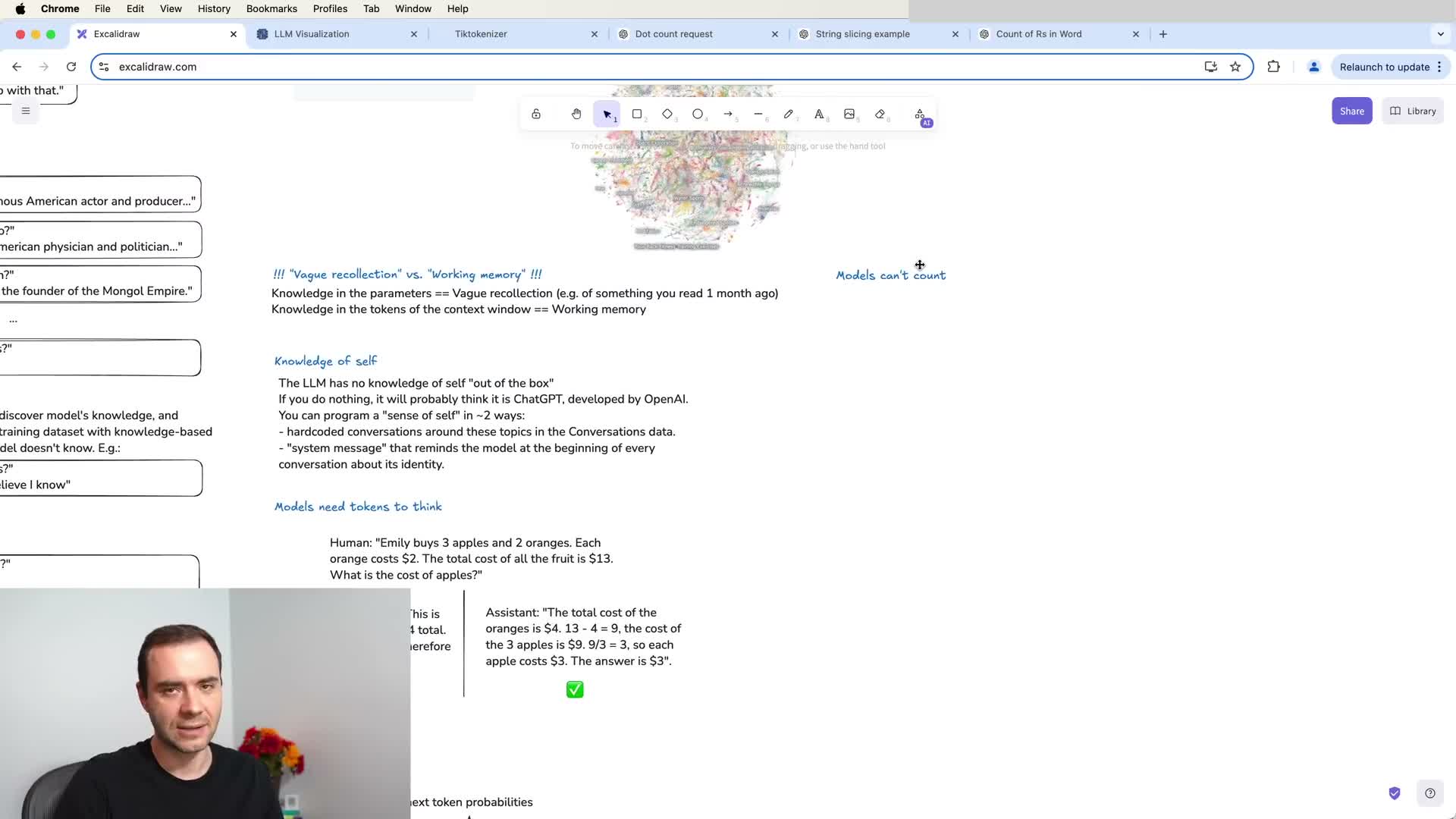

Model identity and system messages

An assistant’s declarations about its own provenance or identity are not intrinsic facts discovered by the model but arise from training data, system messages, or hardcoded SFT examples.

Consequences and controls:

- Without explicit conditioning, the model will produce a statistically likely self-description based on its training distribution, which can be incorrect or misleading.

- Developers can control identity disclosures by adding a system message at the start of each conversation or explicit hardcoded entries in the SFT dataset that specify desired name, origin, and knowledge cutoff.

- Treat model self-descriptions as controlled metadata rather than emergent truths about an autonomous agent; use explicit conditioning to ensure consistent and auditable self-descriptions.

Models need tokens to think: distributed, stepwise reasoning and tool use

Transformers execute a bounded amount of computation per output token, so complex reasoning must be distributed across multiple tokens and intermediate steps rather than done in a single token generation event.

Practical techniques to improve reasoning accuracy:

- Encourage step-by-step chains of thought in training and prompting (explicit intermediate calculations, variable definitions, or short inference steps) so the model can spread computation across tokens.

- Avoid forcing complex arithmetic or multi-step reasoning into a single-token response — that often causes errors.

- Use deterministic external tools (a code interpreter, web search, or string manipulation) to offload hard computational or character-level tasks to reliable operations.

Result:

- Prompting models to “use code” or to paste reference material into the context window is a robust pattern to improve correctness by combining token-level reasoning with deterministic computation.
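
The kind of code a model might write when asked to "use code" is usually very small. The two functions below are illustrative: one handles a character-level task that is awkward at the token level, and the other solves a hypothetical word problem by naming each intermediate result instead of jumping to the answer.

```python
def count_letter(word, letter):
    """Count occurrences of a letter, ignoring case."""
    return word.lower().count(letter.lower())


def apple_price(total_cost, n_oranges, orange_price, n_apples):
    """Hypothetical word problem: given the total bill and the price of
    oranges, find the price of one apple, one small step at a time."""
    oranges_cost = n_oranges * orange_price     # step 1: cost of the oranges
    apples_cost = total_cost - oranges_cost     # step 2: what is left for apples
    return apples_cost / n_apples               # step 3: price per apple


print(count_letter("strawberry", "r"))
print(apple_price(total_cost=13, n_oranges=2, orange_price=2, n_apples=3))
```

Each line of `apple_price` mirrors one short reasoning step, which is the same structure a good step-by-step answer has in prose; the difference is that the interpreter, not the model, performs the arithmetic.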

Reinforcement learning as a final training stage and its motivation

Reinforcement learning (RL) is a post-training stage that resembles practice problems in human learning.

Where RL fits in the learning pipeline:

- Pre-training provides exposition (mass exposure to language statistics).

- Supervised fine-tuning (SFT) provides expert imitation (human-guided behavior).

- Reinforcement learning lets the model discover token-level strategies by trial and error on many prompts with verifiable rewards.

Why RL is valuable:

- Human annotators cannot anticipate the exact token sequences that are efficient and robust for the model’s internal computation.

- Letting the model sample many solutions and reinforcing those that succeed enables discovery of emergent problem-solving patterns and procedural traces.

- Properly applied RL can improve performance on tasks with an objective scoring function or verifiable answers by optimizing behavior across many practice instances.
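
The trial-and-error idea can be illustrated with a toy policy-gradient loop. This is a heavily simplified sketch: the "policy" chooses between two hypothetical whole strategies rather than individual tokens, the reward is an exact-match check on addition problems, and the update is plain REINFORCE with no baseline.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def careless(prompt):
    """Hypothetical strategy that is always off by one."""
    a, b = prompt
    return a + b + 1


def careful(prompt):
    """Hypothetical strategy that adds correctly."""
    a, b = prompt
    return a + b


def reward(prompt, answer):
    """Verifiable reward: 1 if the answer is correct, else 0."""
    return 1.0 if answer == sum(prompt) else 0.0


def reinforce(strategies, reward_fn, prompts, steps=200, lr=0.5, seed=0):
    """Sample a strategy, score its answer, and reinforce what worked."""
    rng = random.Random(seed)
    logits = [0.0] * len(strategies)
    for _ in range(steps):
        prompt = rng.choice(prompts)
        probs = softmax(logits)
        k = rng.choices(range(len(strategies)), weights=probs, k=1)[0]
        r = reward_fn(prompt, strategies[k](prompt))
        # REINFORCE: gradient of log pi(k) w.r.t. logits is one_hot(k) - probs.
        for i in range(len(logits)):
            logits[i] += lr * r * ((1.0 if i == k else 0.0) - probs[i])
    return softmax(logits)


final_probs = reinforce([careless, careful], reward, [(1, 2), (3, 4), (10, 5)])
print(final_probs)
```

Nobody tells the policy which strategy is correct. It starts indifferent, tries both, and shifts probability toward whichever one earns reward, which is the same principle applied at far larger scale to token sequences.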
