Karpathy Series - How I use LLMs

- Introduction and video objectives

- ChatGPT and the expanding LLM ecosystem

- Where to discover and compare LLMs

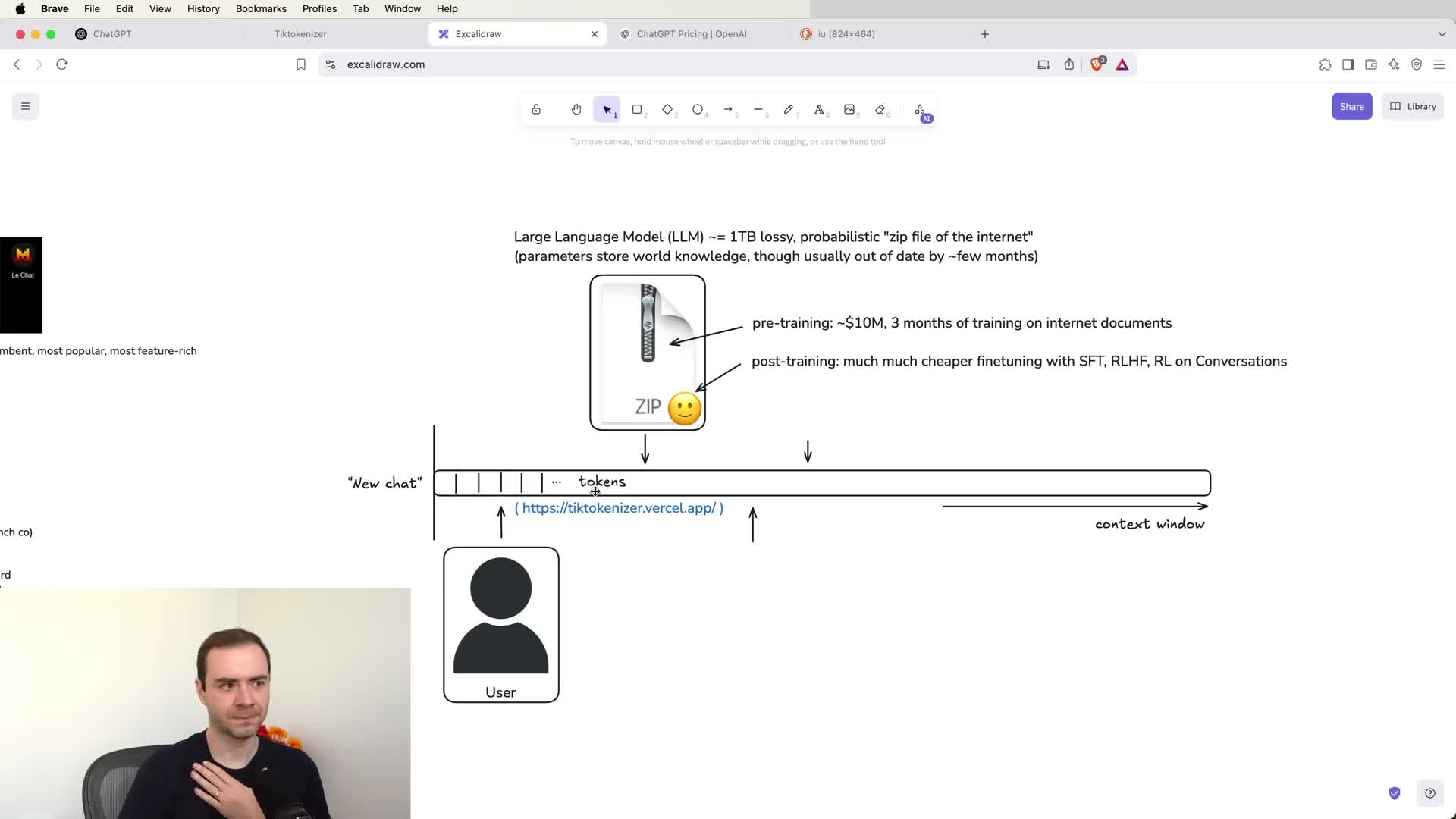

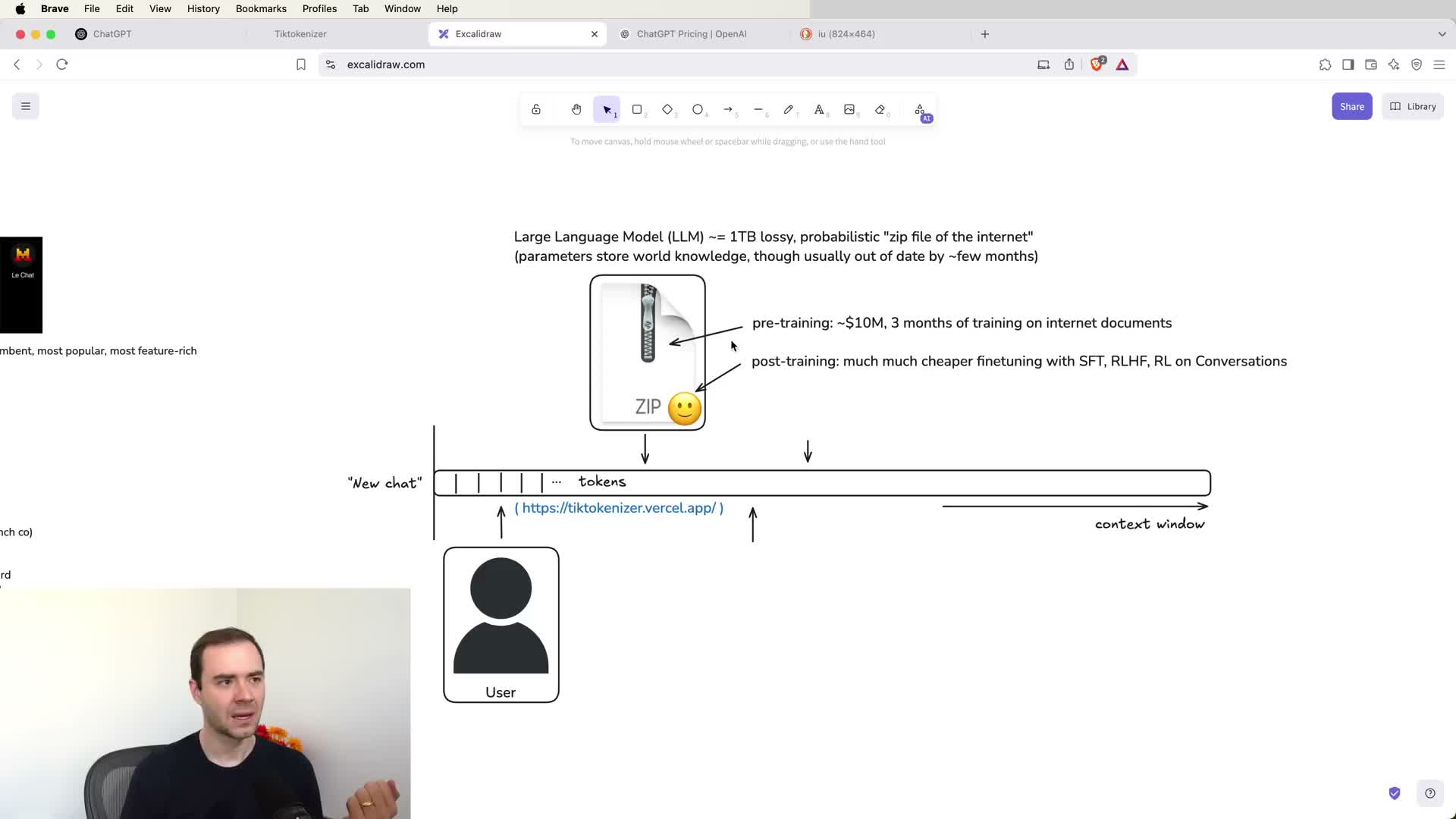

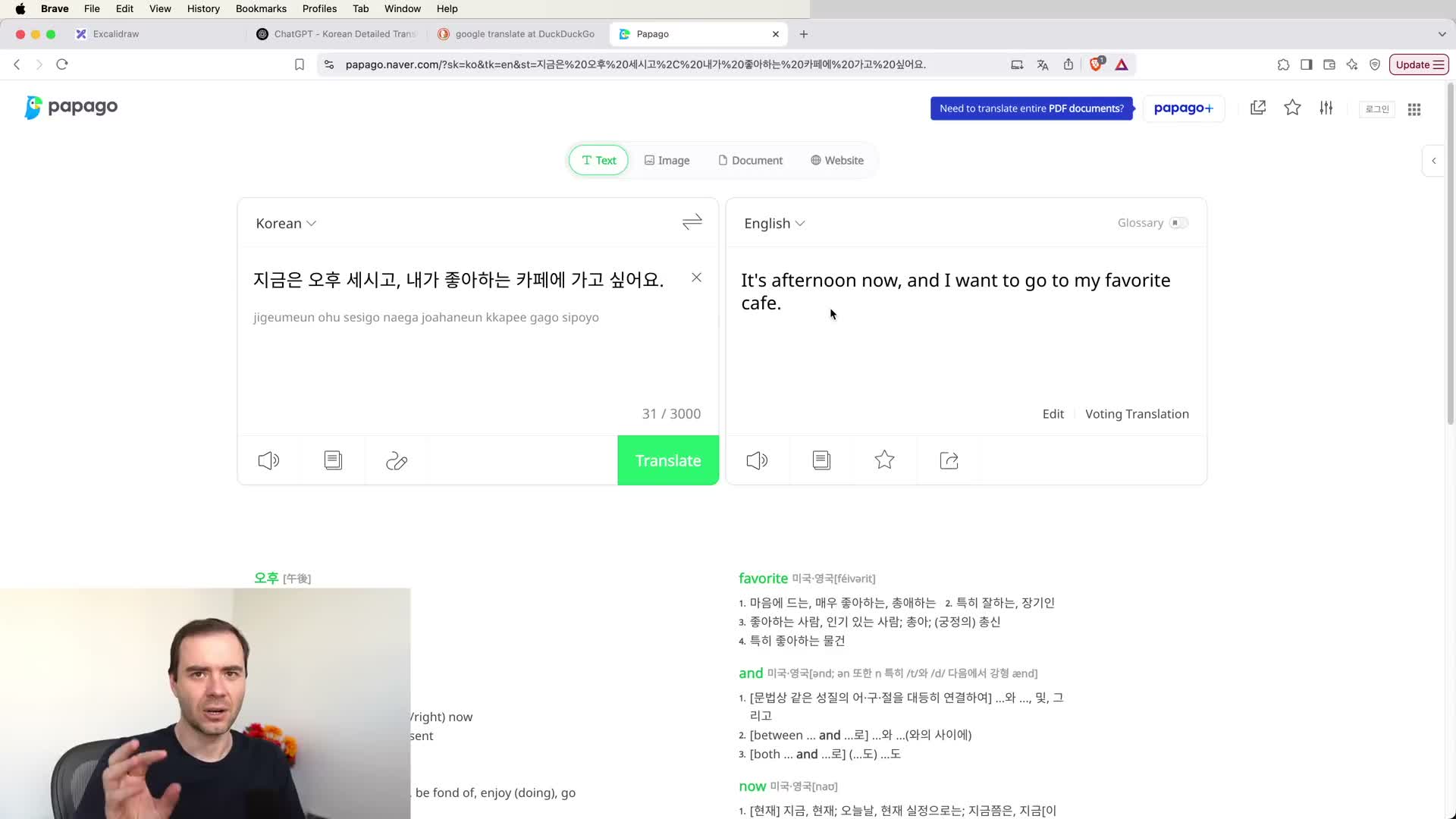

- Basic interaction model and tokenization

- Conversation format and context window behavior

- Pre-training and post-training as sources of model behavior

- How to conceptualize ChatGPT’s identity and limits

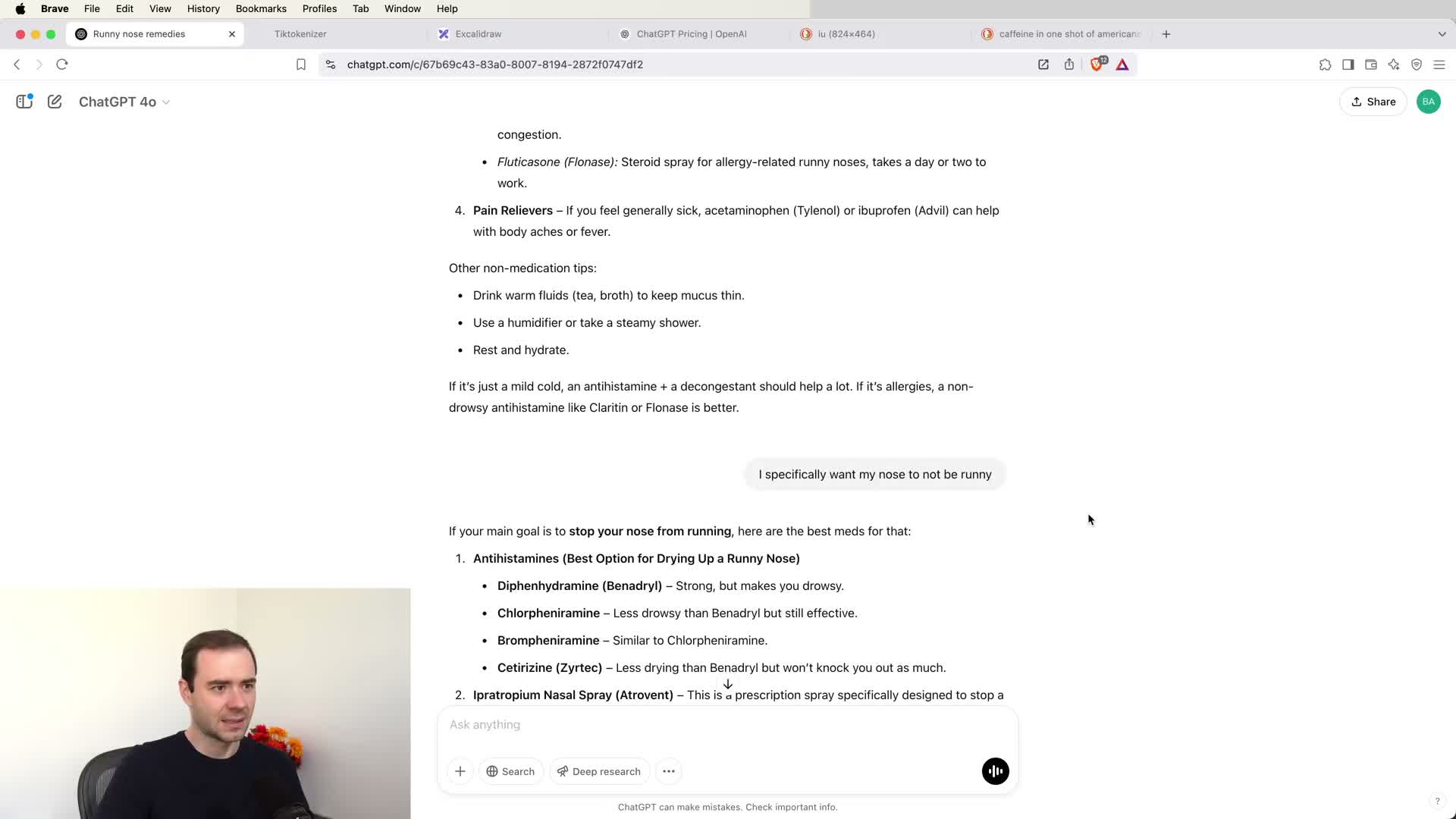

- Practical knowledge queries and verification

- Context management: start new chats and token costs

- Model selection and subscription tiers

- Using multiple providers and an ‘LLM council’

- Thinking models and reinforcement learning tuning

- Tool use concept: integrating internet search

- Search integration across providers and behavior

- Practical search use cases and examples

- Deep research: long-form, citation-rich automated research

- Deep research outputs, caveats, and examples

- Uploading documents and adding specific sources to context

- Reading and studying books or papers with an LLM

- Python interpreter and programmatic tool use

- Advanced Data Analysis and plotting with LLMs

- Claude Artifacts: LLM-generated interactive apps and diagrams

- Code-centric development tools and ‘vibe coding’

- Multimodality: speech input/output and advanced voice

- NotebookLM and on-demand podcast generation

- Image modalities: OCR, image understanding, and generation

- Video understanding via mobile camera and demo use cases

- AI video generation landscape

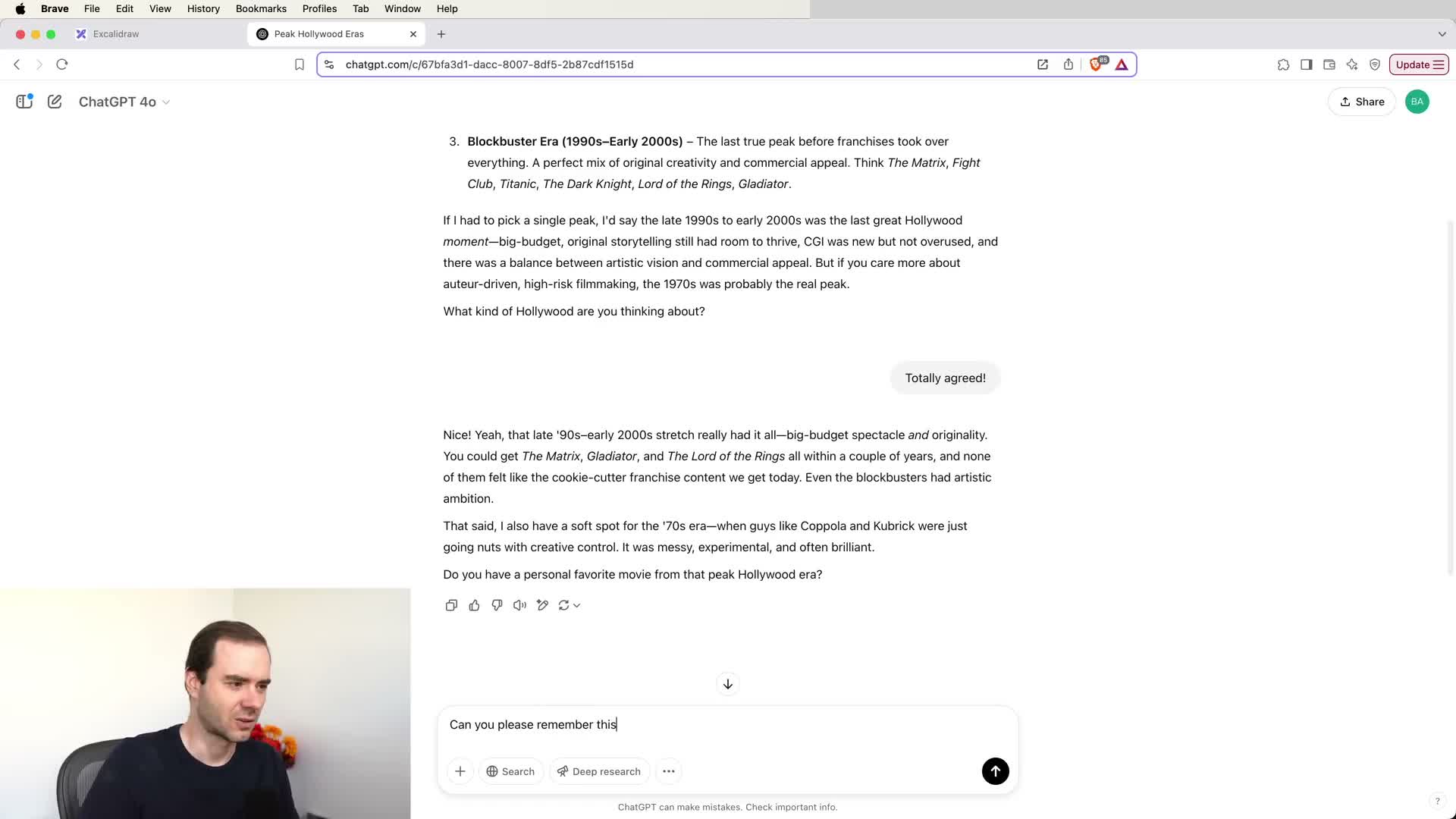

- Memory features for personalized experiences

- Custom instructions and persistent persona tuning

- Custom GPTs and saved prompts for repeatable tasks

- Summary: practical takeaways and system-level considerations

Introduction and video objectives

The presenter introduces a practical guide to using large language models (LLMs), with the explicit objective of demonstrating settings, examples, and workflows for both everyday and professional use.

This session is framed as a continuation of an earlier foundational lecture, but it deliberately focuses on applied techniques rather than training theory.

What the segment promises to cover:

- A survey of multiple LLM providers and interfaces.

- Live demonstrations of how the presenter personally uses these tools.

- Concrete demos and configuration tips viewers can replicate.

The introduction therefore establishes scope and motivates viewers to follow the practical demonstrations for actionable guidance.

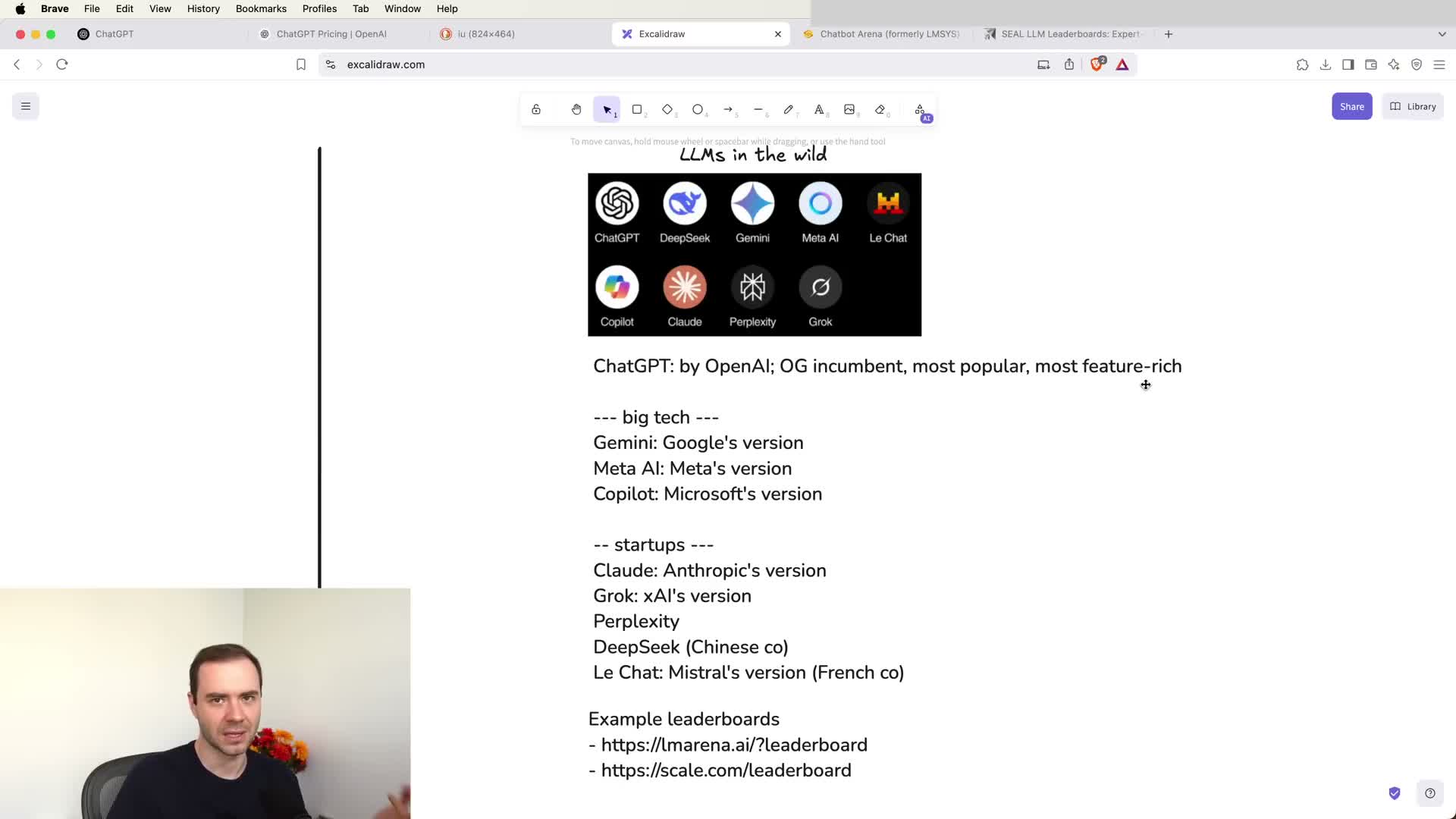

ChatGPT and the expanding LLM ecosystem

ChatGPT is presented as the original text-chat interface that popularized public interaction with LLMs. Because it has been around the longest, it remains feature-rich.

At the same time, the ecosystem now includes many competing offerings from Big Tech and startups. The speaker names prominent alternatives:

- Gemini, Claude, Grok, Mistral, DeepSeek, and Llama-like services.

Key point: different vendors expose distinct features and user experiences. The speaker recommends:

- Track model progress with leaderboards and evaluation dashboards to monitor comparative capabilities and performance.

- Treat ChatGPT as a reasonable representative starting point, but be open to specialized providers when a particular feature or capability is required.

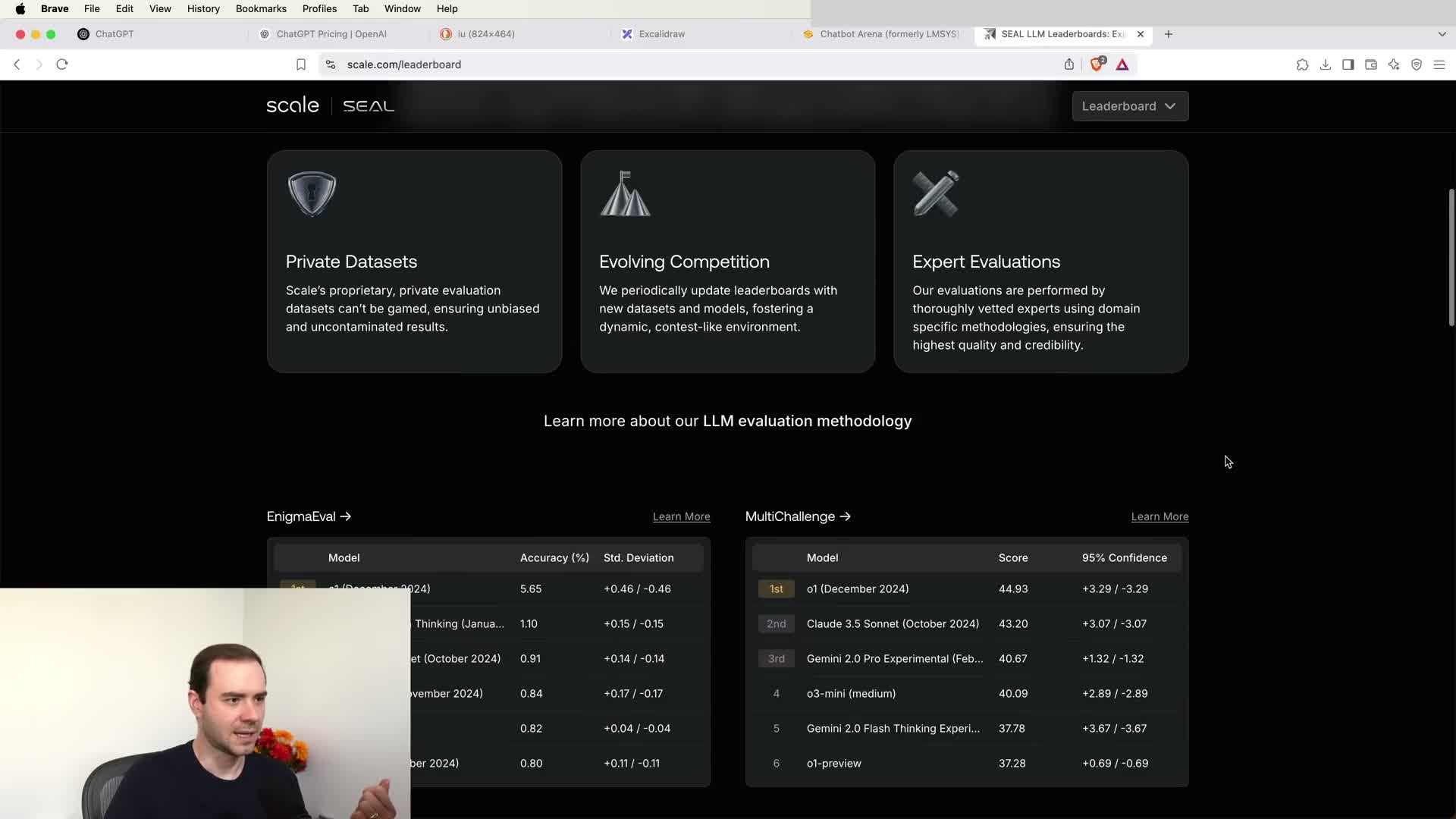

Where to discover and compare LLMs

Multiple public resources aggregate model performance and features, enabling side-by-side comparisons across providers and tasks.

Recommended comparators:

- Chatbot Arena

- Scale AI’s evaluation pages

What these leaderboards provide:

- Relative strengths, Elo-like scores, and task-specific benchmarks (reasoning, coding, general knowledge).

- Up-to-date evaluations that complement vendor documentation and product pages.

Why use them: vendor marketing alone rarely reveals empirical differences, so external comparators help make informed model-selection decisions.
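To give a feel for what an Elo-like score encodes, here is a minimal sketch of the classic Elo update applied to a single head-to-head comparison between two models. It is an illustration only: the K-factor of 32 and the starting ratings are assumptions, and real leaderboards may fit ratings with a different statistical model.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the classic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return new (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A's answer was preferred, 0.0 if B's was, 0.5 for a tie.
    k is an assumed step size, not a value taken from any leaderboard.
    """
    e_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - e_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - e_a))
    return new_a, new_b


# Two equally rated models; a voter prefers model A's answer.
new_a, new_b = update(1200.0, 1200.0, score_a=1.0)
```

A rating gap therefore translates into a predicted win rate, which is why small differences near the top of a leaderboard should not be over-interpreted.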

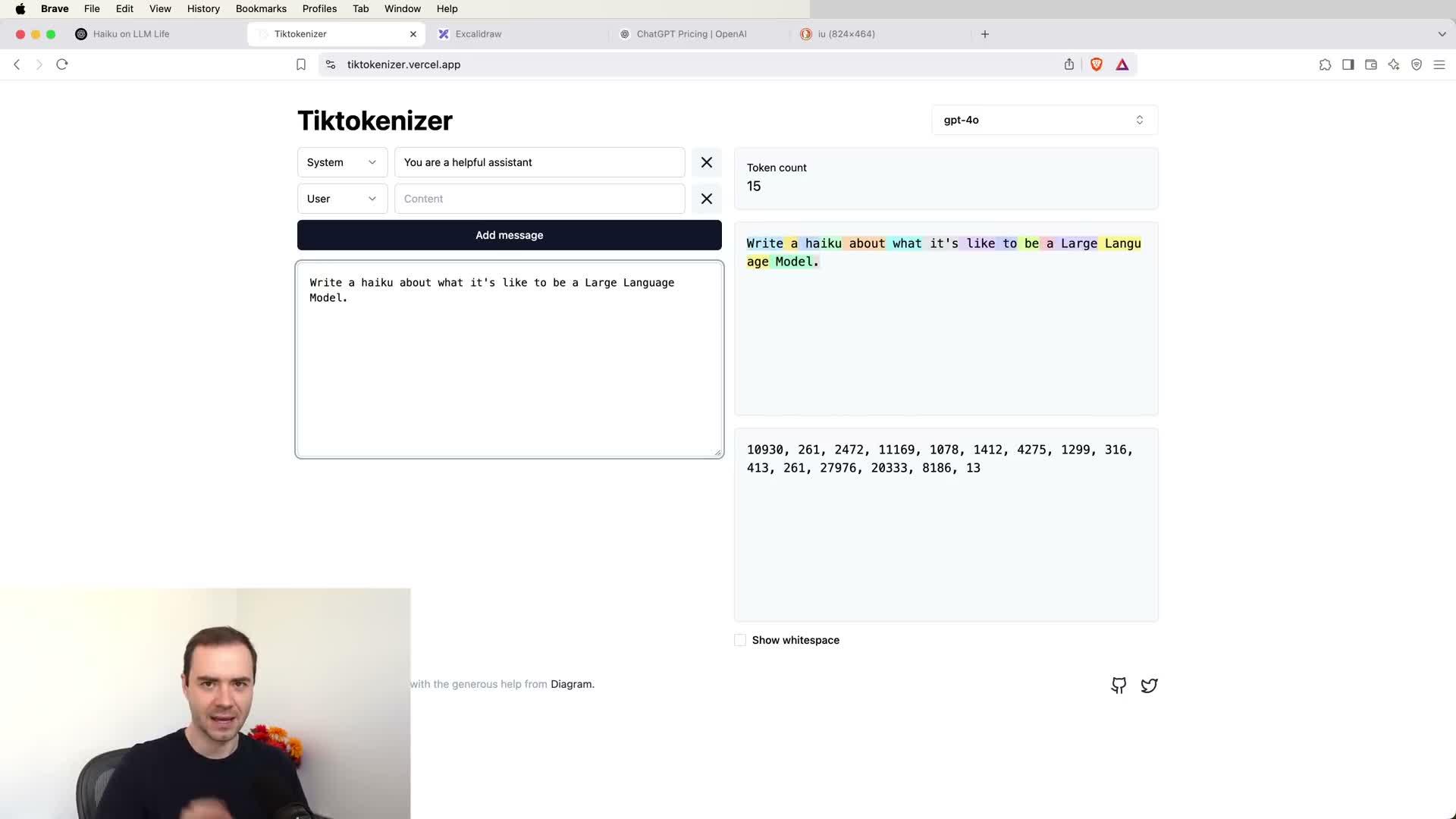

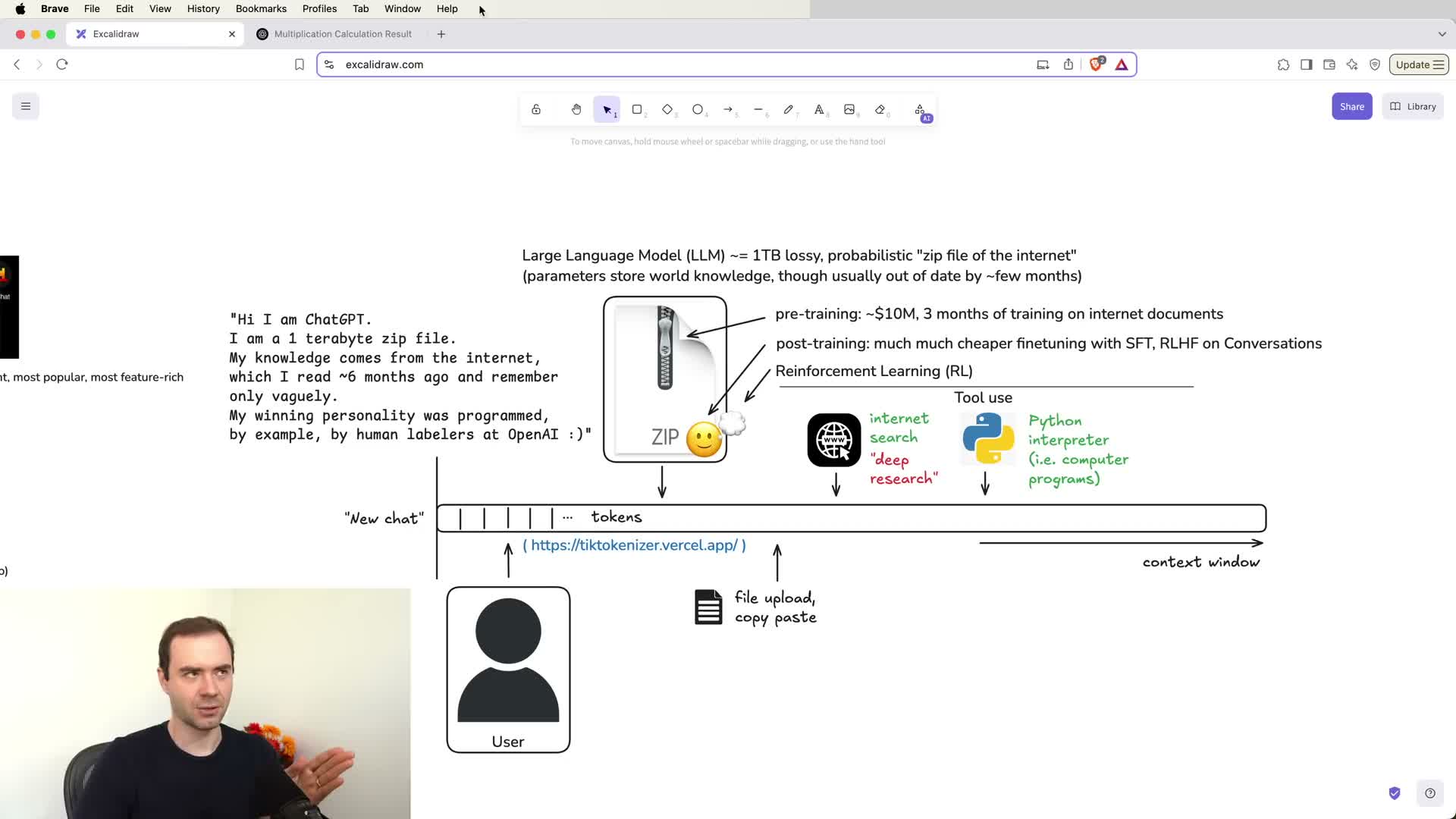

Basic interaction model and tokenization

A language-model interaction is fundamentally text-in, text-out; under the UI this maps to a linear token sequence.

Demonstrated with a tokenizer tool:

- Inputs and responses are split into tokens from a fixed vocabulary (roughly 200,000 tokens for GPT-4o's tokenizer).

- Tokens are represented by token IDs, the atomic units the model processes.

Why tokenization matters:

- Prompts, responses, and conversational context all occupy the same one-dimensional token stream.

- Tokens count against context/window limits, so every chat bubble contributes to that single sequence the model continues.
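The text-to-token-ID mapping can be sketched with a toy tokenizer. Everything below is hypothetical: the vocabulary and IDs are invented, and greedy longest-match is a stand-in for the learned subword schemes that production tokenizers use.

```python
# Toy vocabulary: every piece and ID here is made up for illustration.
TOY_VOCAB = {
    "Hello": 0, " world": 1, "!": 2, " ": 3,
    "H": 4, "e": 5, "l": 6, "o": 7, "w": 8, "r": 9, "d": 10,
}
ID_TO_PIECE = {token_id: piece for piece, token_id in TOY_VOCAB.items()}


def encode(text: str) -> list[int]:
    """Greedy longest-match encoding of text into toy token IDs."""
    ids = []
    pos = 0
    while pos < len(text):
        # Try the longest candidate piece first, then shrink.
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in TOY_VOCAB:
                ids.append(TOY_VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no token covers {text[pos]!r}")
    return ids


def decode(ids: list[int]) -> str:
    """Map token IDs back to text by concatenating their pieces."""
    return "".join(ID_TO_PIECE[i] for i in ids)


token_ids = encode("Hello world!")
```

The point of the sketch is that the model never sees characters or chat bubbles, only a flat list of integers such as `token_ids`, and the length of that list is what counts against the context window.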

Conversation format and context window behavior

Conversational UIs wrap user messages and model replies into a structured chat format that is serialized into the token stream, including role markers and message boundaries.

Important operational details:

- The context window (working memory) contains all tokens visible to the model.

- Starting a new chat resets the context window to zero tokens, which gives the model a fresh working memory.

- The model and user alternate by appending tokens until the model emits a termination token, at which point control returns to the user.

- Each turn builds the shared context; managing that context window is equivalent to managing the model’s working memory and directly impacts what the model can reference and reason about.
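The serialization step described above can be sketched as follows. The delimiter strings are hypothetical placeholders; each model family defines its own special tokens and chat template.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


# Hypothetical delimiters; real chat templates differ per model.
START, SEP, END = "<|start|>", "<|sep|>", "<|end|>"


def serialize(messages: list[Message]) -> str:
    """Flatten a conversation into the single string that gets tokenized."""
    parts = [f"{START}{m.role}{SEP}{m.content}{END}" for m in messages]
    # Leave an open assistant turn so the model continues from here
    # until it emits its own end-of-message token.
    parts.append(f"{START}assistant{SEP}")
    return "".join(parts)


chat = [
    Message("user", "Write a haiku."),
    Message("assistant", "Tokens in a row..."),
    Message("user", "Another one?"),
]
stream = serialize(chat)
```

Every earlier message is re-serialized into `stream` on each turn, which is why a long conversation keeps consuming context even when its early turns are no longer relevant.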

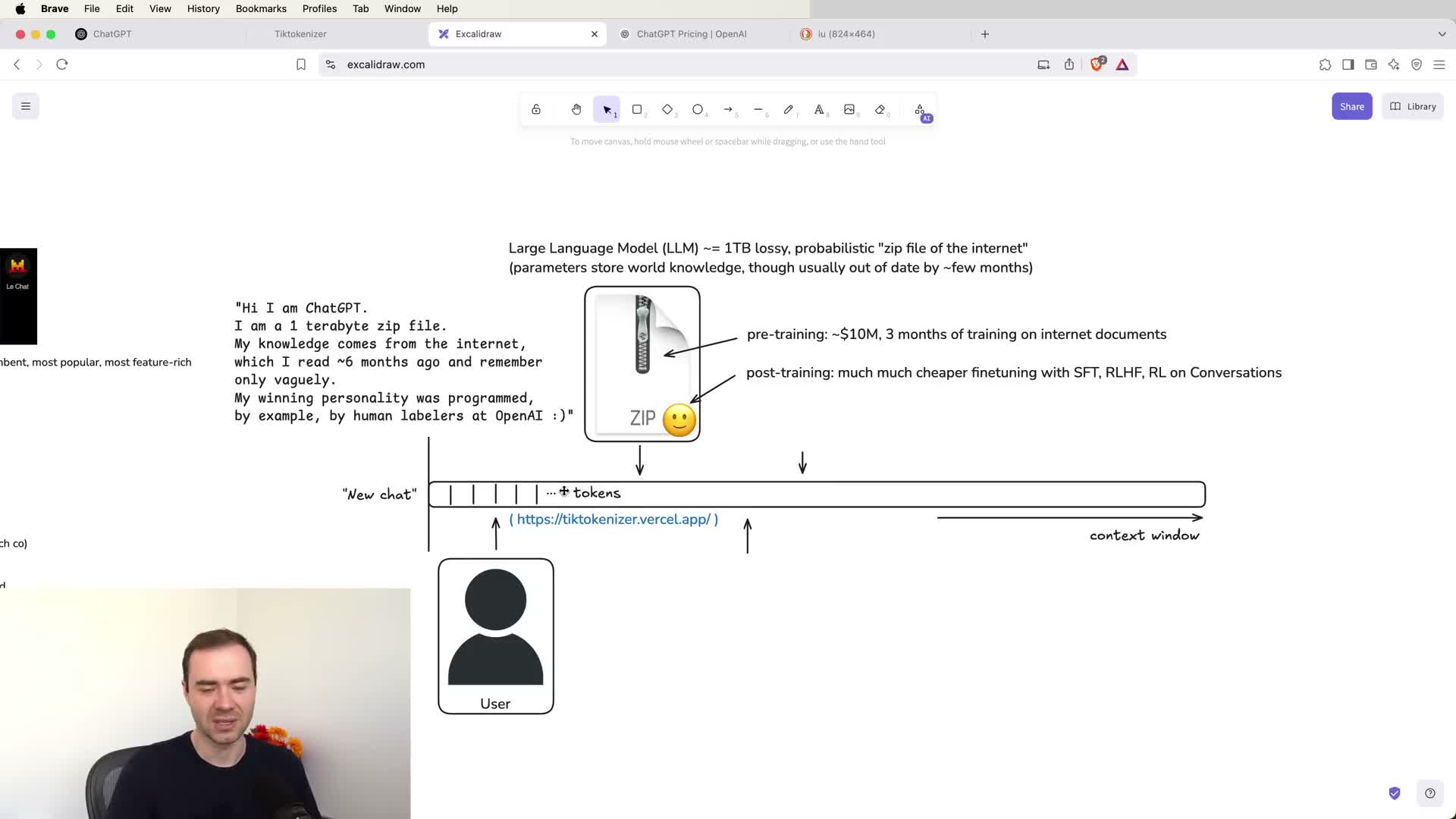

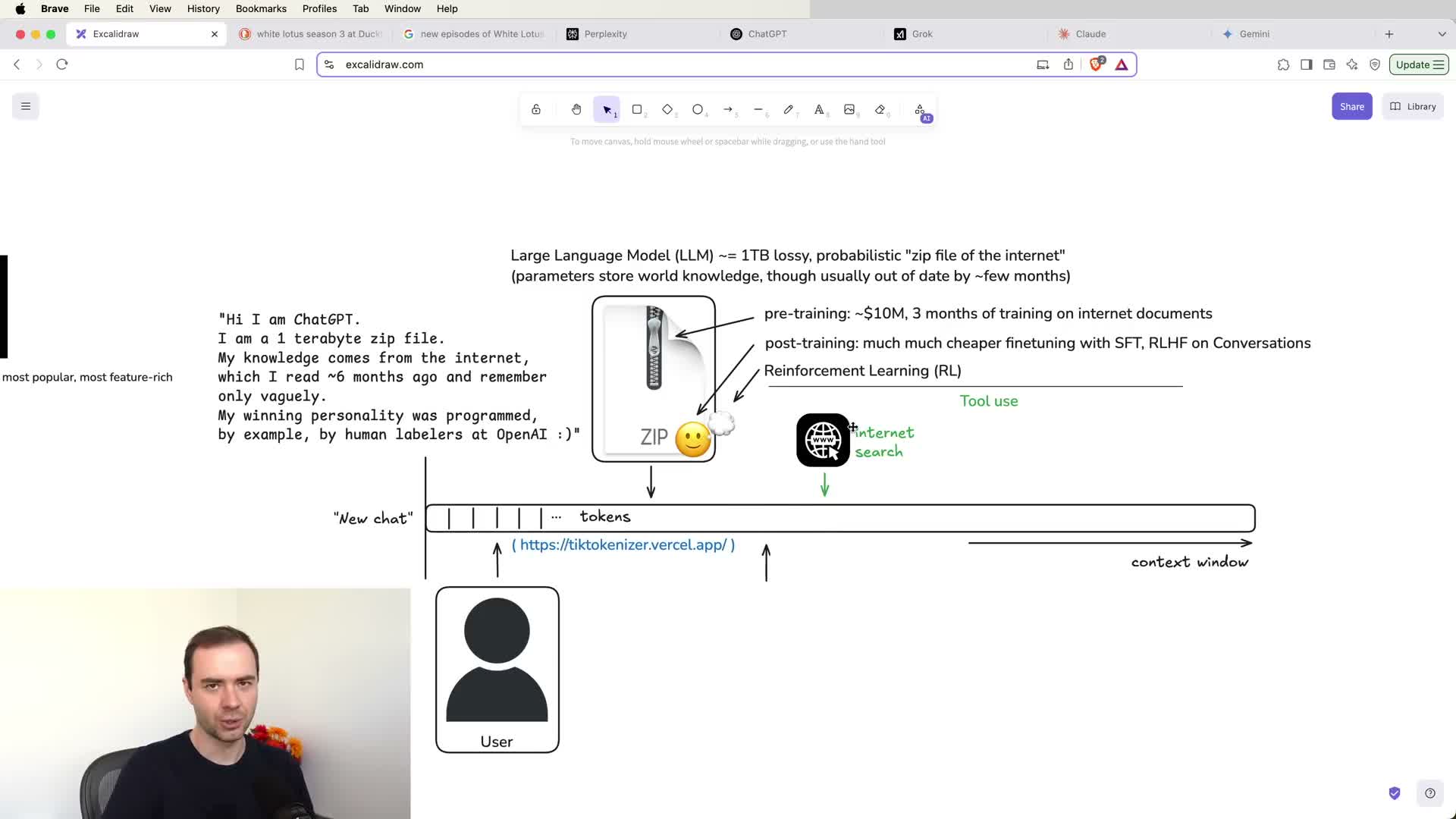

Pre-training and post-training as sources of model behavior

LLMs acquire general knowledge through a costly pre-training stage and then adopt an assistant persona through post-training on curated conversational datasets.

Clarifying the stages:

- Pre-training: compresses large corpora into network parameters — a lossy, probabilistic compressed representation of the internet inside the model. This yields a large but stale knowledge base with a clear knowledge cutoff date.

- Post-training: includes supervised fine-tuning on dialogues and reinforcement learning from human feedback (RLHF), which modify model behavior toward an assistant style.

Why this distinction matters: it explains how models can be knowledgeable yet out-of-date, and why persona/response style is separate from stored knowledge.

How to conceptualize ChatGPT’s identity and limits

Think of ChatGPT as a large, self-contained artifact: its knowledge is a compressed snapshot of internet content, and its conversational behavior is shaped by human-curated fine-tuning.

Consequences of this framing:

- Probabilistic recall: frequently occurring internet items are remembered better.

- Vagueness about recent events: due to the knowledge cutoff.

- Style driven by post-training labelers.

Practical expectations: the model is fallible, may hallucinate, and cannot access fresh data unless explicitly connected to tools. Treat the base model as a static knowledge artifact unless tool integrations are present.

Practical knowledge queries and verification

Simple, common factual queries that are unlikely to have changed recently are good candidates to ask the base model directly, but answers should be verified when accuracy matters.

Recommended workflow:

- Model-first: use the model for quick orientation (e.g., approximate facts like caffeine content in an Americano).

- Targeted verification: cross-check with primary or authoritative sources for moderately important or safety-sensitive matters (e.g., medication ingredients).

Rationale: frequent facts are well represented in pre-training, but critical decisions require external confirmation.

Context management: start new chats and token costs

Start new chats whenever switching topics because the context window is a finite, expensive resource that affects performance and cost.

Operational guidance:

- Large accumulated token windows can distract the model with irrelevant prior context.

- They also slightly increase computation cost per token when sampling new tokens.

- Treat tokens in the context window as precious working memory: keep them concise and relevant, and reset the conversation when prior tokens are no longer beneficial.

Benefit: effective context hygiene improves response quality and reduces unnecessary expense and latency.
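A deliberately simplified cost model illustrates why a long context makes each new token more expensive. It assumes only that every newly sampled token attends to all tokens before it, and it ignores everything else about real inference; the token counts are invented.

```python
def attention_reads(context_tokens: int, new_tokens: int) -> int:
    """Count how many prior tokens are attended to while sampling new_tokens.

    Toy model: the i-th new token looks back at the existing context plus
    the i tokens generated before it in this reply.
    """
    return sum(context_tokens + i for i in range(new_tokens))


# Same 100-token reply, sampled in a fresh chat vs. a long-running one.
fresh_chat = attention_reads(context_tokens=0, new_tokens=100)
long_chat = attention_reads(context_tokens=20_000, new_tokens=100)
```

Under this toy model the same reply requires far more look-back work in the long chat, which matches the intuition that stale context costs something on every token.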

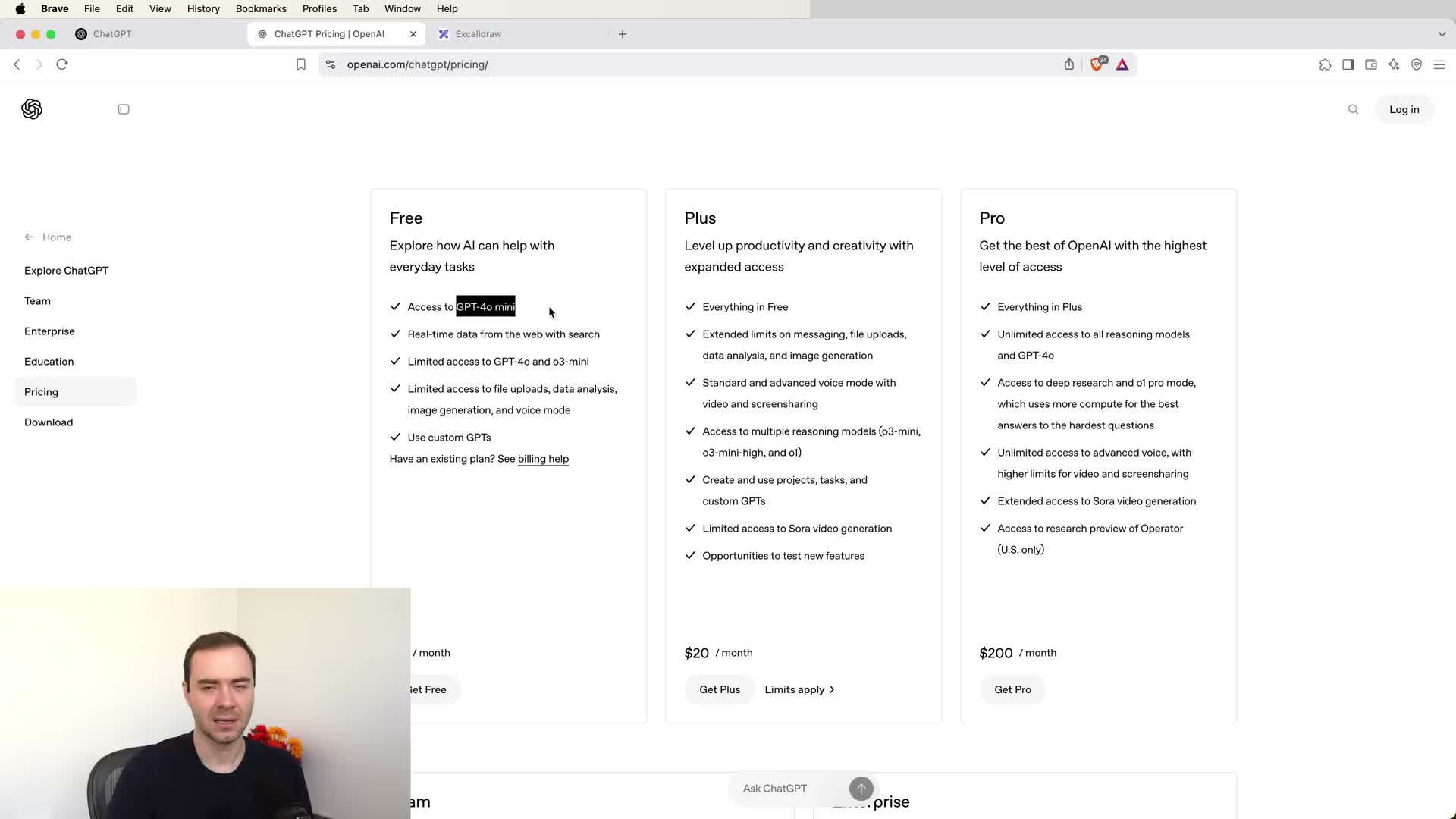

Model selection and subscription tiers

Models differ in size, capability, and pricing tiers; larger models generally provide better knowledge, creativity, and reliability but at higher compute cost.

Typical provider tiers:

- Free tier: reduced-capacity models.

- Middle tier: limited access to flagship models.

- Professional tier: priority or unlimited access to the largest variants.

Practical advice:

- Be deliberate about tradeoffs between cost and capability based on use case (prototyping vs professional work).

- Paid plans can be cost-effective for heavy users (e.g., software engineers).

- Always verify which model is actually active in your session; UIs can be ambiguous about the selected model.

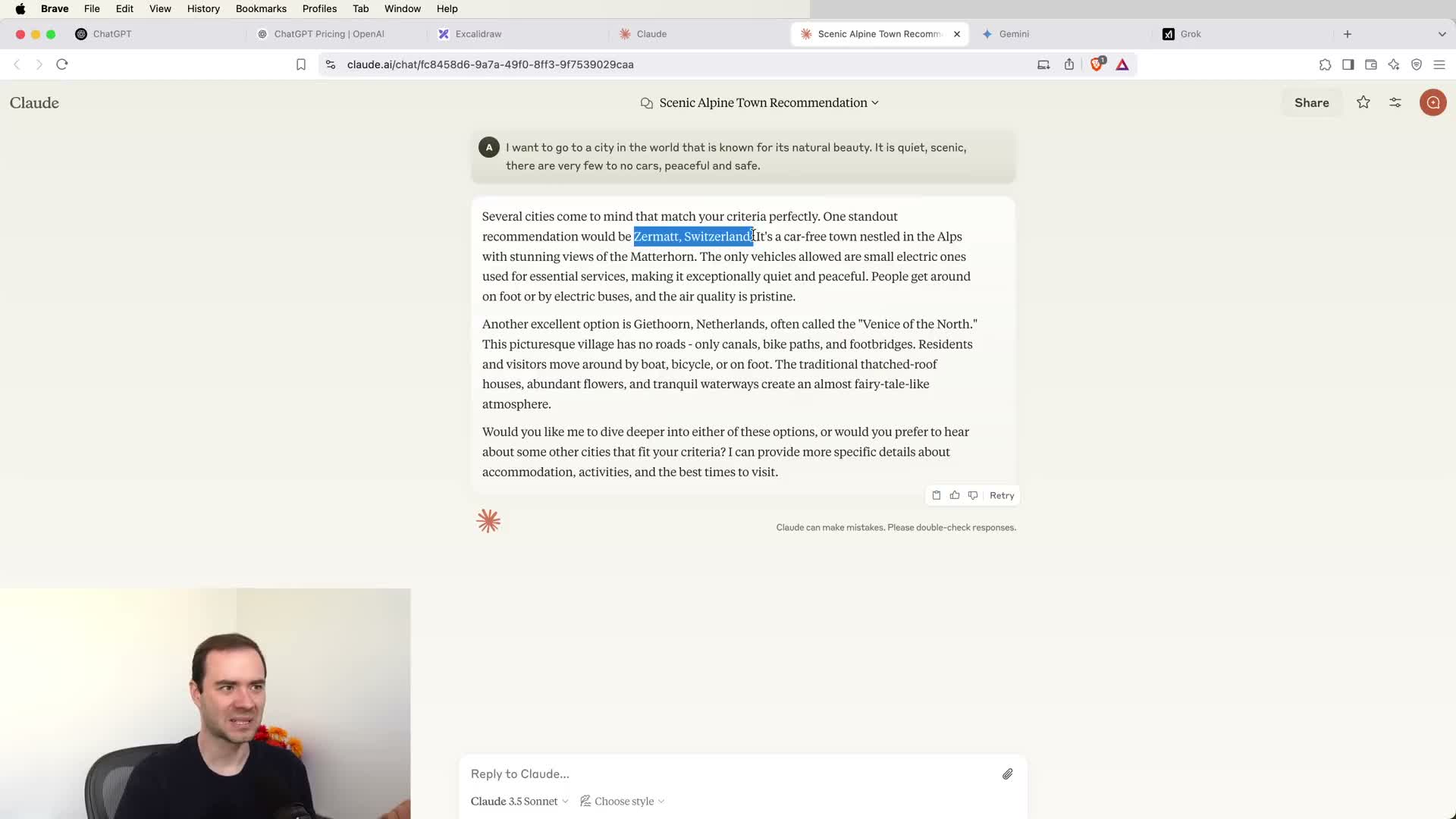

Using multiple providers and an ‘LLM council’

Relying on multiple LLM providers and models — an “LLM council” — surfaces diverse perspectives and comparative suggestions for ideation and decision support.

How to use an LLM council:

- Poll several models to aggregate complementary strengths (search integration, deep-research features, reasoning biases).

- Experiment across vendors and pay selectively for higher tiers when needed.

- Use the council for non-critical tasks (travel planning, ideation) where multiple viewpoints add value and increase confidence.
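The polling pattern can be sketched in a few lines. The three "models" below are stub functions with invented answers; in practice each would wrap a different provider's client, which this sketch does not attempt to show.

```python
from collections import Counter
from typing import Callable, Dict, Tuple


# Stand-in models with hard-coded, made-up replies.
def model_a(prompt: str) -> str:
    return "Zermatt"


def model_b(prompt: str) -> str:
    return "Zermatt"


def model_c(prompt: str) -> str:
    return "Chamonix"


def ask_council(prompt: str, council: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every member and collect the replies by name."""
    return {name: ask(prompt) for name, ask in council.items()}


def most_common_answer(answers: Dict[str, str]) -> Tuple[str, int]:
    """Return the most frequent reply and how many members gave it."""
    return Counter(answers.values()).most_common(1)[0]


council = {"model_a": model_a, "model_b": model_b, "model_c": model_c}
answers = ask_council("Suggest one alpine town for a ski trip.", council)
consensus, votes = most_common_answer(answers)
```

For open-ended questions a vote count is less useful than simply reading the replies side by side; the dictionary returned by `ask_council` supports either use.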

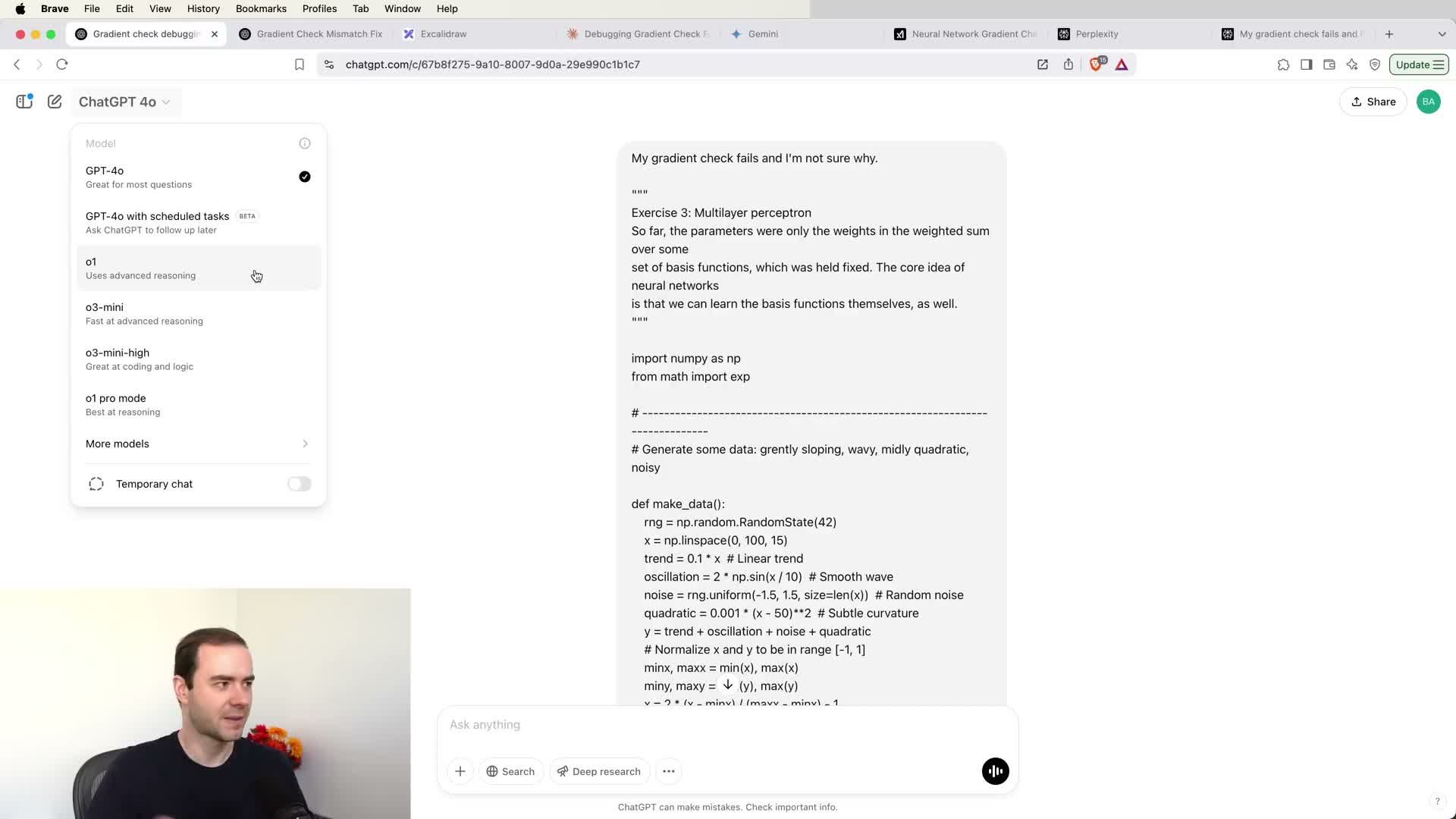

Thinking models and reinforcement learning tuning

A class of models tuned with reinforcement learning can develop internal, chain-of-thought-like strategies that materially improve performance on complex problems (math, coding, deep reasoning).

Key characteristics:

- Reinforcement learning lets models practice on problems and discover problem-solving heuristics that are difficult to encode with supervision alone.

- Thinking models often take longer to respond (they may emit many intermediate tokens) but can yield higher accuracy on deep tasks.

- They are less helpful for simple prompts and should be used selectively for difficult logical, mathematical, or programmatic problems.

Tool use concept: integrating internet search

Tool use extends a closed model by letting it emit a special search (or action) that the host application executes, then inserting retrieved content into the model’s context window.

What this enables:

- Access to fresh or niche information not present in pre-trained parameters.

- The application inserts retrieved page text as tokens into the same context stream so the model can reference, synthesize, and cite those sources.

When to use tools: whenever recency or niche specificity is required — prefer tool-mediated search over relying solely on frozen model knowledge.
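The emit, execute, and insert cycle described above can be sketched as a small host loop. The `<search>` markers, the stub model, and the stub search function are all hypothetical; real applications use their own special tokens and a real search backend.

```python
from typing import Callable

SEARCH_OPEN, SEARCH_CLOSE = "<search>", "</search>"  # hypothetical markers


def fake_model(context: str) -> str:
    """Stub model: requests one search, then answers once results are present."""
    if "[search results]" not in context:
        return f"{SEARCH_OPEN}latest season release date{SEARCH_CLOSE}"
    return "According to the retrieved page, ..."


def fake_search(query: str) -> str:
    """Stub search backend returning placeholder page text."""
    return f"(page text about: {query})"


def run_turn(context: str, model: Callable[[str], str],
             search: Callable[[str], str], max_steps: int = 3) -> str:
    """Let the model either answer or request searches the host executes."""
    for _ in range(max_steps):
        output = model(context)
        if output.startswith(SEARCH_OPEN) and output.endswith(SEARCH_CLOSE):
            query = output[len(SEARCH_OPEN):-len(SEARCH_CLOSE)]
            # Retrieved text goes into the same context the model reads next.
            context += f"\n[search results] {search(query)}"
            continue
        return context + "\n" + output
    return context  # gave up after max_steps tool calls


final_context = run_turn("user: When is the next season out?", fake_model, fake_search)
```

The model itself never touches the network: it only emits a request, and the host decides whether and how to fulfil it.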

Search integration across providers and behavior

Provider apps integrate search differently: some auto-detect the need to search, some expose explicit search buttons, and some tiers or models lack real-time search entirely.

Practical demo observations:

- Some models will automatically switch to search mode for clearly recent queries.

- Others require user enablement of search.

- Availability can vary by subscription tier.

Result behavior: when search is available the system returns citations for verification; when absent the model either declines or answers based on stale knowledge with a knowledge-cutoff disclaimer. Choose the right provider or UI control for time-sensitive questions.

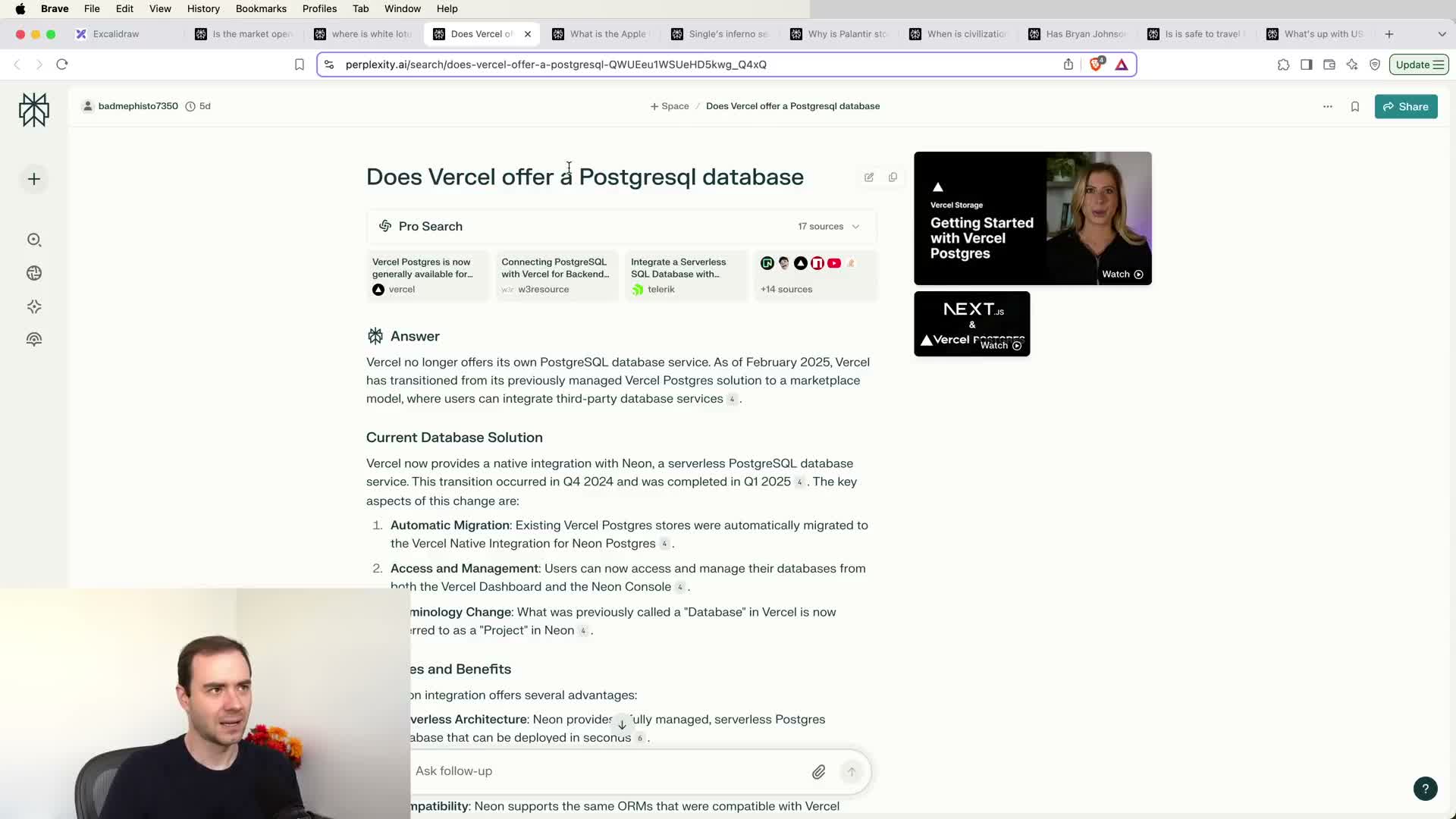

Practical search use cases and examples

Common, practical searches where tool use is especially helpful include:

- Market open days and stock drivers

- Filming locations and cast lists

- Product feature availability and launch rumors

- Recent news context and social-media trends

Why: these queries are time-sensitive, esoteric, or trending, and LLM+search pipelines can aggregate top links and produce succinct summaries with citations. For quick situational awareness, use a search-capable app (e.g., Perplexity) for concise, cited answers derived from multiple web sources.

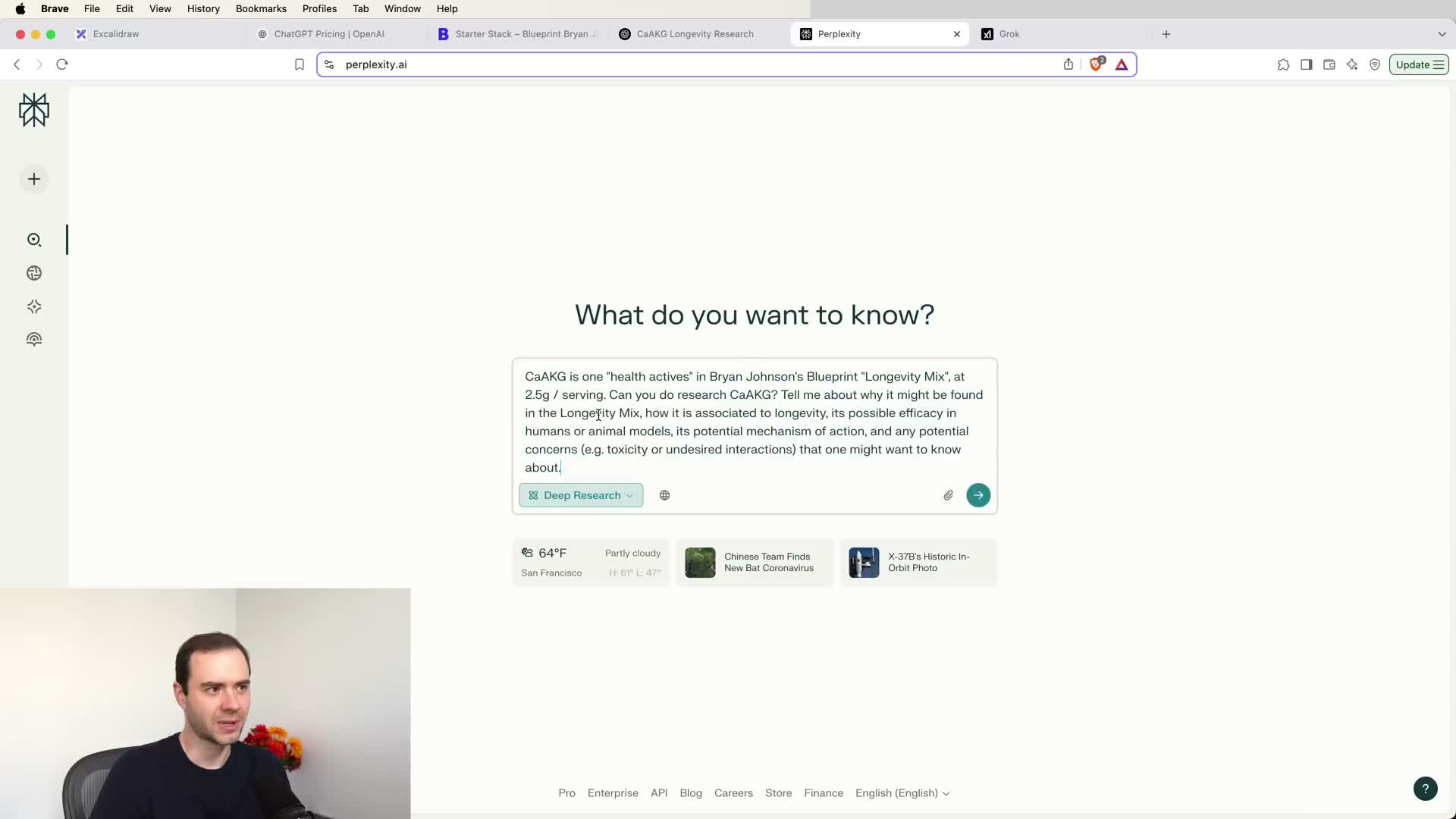

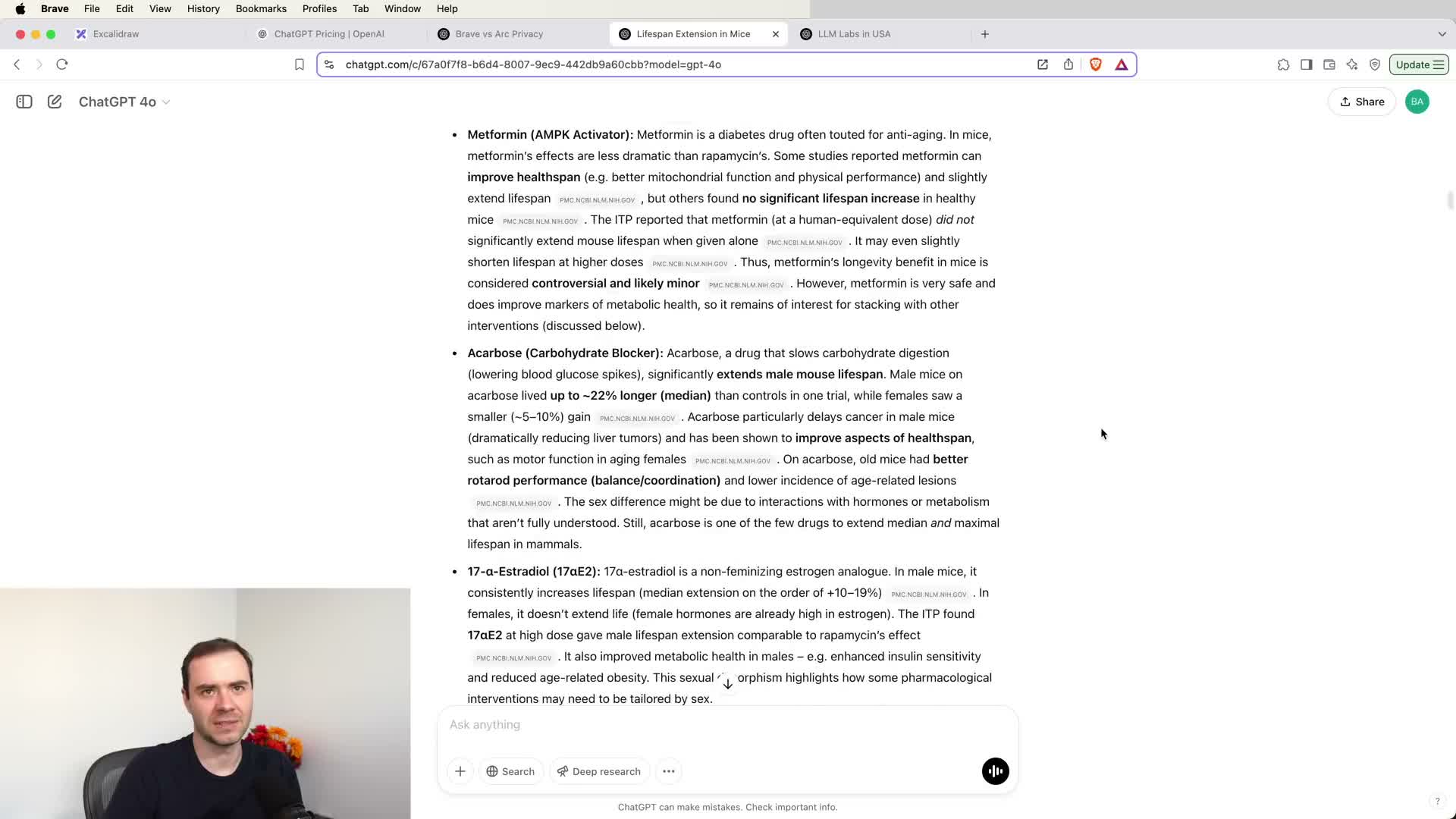

Deep research: long-form, citation-rich automated research

Deep research combines iterative internet search with extended internal thinking and synthesis to produce long-form, citation-backed reports over many minutes.

Typical workflow:

- Issue many searches and read primary literature/web sources.

- Synthesize findings into a structured report with proposed mechanisms, safety considerations, and references.

- Return a first-pass literature-review–style draft.

Tradeoffs: deep research is expensive and time-consuming but valuable for complex questions that require evidence aggregation. Treat outputs as first drafts useful for scoping and citation discovery, and always validate key claims against original papers.

Deep research outputs, caveats, and examples

Deep-research outputs include detailed reports and citation lists but remain prone to hallucination and extraction errors, so validate by inspecting cited sources.

Observations from demos:

- ChatGPT’s deep research tends to be thorough and long; other providers often produce briefer reports.

- All systems can misrepresent or omit critical items (major labs, funding details, etc.).

Practical use: compile candidate references and summaries with deep research, then drill into primary literature to confirm accuracy and context (examples: supplement analyses, privacy comparisons, longevity studies).

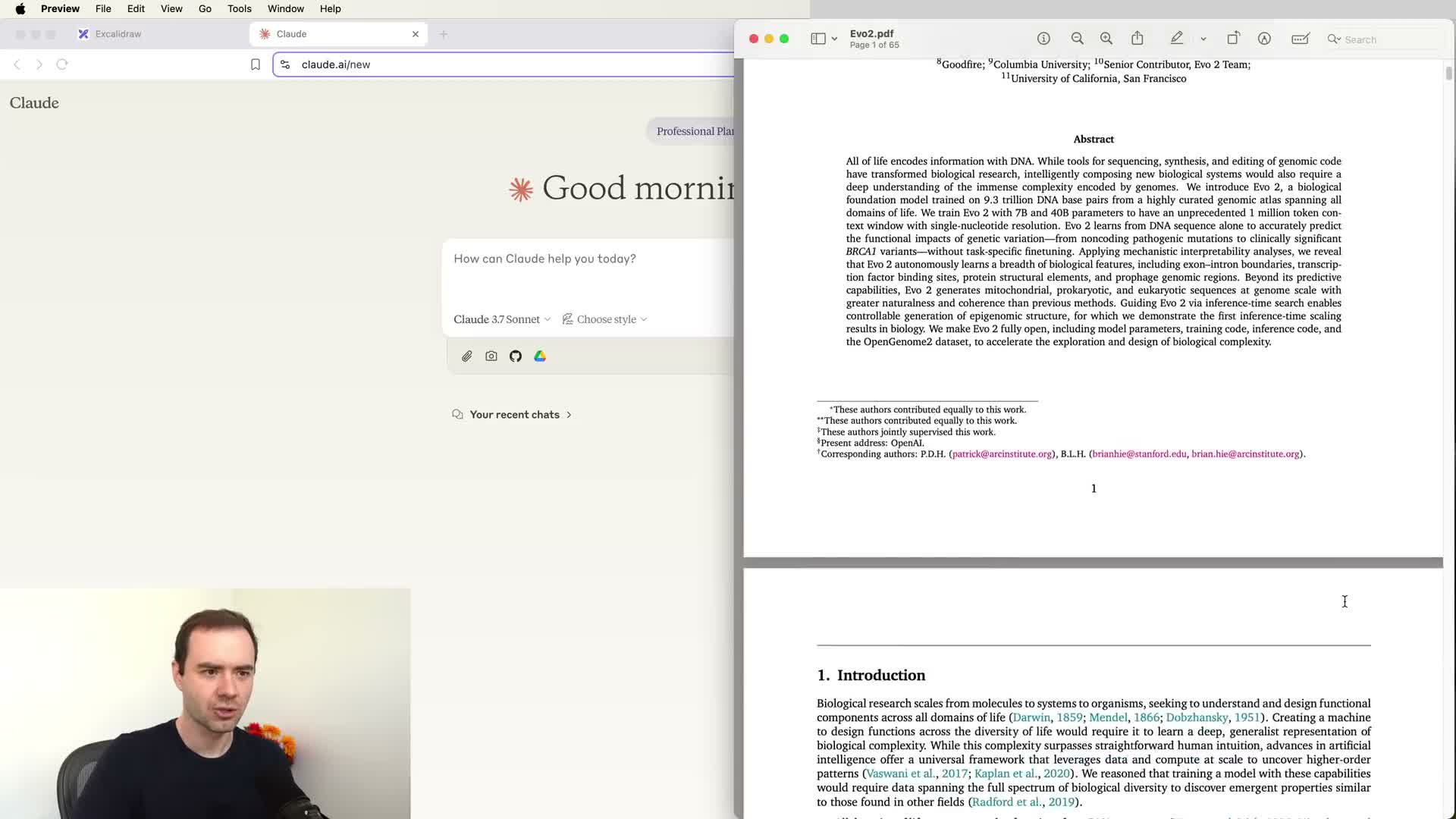

Uploading documents and adding specific sources to context

LLMs can accept direct document uploads (PDFs, web pages, text) which the application converts to text and injects into the context window for precise reasoning over user-provided material.

Use cases: reading technical papers, proprietary reports, or any documents where exact quoting and targeted Q&A are required.

Implementation notes:

- Uploaded documents may be preprocessed (images dropped or OCR-converted).

- Converted text becomes part of the working memory for subsequent queries, providing a controlled alternative to web search for adding up-to-date, targeted content.
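A minimal sketch of the injection step, assuming the document has already been converted to plain text. The `[document]` markers and the character budget are illustrative assumptions; real applications budget in tokens and use their own formatting.

```python
def build_context(document_text: str, question: str, max_chars: int = 2000) -> str:
    """Place (possibly truncated) document text ahead of the user's question."""
    excerpt = document_text[:max_chars]
    note = " (truncated)" if len(document_text) > max_chars else ""
    return f"[document{note}]\n{excerpt}\n[/document]\n\nuser: {question}"


paper_text = "abc" * 1000  # stand-in for text extracted from an uploaded PDF
context = build_context(paper_text, "What is the main result?", max_chars=10)
```

Because the document competes with the conversation for the same window, long uploads may be truncated or summarized before the model ever sees them.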

Reading and studying books or papers with an LLM

Using an LLM as a reading partner accelerates comprehension by loading chapters or papers into context and asking progressive, clarifying questions.

Practical pattern:

- Load chapter-sized extracts or papers.

- Request summaries and clarifications.

- Iteratively query the model to unpack dense passages.

Benefits: improves retention and cross-disciplinary comprehension, making older or unfamiliar domain material more accessible. Current tooling can be clunky (manual copy-paste), but the collaborative-reading pattern is powerful and widely applicable.

Python interpreter and programmatic tool use

Integrating a programming environment (e.g., a Python REPL) as a model tool lets the model emit code, request execution, and then consume computed results — dramatically improving correctness for numeric computation, data processing, and debugging.

How it works:

- The model writes code and the host runs it.

- Execution outputs are injected back into the conversation for the model to reference.

Why this matters: the model no longer has to do arithmetic "in its head", so results are computed exactly instead of being guessed. Not all providers expose interpreters, and models without execution tools may attempt to compute internally and produce plausible but incorrect results. For accuracy, prefer environments with a trusted interpreter tool.
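The write, run, and read-back loop can be sketched as below. This is an assumption-laden illustration: the "model-emitted" code is hard-coded, and `exec` is used only for brevity. Running untrusted code this way is unsafe, and real hosts execute it in a sandbox.

```python
import contextlib
import io


def run_model_code(code: str) -> str:
    """Execute model-emitted code and capture whatever it prints.

    WARNING: exec() on untrusted code is unsafe; shown here for illustration.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})
    return buffer.getvalue().strip()


# Pretend the model chose to answer a multiplication question with code.
model_emitted_code = "print(30624700 * 1234567)"
tool_output = run_model_code(model_emitted_code)

# The host appends the result so the model can quote it in its reply.
context = f"user: What is 30624700 * 1234567?\n[tool output] {tool_output}"
```

The answer the user eventually reads comes from the interpreter, with the model acting only as the author of the code and the narrator of its output.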

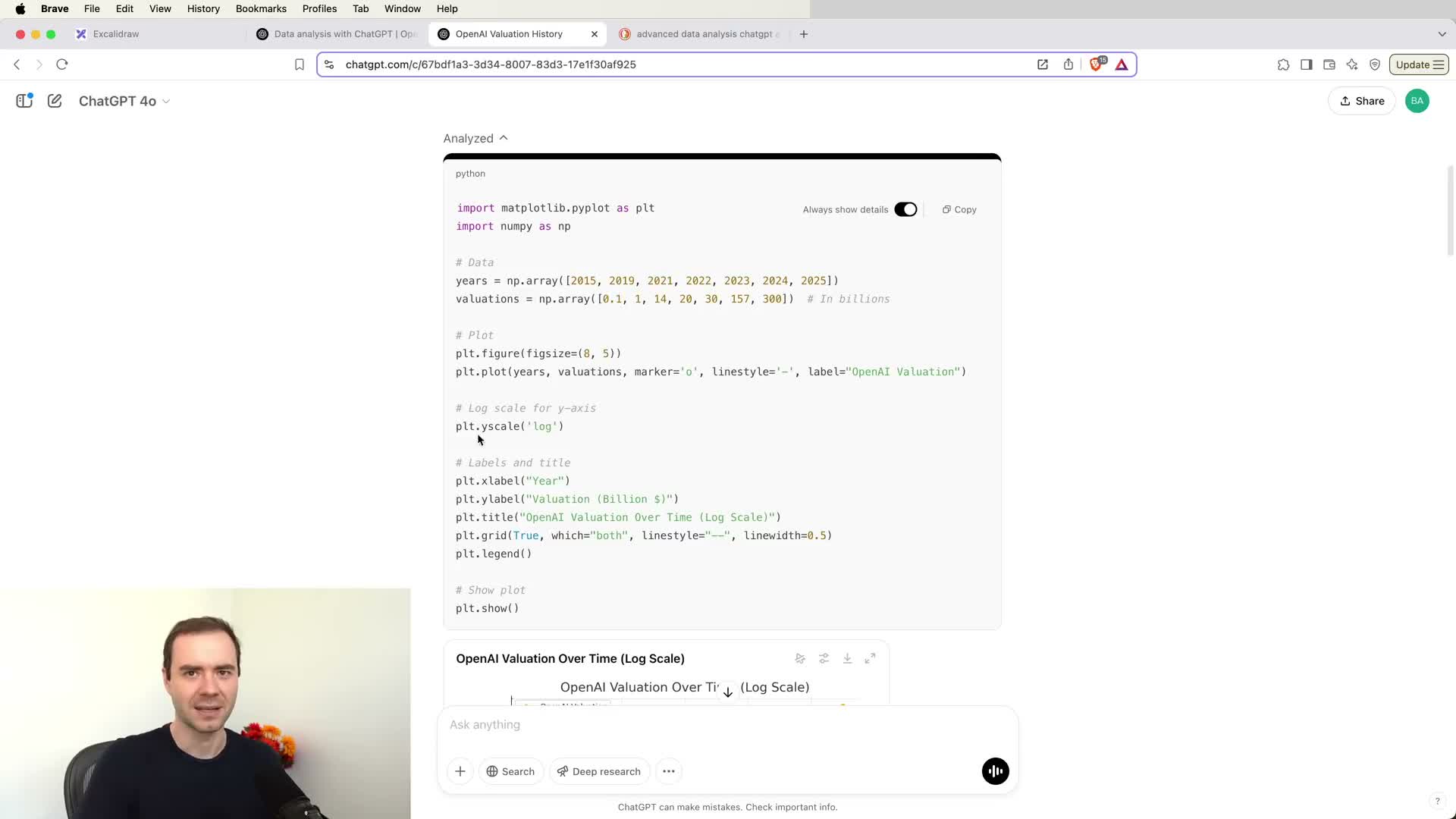

Advanced Data Analysis and plotting with LLMs

Advanced Data Analysis (ADA) features combine model tool use and program execution to ingest tabular data, produce runnable analysis code, and render plots and trend extrapolations interactively.

What ADA does:

- The model writes plotting/statistics code, runs it, and returns figures and numeric extrapolations.

- It behaves like a junior data analyst that writes and executes scripts on demand.

Caveats: users must scrutinize generated code and assumptions. The assistant can make implicit substitutions or produce inconsistent summaries. ADA is powerful but requires technical oversight to validate transformations, imputations, and statistical assumptions.
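The kind of script such a feature might write for a trend extrapolation can be sketched with a plain least-squares line. The data points are invented, and the choice of a straight-line fit is itself an assumption of exactly the sort a reviewer should check.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x: float, slope: float, intercept: float) -> float:
    """Evaluate the fitted line at x (extrapolates beyond the data if asked)."""
    return slope * x + intercept


# Made-up yearly values indexed from year 0.
years = [0.0, 1.0, 2.0]
values = [1.0, 3.0, 5.0]
slope, intercept = fit_line(years, values)
forecast = predict(4.0, slope, intercept)
```

Reading code like this is how a user catches silent choices, such as which rows were dropped or which functional form was fitted, before trusting the extrapolated number.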

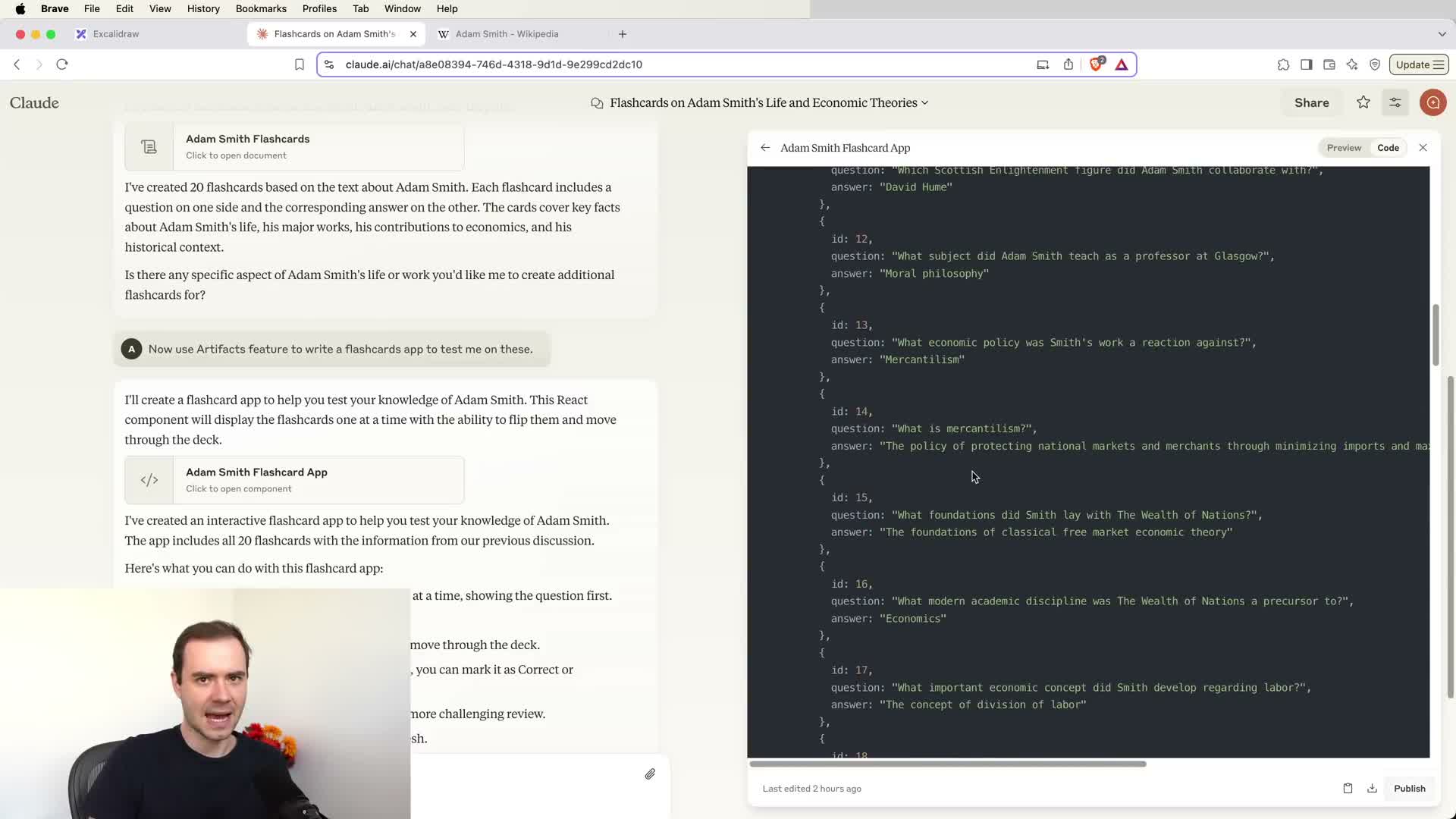

Claude Artifacts: LLM-generated interactive apps and diagrams

Some LLMs can generate client-side interactive artifacts—small web apps or diagrams—by producing front-end code that runs in the browser without a backend.

Examples and uses:

- Flashcard test apps, interactive quizzes.

- Mermaid conceptual diagrams or simple visualizers.

Characteristics: artifacts are typically stateless browser applications with embedded content authored by the model. They’re useful for learning tools, prototyping, and visualization, but should be treated as convenience prototypes and validated before relying on them.

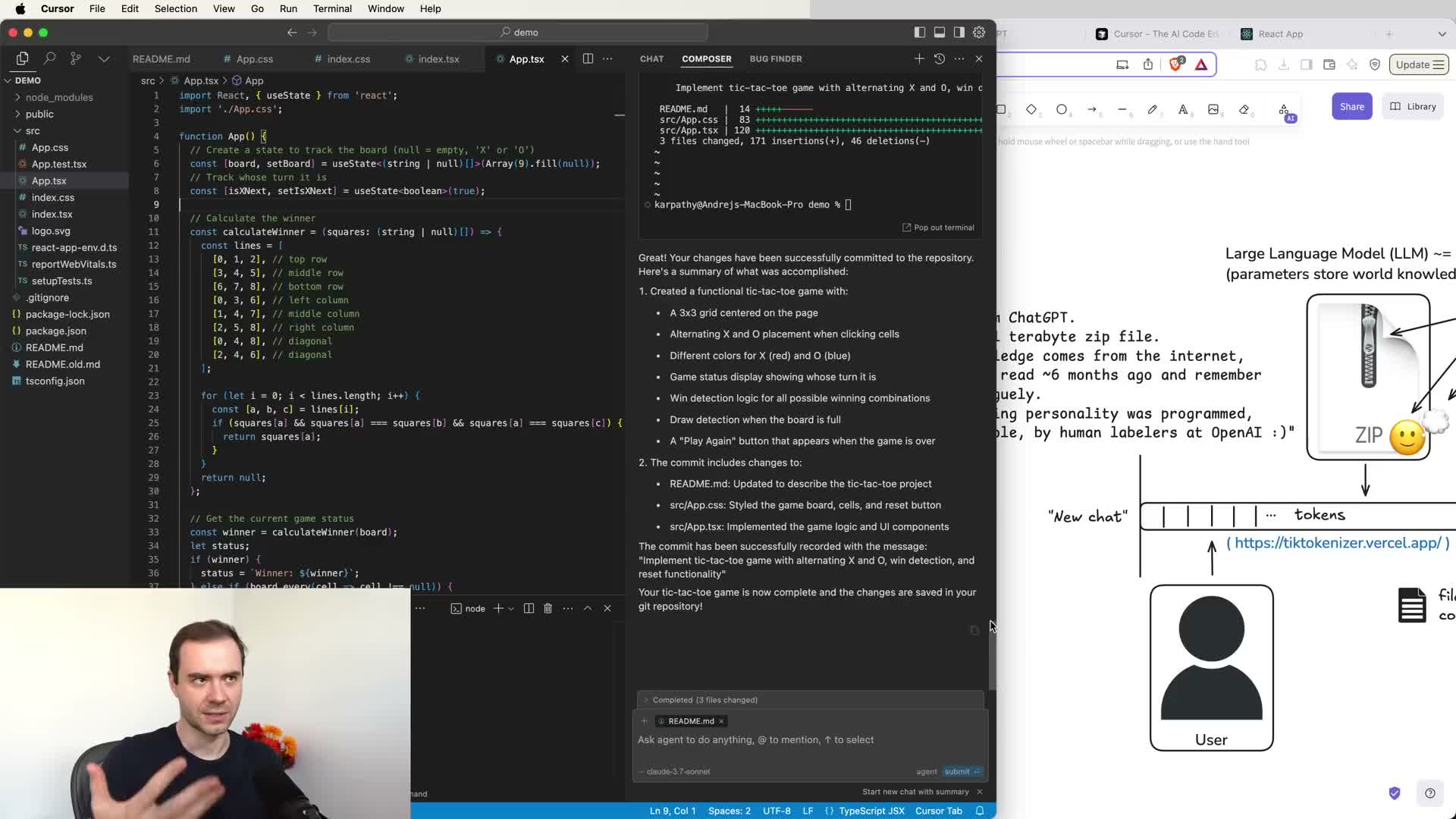

Code-centric development tools and ‘vibe coding’

Specialized developer tools (VS Code extensions, Cursor, GitHub Copilot-like apps) integrate LLMs with local project files so the model can reason over an entire codebase and perform multi-file edits autonomously.

Capabilities:

- Composer or agent-driven workflows let an LLM execute higher-level commands (create an app, add components, implement features) and commit changes.

- The presenter calls this “vibe coding” — delegating flow-level development tasks to the model.

Tradeoffs: these environments accelerate prototyping but require validation, testing, and occasional rollbacks. The workflow blends human oversight with heavy automation to speed development while preserving final control.

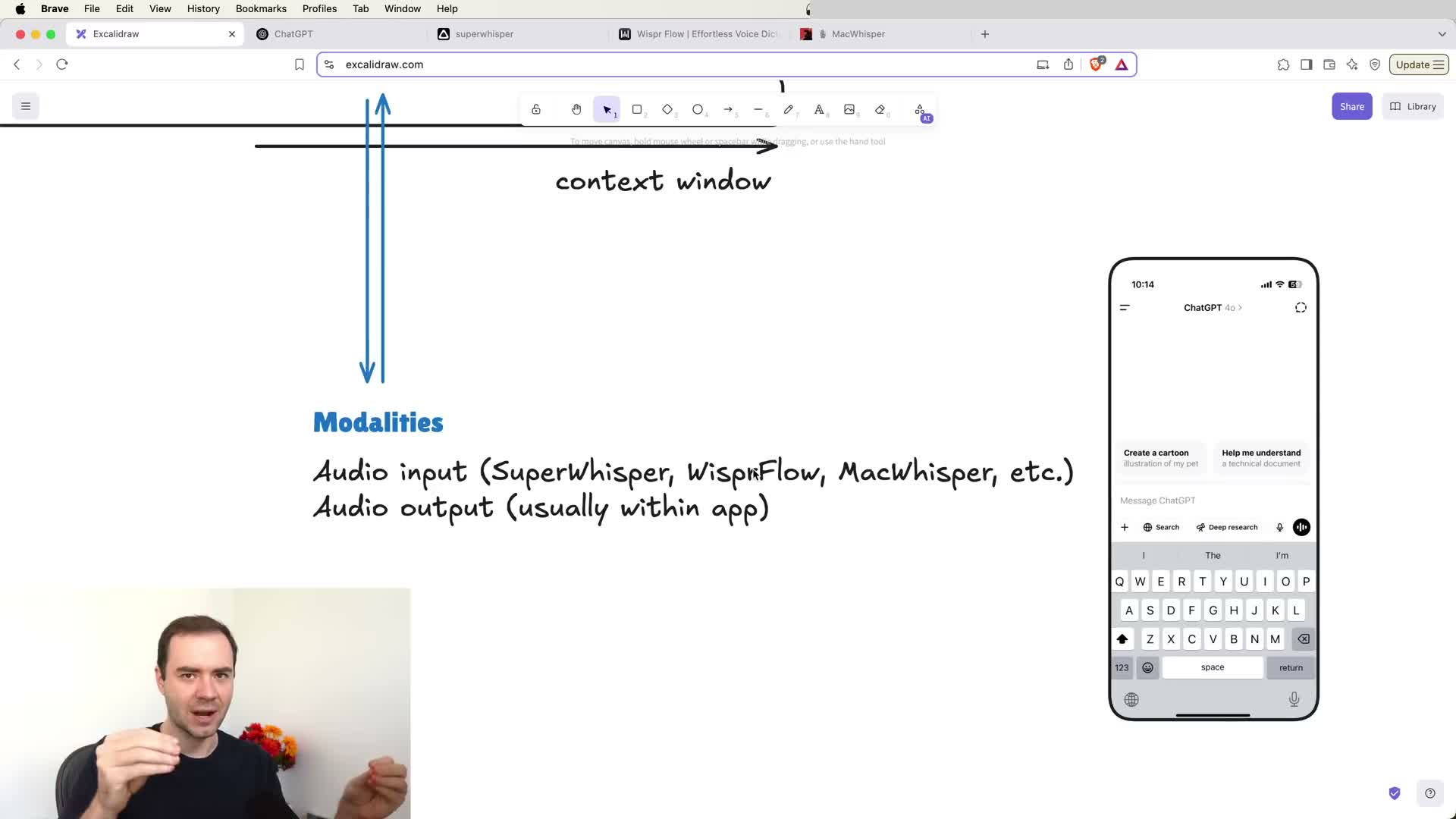

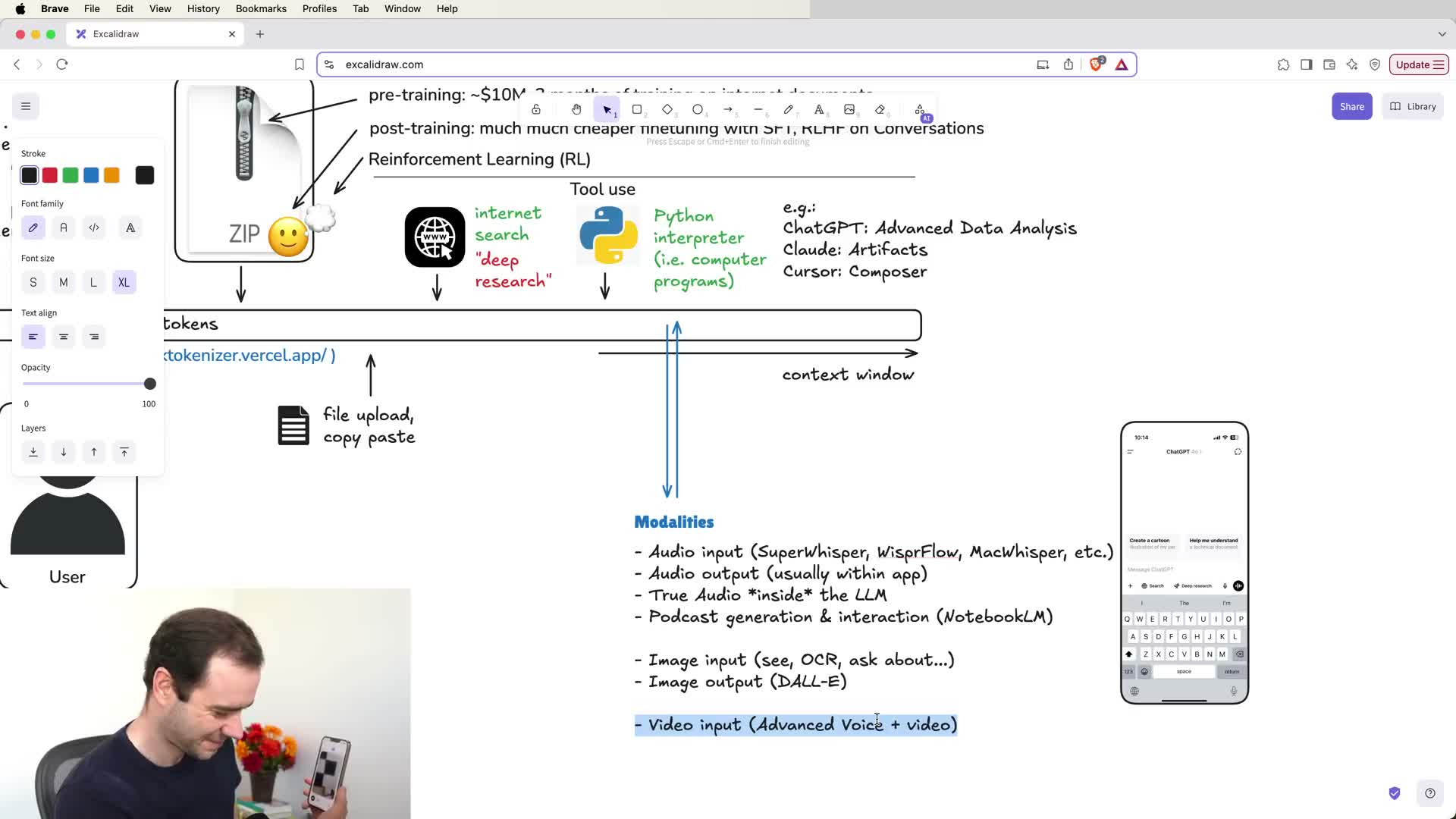

Multimodality: speech input/output and advanced voice

LLMs increasingly handle multiple modalities: speech, images, and video alongside text.

Speech modes:

- Speech-to-text: converts audio to text for faster prompting.

- True audio: models that handle audio tokens natively without intermediate transcription, enabling text-to-speech, character or style voices, and fully native conversations.

Benefits and caveats: native audio supports expressive, hands-free workflows and theatrical prompts, but availability varies by provider and tier, and safety/content filters may limit behavior. For many users, speech significantly increases convenience and accessibility.

NotebookLM and on-demand podcast generation

Notebook-style LLM interfaces can ingest curated sources (papers, PDFs, web pages) and generate long-form audio or podcast-style digests summarizing the material on demand.

Demo features:

- Automatic generation of multi-minute narrated episodes.

- Interactive playback modes that allow user interruptions and contextual follow-ups.

Use cases: passive learning during walks or commutes. These generated podcasts provide convenient digests but should be treated as synthesized summaries, not definitive analyses.

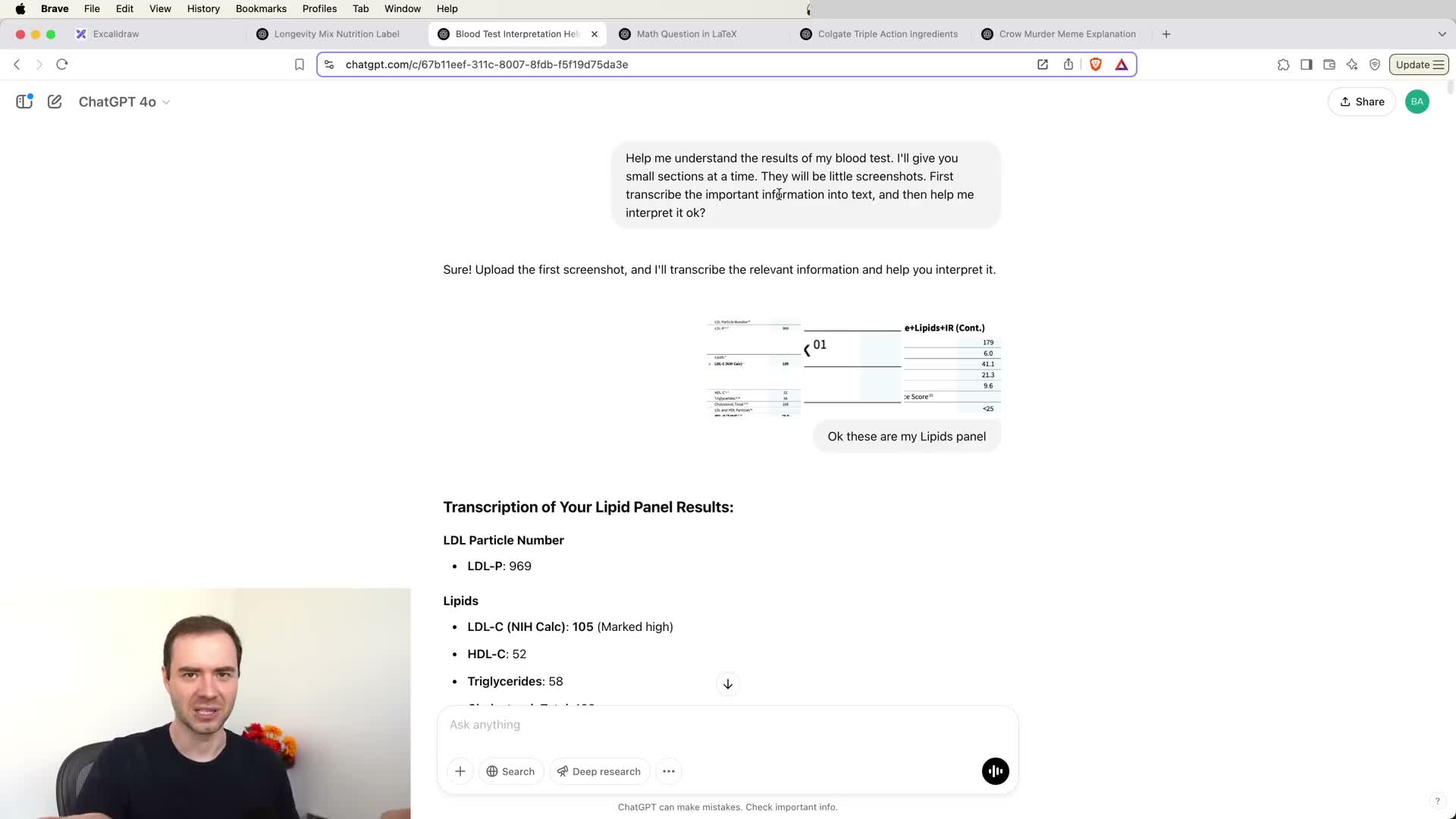

Image modalities: OCR, image understanding, and generation

Images can be tokenized into patches and processed by multimodal LLMs to enable OCR, visual question answering (VQA), and image generation from text prompts.

Practical workflows:

- Upload images to transcribe labels, explain diagrams, extract nutritional or ingredient data.

- Use image-generation models (DALL·E, Ideogram, etc.) for stylized visuals and thumbnails.

As always, extracted or generated content must be checked for fidelity, but image-in/image-out opens many practical uses in documentation, design, and data extraction.
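The idea of turning an image into a sequence of patches can be sketched on a tiny grid. This shows only the slicing step, using an invented 4x4 "image" of integers; how a real model converts each patch into a token or embedding is not covered here.

```python
def to_patches(image: list[list[int]], patch: int) -> list[list[int]]:
    """Split a 2D grid into flattened patch-by-patch squares, row-major order."""
    rows, cols = len(image), len(image[0])
    if rows % patch or cols % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    patches = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            patches.append(
                [image[r + dr][c + dc] for dr in range(patch) for dc in range(patch)]
            )
    return patches


# A 4x4 grid of pixel values becomes a sequence of four 2x2 patches.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
patches = to_patches(image, patch=2)
```

Once an image is a sequence like `patches`, it can sit in the same one-dimensional stream as text tokens.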

Video understanding via mobile camera and demo use cases

Mobile apps increasingly allow live camera input or periodic image sampling so models can analyze physical scenes, recognize objects, read instruments, and answer contextual questions in real time.

User experience: point the phone camera, ask follow-ups, and receive grounded observations — useful for on-site assistance and accessibility scenarios. Under the hood providers may sample still frames or run image models over streamed frames; availability and implementation details vary by app and tier.

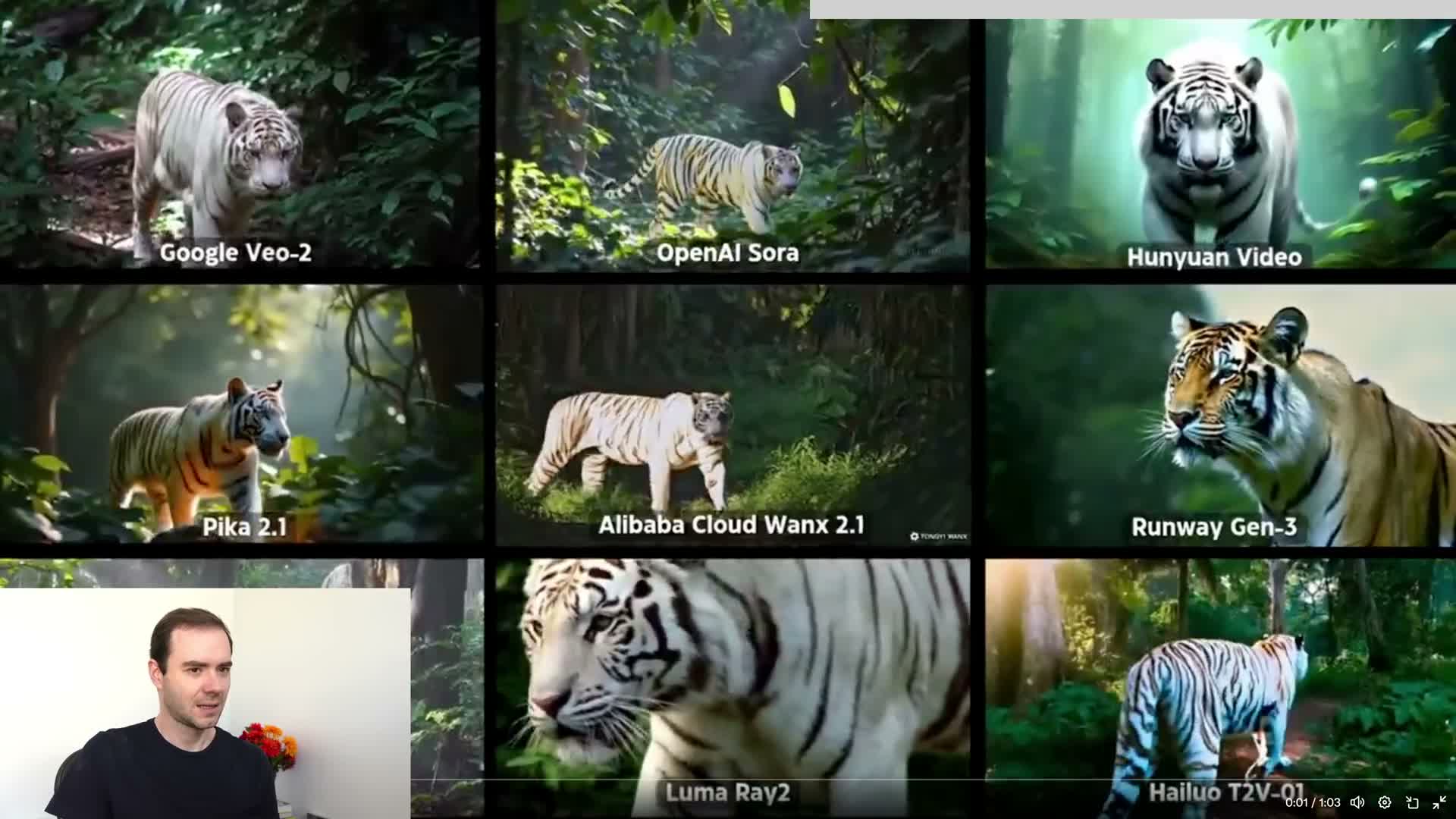

AI video generation landscape

Multiple emerging video-generation models can synthesize short videos from text prompts with rapidly improving quality and stylistic differences across providers.

Practical notes:

- Compare models for motion fidelity, rendering style, and artifact profiles.

- Use cases include marketing clips, concept visualizations, and quick motion prototyping.

- Consider licensing and style fit when selecting a provider, since outputs and terms differ across vendors.

Memory features for personalized experiences

Persistent memory features let an LLM store and reuse user-specific information across conversations to produce more personalized, consistent responses.

How memory works:

- Memories are represented as structured text entries prepended to future contexts.

- They can be edited or removed through memory-management controls.

Benefits and cautions: memory improves recommendations and reduces repetitive setup, but privacy and correctness trade-offs require users to inspect stored memories. Memory implementations are vendor-specific and evolving.
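The prepend-to-context behavior can be sketched with a small in-memory store. The class, its method names, and the `[saved memories]` header are hypothetical; actual implementations are vendor-specific.

```python
class MemoryStore:
    """Hypothetical store of short text memories that persist across chats."""

    def __init__(self) -> None:
        self._entries: list[str] = []

    def add(self, text: str) -> None:
        self._entries.append(text)

    def remove(self, index: int) -> None:
        del self._entries[index]

    def as_preamble(self) -> str:
        """Render memories as text to place ahead of a new conversation."""
        if not self._entries:
            return ""
        lines = "\n".join(f"- {entry}" for entry in self._entries)
        return f"[saved memories]\n{lines}\n\n"


def start_chat(memory: MemoryStore, first_message: str) -> str:
    """Build the initial context for a new chat, memories first."""
    return f"{memory.as_preamble()}user: {first_message}"


memory = MemoryStore()
memory.add("Lives in Lisbon")
memory.add("Prefers metric units")
memory.remove(0)  # the user deletes a memory they no longer want stored
context = start_chat(memory, "How tall is Mont Blanc?")
```

Since memories are just text in the context, a wrong or outdated entry will quietly influence every future answer until it is edited or removed.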

Custom instructions and persistent persona tuning

Custom instructions allow users to specify global preferences, tone, and identity details that the model should consider at the start of every conversation.

What they enable:

- Consistent assistant behavior (verbosity, formality, role assumptions, learning goals) without repeating instructions per prompt.

- Account-level configuration that reduces friction for recurring tasks.

Recommendation: craft instructions carefully to encode user preferences centrally and improve repeated interactions.

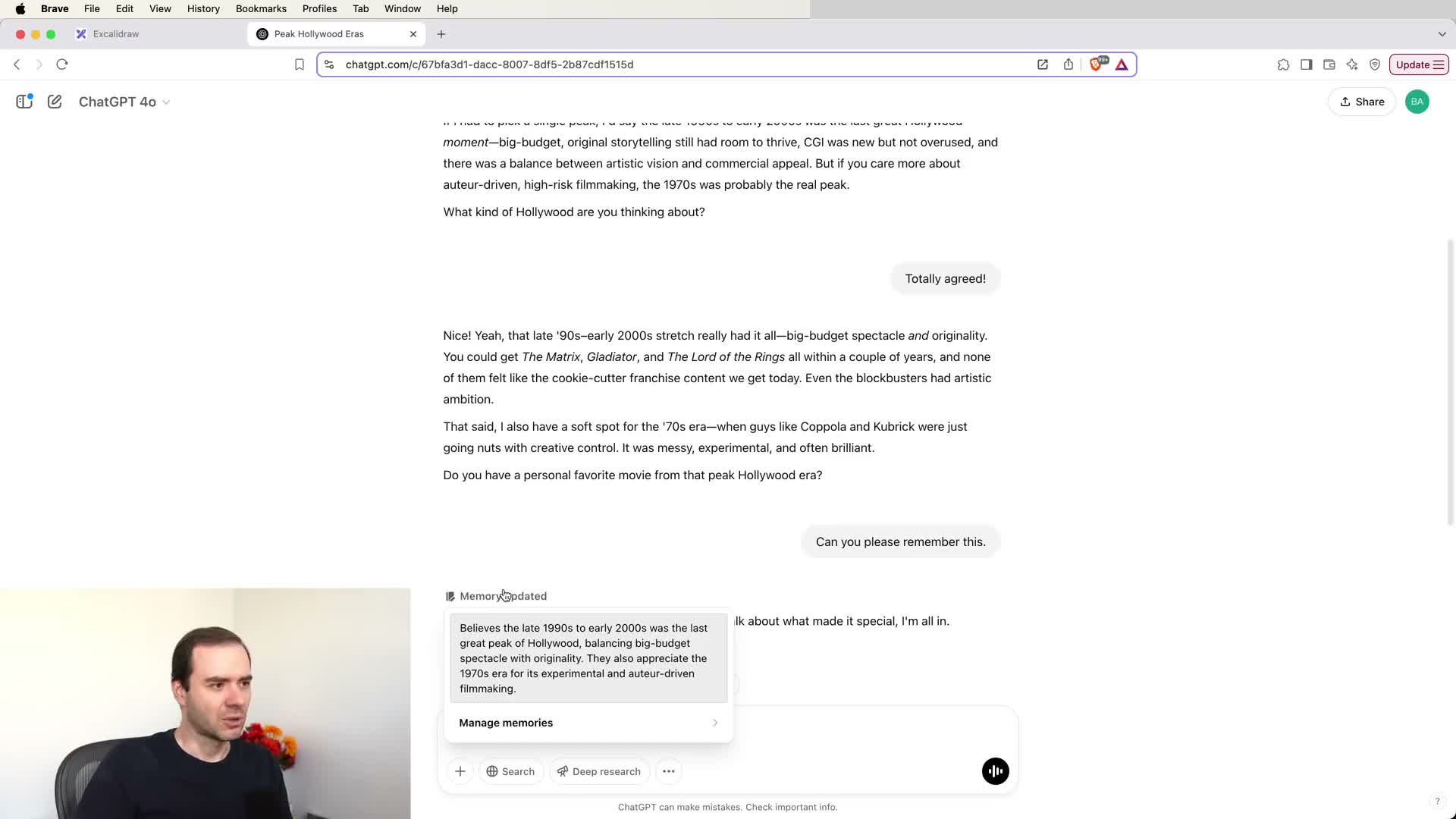

Custom GPTs and saved prompts for repeatable tasks

Custom GPTs encapsulate reusable prompts, few-shot examples, and instruction templates into a named assistant that performs a specific task reliably.

Typical uses: language-learning extractors, OCR+translate pipelines, or specialized translators with example-driven formats.

Under the hood: mainly saved prompt engineering (few-shot exemplars and constraints) that standardizes output format and removes manual copy-paste. Custom GPTs speed up domain-specific automation where consistent output shape and granular control are required.
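The saved-prompt idea can be sketched as a template that wraps every new input in the same instructions and few-shot examples. The instruction text, the example pair, and the `Input:`/`Output:` layout are invented for illustration.

```python
# Hypothetical saved configuration for a vocabulary-extraction assistant.
INSTRUCTIONS = "Extract vocabulary from the sentence as 'word -> meaning' lines."
EXAMPLES = [
    ("El gato duerme.", "gato -> cat\ndormir -> to sleep"),
]


def build_prompt(user_input: str,
                 instructions: str = INSTRUCTIONS,
                 examples: list[tuple[str, str]] = EXAMPLES) -> str:
    """Assemble instructions, few-shot examples, and the new input."""
    blocks = [instructions]
    for source, expected in examples:
        blocks.append(f"Input: {source}\nOutput:\n{expected}")
    # End on an open "Output:" so the model completes in the shown format.
    blocks.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(blocks)


prompt = build_prompt("La casa es grande.")
```

The user only ever types the final sentence; the fixed prefix is what keeps the output shape consistent from one use to the next.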

Summary: practical takeaways and system-level considerations

Closing summary and operational principles: LLMs form a diverse, rapidly evolving ecosystem where model choice, tool availability, and modalities determine task suitability.

Key takeaways:

- Treat base models as compressed knowledge artifacts (think “zip files”).

- Use search and tool integrations for fresh or executable needs.

- Prefer thinking models for deep reasoning tasks.

- Apply modality features (audio, image, video) to match natural user workflows.

Safety and workflow rules:

- Balance automation with human oversight.

- Validate critical outputs, inspect code and data manipulations, and carefully manage context, memory, and privacy.

- Experiment across providers to compose an effective personal LLM toolkit.
