Agent 03 - Stanford Webinar - Agentic AI - A Progression of Language Model Usage

- Introduction and outline of the talk

- Definition of a language model as next-token prediction

- Language model training stages: pre-training and post-training

- Instruction dataset template and supervised fine-tuning

- Deployment options: cloud APIs and local hosting

- Prompting as critical pre-processing and input design

- Prompt best practices: clear instructions and few-shot examples

- Providing relevant context and retrieval-augmented templates

- Encouraging reasoning via chain-of-thought and ‘time to think’ prompts

- Decomposing complex tasks into sequential stages

- Logging, tracing, and automated evaluation for development

- Prompt routing to specialized handlers and models

- Fine-tuning data requirements depend on task complexity

- Common limitations of pre-trained LMs

- Retrieval-augmented generation (RAG) and indexing workflow

- Tool usage and function-calling to access external capabilities

- Agentic language models: interaction with environment and tools

- Reasoning plus action (ReAct) as a pattern for agent behavior

- Customer-support agent example illustrating agentic workflow

- Iterative agent workflows for research and software assistance

- Agentic patterns enable more complex task execution with the same models

- Representative real-world applications of agentic AI

- Design patterns for agentic systems: planning, reflection, tools, multi-agent

- Reflection pattern and its application to code refactoring

- Tool usage, multi-agent collaboration, and persona-based agents

- Summary: agentic usage extends traditional LM practices

- Evaluating agents: beyond single-shot LLM judgment

- Guidance for augmenting agents for specific applications

- Mitigating hallucinations and implementing guardrails

- Getting started: playgrounds, APIs, and incremental experimentation

- Resources and following experts to stay current

- Closing remarks and thanks

Introduction and outline of the talk

Provides an overview of the presentation objectives and structure, introducing agentic AI as a progression of language model usage and summarizing planned topics including model overview, common limitations, mitigation methods, and agentic design patterns.

- Establishes the scope for subsequent technical discussion and situates agentic approaches as extensions to standard LM applications.

- Clarifies the talk flow: move from foundational definitions → practical patterns and evaluation → real-world use cases.

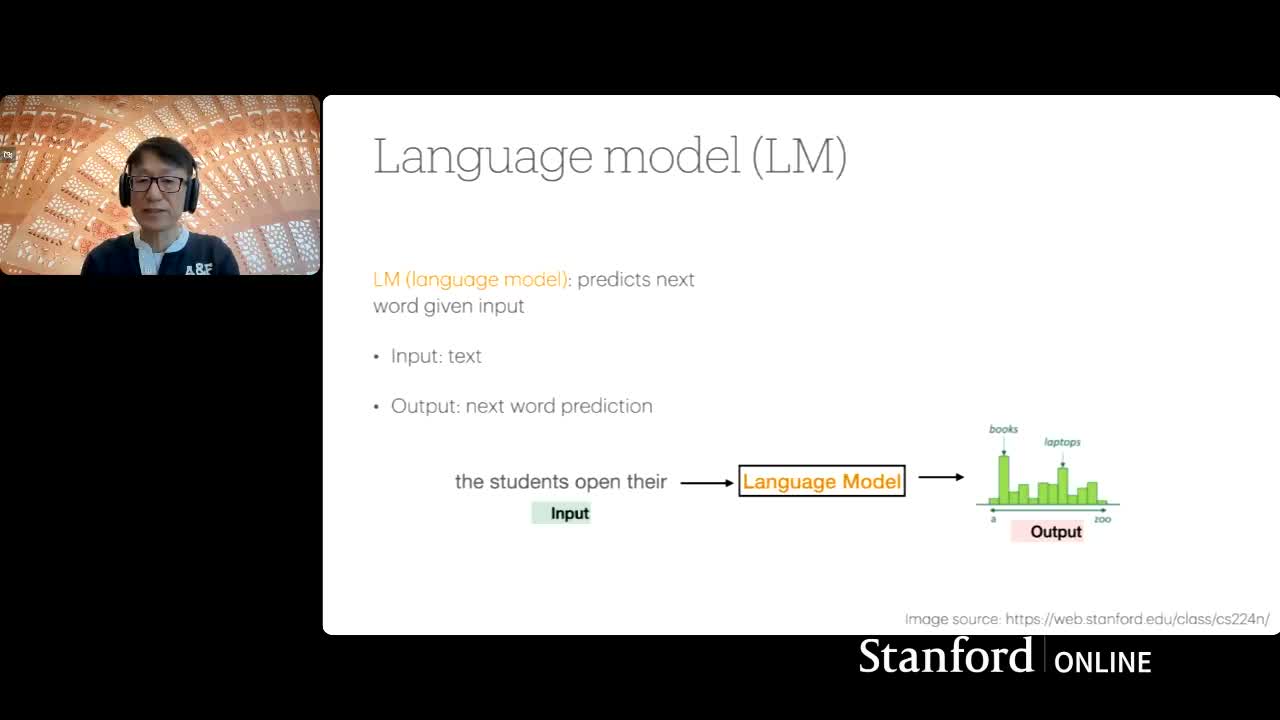

Definition of a language model as next-token prediction

Language model — a statistical machine-learning model that predicts the next token (or word) given preceding text, producing a probability distribution over the vocabulary for each next position.

- Supports autoregressive generation by repeatedly sampling or selecting the highest-probability token and feeding it back as input.

-

Large-scale pretraining on corpora yields strong priors about word sequences and common completions, which underlies much downstream performance.

Language model training stages: pre-training and post-training

Two-stage training pipeline in modern LLM development:

-

Pre-training

- Large-scale self-supervised training (next-token objective) on vast text corpora.

- Builds broad statistical knowledge and fluency.

-

Post-training adaptations

- Instruction tuning reshapes behavior toward helpful, instruction-following outputs using supervised input-output pairs.

-

Reinforcement Learning from Human Feedback (RLHF) refines alignment to human preferences via reward models and policy optimization, improving safety and usability.

Instruction dataset template and supervised fine-tuning

Supervised fine-tuning with templated instructions — using datasets that pair explicit instruction fields with expected outputs to train response generation conditioned on the instruction.

- The model is trained to map instruction + context → desired response distribution, which improves reliability in downstream apps.

-

Dataset design and example selection directly influence stylistic and task-specific behaviors, so careful curation matters.

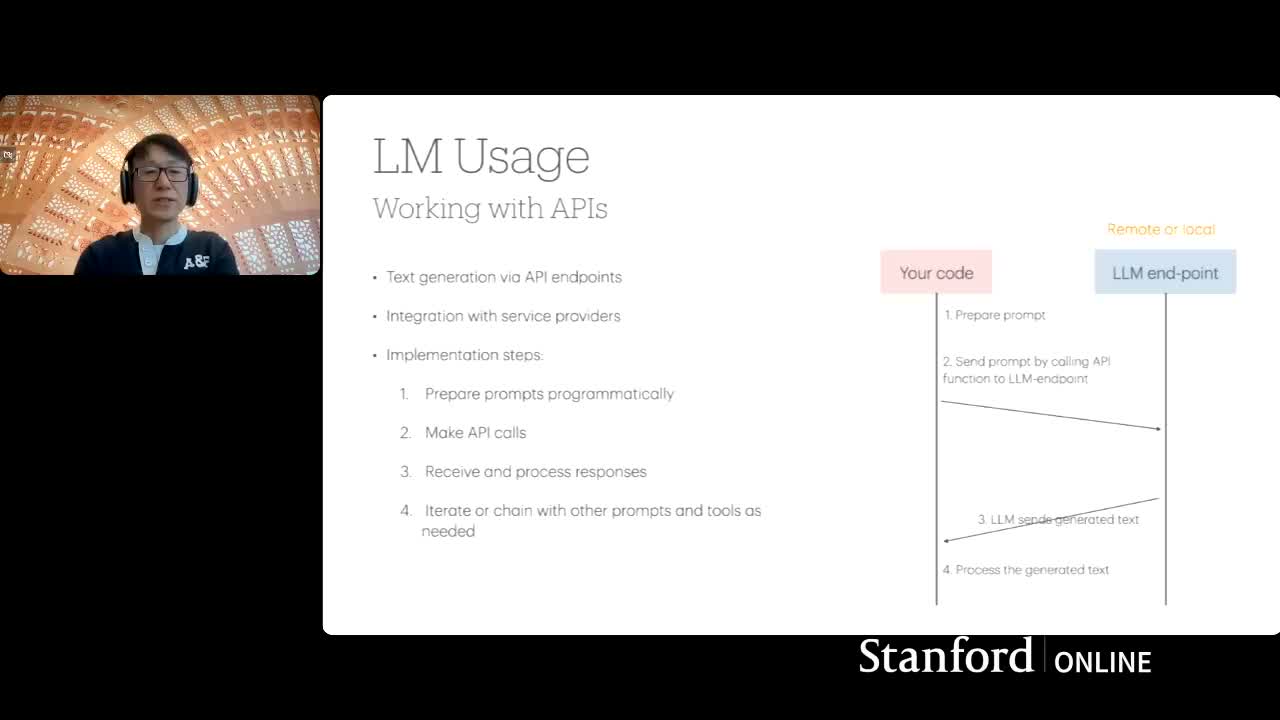

Deployment options: cloud APIs and local hosting

Deployment strategies for integrating LMs into applications:

-

Cloud / API hosting

- Serialize prompts → send to provider endpoint → receive generated outputs.

- Easy scaling and model updates; often higher recurring cost and data transmission to third parties.

-

Local / edge hosting (for smaller models)

- Reduced latency, greater data control and privacy; requires compatible compute and ops effort.

- Reduced latency, greater data control and privacy; requires compatible compute and ops effort.

- Tradeoffs: cost, performance/latency, privacy, and operational complexity drive the deployment choice.

Prompting as critical pre-processing and input design

Prompt engineering as a core engineering task — designing free-form natural language inputs to elicit reliable, relevant outputs.

- Effective prompts reduce ambiguity and failure modes by specifying: task, formatting, examples, constraints, and desired output style.

- Prompt design affects latency, token usage, and downstream parsing logic; good prompts turn an unconstrained generator into a predictable component.

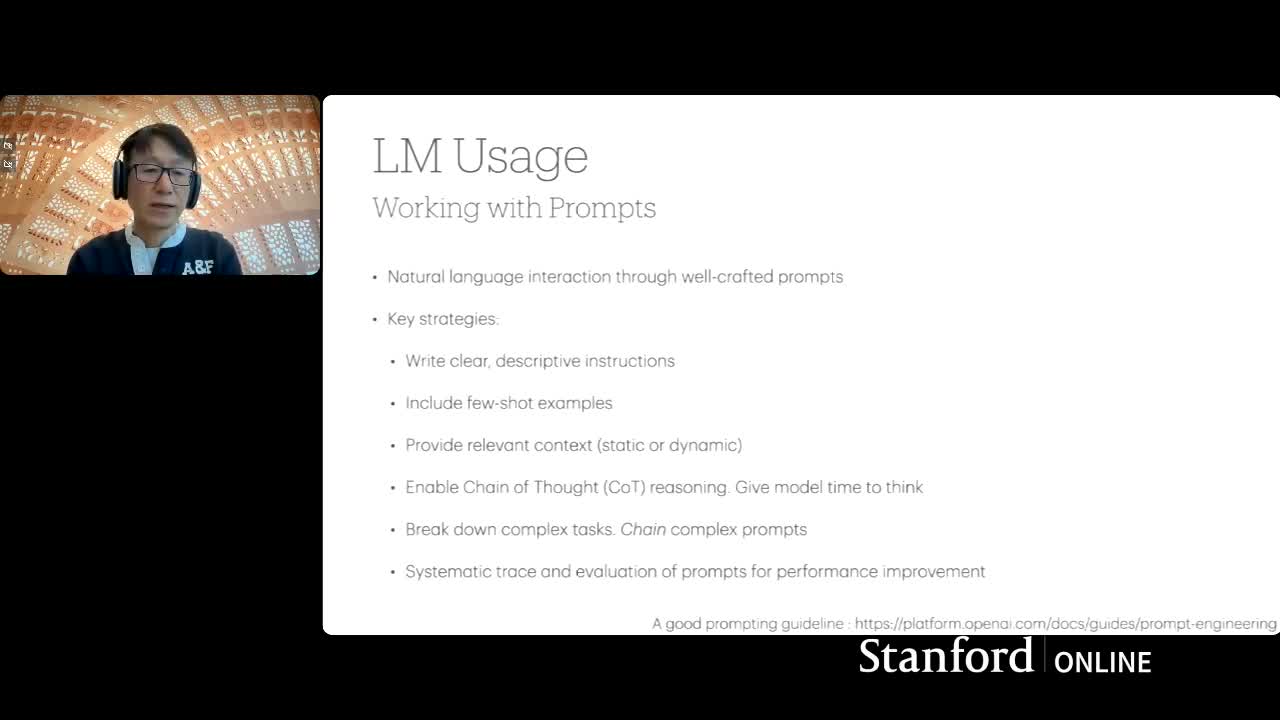

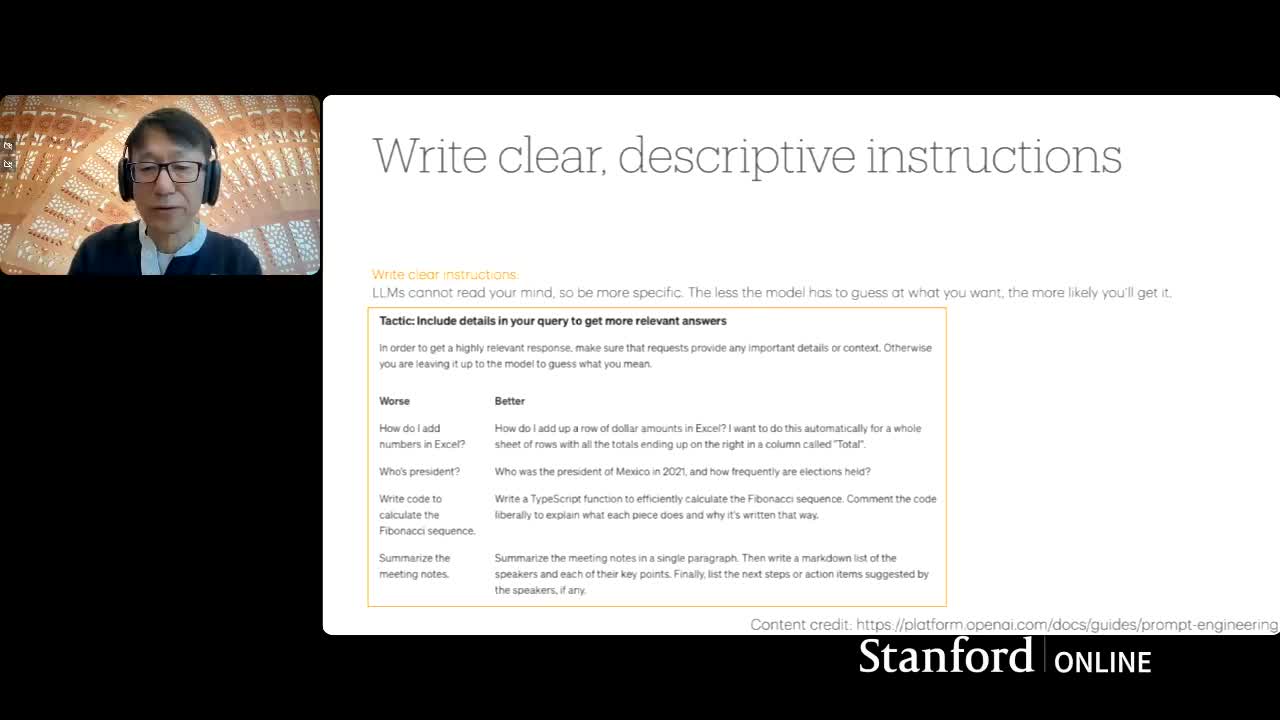

Prompt best practices: clear instructions and few-shot examples

Explicit instructions & few-shot examples — practical prompt techniques to constrain model behavior:

- Write clear, descriptive instructions to reduce the model’s need to infer user intent.

- Include few-shot examples (input-output pairs) to condition consistent style and structure.

- These practices push the model’s response distribution toward the intended format, lowering variance across responses.

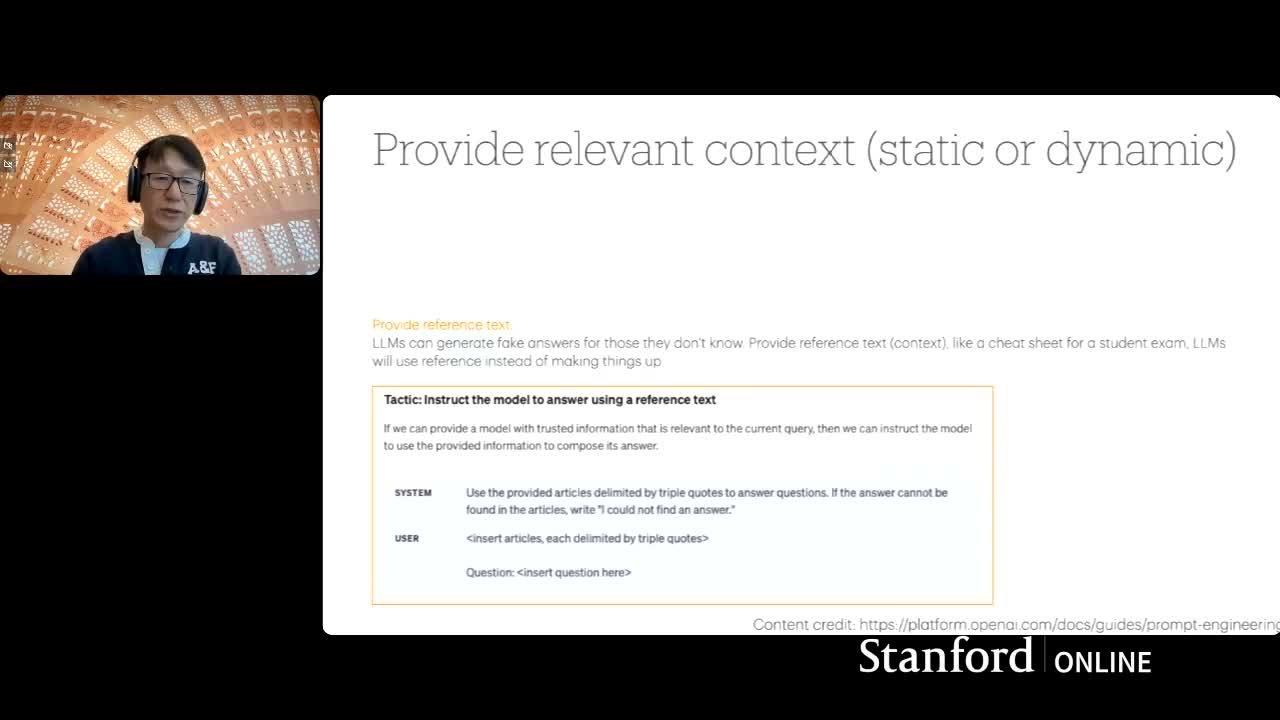

Providing relevant context and retrieval-augmented templates

Grounding with context & Retrieval-Augmented Generation (RAG) — supply context and references in prompts to reduce hallucination:

- Use templates that instruct the model to answer only using provided sources and to declare when no answer is found.

- This pattern enables traceable citations and improves factuality for domain-specific queries.

- RAG workflows allow integration of proprietary or frequently updated content as the model’s evidence base.

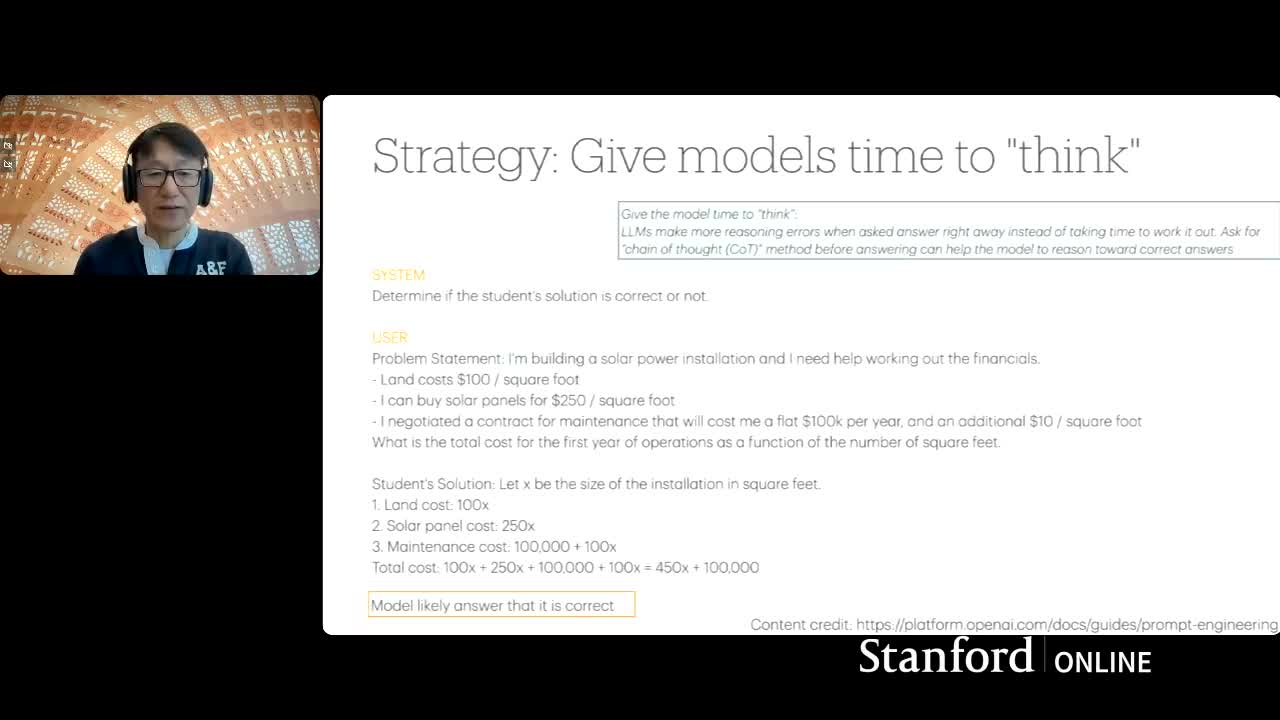

Encouraging reasoning via chain-of-thought and ‘time to think’ prompts

Chain-of-thought / explicit intermediate reasoning — ask the model to produce intermediate steps before a final answer:

- Request the model to “work out” a solution and then conclude to surface internal calculations and attention to details.

- Often improves correctness on multi-step, logical, arithmetic, and proof-style tasks.

- Tradeoff: increased token usage and latency for greater reliability in reasoning-intensive tasks.

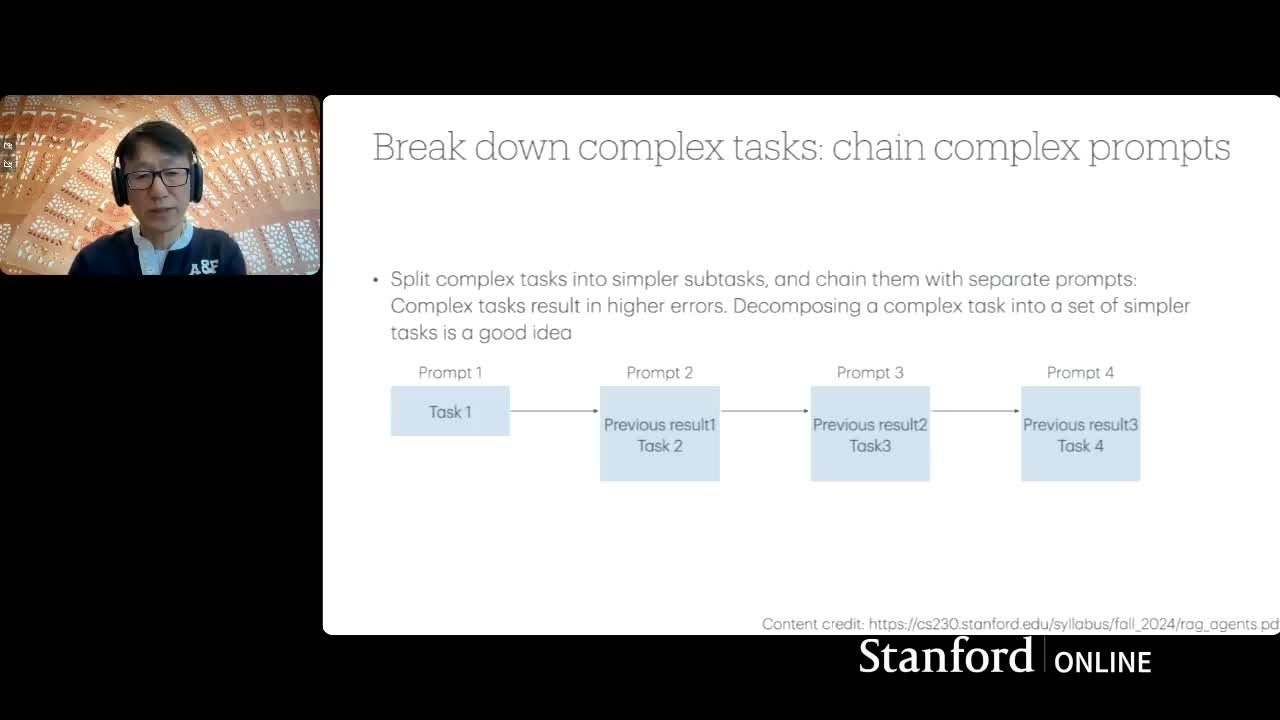

Decomposing complex tasks into sequential stages

Chaining prompts / decomposition — break complex tasks into a sequence of smaller prompts:

- Decompose the task into focused subtasks.

- For each subtask, call the model with a single clear operation consuming previous outputs.

- Optionally orchestrate the sequence with code or higher-level agents.

- Benefits: improved interpretability, modularity, and error isolation; reduces failure rates relative to monolithic prompts.

Logging, tracing, and automated evaluation for development

Logging & automated evaluation — essential engineering practices for LM-based applications:

- Systematic logging enables debugging, auditing, and tracking as models and prompts evolve.

- Automated evaluation pipelines use curated input–ground-truth pairs and either human raters or LM-based judges to score outputs.

- Continuous evaluation supports reproducible comparisons across model versions and safe migrations when upstream models change.

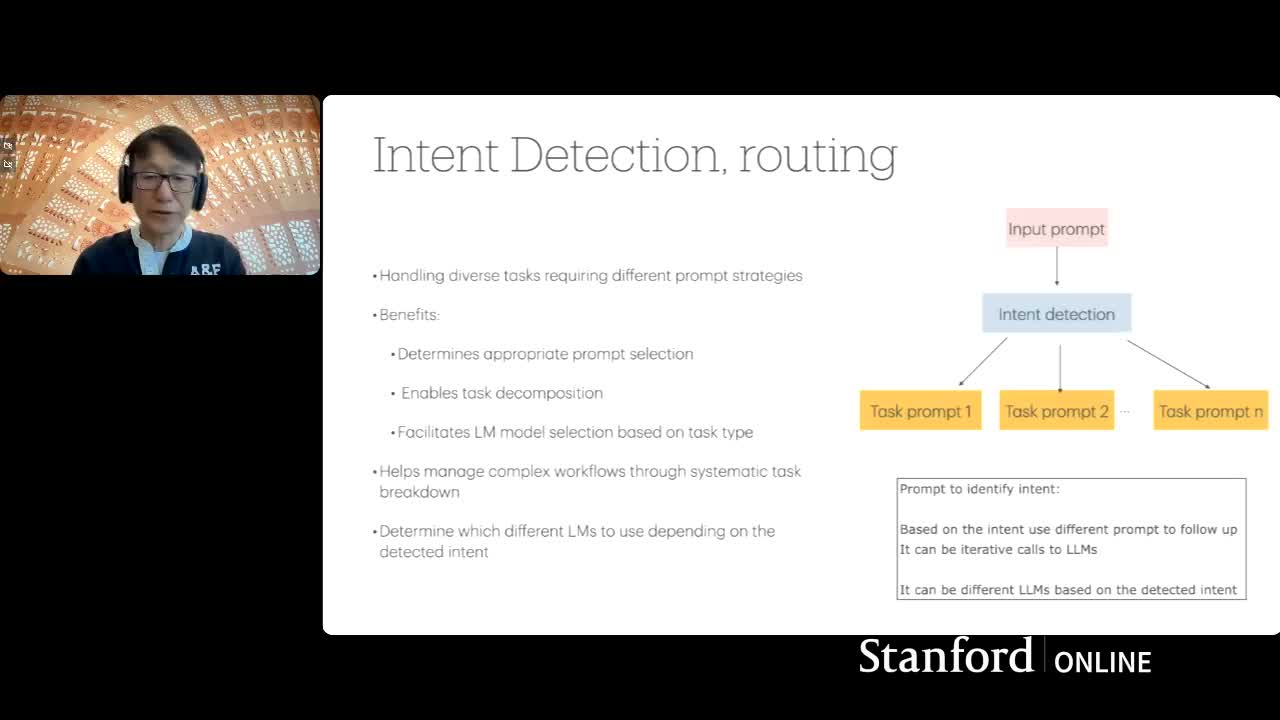

Prompt routing to specialized handlers and models

Prompt routing — classify incoming queries by intent and dispatch to specialized handlers or appropriately sized models:

- A router reduces cost by avoiding expensive calls for simple queries and improves relevance by selecting tailored handlers.

- Supports hybrid systems combining lightweight classifiers/heuristics with larger LMs for complex needs.

Fine-tuning data requirements depend on task complexity

Fine-tuning data strategy — practical guidance on dataset sizing and iteration:

- Start with small, focused datasets (tens to hundreds of examples) to validate behavior before scaling.

- Use iterative experimentation with small supervised pairs for rapid feedback.

-

Synthetic augmentation using LMs can expand training data when needed; prioritize pragmatic incremental refinement over large upfront labeling investments.

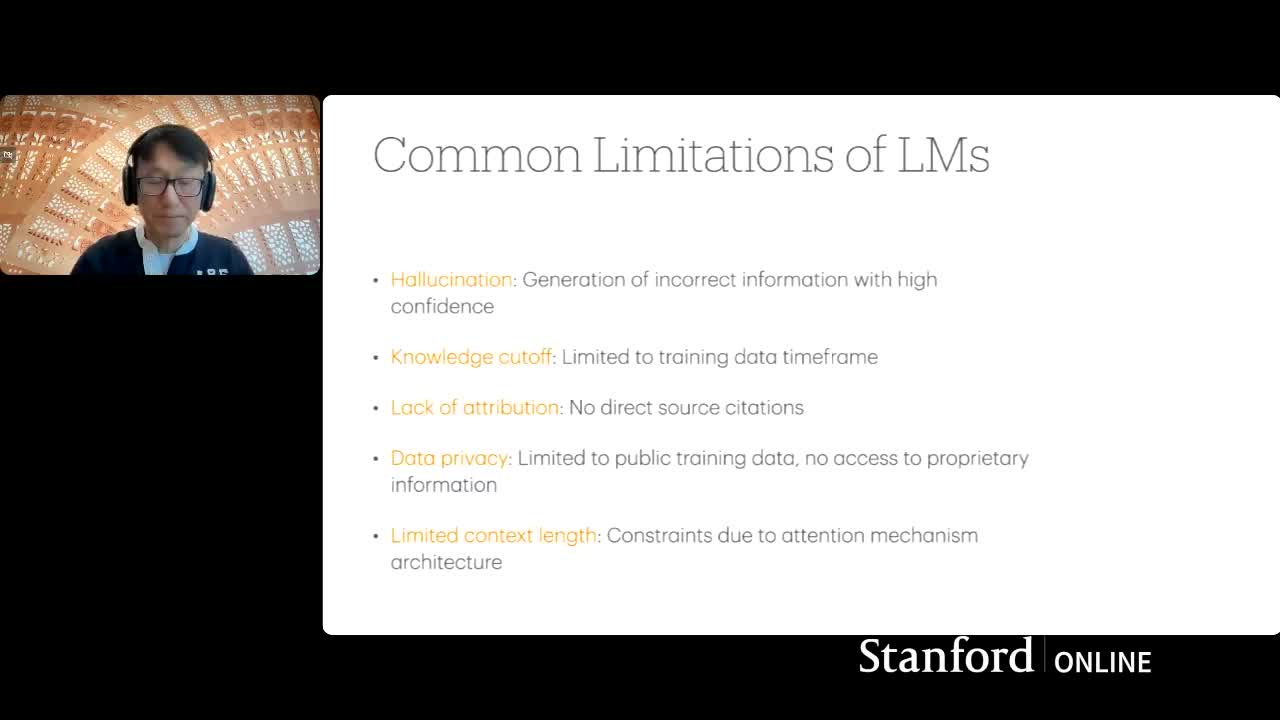

Common limitations of pre-trained LMs

Common LM limitations — typical shortcomings to address:

- Hallucination: fabricated or incorrect outputs.

- Knowledge cutoffs: outdated pretraining data.

- Lack of source attribution.

- Data-privacy gaps for proprietary information.

-

Constrained context windows that trade off length with latency and cost.

- These motivate system-level interventions such as retrieval augmentation, tool integration, and memory architectures for production use.

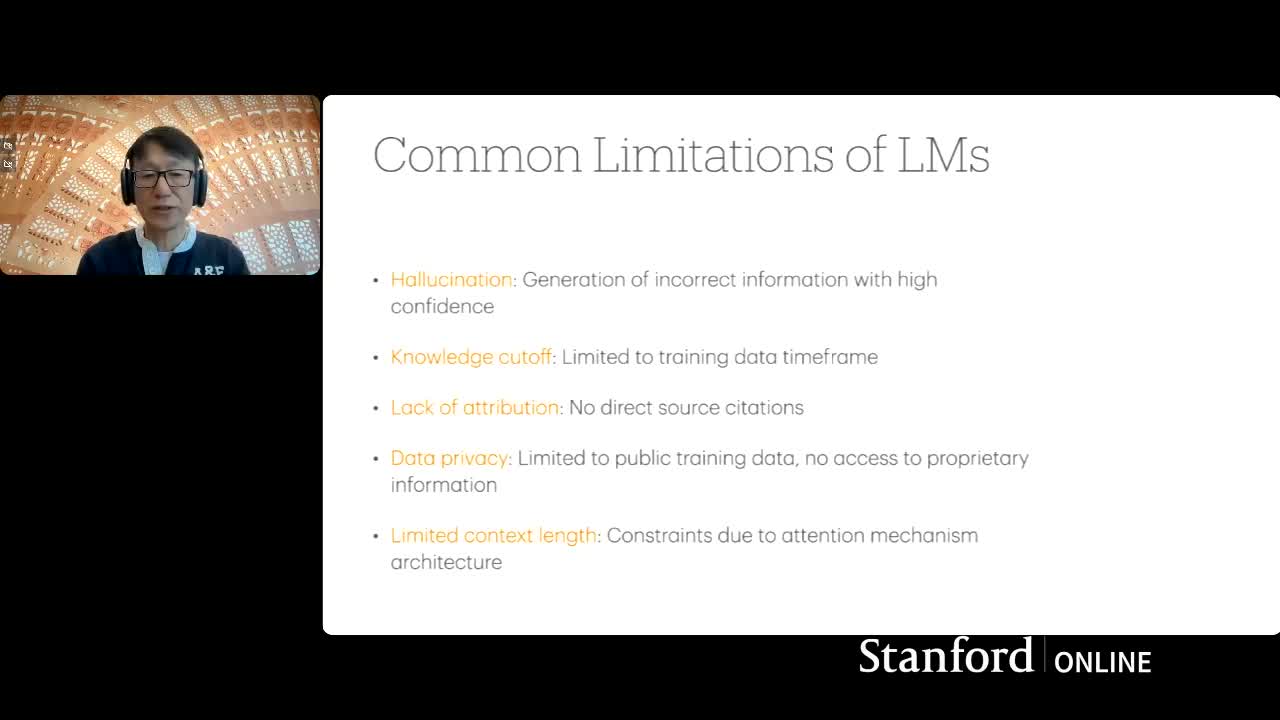

Retrieval-augmented generation (RAG) and indexing workflow

Retrieval-Augmented Generation (RAG) systems — how they work and variants:

- Pre-index textual corpora by chunking documents and embedding chunks into vector spaces.

- Store embeddings in a vector database for nearest-neighbor retrieval on query embeddings.

-

At query time, retrieve top-K relevant chunks to include as grounded context in the prompt, enabling citation and evidence-based answers.

- Variants: web search augmentation, knowledge-graph retrieval, or other domain-specific retrieval strategies chosen by precision and domain needs.

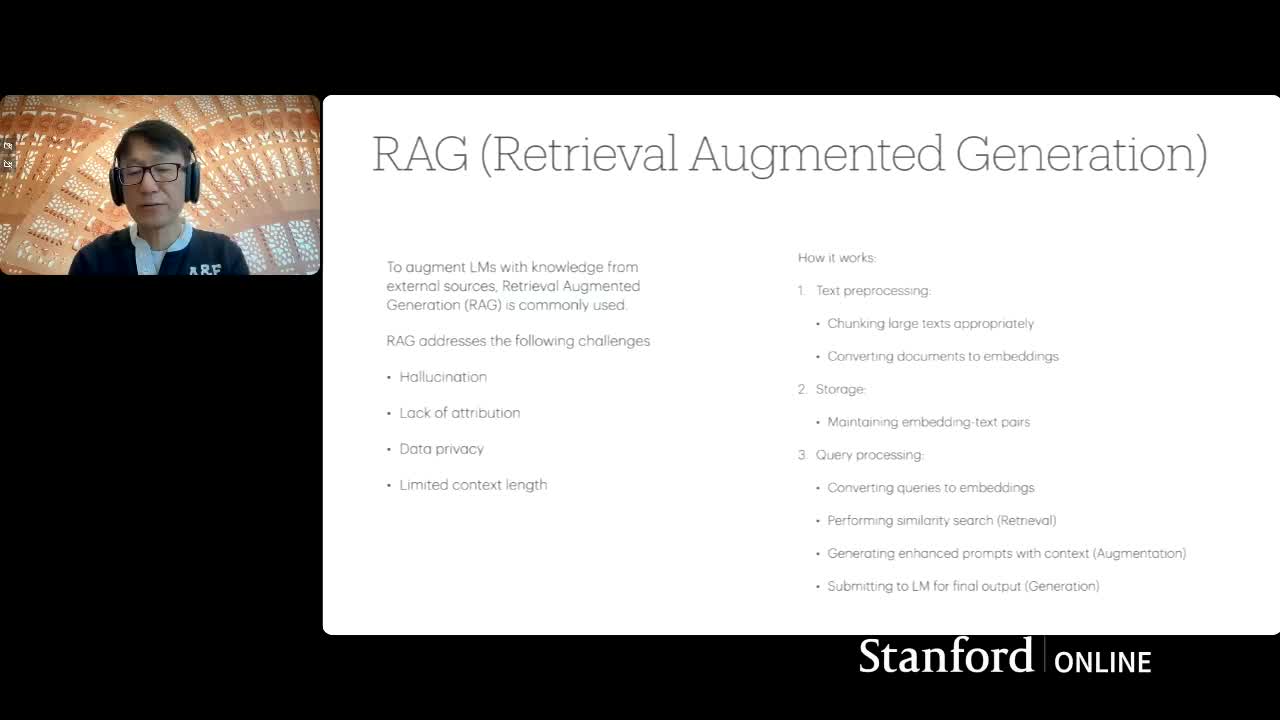

Tool usage and function-calling to access external capabilities

Function-calling / tool invocation patterns — structured outputs that orchestration software executes:

- The LM emits structured calls or API-like outputs that are parsed to invoke external services (e.g., weather APIs) or to run code in sandboxes.

- Enables real-time data access, deterministic computation, and integration with systems of record while keeping a human-friendly interface.

- Orchestration returns results to the LM as observations for final synthesis, closing the loop between reasoning and action.

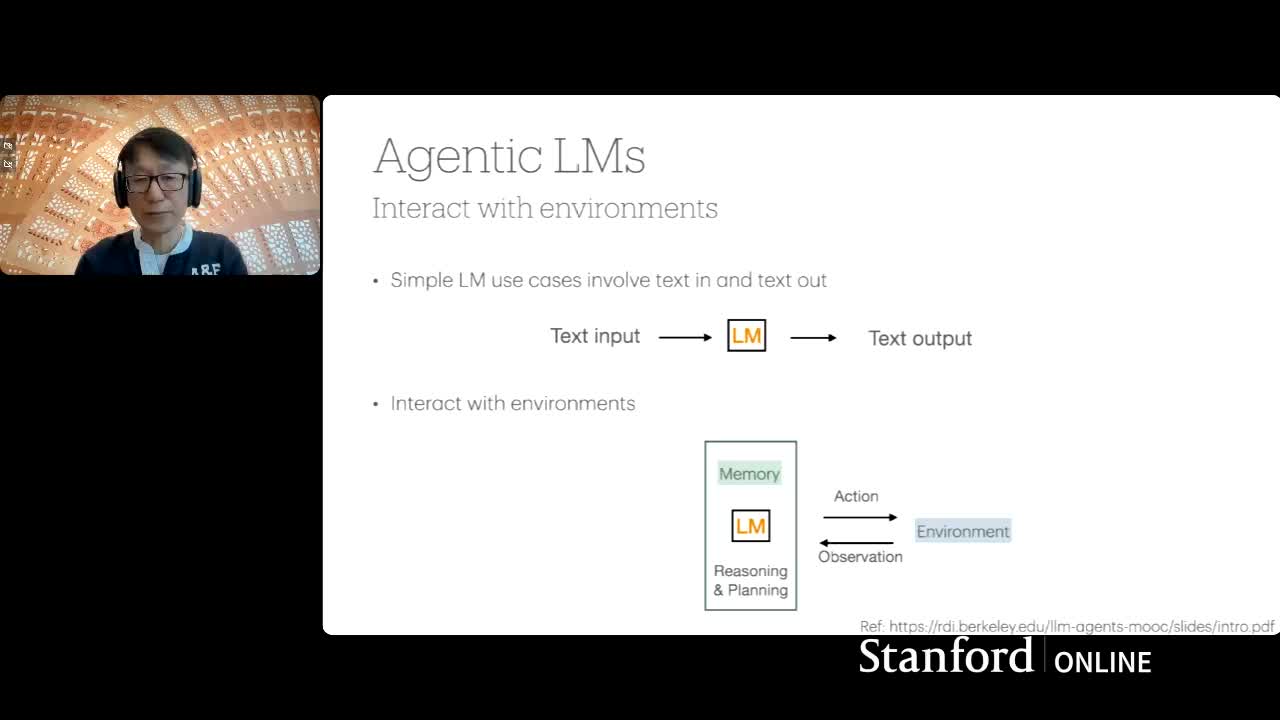

Agentic language models: interaction with environment and tools

Agentic LM usage — systems where a core LM interacts with an external environment via retrievals, tool calls, or executable programs:

- Agentic behavior couples deliberation (reasoning) with action, allowing the system to gather evidence, perform computations, and modify external state.

- Iterative planning and memory incorporation turn a passive text generator into an active agent capable of multi-step, context-aware operations.

Reasoning plus action (ReAct) as a pattern for agent behavior

ReAct paradigm — alternating explicit reasoning steps with concrete actions:

- The model alternates between reasoning (explicit chains of thought) and actions (API calls, searches).

- Planning decomposes tasks into actionable subtasks.

- Memory preserves interim findings and history for future decisions.

- Together these elements enable complex task completion that single-shot generation cannot achieve.

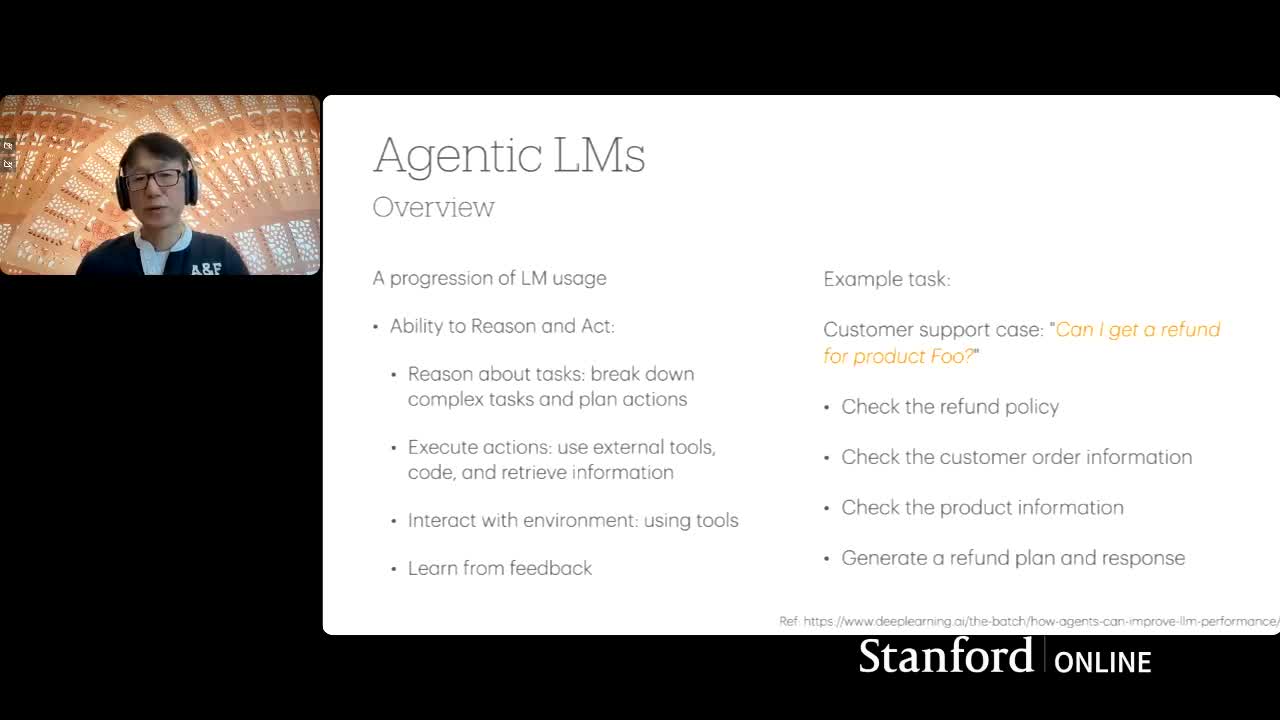

Customer-support agent example illustrating agentic workflow

Refund-request workflow (concrete example) — an agent decomposes the user query into steps and issues API retrievals:

- Check policy — retrieve refund policy and constraints.

- Check customer info — fetch account, order history, and eligibility.

- Check product — validate product details, shipping, and returns.

-

Decide — synthesize evidence and produce a policy-compliant recommendation.

- Each step produces structured calls to retrieval/order systems; the agent synthesizes evidence into a response draft and a follow-up API action for approval or execution.

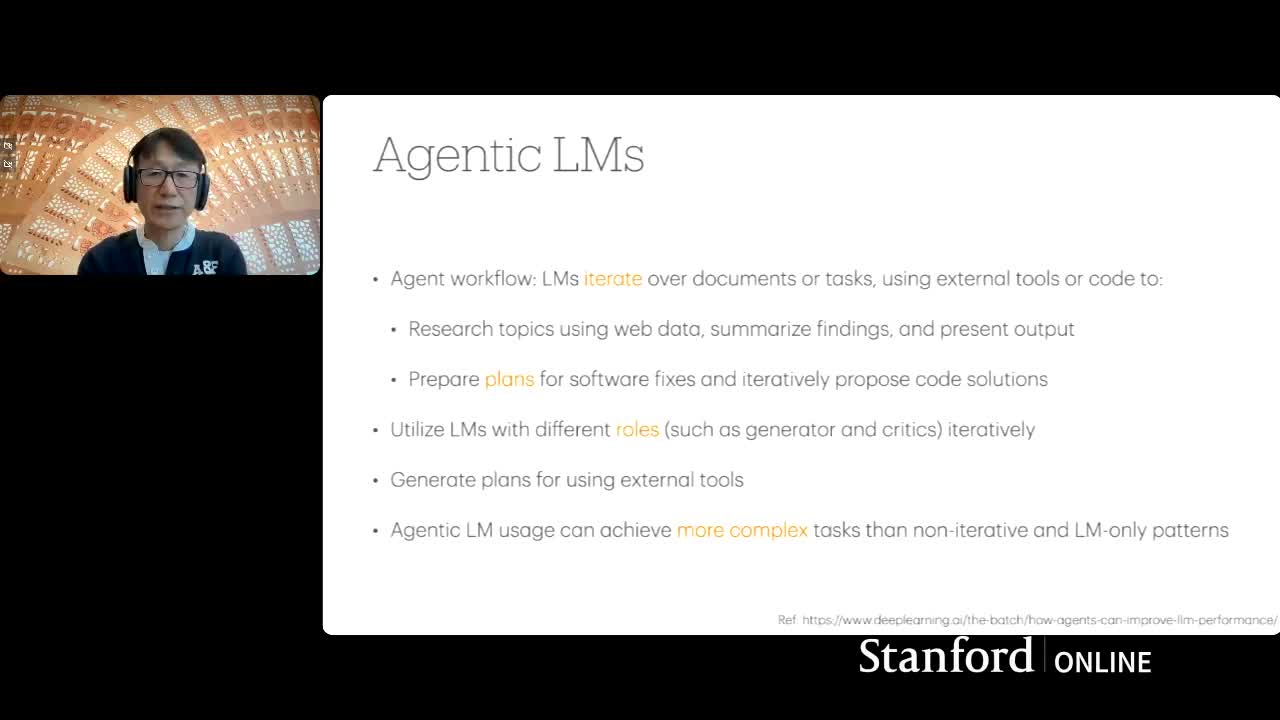

Iterative agent workflows for research and software assistance

Iterative agent workflows — repeated observation-action loops for convergence:

- Use cases: research reports, debugging code, software assistants that identify files, hypothesize fixes, run tests in sandboxes, and iterate until an acceptable patch is produced.

- Workflow: search → summarize → refine → repeat.

- Repeated cycles typically converge on higher-quality solutions than single-pass generation.

Agentic patterns enable more complex task execution with the same models

Why agent workflows expand capability — structuring tasks as agent workflows compensates for LM weaknesses:

- Decomposition, retrieval, and tool use make complex tasks tractable without changing base models.

- Orchestration of focused calls leverages external systems for facts and computation and organizes intermediate results into coherent outputs.

- This raises the ceiling of achievable automation using existing LMs.

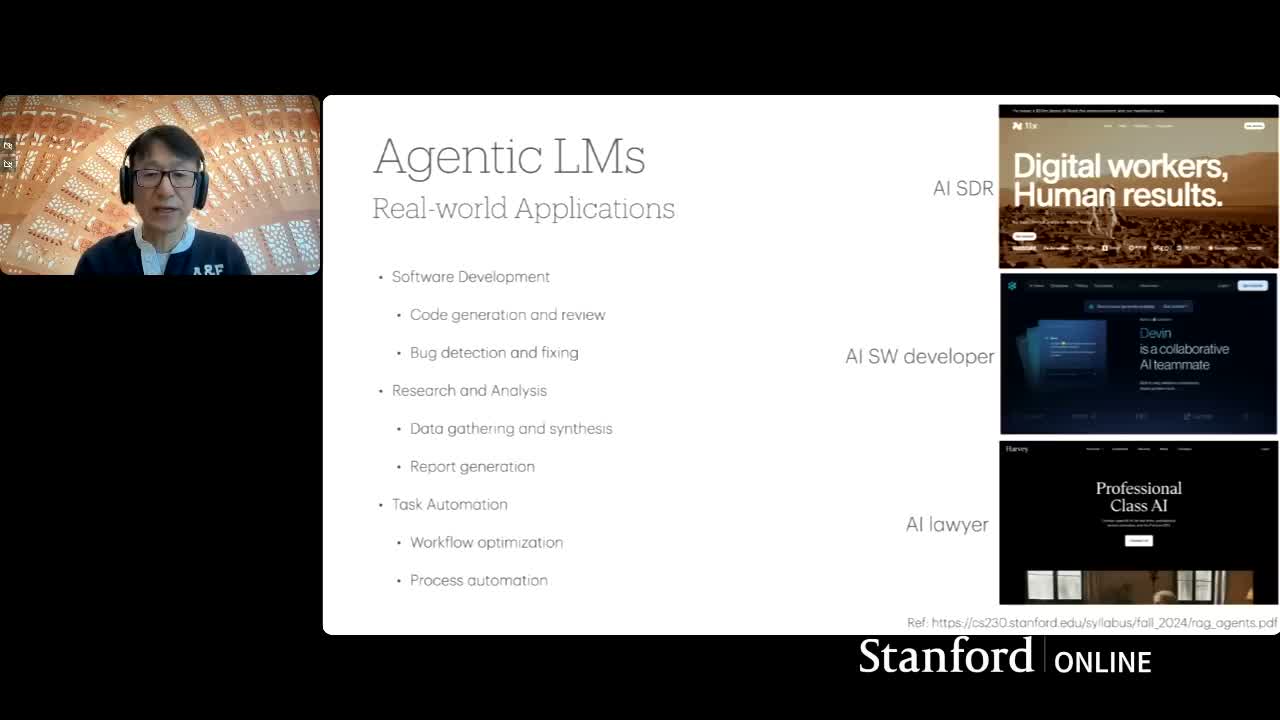

Representative real-world applications of agentic AI

Application areas for agents — primary domains where agentic designs add value:

- Software development: code generation, bug fixing, automated testing, PR creation.

- Research & analysis: information synthesis, summarization, iterative literature review.

-

Business process automation: customer support workflows, billing, approvals.

- Benefits across domains: iterative reasoning, external data integration, action capabilities, traceability, and modular architectures.

Design patterns for agentic systems: planning, reflection, tools, multi-agent

Core agentic design patterns — recurring building blocks:

- Planning: decompose tasks into actionable subtasks.

- Reflection: critique and improve outputs via meta-evaluation.

- Tool usage: access external capabilities or deterministic compute.

-

Multi-agent collaboration: coordinate specialized agents to parallelize and specialize work.

Reflection pattern and its application to code refactoring

Two-step reflection (self-critique + refactor) — an iterative improvement pattern:

- Audit / critique — instruct the model to review outputs and list constructive feedback.

-

Refactor — prompt the model to produce an improved version using that feedback.

- Leverages the model’s evaluative capabilities to produce higher-quality refactorings than single-pass generation; applicable to many content-improvement tasks.

Tool usage, multi-agent collaboration, and persona-based agents

Persona-based agents & orchestrator — tool usage and multi-agent coordination:

- Implement agents as distinct personas or prompts dedicated to specific tasks (e.g., climate, lighting, security).

- A central orchestrator routes requests, resolves conflicts, and coordinates actions.

- Persona separation simplifies reasoning scope per agent and supports heterogeneous model selection and specialized tool interfaces.

Summary: agentic usage extends traditional LM practices

Conclusion: agentic as a progression of LM engineering — how agentic builds on existing practices:

- Retains prompting best practices while adding retrieval, tool integration, multi-step workflows, and orchestration.

- Treats the LM as a reasoning core augmented by external actions and memory to enable more sophisticated applications.

- Adoption requires additional infrastructure for retrieval, tooling, evaluation, and safety but leverages existing model capabilities.

Evaluating agents: beyond single-shot LLM judgment

Evaluation strategies for agentic systems — stronger, multi-stage judging patterns:

- Extend single-shot LLM judging with agentic judging that uses reflection and hierarchical critique (e.g., junior-level assessment followed by senior-level re-evaluation).

- Iterative, multi-stage judging flows often yield more robust quality signals and can be automated within the agent framework.

- Reliable evaluation is critical for model selection, prompt tuning, and safe deployment.

Guidance for augmenting agents for specific applications

Start simple, iterate toward agents — pragmatic development advice:

- Begin with the simplest LM approach that meets requirements.

- Experiment with iterative LM calls, small dataset fine-tuning, and prompt engineering before investing in full agentic infrastructure.

- Incremental augmentation (add retrieval, tool calls) and small labeled samples validate directions prior to large-scale efforts.

Mitigating hallucinations and implementing guardrails

Layered safety & guardrails — reducing hallucination and undesirable outputs:

- Use output filtering via classifiers, lightweight LM-based validators, and rule-based checks.

- Apply input-stage sanitization to detect risky queries.

- Enterprise measures: stricter input validation, approval workflows, sandboxed execution, and monitoring.

- Continuous monitoring and domain-specific validation are essential because probabilistic generation cannot be fully eliminated.

Getting started: playgrounds, APIs, and incremental experimentation

Pragmatic onboarding path — a three-step approach:

- Experiment in a provider playground to iterate on prompts quickly.

- Integrate via simple API calls from code to understand behavior and operational costs.

-

Decide whether to adopt libraries or build custom scaffolding based on learnings.

- This incremental path prioritizes rapid feedback and informed decisions about fine-tuning and orchestration investments.

Resources and following experts to stay current

Staying up-to-date — tracking experts, courses, and community resources:

- Follow domain experts, curated course materials, and community resources (blogs, social media, video channels, academic/industry courses).

- Prefer a small set of reputable sources and supplement with targeted deep dives to filter signal from noise.

- Regularly update tooling and evaluation knowledge as the field evolves quickly.

Closing remarks and thanks

Closing remarks — wrap-up and call to action:

- Reiterate the rapid pace of progress in LLMs and agentic AI.

- Encourage continued experimentation, iteration, and community engagement as practical takeaways.

- Thank participants and invite further questions and exploration.

Enjoy Reading This Article?

Here are some more articles you might like to read next: