Agent 06 - Building Agents with MCP by Mahesh Murag (Anthropic)

- Introduction and session overview

- Motivation: models require context

- Precedent protocols (APIs and LSP) and origin of MCP

- MCP conceptual model and primary interfaces

- State of the ecosystem before MCP: fragmentation

- MCP client-server architecture and examples

- Value proposition for developers, tool providers, and enterprises

- Early adoption and community contributions

- High-level build model for MCP interactions

- Tools: model-controlled capabilities

- Resources: application-controlled data artifacts

- Prompts: user-controlled templates and command surfaces

- Q&A: separation of model-controlled tools vs application-controlled resources

- Q&A: exposing vector databases and tool granularity

- Q&A: MCP and agent frameworks (compatibility and adapters)

- Q&A: whether MCP replaces agent frameworks

- Q&A: dynamic prompts and resource notifications vs tools

- Demo overview: Claude for Desktop as MCP client

Introduction and session overview

The presenter opens the workshop by introducing the Model Context Protocol (MCP) and outlining the session's structure and goals.

- Goals include explaining the MCP philosophy, common adoption patterns, how agents use MCP, and the roadmap for the protocol.

- The talk is framed as an interactive session that invites questions and discussion.

- MCP is presented as a practical protocol designed to standardize how models access external context, tools, and data.

This orientation sets the technical material that follows in the context of product and developer adoption. The discussion moves from motivation to practical patterns to implementations.

Motivation: models require context

Models are only as effective as the context provided to them.

Modern AI applications benefit when models have direct hooks into relevant data sources and tools rather than relying on manual copy‑paste of context.

- The move from manual context injection to programmatic, integrated context access motivates a standard protocol.

- That protocol must enable secure, composable, and reusable integrations between models, applications, tools, and data stores.

The motivation for MCP is to reduce duplicated integration work and enable richer, more personalized AI experiences.

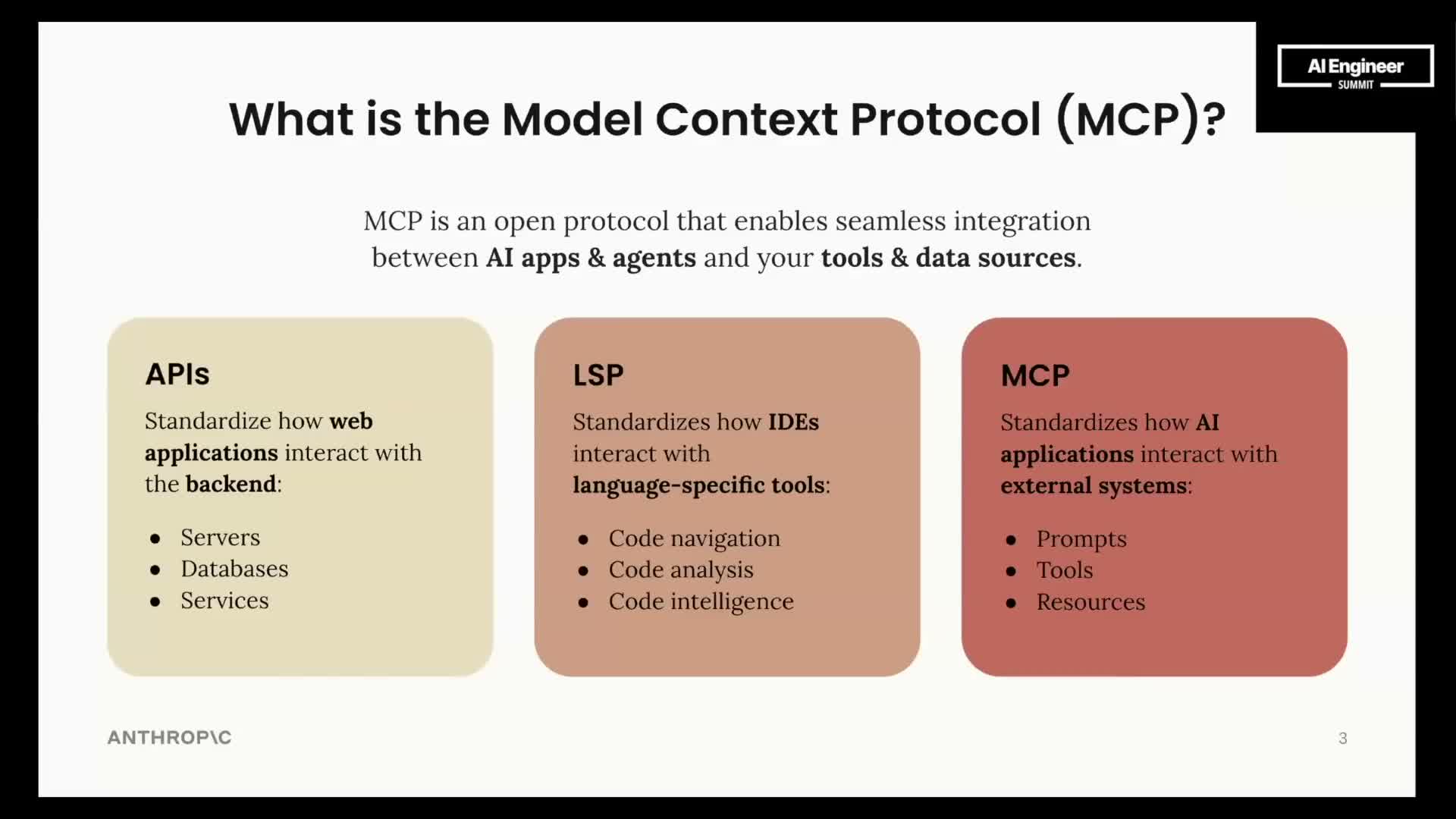

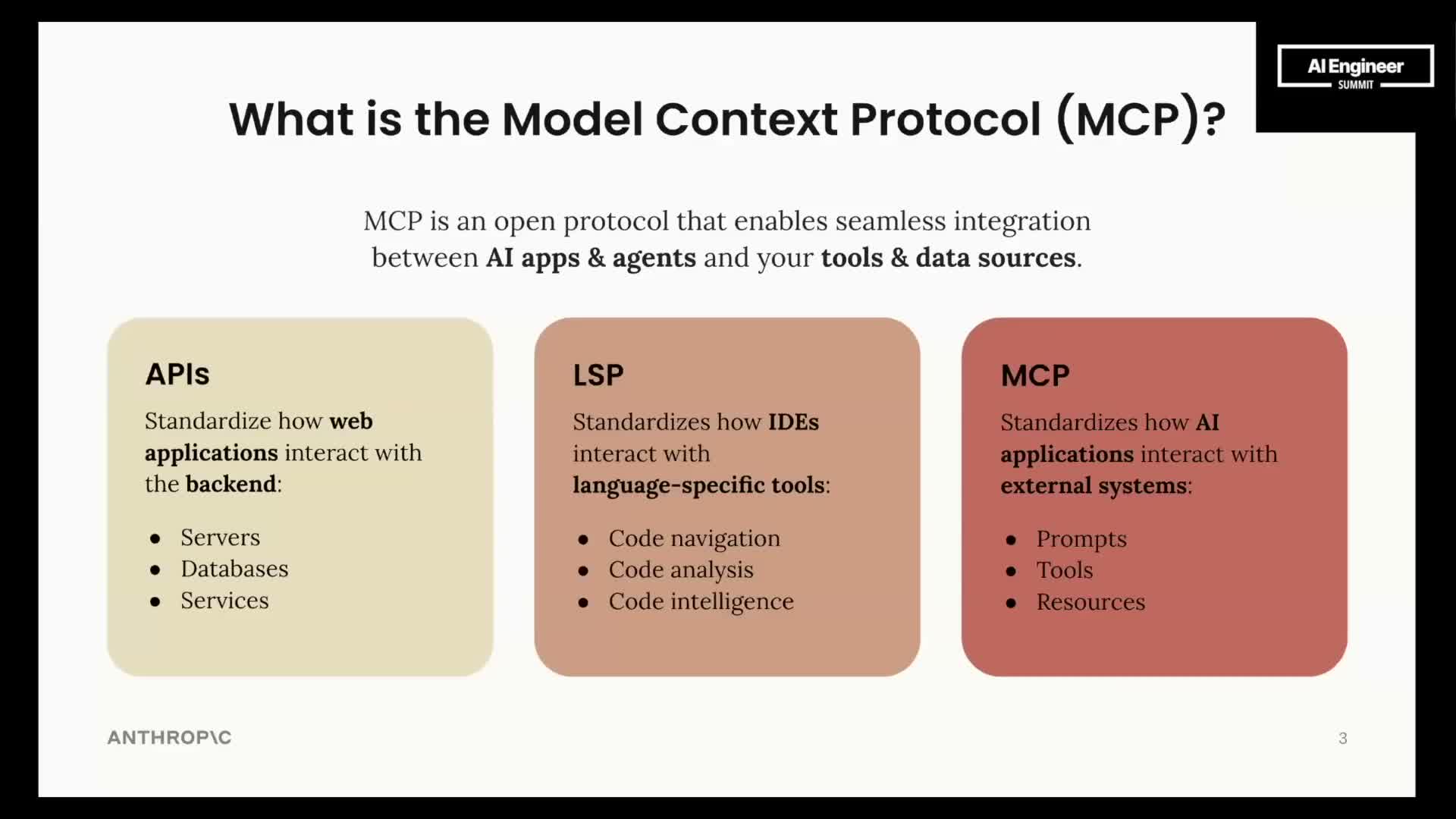

Precedent protocols (APIs and LSP) and origin of MCP

Historical precedents shaped MCP’s design:

- APIs standardized communication between front‑ends and back‑ends.

- The Language Server Protocol (LSP) standardized how IDEs interact with language tooling.

By analogy, MCP aims to standardize how models access external systems.

- Like LSP, MCP provides a consistent interface so any MCP‑compatible client can interact with any MCP‑compatible server.

- This enables reuse of integrations across clients and positions MCP as the next‑layer standard to make model‑oriented integrations portable and discoverable.

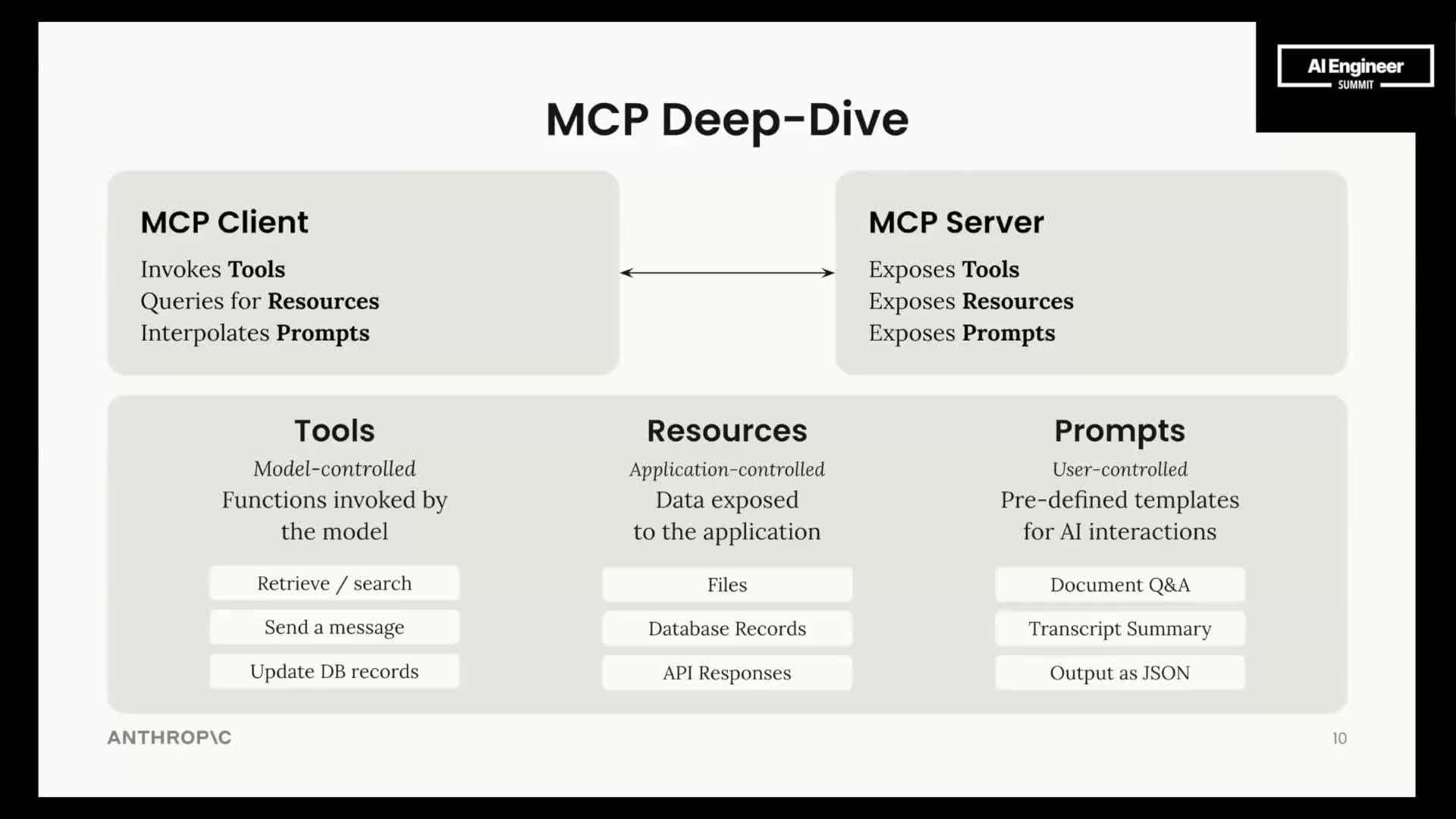

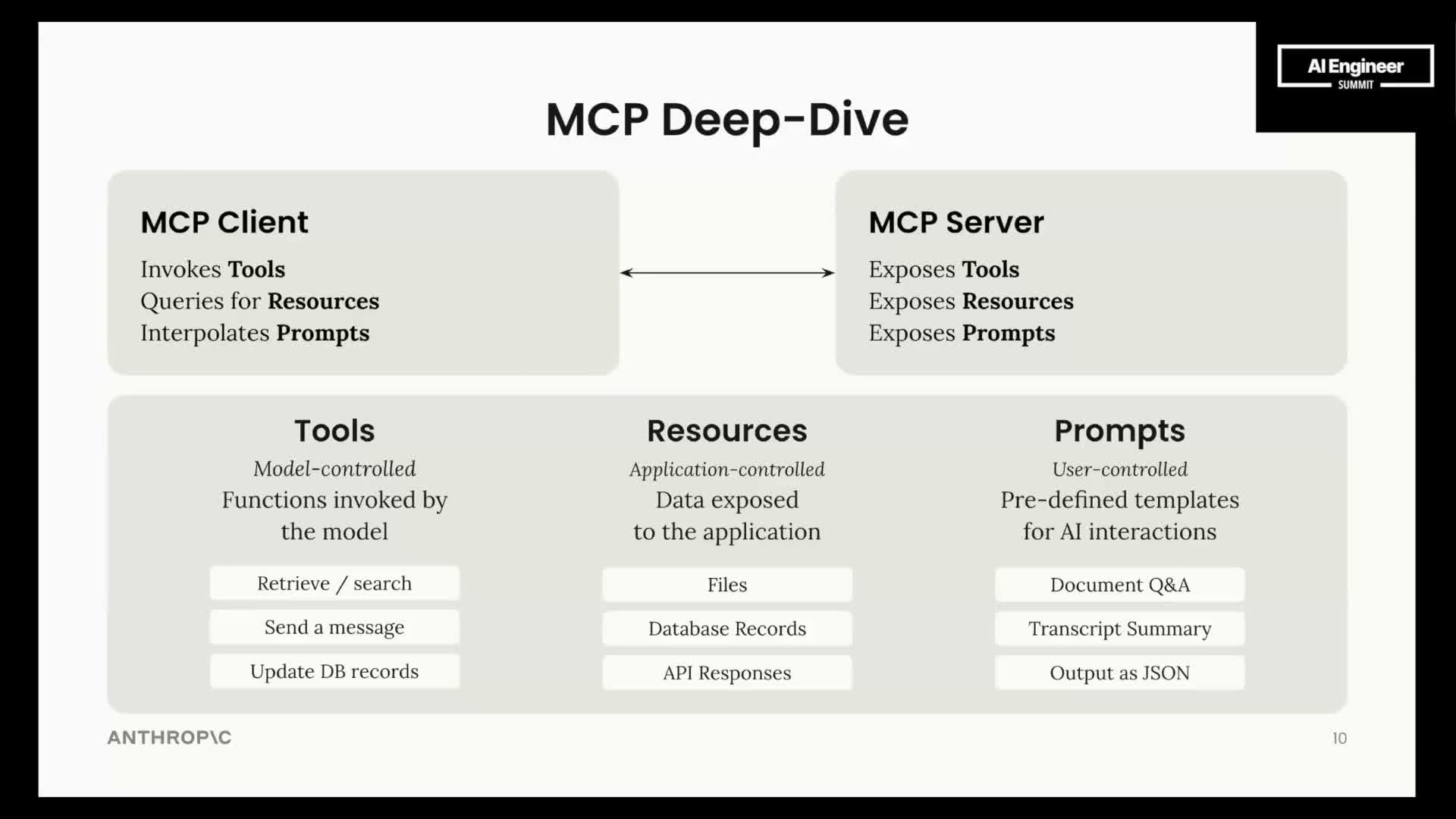

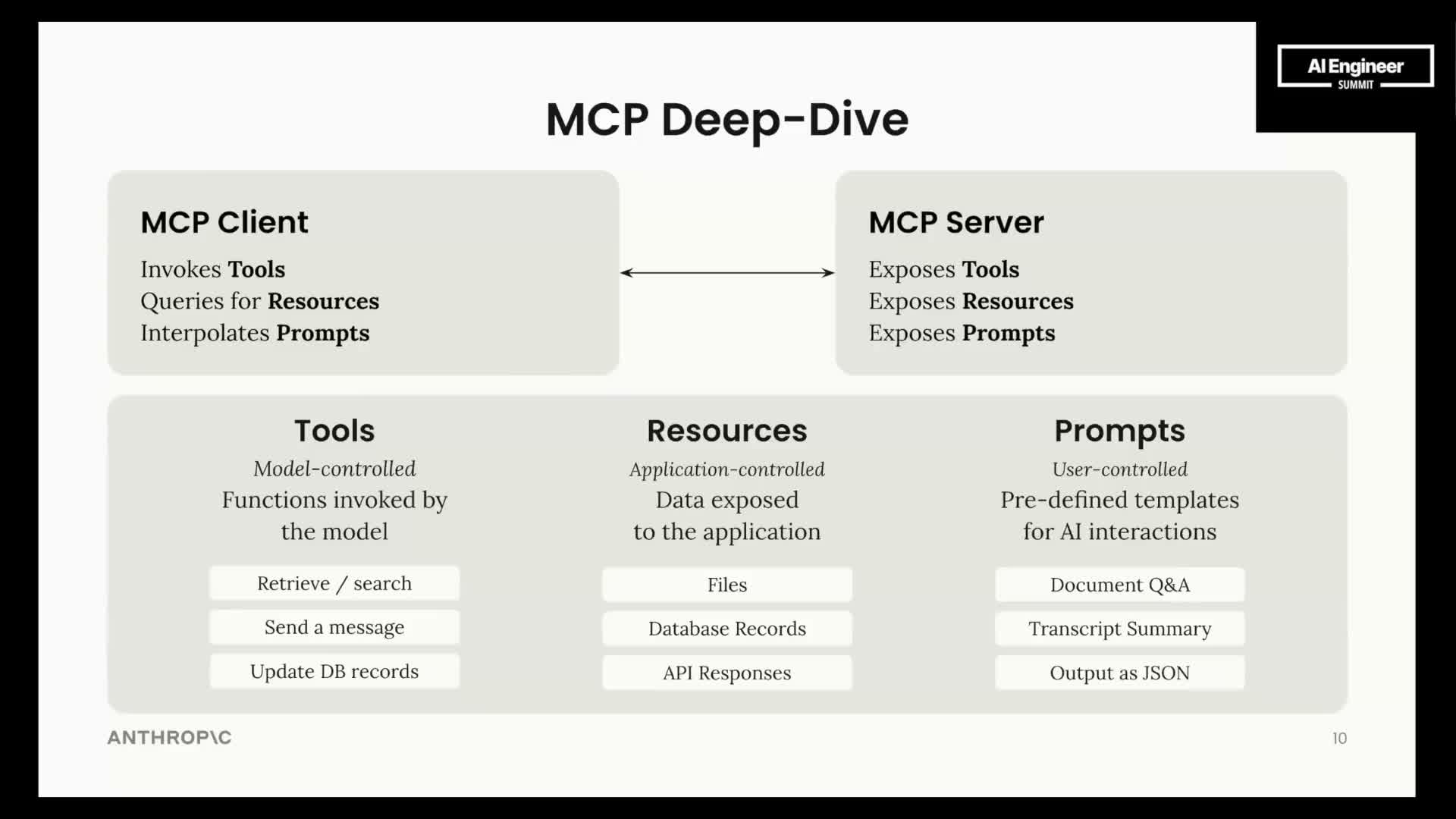

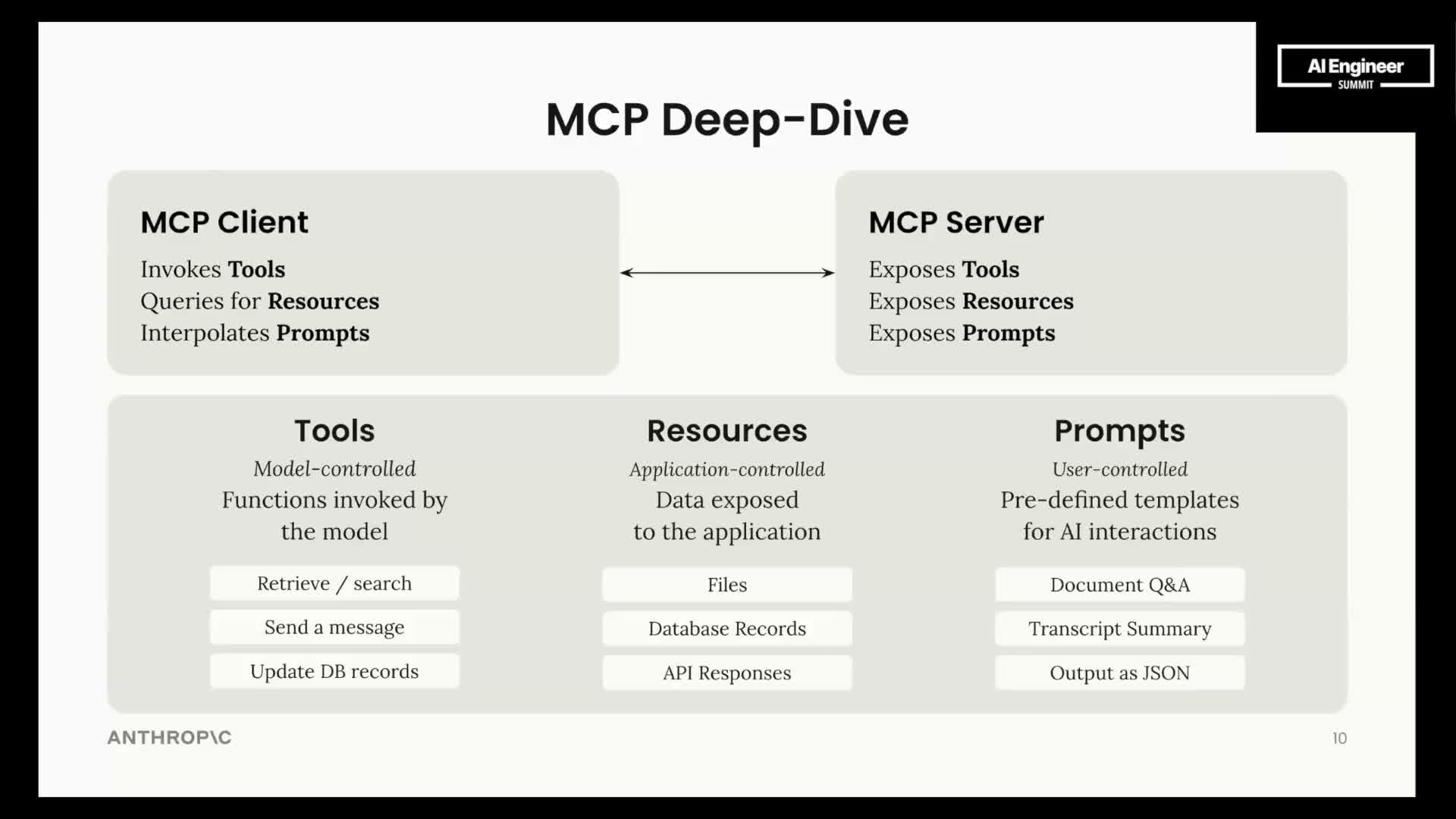

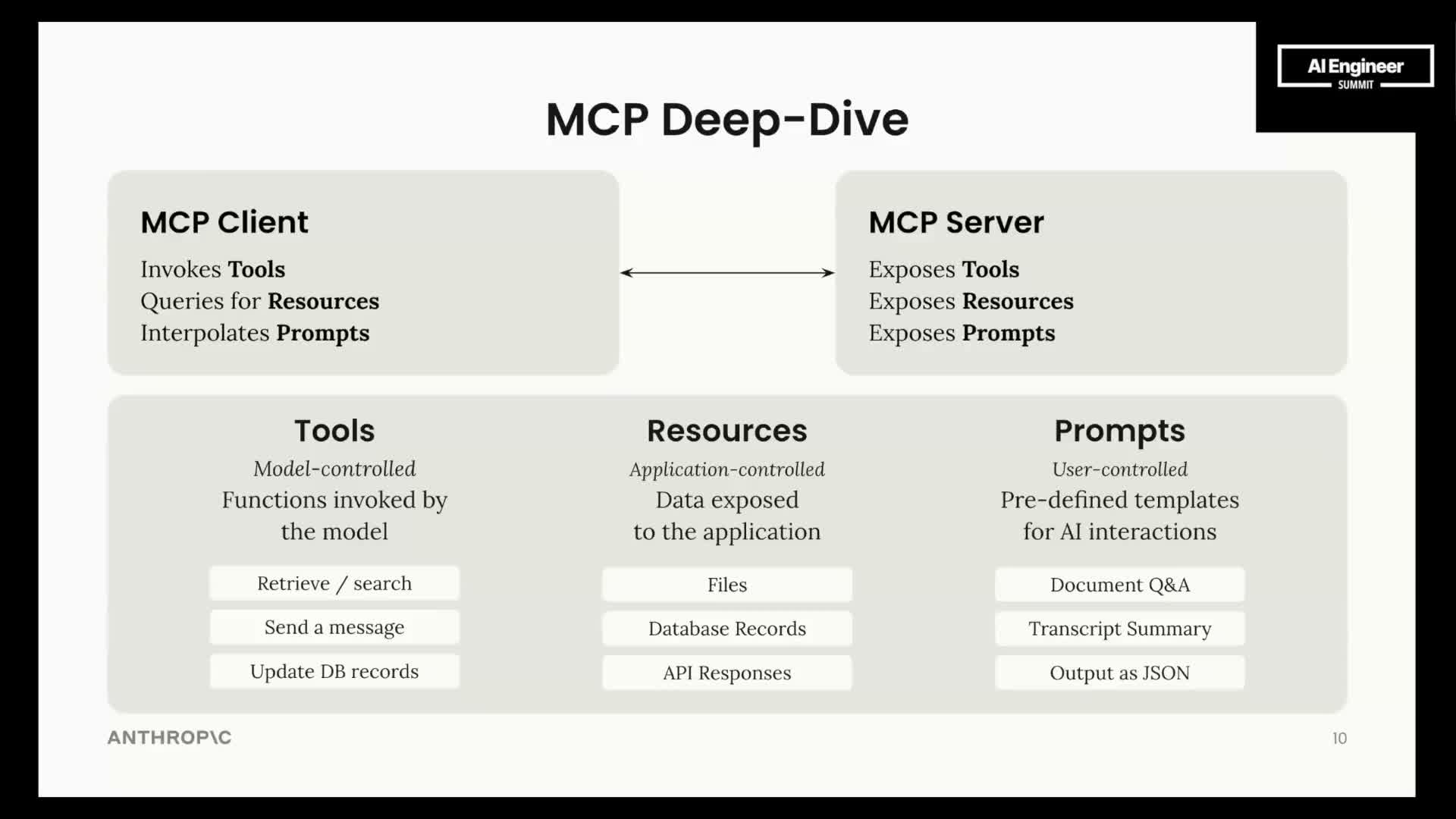

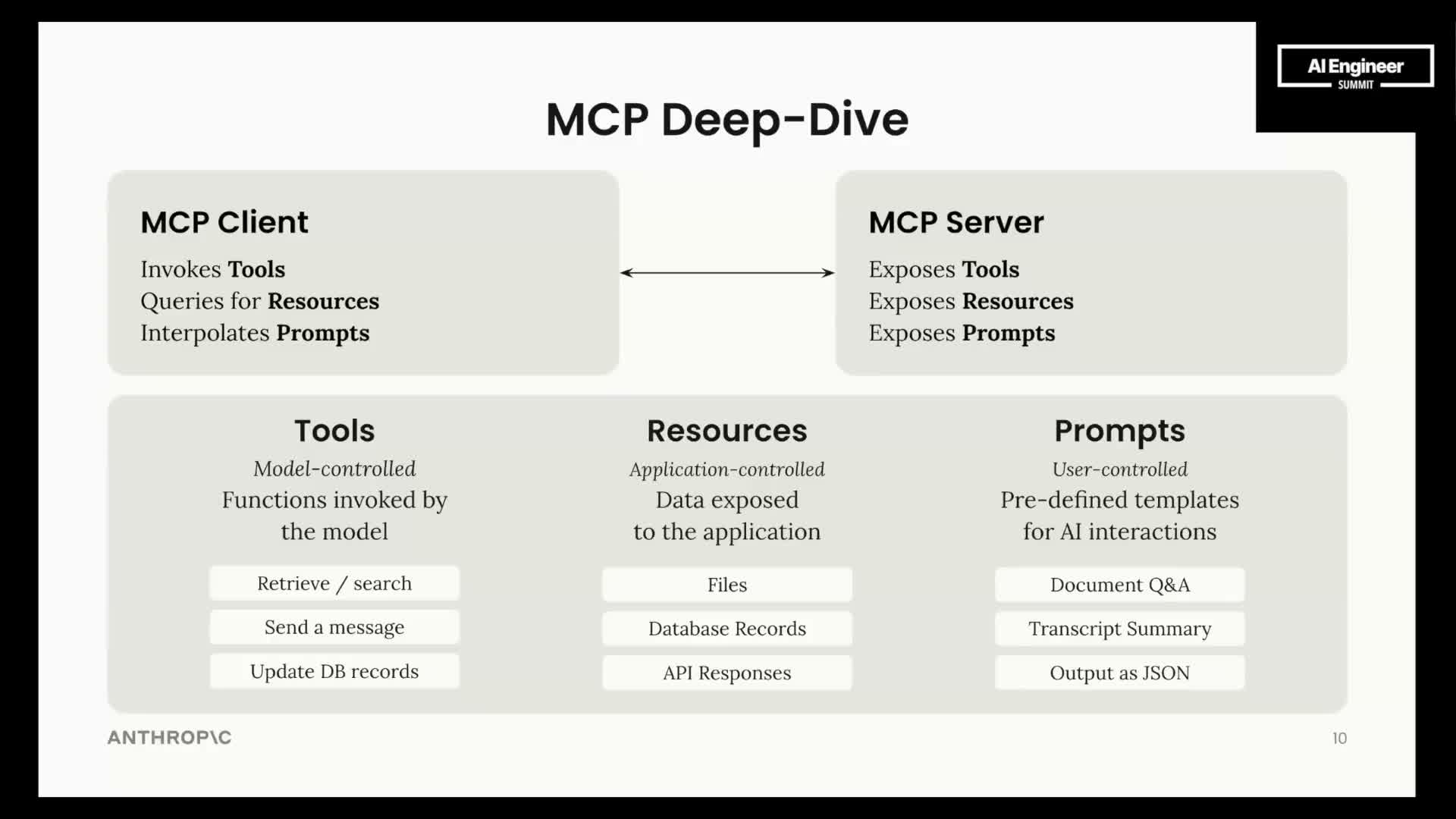

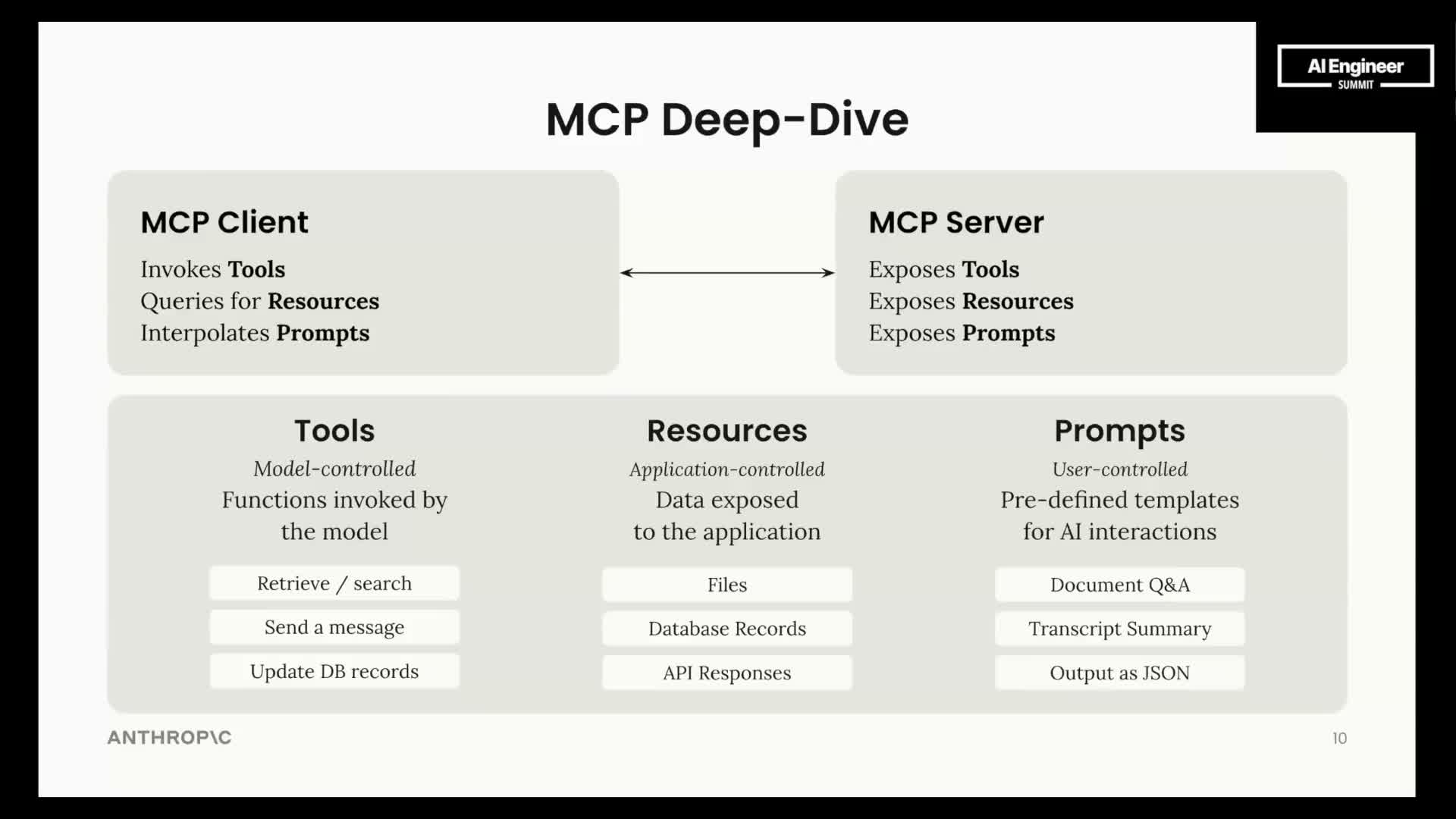

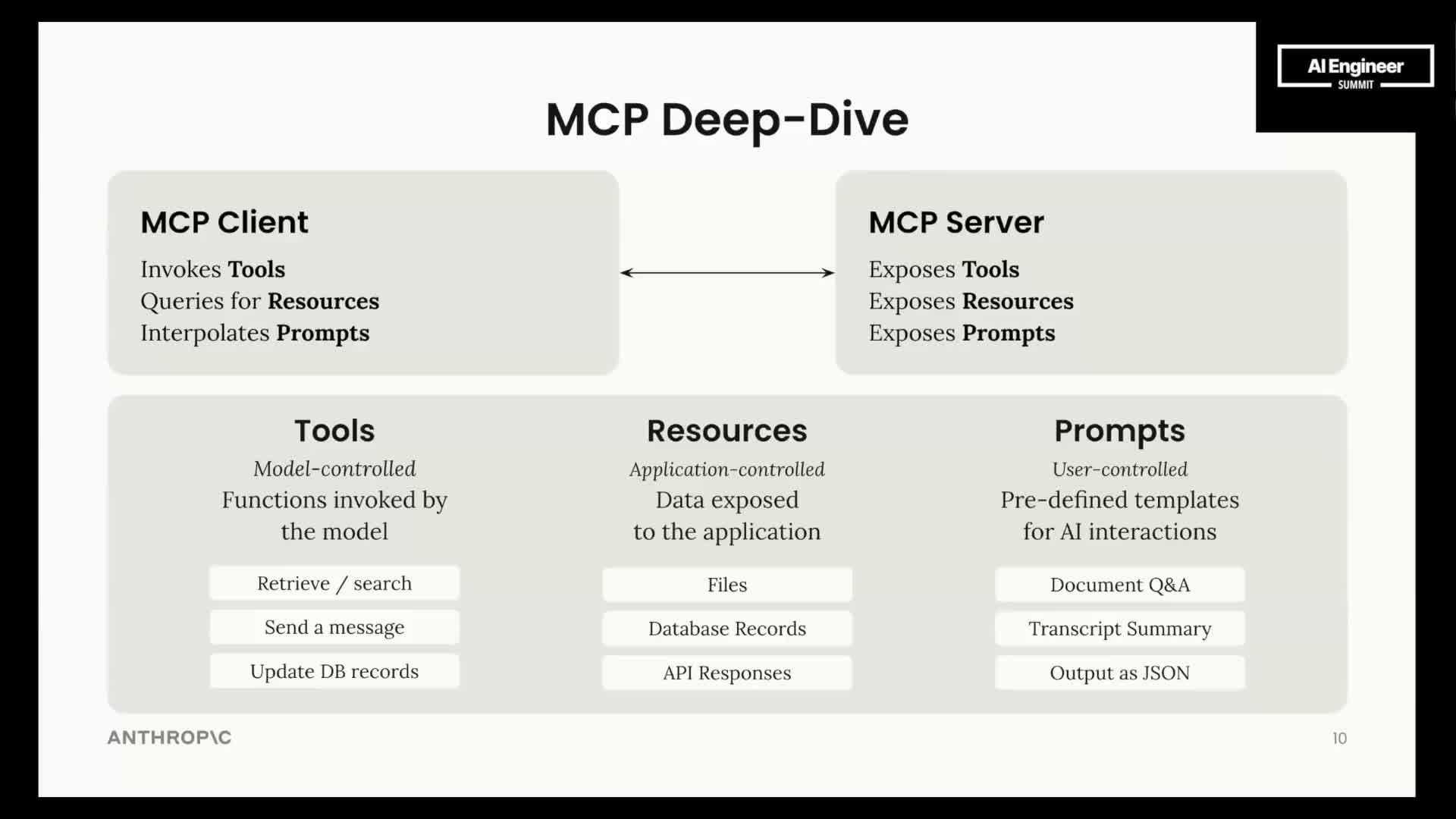

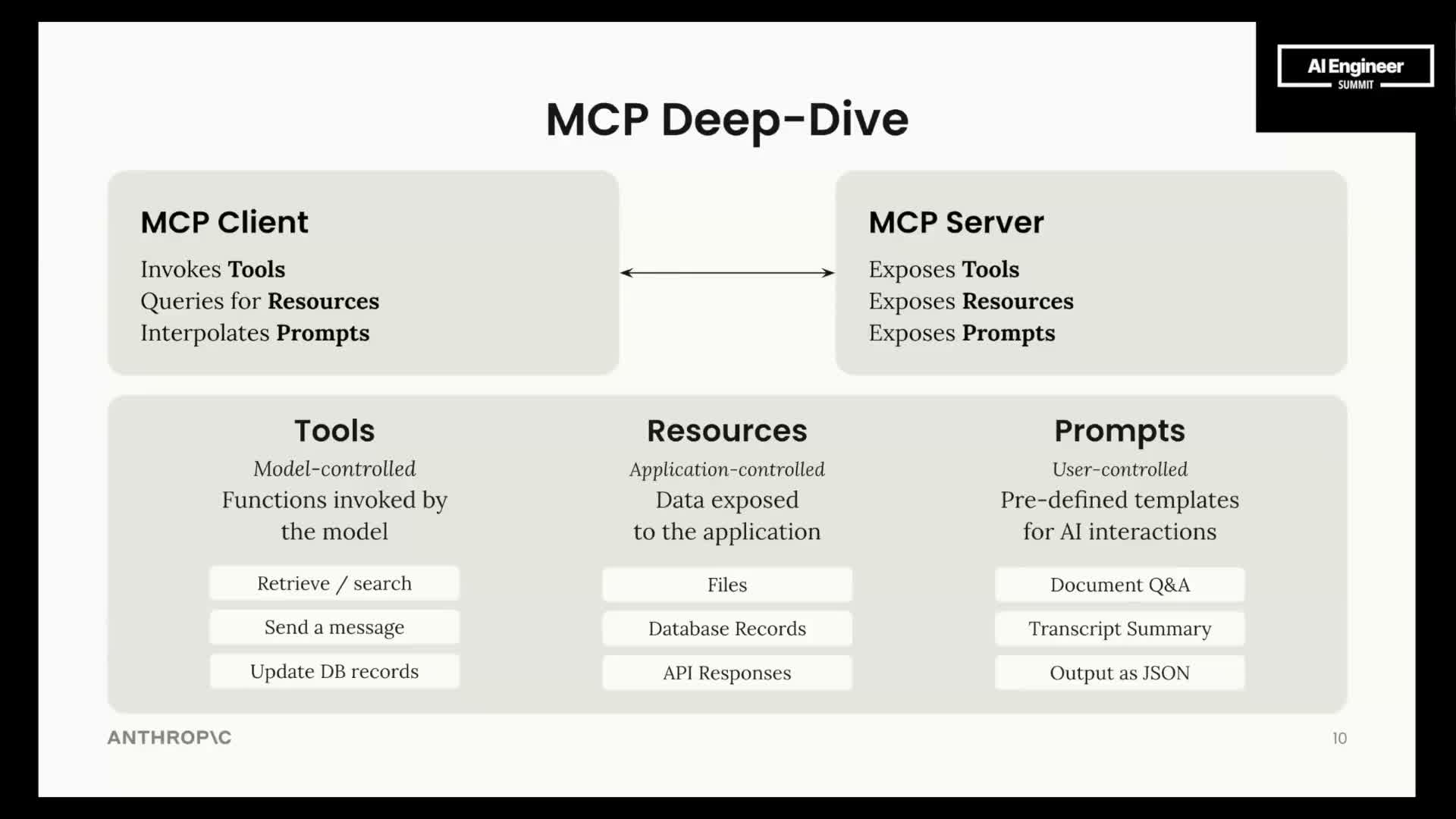

MCP conceptual model and primary interfaces

MCP standardizes interactions via a triad of interfaces: prompts, tools, and resources.

- Prompts: user‑controlled templates for structured interactions.

- Tools: model‑invokable capabilities that act on external systems.

- Resources: application‑controlled data artifacts surfaced to clients and models.

This separation of responsibilities creates a clear contract for service exposure and lets clients and servers negotiate capabilities and context without bespoke per‑app logic.

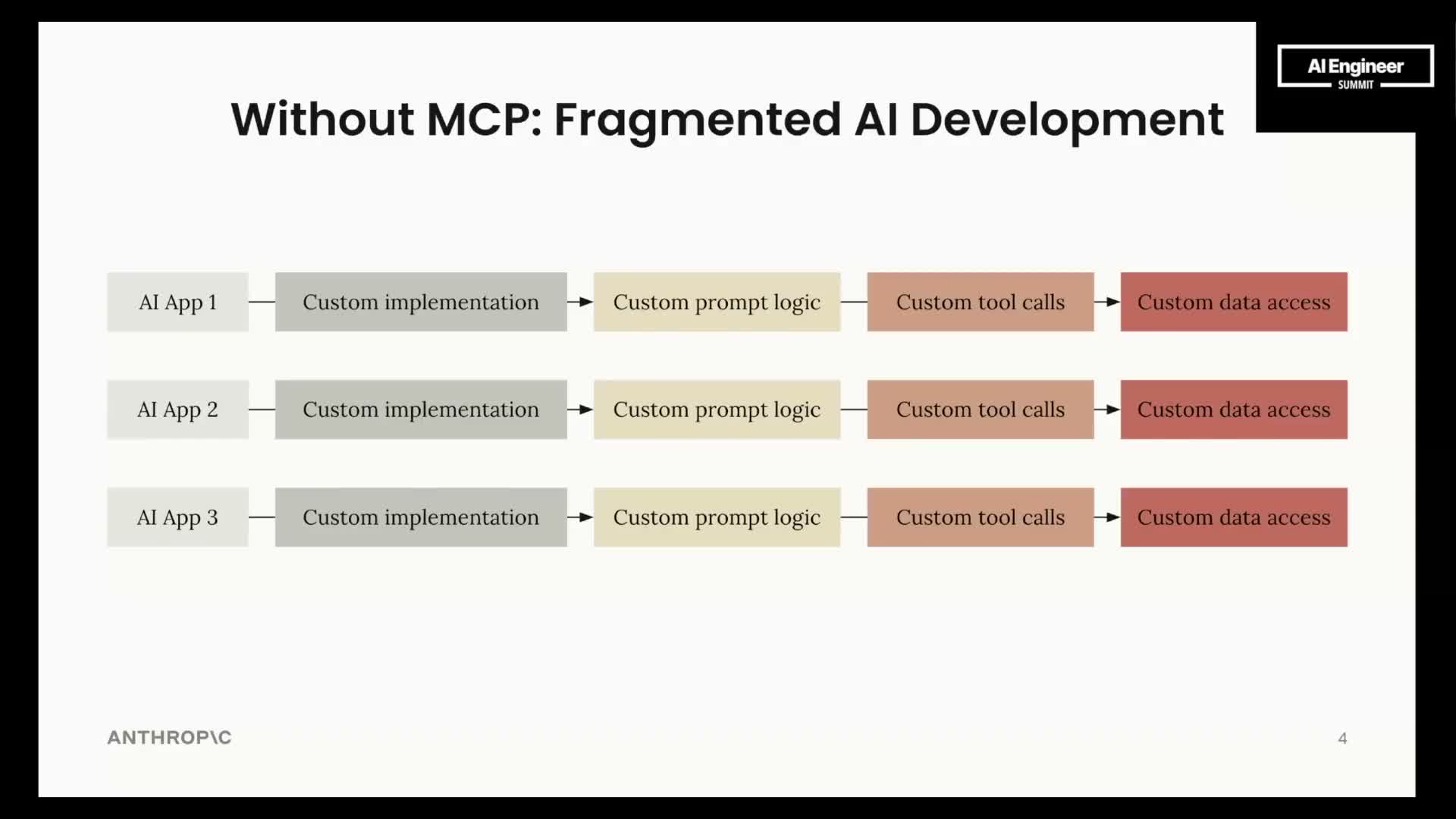

State of the ecosystem before MCP: fragmentation

Before MCP, many teams built bespoke integrations that duplicated core logic, producing an N×M integration problem: each of N applications needed its own connector to each of M tools or data sources.

- Common duplicated pieces: prompt logic, tooling access, federated auth, chunking and retrieval strategies.

- Negative effects: slowed development, inconsistent UX, repeated reimplementation of security and data‑access policies.

MCP aims to flatten that complexity by providing a common protocol layer so teams can share and reuse integrations instead of rebuilding them.
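The scale of the N×M problem is easy to see with a little arithmetic. The sketch below is only an illustration: the function names and the example counts are invented, and it assumes one connector per client-server pair in the bespoke case versus one protocol implementation per participant in the shared case.

```python
def bespoke_integrations(clients: int, servers: int) -> int:
    """Every client needs its own connector to every tool or data source."""
    return clients * servers


def protocol_integrations(clients: int, servers: int) -> int:
    """Each client and each server implements the shared protocol once."""
    return clients + servers


if __name__ == "__main__":
    for n, m in [(3, 4), (10, 20)]:
        print(
            f"{n} clients x {m} servers: "
            f"{bespoke_integrations(n, m)} bespoke connectors vs "
            f"{protocol_integrations(n, m)} protocol implementations"
        )
```

The gap widens as either side grows, which is why a shared protocol pays off most in large organizations and open ecosystems.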

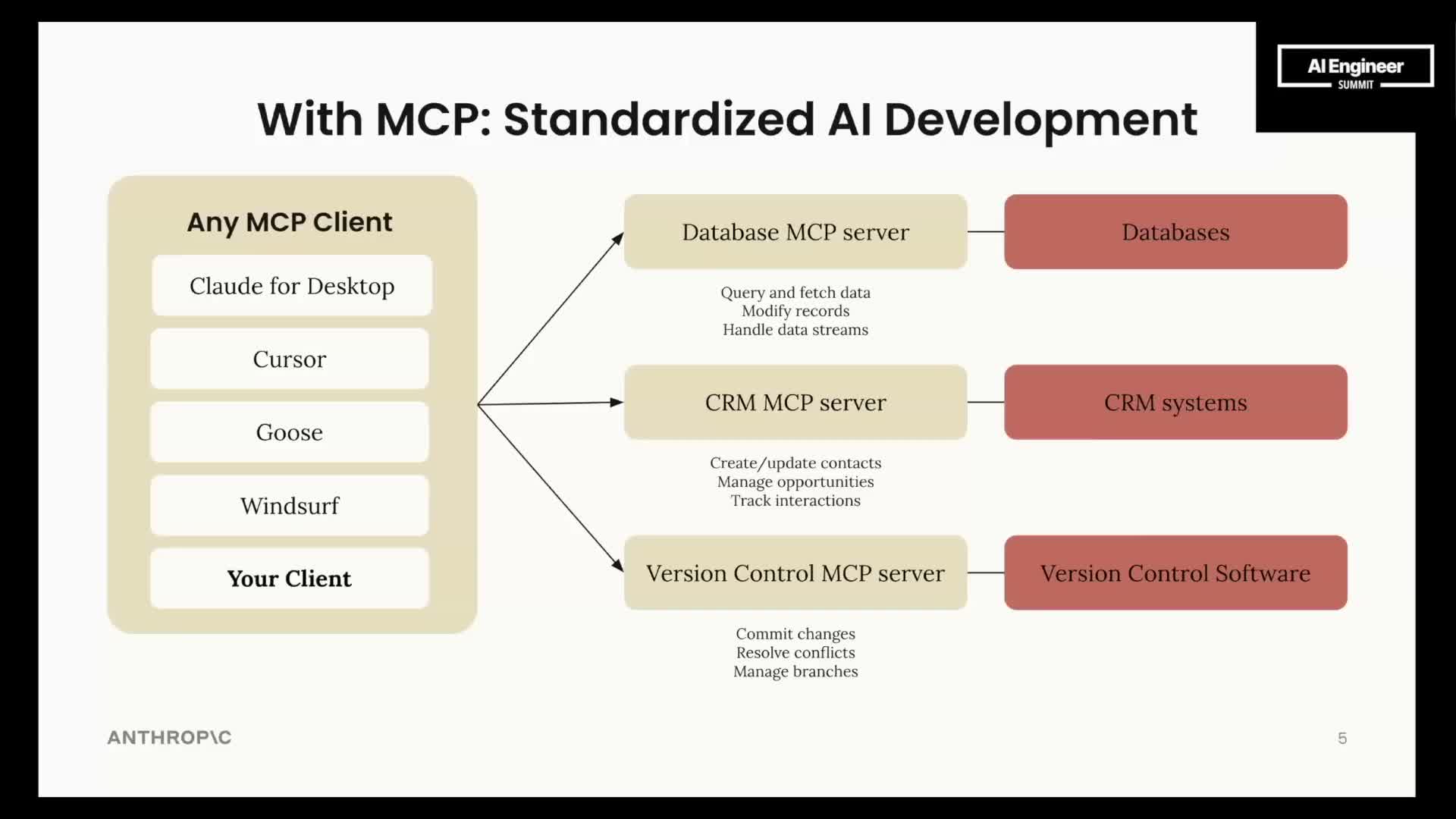

MCP client-server architecture and examples

MCP defines two complementary roles: the client and the server.

- Client: applications and IDEs that consume services (examples: first‑party apps, IDE integrations, third‑party agent UIs).

- Server: a wrapper that federates access to tools, APIs, and data sources (examples: connectors exposing databases, CRMs, local files).

Clients connect to servers via a standardized interface, enabling any compliant client to interoperate with any compliant server with minimal integration effort.
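The "any client, any server" property can be sketched with a shared interface. Everything here is hypothetical and in-memory: `Server`, `DictServer`, and the two toy backends are illustration names, not classes from an MCP SDK, and no network transport is modeled.

```python
from typing import Any, Callable, Protocol


class Server(Protocol):
    """The minimal surface a client relies on (hypothetical, not an SDK type)."""

    name: str

    def list_tools(self) -> list[str]: ...

    def call_tool(self, tool: str, **arguments: Any) -> Any: ...


class DictServer:
    """A toy server that wraps plain Python callables behind the shared interface."""

    def __init__(self, name: str, tools: dict[str, Callable[..., Any]]) -> None:
        self.name = name
        self._tools = tools

    def list_tools(self) -> list[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        if tool not in self._tools:
            raise KeyError(f"{self.name} has no tool named {tool!r}")
        return self._tools[tool](**arguments)


def describe(servers: list[Server]) -> dict[str, list[str]]:
    """Client-side code: identical for every server, with no per-server branches."""
    return {server.name: server.list_tools() for server in servers}


# Two unrelated backends exposed through the same interface.
files = DictServer("files", {"read_file": lambda path: f"<contents of {path}>"})
crm = DictServer("crm", {"find_contact": lambda email: {"email": email, "name": "Ada"}})

catalog = describe([files, crm])
contact = crm.call_tool("find_contact", email="ada@example.com")
```

Adding a third backend means writing one more server; `describe` and any other client code stay unchanged.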

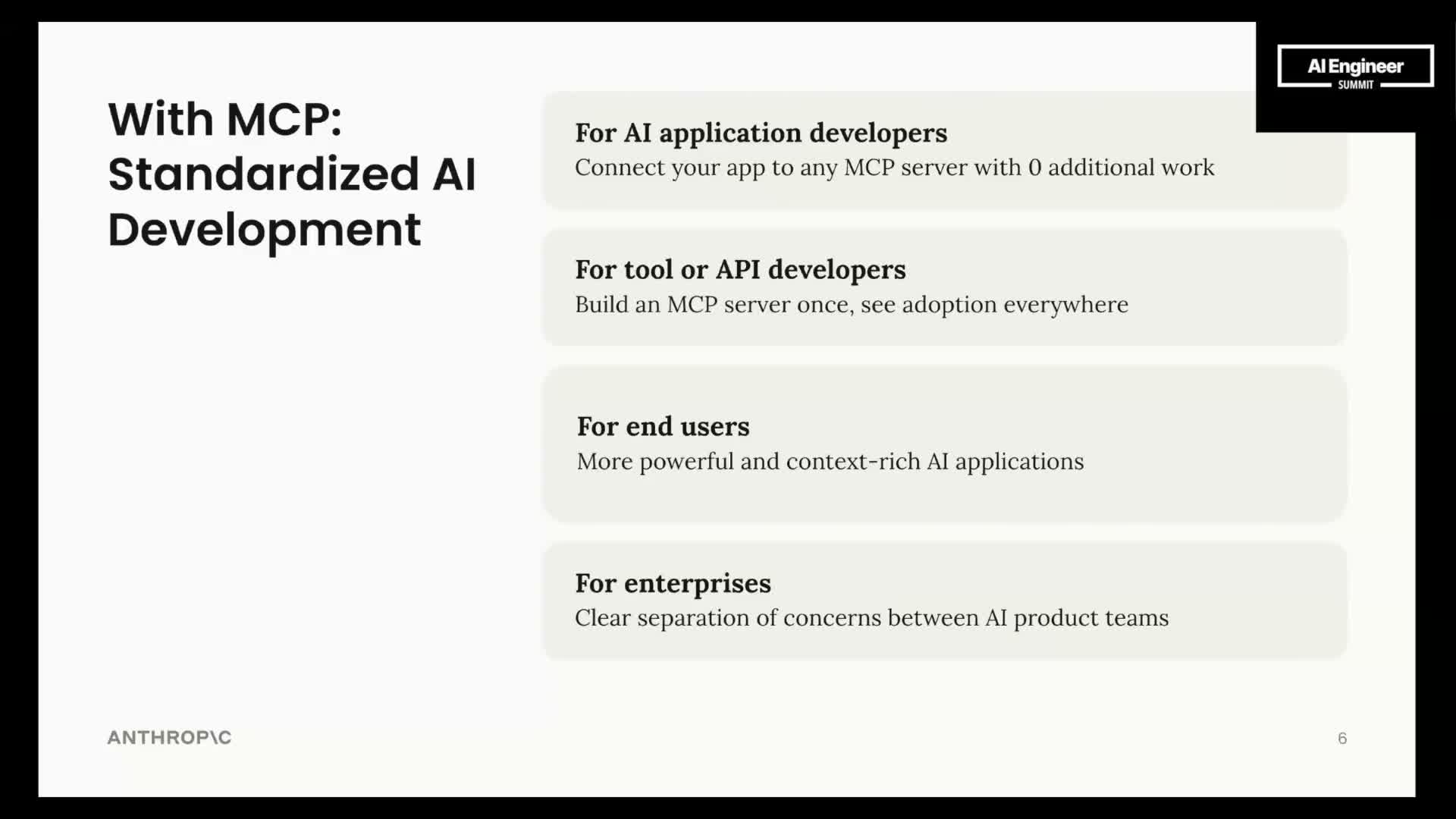

Value proposition for developers, tool providers, and enterprises

MCP delivers concrete benefits across stakeholders:

- For application developers: write‑once clients that can connect to many servers.

- For tool/API providers: a single MCP server implementation can reach many clients.

- For enterprises: specialized infra teams can publish standardized server interfaces for internal consumers.

Outcomes include flattening the N×M problem, accelerating cross‑team development, and delivering richer, context‑aware end‑user experiences.

Centralizing prompting, chunking, and access control at the server level also enables organizations to enforce governance while letting application teams iterate quickly.

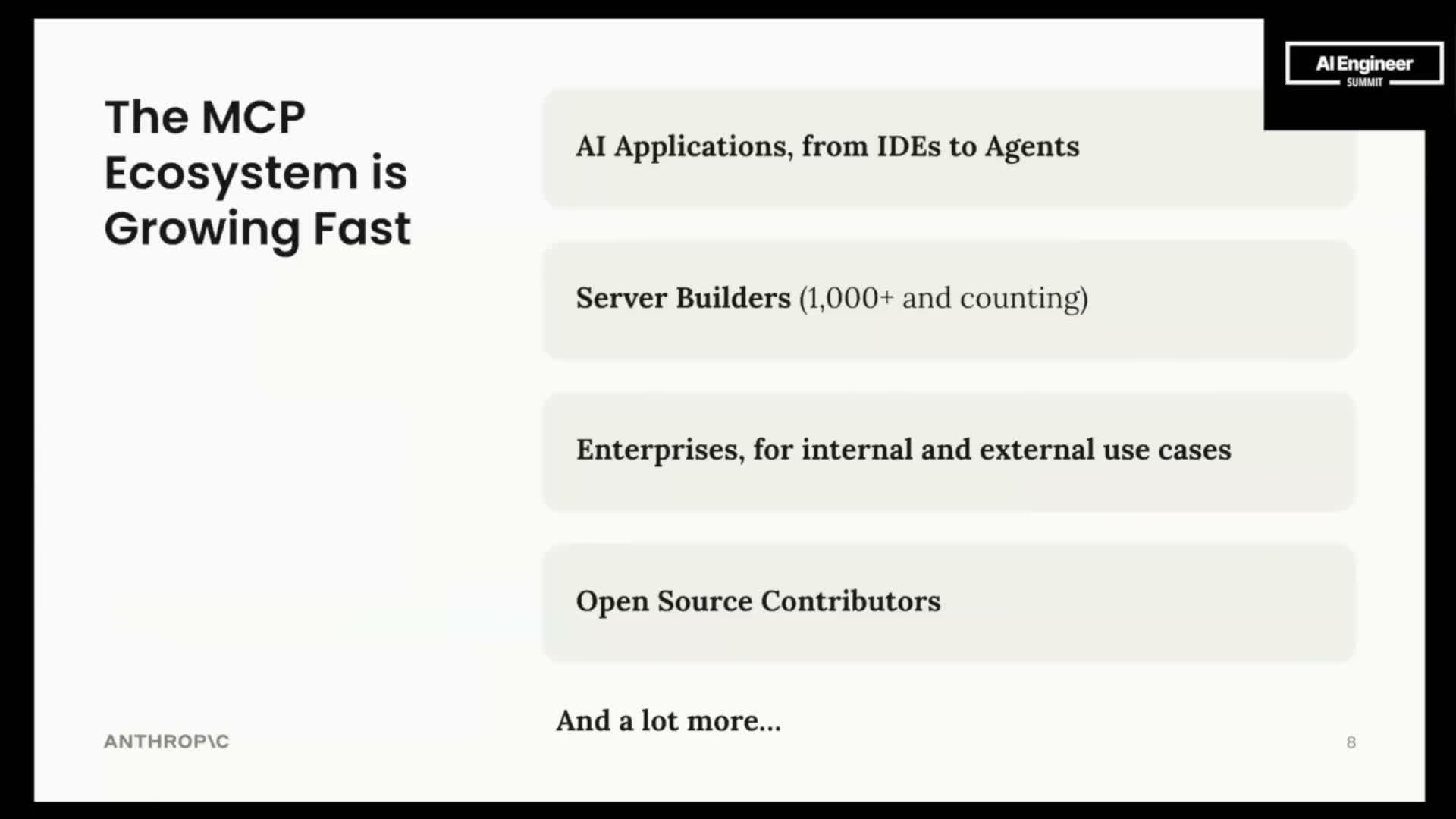

Early adoption and community contributions

Adoption has appeared across multiple personas and environments:

- IDE/agent clients integrating MCP endpoints.

- Community‑built servers rapidly appearing as open‑source projects.

- Corporate integrations where companies ship official MCP servers.

Within months, a large number of open‑source servers and corporate integrations demonstrated developer appetite for a standard and showed that MCP reduces friction for exposing contextual services to models.

High-level build model for MCP interactions

The MCP runtime model describes a simple discovery/use flow:

- The client interpolates context into prompts and decides what it needs.

- The client queries servers for resources and discovers available tools and prompts.

- The server exposes tools, prompts, and resources in a consumable format and documents intended usage.

- The client chooses when to use resources or to invoke model‑driven tools; servers provide the complex access logic while clients remain lightweight and discovery‑oriented.
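The discovery/use flow above can be pictured as a short sequence of request messages. This is a rough sketch rather than a working client: it only builds JSON-RPC 2.0 envelopes and sends nothing. The method names are believed to match the MCP specification, while the URI, tool name, and arguments are invented, and the initial handshake is omitted.

```python
import itertools
import json
from typing import Any, Optional

_ids = itertools.count(1)


def request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope with a fresh id."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server what it offers.
discovery = [request(m) for m in ("tools/list", "resources/list", "prompts/list")]

# Use: the application reads a resource; the model asks for a tool call.
read = request("resources/read", {"uri": "file:///notes/todo.md"})  # invented URI
call = request(
    "tools/call",
    {"name": "search_issues", "arguments": {"query": "bug"}},  # invented tool
)

if __name__ == "__main__":
    for message in [*discovery, read, call]:
        print(json.dumps(message))
```

The client stays small because it only needs to know these generic requests; what sits behind each tool or resource is the server's concern.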

Tools: model-controlled capabilities

Tools are the model‑invokable capabilities a server exposes—operations that can read, write, or act on external systems.

- Typical tools: data retrieval, database updates, file‑system operations, third‑party API calls, or action‑triggering write operations.

- Servers publish tool descriptions, intended usage, and invocation semantics so models can decide at runtime whether to call a tool based on prompt context and internal reasoning.
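A tool, in this sense, is a callable plus the description a model reads before deciding to use it. The sketch below assumes a hypothetical `Tool` record and `invoke` dispatcher; the ticket example is invented, and a real server would typically publish a full input schema rather than a list of required names.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    """What a server publishes about one capability (hypothetical shape)."""

    name: str
    description: str            # guidance the model reads when deciding to call
    required: tuple[str, ...]   # argument names the caller must supply
    handler: Callable[..., Any]


def invoke(tools: dict[str, Tool], name: str, arguments: dict[str, Any]) -> Any:
    """Run a tool call requested by the model, rejecting malformed requests."""
    tool = tools.get(name)
    if tool is None:
        raise LookupError(f"unknown tool: {name}")
    missing = [arg for arg in tool.required if arg not in arguments]
    if missing:
        raise ValueError(f"{name} is missing arguments: {missing}")
    return tool.handler(**arguments)


TICKETS = {1: "open", 2: "open"}


def close_ticket(ticket_id: int) -> str:
    """A write operation: it changes state in the (toy) external system."""
    TICKETS[ticket_id] = "closed"
    return f"ticket {ticket_id} closed"


tools = {
    "close_ticket": Tool(
        name="close_ticket",
        description="Close a support ticket once the user confirms it is resolved.",
        required=("ticket_id",),
        handler=close_ticket,
    )
}

# In a real client the model would emit this request after reading the descriptions.
result = invoke(tools, "close_ticket", {"ticket_id": 2})
```

Validating arguments before dispatch matters more for tools than for ordinary function calls, since the caller is a model that may produce incomplete requests.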

Resources: application-controlled data artifacts

Resources are richer data artifacts exposed by servers and controlled by the application, not the model.

- Resources can be static or dynamically interpolated by the server using user or app context.

- Examples: generated images, JSON snapshots of application state, or files surfaced as attachments in the client UI.

Resources enable asynchronous workflows, subscription/notification semantics, and richer UI interactions beyond simple text exchanges.
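The static/dynamic distinction and the application's control over attachment can be sketched with a small registry. The `resource` decorator, the URIs, and `APP_STATE` are all invented for illustration and do not come from an SDK.

```python
import json
from typing import Callable

Reader = Callable[[], str]

# URI -> zero-argument function producing the current content (hypothetical registry).
RESOURCES: dict[str, Reader] = {}


def resource(uri: str) -> Callable[[Reader], Reader]:
    """Register a reader function under a URI."""
    def register(reader: Reader) -> Reader:
        RESOURCES[uri] = reader
        return reader
    return register


APP_STATE = {"user": "ada", "open_tabs": 3}


@resource("docs://readme")
def readme() -> str:
    # Static resource: same content on every read.
    return "Welcome to the demo server."


@resource("state://session")
def session_snapshot() -> str:
    # Dynamic resource: rendered from application state at read time.
    return json.dumps(APP_STATE, sort_keys=True)


def attach(uris: list[str]) -> str:
    """Application-side choice: decide which resources go into the model context."""
    return "\n".join(f"[{uri}]\n{RESOURCES[uri]()}" for uri in uris)


before = RESOURCES["state://session"]()
APP_STATE["open_tabs"] = 4
after = RESOURCES["state://session"]()
context = attach(["docs://readme", "state://session"])
```

Note that nothing here is decided by a model: the application picks the URIs, which is the property that separates resources from tools.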

Prompts: user-controlled templates and command surfaces

Prompts are predefined templates exposed by servers for common interactions and are invoked by users or client UI actions (for example, slash‑commands in IDEs).

- They encode structured, reusable instruction patterns such as document Q&A formats or conversion templates.

- Prompts can be dynamically parameterized by client context to provide consistent, curated interactions without requiring the model to discover the interaction pattern itself.
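A parameterized prompt can be as simple as a named template that the client fills from its own context. This is a minimal sketch using the standard library's `string.Template`; the prompt name, wording, and arguments are made up, and a real server would return structured messages rather than one string.

```python
from string import Template

# Prompt name -> template text; names and wording are invented for illustration.
PROMPTS = {
    "summarize-doc": Template(
        "Summarize the document below for a $audience audience "
        "in at most $max_bullets bullet points.\n\n$document"
    ),
}


def get_prompt(name: str, **arguments: str) -> str:
    """Fill a named template; raises KeyError if the name or an argument is missing."""
    return PROMPTS[name].substitute(**arguments)


# A client might trigger this when the user types a slash command such as /summarize-doc.
text = get_prompt(
    "summarize-doc",
    audience="non-technical",
    max_bullets="3",
    document="MCP standardizes how models reach tools and data.",
)
```

Because the template lives on the server, every client that surfaces it gives users the same curated interaction.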

Q&A: separation of model-controlled tools vs application-controlled resources

A core design goal of MCP is a clear separation between what the model can autonomously invoke (tools) and what the application controls (resources).

- Clients decide when to attach resources based on UI logic or deterministic rules.

- Models decide whether and when to call tools in cases where the right moment cannot be fixed in advance.

This split reduces accidental or unsafe actions by ensuring some interactions require application‑level approval rather than unfettered model autonomy.

Q&A: exposing vector databases and tool granularity

Exposing a vector database is a concrete design decision that can be modeled two ways:

- As a tool when invocation should be conditional or ambiguous and the model must reason about whether to run a search.

- As a deterministic resource when access patterns are fixed and retrieval is handled by server‑side logic.

The choice balances autonomy, cost control, latency, and security considerations and should reflect the desired behavior and governance model.
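The two options can be compared side by side using one search function exposed in two ways. The three-dimensional "embeddings", document ids, and function names below are all invented; a real deployment would call an actual vector store, and the `wants_search` flag stands in for a decision the model would make.

```python
import math

# A toy "vector database": document id -> embedding. Values are invented.
INDEX = {
    "billing-faq": [0.9, 0.1, 0.0],
    "login-help": [0.1, 0.9, 0.1],
    "release-notes": [0.0, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def search(query: list[float], k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the query vector."""
    ranked = sorted(INDEX, key=lambda doc: cosine(query, INDEX[doc]), reverse=True)
    return ranked[:k]


# Option 1: a tool. The model decides whether a search is worth running.
def handle_model_turn(wants_search: bool, query: list[float]) -> list[str]:
    return search(query) if wants_search else []


# Option 2: a resource. The application always attaches results for a fixed query.
def build_context(user_profile_vector: list[float]) -> list[str]:
    return search(user_profile_vector, k=2)


tool_hits = handle_model_turn(True, [1.0, 0.0, 0.0])
skipped = handle_model_turn(False, [1.0, 0.0, 0.0])
resource_hits = build_context([0.0, 1.0, 0.5])
```

With the tool, the search cost is paid only when the model asks for it; with the resource, cost and latency are predictable because retrieval runs on every turn under rules the application controls.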

Q&A: MCP and agent frameworks (compatibility and adapters)

MCP is complementary to existing agent frameworks rather than a replacement.

- Adapters/connectors allow frameworks like LangGraph to plug into MCP servers so existing agent logic can call standardized servers.

- Agent frameworks typically manage orchestration, knowledge management, and the agent loop, while MCP standardizes discovery and access to external tools, prompts, and resources.

This interoperability reduces integration work and lets agent frameworks focus on agent behavior while leveraging a broad ecosystem of MCP servers.
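An adapter of this kind mostly translates discovered tool descriptions into whatever callable shape the framework expects. The sketch below is framework-agnostic and entirely hypothetical: `FakeServerSession` stands in for a real server connection, and the output is a dictionary of plain Python functions rather than any particular framework's tool type.

```python
from typing import Any, Callable


class FakeServerSession:
    """Stand-in for a connection to one server (hypothetical, not an SDK class)."""

    def list_tools(self) -> list[dict[str, str]]:
        return [
            {"name": "list_issues", "description": "List open issues in a repository."},
            {"name": "create_task", "description": "Create a task in the tracker."},
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        return f"{name} called with {sorted(arguments.items())}"


def _bind(session: FakeServerSession, spec: dict[str, str]) -> Callable[..., str]:
    """Create one callable that forwards keyword arguments to a single remote tool."""
    def call(**arguments: Any) -> str:
        return session.call_tool(spec["name"], arguments)

    # Carry the server's metadata over so the framework can show it to the model.
    call.__name__ = spec["name"]
    call.__doc__ = spec["description"]
    return call


def as_framework_tools(session: FakeServerSession) -> dict[str, Callable[..., str]]:
    """Wrap each discovered tool in a plain callable the agent loop can register."""
    return {spec["name"]: _bind(session, spec) for spec in session.list_tools()}


agent_tools = as_framework_tools(FakeServerSession())
output = agent_tools["create_task"](title="Fix login bug")
```

The agent loop never learns which server a function came from, which is what lets existing agent logic adopt new servers without changes.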

Q&A: whether MCP replaces agent frameworks

While MCP standardizes the access layer, agent frameworks continue to add value:

- Frameworks handle orchestration, agent reasoning, state management, and domain‑specific control flows.

- MCP can replace bespoke tool‑access code and context provisioning inside agents, but frameworks remain important for defining multi‑agent workflows, knowledge management, and loop orchestration.

In short: MCP standardizes access; frameworks operationalize behavior.

Q&A: dynamic prompts and resource notifications vs tools

Prompts and resources provide protocol‑level capabilities that go beyond a simple tool call:

- They support dynamic interpolation based on runtime context.

- Server‑initiated notifications allow resources to be pushed or subscribed to, enabling event‑driven and asynchronous experiences.

These capabilities let servers act as application‑layer controllers rather than simple API proxies for model inputs.
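The subscribe-and-notify pattern can be sketched as a small publish/subscribe hub. `ResourceHub` and the `build://` URIs are invented for illustration. The toy pushes new content directly to listeners; whether a real notification carries the content or only a change notice is a protocol detail this sketch does not model.

```python
from collections import defaultdict
from typing import Callable

Listener = Callable[[str, str], None]  # receives (uri, new_content)


class ResourceHub:
    """Toy server-side store that pushes updates to subscribers (hypothetical)."""

    def __init__(self) -> None:
        self._content: dict[str, str] = {}
        self._listeners: defaultdict[str, list[Listener]] = defaultdict(list)

    def subscribe(self, uri: str, listener: Listener) -> None:
        self._listeners[uri].append(listener)

    def publish(self, uri: str, content: str) -> None:
        """Store new content and notify everyone subscribed to this URI."""
        self._content[uri] = content
        for listener in self._listeners[uri]:
            listener(uri, content)


hub = ResourceHub()
inbox: list[tuple[str, str]] = []

hub.subscribe("build://status", lambda uri, content: inbox.append((uri, content)))
hub.publish("build://status", "running")
hub.publish("build://logs", "not subscribed, so no notification")
hub.publish("build://status", "passed")
```

The client never polls: it learns about the build finishing because the server initiated the message, which a plain request/response tool call cannot express.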

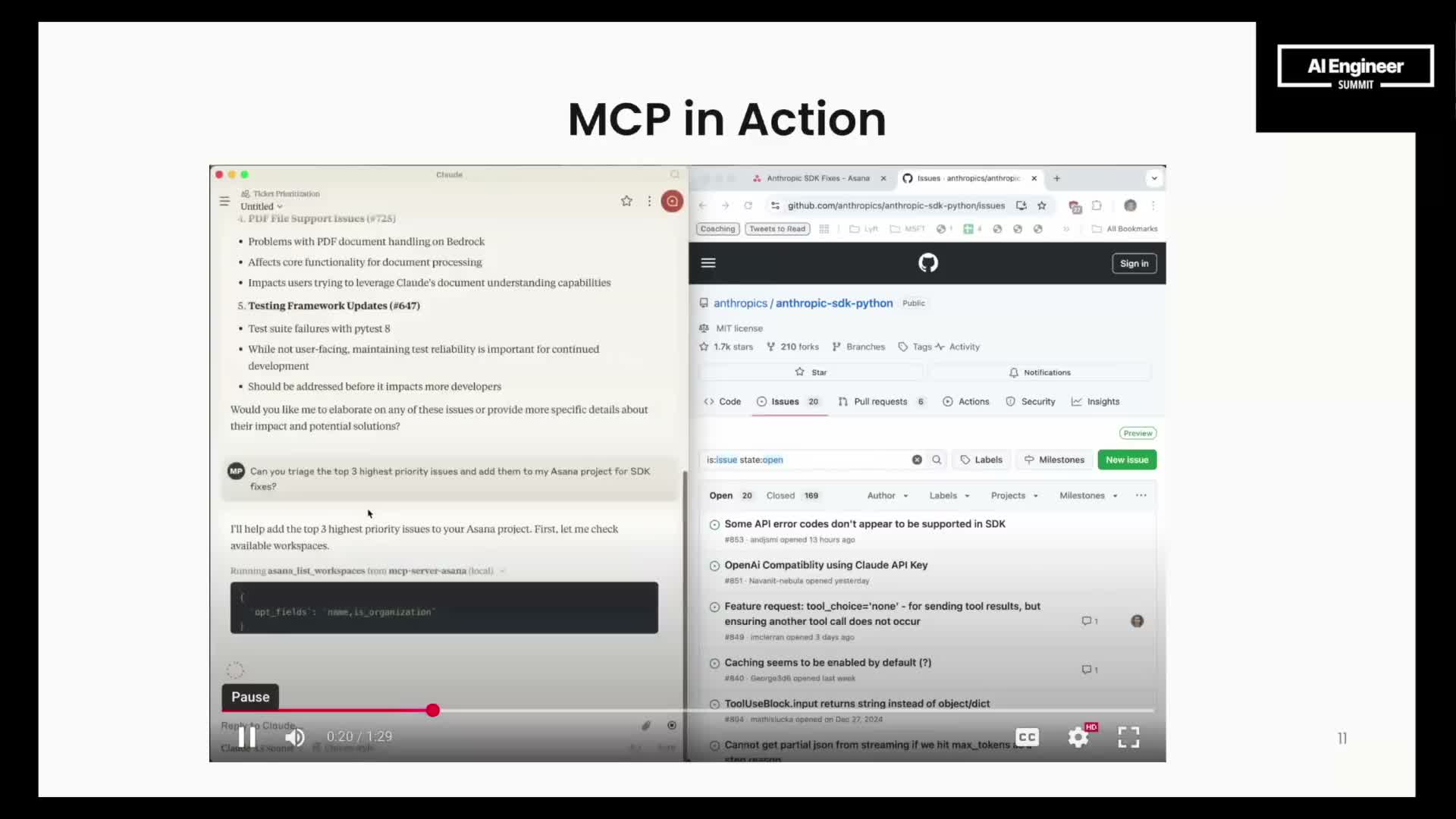

Demo overview: Claude for Desktop as MCP client

Claude for Desktop is an example MCP client that demonstrates real‑world orchestration across servers.

- The UI orchestrates multiple MCP servers (for example, GitHub and Asana) to triage issues and take actions driven by model reasoning.

- The demo shows the model autonomously invoking list‑issues tools, summarizing results, triaging by inferred user preferences, and invoking Asana tools to create tasks.

This illustrates how community‑contributed servers can be small, composable building blocks that integrate into daily workflows and are reused across clients.
