Agent 07 - Building an Agent from Scratch by AI Engineer

- Talk goals: demonstrate a minimal agent, enable experimentation with code, and build intuition for agent components

- Slides and code are published and attendees are encouraged to try the repository.

- An agent is composed of an LLM, memory, planning, tools, and a loop or conditional control flow.

- Step 0: Start with a basic chat-completion LLM call to produce a baseline response.

- Step 1: Add a conditional evaluator LLM that judges whether the previous answer is complete.

- Tools are external functions described by a JSON schema that the LLM can request the client to call; the client must invoke these tool calls and return results to the LLM.

- Tool execution can fail due to schema validation or parameter constraints, exposing the need for robust error handling.

- Step 3: Refactor to support parallel tool calls and attach tool-call identifiers for tracing.

- Step 4: Implement explicit planning via a to-do list to provide readable/writable state and drive multi-step task execution.

- Example run: the agent used the to-do planner with search and browse tools to gather and summarize information about building agents without a framework.

- Practical example: the agent coordinated multiple searches and browsing steps to plan a local date and dining options.

- Next steps: integrate vector databases or RAG pipelines, iterate on agent design, and leverage the published code and slides for further experimentation.

Talk goals: demonstrate a minimal agent, enable experimentation with code, and build intuition for agent components

The talk sets three primary objectives to orient the audience and the repository work:

- Expose a minimal, operational definition of an agent: a compact, implementable specification that you can run and reason about.

- Encourage hands-on experimentation: clone, run, and deliberately break the included code so learners see behavior change in real time.

- Provide intuition for the foundational building blocks used by agent frameworks, so subsequent examples map directly to implementable components.

- Emphasis is placed on the pedagogical value of iterating on code to observe the transition from deterministic, scripted behavior to emergent, agent-like behavior.

- The goal framing prioritizes practical learning and conceptual clarity, setting expectations for the rest of the talk and motivating active engagement with the provided repository and slides.

Slides and code are published and attendees are encouraged to try the repository.

The presenter shares the canonical artifacts and a call to action:

- Slides, code, and social/contact links are provided up front so attendees can follow along.

- Attendees are explicitly encouraged to clone, run, and modify the example repository as the primary learning path.

Recommended learning strategies emphasized in this segment:

- Rapid iteration: make small changes and observe outcomes quickly.

- Fault injection: introduce errors intentionally to reveal where deterministic code starts behaving like an agent.

- Treat the rest of the presentation as a guided tour of the codebase, not just theory, and consult the published artifacts for hands-on practice.

An agent is composed of an LLM, memory, planning, tools, and a loop or conditional control flow.

Agent definition (operational): an architecture that combines a large language model (LLM) with persistent memory, planning logic, and external tools, all orchestrated by a looping control structure.

- Memory: readable and writable state the agent uses to persist context across iterations.

- Loop: the control structure implementing conditional repetition and termination logic.

- Planning: decomposes tasks into substeps or a to-do list the agent can act on using tools.

- Tools: external capabilities (e.g., web search, browsing) invoked by the agent to perform actions outside the LLM.

This decomposition makes it easy to reorder or refactor responsibilities across components during implementation and aids clear mapping from concept to code.
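
To make the mapping from concept to code concrete, here is a minimal TypeScript sketch of how the components above might fit together. Every name in it (`AgentMessage`, `AgentTool`, `Llm`, `runAgent`, `maxTurns`) is hypothetical, and the "LLM" is a scripted stub so the example runs offline. Planning is left implicit in the model's decisions here.

```typescript
// Hypothetical component types; none of these come from the talk's repository.
type Role = "system" | "user" | "assistant" | "tool";

// Memory: the readable/writable message history carried across iterations.
interface AgentMessage {
  role: Role;
  content: string;
}

// Tools: external capabilities, modeled as string-in/string-out functions.
interface AgentTool {
  name: string;
  run: (input: string) => string;
}

// The LLM either requests a tool call or returns a final answer.
type LlmDecision =
  | { kind: "tool"; toolName: string; input: string }
  | { kind: "final"; answer: string };

type Llm = (memory: AgentMessage[]) => LlmDecision;

// Loop: repeat until the LLM answers or the turn budget is exhausted.
function runAgent(llm: Llm, tools: AgentTool[], task: string, maxTurns = 5): string {
  const memory: AgentMessage[] = [{ role: "user", content: task }];
  for (let turn = 0; turn < maxTurns; turn++) {
    const decision = llm(memory);
    if (decision.kind === "final") return decision.answer;
    const toolName = decision.toolName;
    const tool = tools.find((t) => t.name === toolName);
    const result = tool ? tool.run(decision.input) : `Error: unknown tool ${toolName}`;
    // Record both the request and its result so the next turn can see them.
    memory.push({ role: "assistant", content: `call ${toolName}(${decision.input})` });
    memory.push({ role: "tool", content: result });
  }
  return "Stopped: turn limit reached";
}

// Offline usage with a scripted stand-in for a real model.
const upperTool: AgentTool = { name: "upper", run: (s) => s.toUpperCase() };
const scriptedLlm: Llm = (memory) => {
  const last = memory[memory.length - 1];
  return last.role === "tool"
    ? { kind: "final", answer: `Result: ${last.content}` }
    : { kind: "tool", toolName: "upper", input: "hello" };
};
console.log(runAgent(scriptedLlm, [upperTool], "Shout hello")); // Result: HELLO
```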

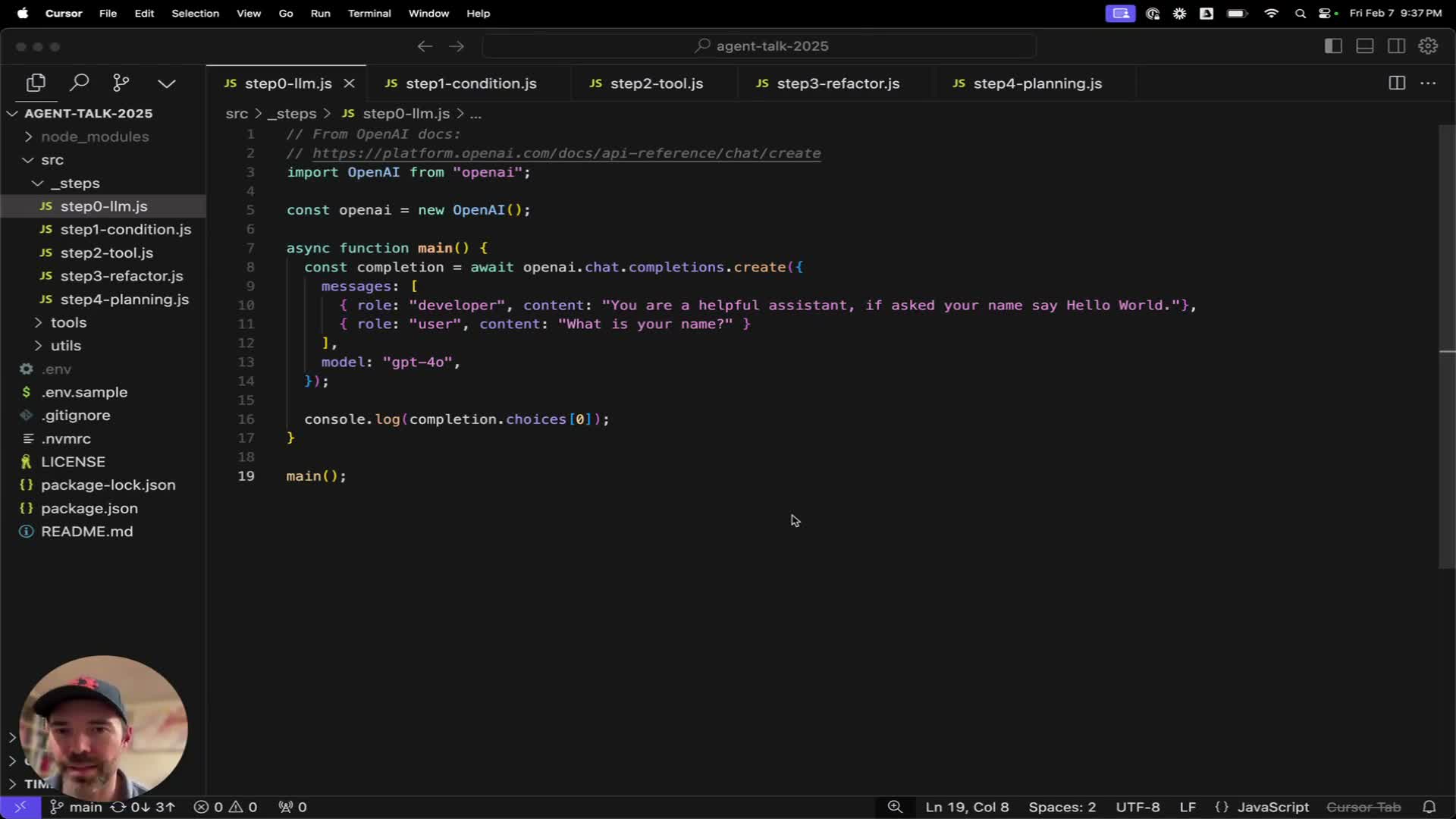

Step 0: Start with a basic chat-completion LLM call to produce a baseline response.

Initial baseline implementation (single-turn generation):

- Call a standard chat completion endpoint via the provider API to generate a baseline answer to a user prompt.

- Observe this as the simplest agent behavior: a single-turn request/response with fixed, scripted control flow. The model's text itself can still vary between calls, even with identical parameters and context.

- The baseline is intentionally minimal to serve as a clear control case before adding validation, tooling, and state.

- Implementation checklist at this stage:

  - Verify API call patterns.

  - Confirm message formatting.

  - Validate response parsing.
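
The checklist above can be exercised offline. The sketch below builds a Chat Completions request body (`model` plus a `messages` array of `role`/`content` objects) and parses the text out of `choices[0].message.content`. The default model name is an assumption. The network call is deliberately omitted: with the official `openai` Node package you would pass the request to `client.chat.completions.create`. The canned response is hand-written in the API's shape and is not real model output.

```typescript
// Step 0 sketch: request and response shapes for one chat-completion call.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
}

// Message formatting: a system instruction followed by the user's prompt.
// The default model name is an assumption; substitute any model you can access.
function buildChatRequest(prompt: string, model = "gpt-4o-mini"): ChatRequest {
  return {
    model,
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: prompt },
    ],
  };
}

// Response parsing: read choices[0].message.content and fail loudly otherwise.
function parseChatResponse(raw: unknown): string {
  if (typeof raw !== "object" || raw === null) throw new Error("Response is not an object");
  const choices = (raw as { choices?: unknown }).choices;
  if (!Array.isArray(choices) || choices.length === 0) throw new Error("Response has no choices");
  const first = choices[0] as { message?: { content?: unknown } } | null;
  const content = first?.message?.content;
  if (typeof content !== "string") throw new Error("First choice has no text content");
  return content;
}

// Offline usage: a hand-written object standing in for the API response.
const request = buildChatRequest("What is an AI agent?");
console.log(JSON.stringify(request));
const cannedResponse = {
  choices: [{ index: 0, message: { role: "assistant", content: "Hello from the model" }, finish_reason: "stop" }],
};
console.log(parseChatResponse(cannedResponse)); // Hello from the model
```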

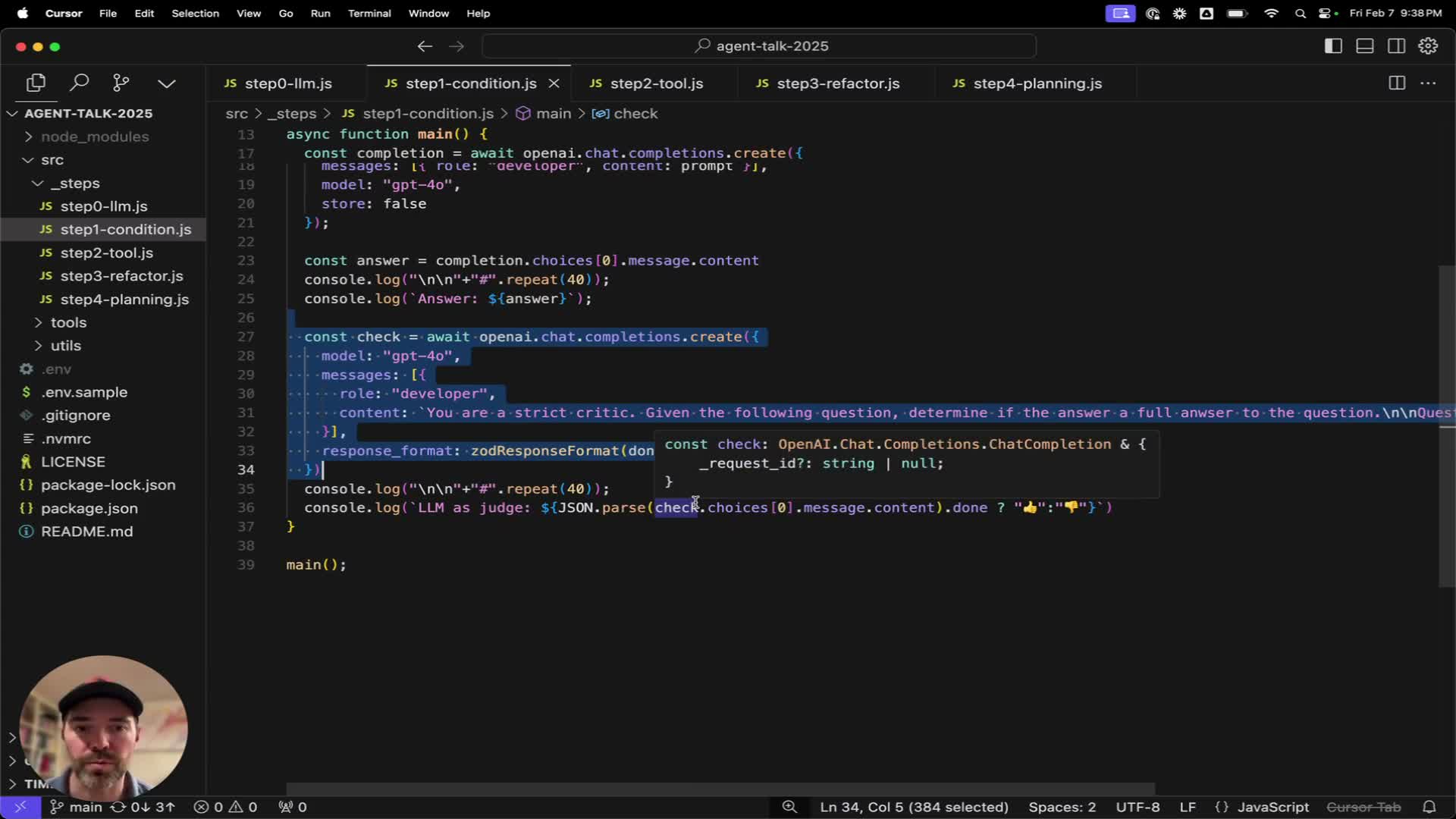

Step 1: Add a conditional evaluator LLM that judges whether the previous answer is complete.

Introduce a secondary LLM acting as a strict critic / judge to validate generated responses:

- The judge LLM evaluates the initial LLM response against a completion criterion and returns JSON that conforms to a forced schema (for example, a single Boolean field named done), so downstream client code can decide whether to continue or terminate.

- The judge is instructed to produce a JSON object with a fixed shape, which reduces ambiguity about whether the user intent has been satisfied.

Benefits and caveats:

- This two-step pattern separates generation and validation, enabling iterative or corrective behavior without hard-coded client heuristics.

- The pattern still depends on accurate judge prompts and strict schema enforcement; incorrect judge outputs or lax schema handling can produce false positives or premature termination.
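
The generate-then-judge pattern can be sketched as follows. The `judgeResponseFormat` object follows the shape of OpenAI's Structured Outputs `response_format` (`type: "json_schema"` with `strict: true`). The helper names (`parseVerdict`, `generateUntilDone`) and the stub generator and judge are hypothetical. The parser fails closed: anything other than `{"done": true}` counts as "not done", so malformed judge output cannot end the loop early.

```typescript
// Forced schema for the judge call: a single required Boolean named `done`.
const judgeResponseFormat = {
  type: "json_schema",
  json_schema: {
    name: "completion_verdict",
    strict: true,
    schema: {
      type: "object",
      properties: { done: { type: "boolean" } },
      required: ["done"],
      additionalProperties: false,
    },
  },
} as const;

// Fail closed: only an object whose `done` is exactly true counts as complete.
function parseVerdict(raw: string): boolean {
  try {
    const parsed: unknown = JSON.parse(raw);
    return typeof parsed === "object" && parsed !== null && (parsed as { done?: unknown }).done === true;
  } catch {
    return false;
  }
}

type Generate = (prompt: string, feedback: string | null) => string;
type Judge = (prompt: string, answer: string) => string; // returns raw JSON text

// Generate, ask the judge, and retry with feedback until done or out of rounds.
function generateUntilDone(generate: Generate, judge: Judge, prompt: string, maxRounds = 3): { answer: string; done: boolean } {
  let feedback: string | null = null;
  let answer = "";
  for (let round = 0; round < maxRounds; round++) {
    answer = generate(prompt, feedback);
    if (parseVerdict(judge(prompt, answer))) return { answer, done: true };
    feedback = "A reviewer judged the previous answer incomplete; expand it.";
  }
  return { answer, done: false };
}

// Offline stubs standing in for the generator and judge model calls.
const stubGenerate: Generate = (prompt, feedback) =>
  feedback === null ? `${prompt}: short answer` : `${prompt}: detailed answer`;
const stubJudge: Judge = (_prompt, answer) => JSON.stringify({ done: answer.includes("detailed") });

console.log(generateUntilDone(stubGenerate, stubJudge, "Explain agents")); // done after the second round
```

In a real request, `judgeResponseFormat` would be passed as the `response_format` field of the judge's chat-completion call.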

Tools are external functions described by a JSON schema that the LLM can request the client to call; the client must invoke these tool calls and return results to the LLM.

Tools are modeled as function-like endpoints that the client registers with the LLM:

- Each tool includes:

  - name

  - description

  - strict flag (when enabled, the model's generated arguments must conform exactly to the parameter schema)

  - parameter schema

- When the LLM decides to use a tool, it returns an object indicating which tool to call and with which parameters.

- The OpenAI SDK (and similar SDKs) does not execute arbitrary tool logic on behalf of the client. Instead, the client must:

  - parse the tool request,

  - execute the corresponding local function or external API (e.g., a Google search wrapper),

  - then append the tool response back into the conversation for the LLM to consume.

Design principles and safety:

- This pattern lets the LLM orchestrate external actions while keeping side effects and security checks under client control.

- Tool definitions need precise parameter validation to avoid runtime failures and keep tool behavior predictable for the agent.
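
Here is a sketch of the register/parse/execute/append cycle described above. It uses the OpenAI function-tool definition shape (`type: "function"` with `name`, `description`, `strict`, `parameters`), the assistant's `tool_calls` entry shape (with `arguments` as a JSON string), and the `role: "tool"` reply carrying `tool_call_id`. The `searchGoogle` implementation is a hypothetical offline stub, not a real search API.

```typescript
// OpenAI-style function tool definition; the implementation below is a stub.
const searchGoogleTool = {
  type: "function",
  function: {
    name: "searchGoogle",
    description: "Search the web and return matching results.",
    strict: true, // arguments must match the schema exactly
    parameters: {
      type: "object",
      properties: { query: { type: "string", description: "Search query" } },
      required: ["query"],
      additionalProperties: false,
    },
  },
} as const;

// Shape of one entry in the assistant message's `tool_calls` array.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments arrive as JSON text
}

// Message the client appends so the model can read the tool's result.
interface ToolMessage {
  role: "tool";
  tool_call_id: string;
  content: string;
}

type ToolImpl = (args: Record<string, unknown>) => string;

// Local implementations: the SDK only relays the request; the client runs it.
const localTools = new Map<string, ToolImpl>([
  ["searchGoogle", (args) => `Stub results for "${String(args.query)}"`],
]);

function executeToolCall(call: ToolCall): ToolMessage {
  const impl = localTools.get(call.function.name);
  let content: string;
  if (!impl) {
    content = `Error: unknown tool "${call.function.name}"`;
  } else {
    // Note: JSON.parse still throws on malformed argument text.
    const parsed: unknown = JSON.parse(call.function.arguments);
    content =
      typeof parsed === "object" && parsed !== null
        ? impl(parsed as Record<string, unknown>)
        : "Error: arguments must be a JSON object";
  }
  return { role: "tool", tool_call_id: call.id, content };
}

// Usage with a hand-written tool call in the shape the API returns.
const reply = executeToolCall({
  id: "call_1",
  type: "function",
  function: { name: "searchGoogle", arguments: '{"query":"agents without a framework"}' },
});
console.log(reply.content); // Stub results for "agents without a framework"
```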

Tool execution can fail due to schema validation or parameter constraints, exposing the need for robust error handling.

A concrete failure mode was demonstrated: an unhandled promise rejection caused by a schema validation constraint on a tool's location parameter.

- Tool schemas may restrict allowable strings; improper inputs must be caught and handled by client code.

- The judge LLM may still assert completion even if a tool call failed, so you must reconcile LLM judgments with observable tool execution outcomes.

Practical mitigations:

- Design retry, fallback, and error-reporting strategies.

- Ensure tool parameter schemas are compatible with likely LLM outputs (or sanitize LLM outputs before calling tools).

- Proper validation prevents brittle behavior and reduces false-positive completion signals from the judge.
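
The mitigations above can be combined into a small sketch. It sanitizes likely LLM output, enforces a parameter constraint, turns every failure into data instead of an unhandled rejection, and accepts a judge's "done" only if the observed tool outcomes agree. The `searchLocal` tool, the allowed locations, and the helper names are all hypothetical. Returning the error text in the `tool` message lets the model retry with corrected arguments.

```typescript
interface ToolOutcome {
  toolCallId: string;
  ok: boolean;
  content: string;
}

// Hypothetical constraint: the tool only accepts these exact location strings.
const ALLOWED_LOCATIONS = ["San Francisco, CA", "New York, NY"];

// Sanitize likely LLM output (whitespace, casing) before enforcing the constraint.
function normalizeLocation(value: unknown): string {
  if (typeof value !== "string") throw new Error("location must be a string");
  const wanted = value.trim().toLowerCase();
  const match = ALLOWED_LOCATIONS.find((loc) => loc.toLowerCase() === wanted);
  if (match === undefined) {
    throw new Error(`location "${value.trim()}" must be one of: ${ALLOWED_LOCATIONS.join("; ")}`);
  }
  return match;
}

// Hypothetical async tool; a real one would call an external API here.
async function searchLocal(args: Record<string, unknown>): Promise<string> {
  const location = normalizeLocation(args.location);
  return `Stub local results near ${location}`;
}

// Catch bad JSON, failed validation, and rejected promises, and report them as data.
async function safeExecute(toolCallId: string, rawArgs: string): Promise<ToolOutcome> {
  try {
    const args = JSON.parse(rawArgs) as Record<string, unknown>;
    return { toolCallId, ok: true, content: await searchLocal(args) };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { toolCallId, ok: false, content: `Tool error: ${message}` };
  }
}

// Accept the judge's "done" only if every tool call actually succeeded.
function reconcile(judgeSaysDone: boolean, outcomes: ToolOutcome[]): boolean {
  return judgeSaysDone && outcomes.every((o) => o.ok);
}

Promise.all([
  safeExecute("call_a", '{"location": " new york, ny "}'),
  safeExecute("call_b", '{"location": "Atlantis"}'),
]).then((outcomes) => {
  outcomes.forEach((o) => console.log(o.toolCallId, o.ok, o.content));
  console.log("accept judge verdict:", reconcile(true, outcomes)); // false, because call_b failed
});
```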

Step 3: Refactor to support parallel tool calls and attach tool-call identifiers for tracing.

Refactor: move the complete-with-tools logic into a utility module and add parallel execution semantics.

Key changes and rationale:

- Support multiple tool invocations per LLM turn and execute them concurrently (for example, using Promise.all) to increase throughput.

- Associate each tool invocation with a tool-call ID so the client can include that ID when appending the tool response back into the conversation; the LLM can then correlate responses to requests.

- Treat tools conceptually as text transformations (typically string-to-string) and standardize how results are serialized into the conversational context.

- This refactor enables cases where the LLM asks the same tool to be called multiple times with different parameters and improves overall performance and traceability.
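
A minimal sketch of the refactor follows. All tool calls from one model turn start concurrently via `Promise.all`, and each result is returned as a `tool` message carrying its `tool_call_id`. Tools are treated as string-to-string functions (JSON arguments in, text out). The `searchGoogle` entry is a hypothetical stub, and `runToolCalls` is an illustrative name rather than the talk's utility module.

```typescript
interface ToolCall {
  id: string;
  function: { name: string; arguments: string };
}

interface ToolMessage {
  role: "tool";
  tool_call_id: string;
  content: string;
}

// Each tool is a string-to-string transformation: JSON arguments in, text out.
type AsyncTool = (argsJson: string) => Promise<string>;

// Hypothetical stub standing in for a real search wrapper.
const toolRegistry = new Map<string, AsyncTool>([
  [
    "searchGoogle",
    async (argsJson) => {
      const { query } = JSON.parse(argsJson) as { query: string };
      return `results for ${query}`;
    },
  ],
]);

async function runToolCalls(calls: ToolCall[]): Promise<ToolMessage[]> {
  // Start every call at once; Promise.all keeps results in the order of `calls`.
  return Promise.all(
    calls.map(async (call): Promise<ToolMessage> => {
      const tool = toolRegistry.get(call.function.name);
      let content: string;
      try {
        content = tool
          ? await tool(call.function.arguments)
          : `Error: unknown tool ${call.function.name}`;
      } catch (err) {
        content = `Error: ${err instanceof Error ? err.message : String(err)}`;
      }
      // Echo the id so the model can match this result to its request.
      return { role: "tool", tool_call_id: call.id, content };
    }),
  );
}

// The same tool requested twice with different parameters in one turn.
runToolCalls([
  { id: "call_1", function: { name: "searchGoogle", arguments: '{"query":"agent loops"}' } },
  { id: "call_2", function: { name: "searchGoogle", arguments: '{"query":"tool calling"}' } },
]).then((messages) => console.log(messages));
```

With the OpenAI API, the assistant message containing `tool_calls` must already be in the conversation before these `tool` messages are appended after it.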

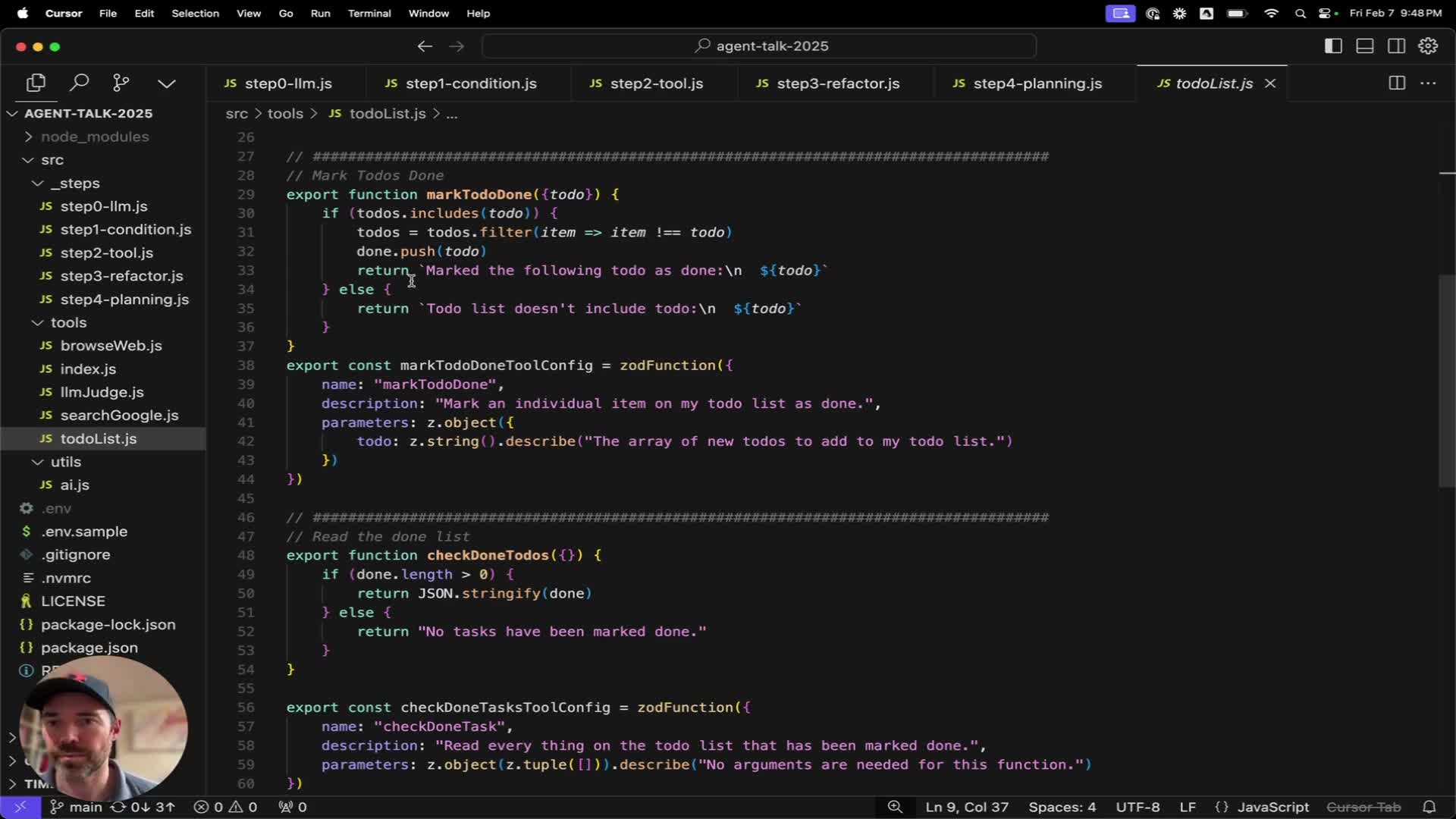

Step 4: Implement explicit planning via a to-do list to provide readable/writable state and drive multi-step task execution.

Introduce an explicit to-do list as a manipulable memory structure that consolidates planning, iteration control, and state persistence:

- Operational model:

  - The LLM is prompted to always create a plan by writing to the to-do list before performing actions.

  - Client-side tools implement operations with defined parameter schemas:

    - add_todo

    - mark_done

    - check_done

    - list_todos


- The to-do list acts as both the planner and the working memory for multi-step workflows:

  - Tasks can be added, marked done, listed, and queried via tool endpoints.

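
The to-do list can be sketched as a small class exposed through the four tool names listed above, plus a dispatcher that routes a model's tool call to the matching method. The parameter names (`task`, `id`), the result strings, and the reading of check_done as "are all items finished?" are assumptions, not the talk's exact definitions. The method names are snake_case only to mirror the tool names.

```typescript
interface Todo {
  id: number;
  task: string;
  done: boolean;
}

class TodoList {
  private items: Todo[] = [];
  private nextId = 1;

  add_todo(task: string): string {
    const todo: Todo = { id: this.nextId++, task, done: false };
    this.items.push(todo);
    return `Added #${todo.id}: ${task}`;
  }

  mark_done(id: number): string {
    const todo = this.items.find((t) => t.id === id);
    if (todo === undefined) return `Error: no to-do #${id}`;
    todo.done = true;
    return `Marked #${id} done`;
  }

  // Assumed semantics: report whether every planned item is finished.
  check_done(): string {
    if (this.items.length === 0) return "No to-dos yet: write a plan first";
    const remaining = this.items.filter((t) => !t.done).length;
    return remaining === 0 ? "All to-dos are done" : `${remaining} to-do(s) remaining`;
  }

  list_todos(): string {
    if (this.items.length === 0) return "(no to-dos)";
    return this.items.map((t) => `[${t.done ? "x" : " "}] #${t.id} ${t.task}`).join("\n");
  }

  // Client-side view of the same state, usable as a loop exit condition.
  get complete(): boolean {
    return this.items.length > 0 && this.items.every((t) => t.done);
  }
}

// Route a model's tool call (name plus JSON arguments) to the matching method.
function dispatchTodoTool(list: TodoList, toolName: string, argsJson: string): string {
  const parsed: unknown = JSON.parse(argsJson);
  const args = (typeof parsed === "object" && parsed !== null ? parsed : {}) as { task?: unknown; id?: unknown };
  switch (toolName) {
    case "add_todo":
      return typeof args.task === "string" ? list.add_todo(args.task) : "Error: task must be a string";
    case "mark_done":
      return typeof args.id === "number" ? list.mark_done(args.id) : "Error: id must be a number";
    case "check_done":
      return list.check_done();
    case "list_todos":
      return list.list_todos();
    default:
      return `Error: unknown tool ${toolName}`;
  }
}

const plan = new TodoList();
dispatchTodoTool(plan, "add_todo", '{"task": "Search for agent tutorials"}');
dispatchTodoTool(plan, "add_todo", '{"task": "Summarize findings"}');
dispatchTodoTool(plan, "mark_done", '{"id": 1}');
console.log(dispatchTodoTool(plan, "list_todos", "{}"));
console.log(dispatchTodoTool(plan, "check_done", "{}")); // 1 to-do(s) remaining
```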

Guardrails and recommendations:

- Use maximum iteration counts, context pruning policies, or other termination heuristics to prevent infinite loops or non-converging invocation patterns.
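
The guardrails can be sketched as a loop with three exits: the model reports success, a maximum iteration count is reached, or the same action repeats three times in a row (a simple non-convergence heuristic). A pruning step keeps the original task plus only the most recent turns. The `Step` signature, the default budgets, and the pruning rule are illustrative assumptions.

```typescript
interface Turn {
  role: "user" | "assistant" | "tool";
  content: string;
}

// One model step: propose an action and say whether the task is finished.
type Step = (context: Turn[]) => { action: string; done: boolean };

// Context pruning: keep the original task plus the last `keepLast` turns.
function pruneContext(history: Turn[], keepLast: number): Turn[] {
  if (history.length <= keepLast + 1) return history;
  return [history[0], ...history.slice(history.length - keepLast)];
}

function runBounded(
  step: Step,
  task: string,
  maxIterations = 10,
  keepLast = 6,
): { status: "done" | "max_iterations" | "stuck"; iterations: number } {
  let history: Turn[] = [{ role: "user", content: task }];
  let previousAction = "";
  let repeats = 0;
  for (let i = 1; i <= maxIterations; i++) {
    const { action, done } = step(pruneContext(history, keepLast));
    if (done) return { status: "done", iterations: i };
    // Count consecutive repeats of the same action.
    repeats = action === previousAction ? repeats + 1 : 0;
    if (repeats >= 2) return { status: "stuck", iterations: i }; // same action three times in a row
    previousAction = action;
    history = [...history, { role: "assistant", content: action }];
  }
  return { status: "max_iterations", iterations: maxIterations };
}

// Usage with stub steps in place of real model calls.
let stepCalls = 0;
const finishesOnFourth: Step = () => {
  stepCalls += 1;
  return { action: `search ${stepCalls}`, done: stepCalls === 4 };
};
console.log(runBounded(finishesOnFourth, "plan a date")); // status "done" after 4 iterations
const repeatsItself: Step = () => ({ action: "search the same thing", done: false });
console.log(runBounded(repeatsItself, "plan a date").status); // stuck
```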

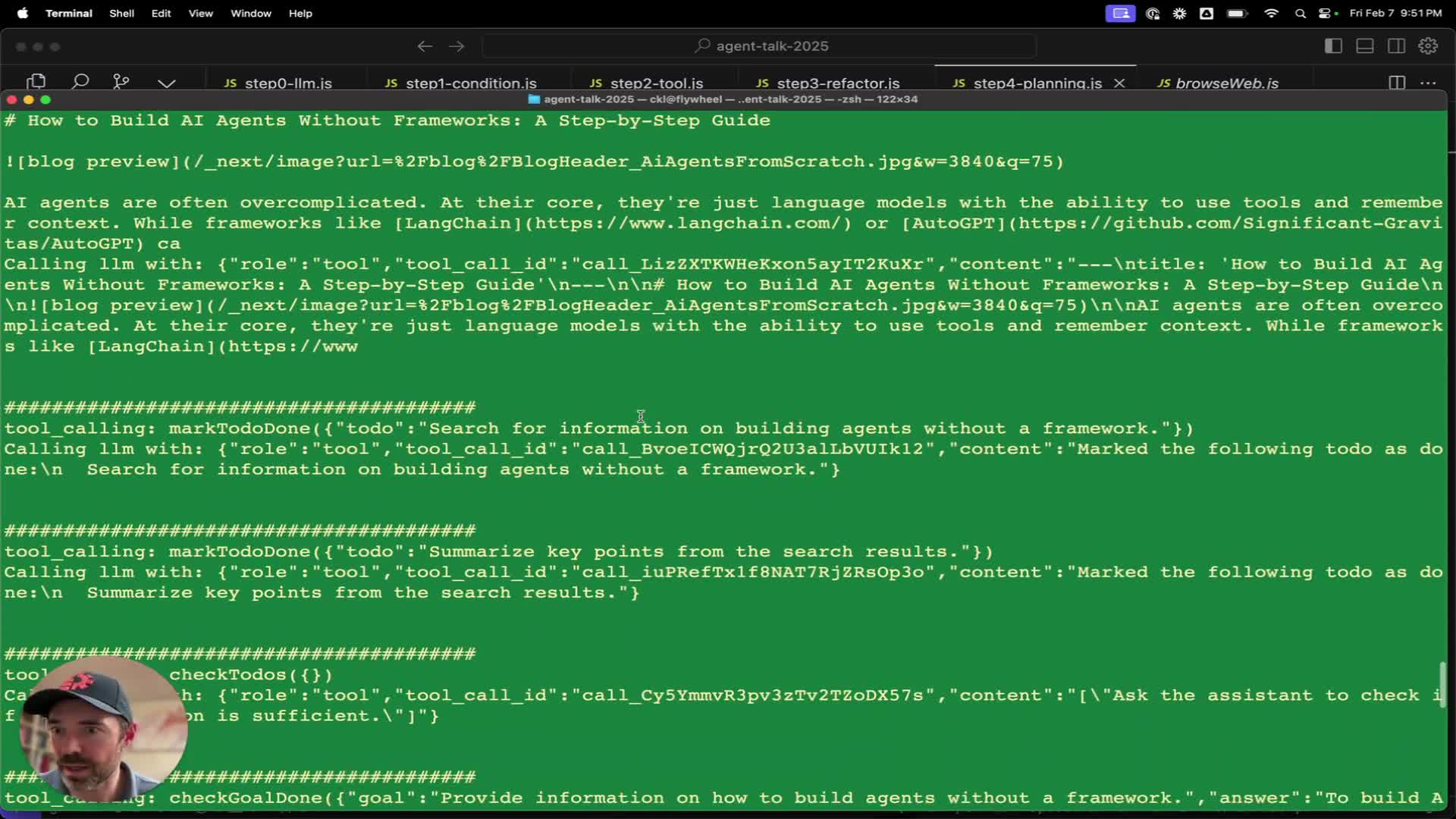

Example run: the agent used the to-do planner with search and browse tools to gather and summarize information about building agents without a framework.

Example: running a to-do-driven workflow to answer “how to build agents without a framework”:

- The agent generated a plan (search, summarize, check sufficiency) and wrote it to the to-do list.

- It invoked searchGoogle and browseWeb tools, ingested returned markdown, summarized key points, and marked relevant to-dos done.

- The agent delegated final goal verification to the judge tool.

Outcome and takeaways:

- The pipeline produced a concise summary enumerating core recommendations:

  - Define tools

  - Maintain memory for context

  - Implement a loop for iteration

  - Employ prompt engineering

  - Prefer direct API/state storage for certain operations

- This example illustrates how planning, tooling, and evaluation work together to produce coherent multi-step answers and shows the value of serializing intermediate results back into the conversation for traceability and re-evaluation by the LLM.

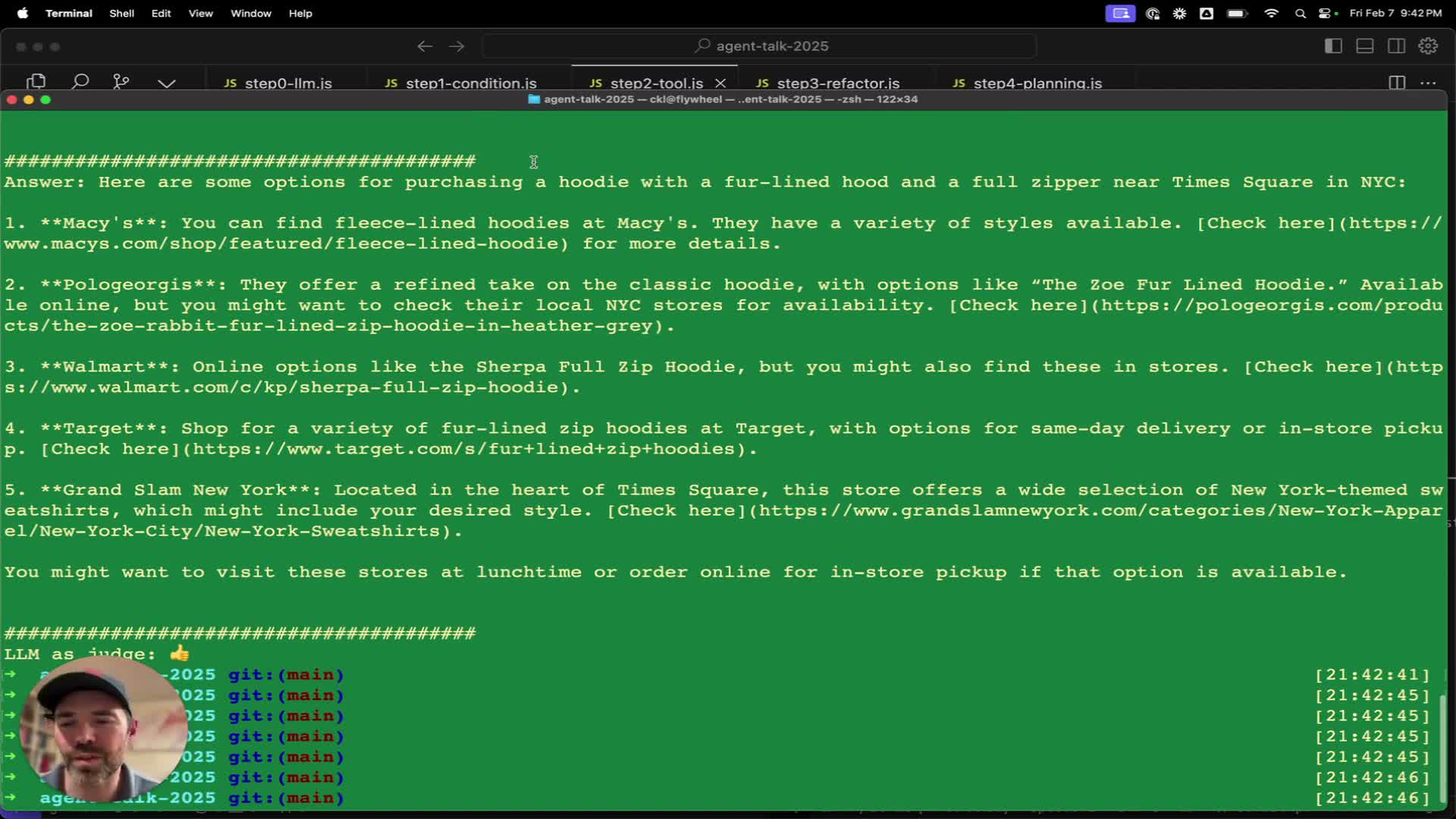

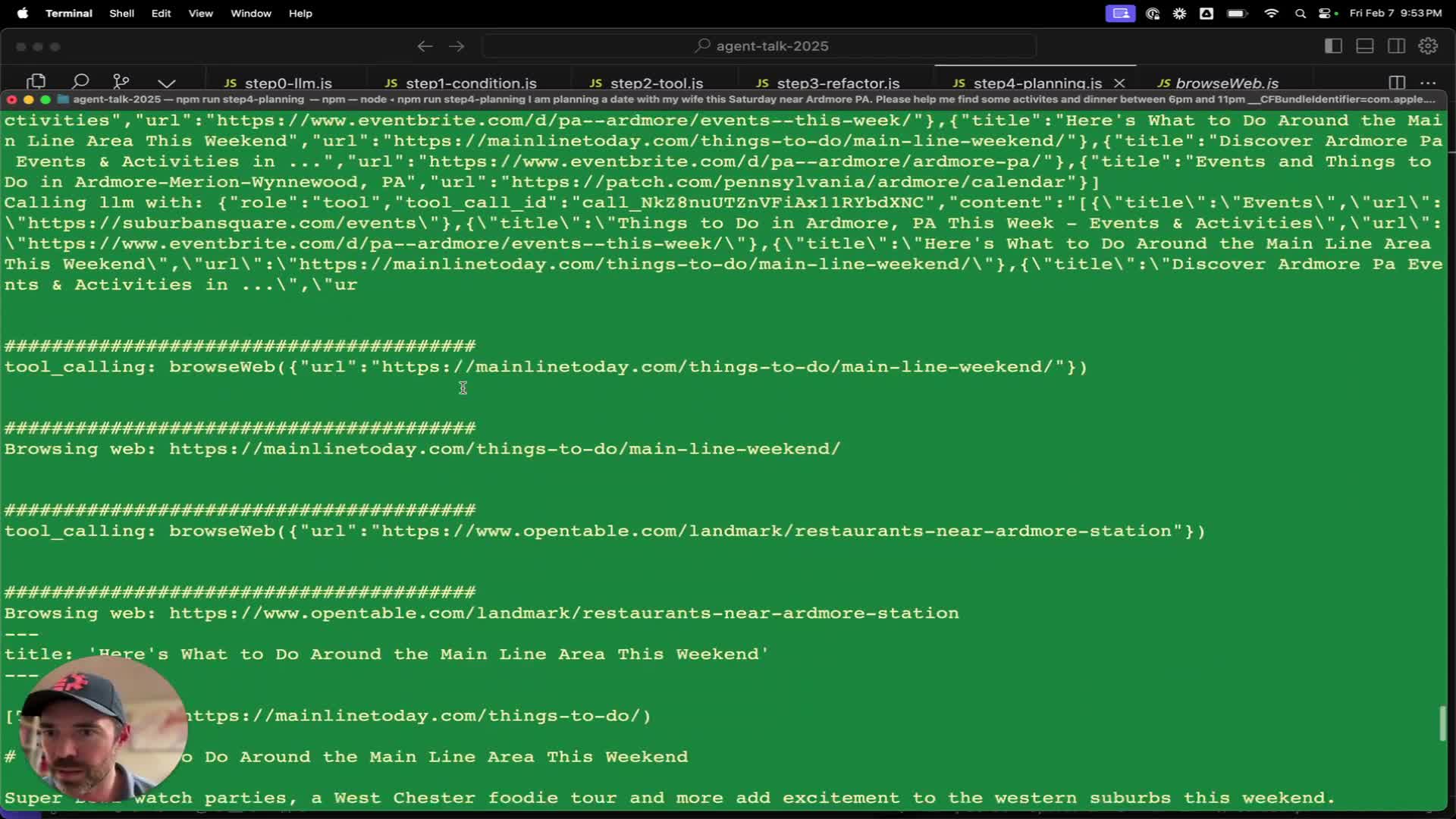

Practical example: the agent coordinated multiple searches and browsing steps to plan a local date and dining options.

User example: planning a date within a specified time window — demonstrates multi-step orchestration:

- The agent decomposed the goal into to-dos (activities, timing, dinner).

- It executed local searches for activities and restaurants, browsed web pages to extract markdown summaries, and marked tasks complete as content was collected.

- The agent compiled candidate activities and restaurant options and returned a prioritized suggestion list to the user.

Lessons learned:

- The run is not flawless but shows how incremental enhancements to tools, schemas, and memory rapidly improve practical utility.

- Implementers should iteratively refine tool parameter schemas and browsing parsers to increase reliability.

Next steps: integrate vector databases or RAG pipelines, iterate on agent design, and leverage the published code and slides for further experimentation.

Next steps and recommended extensions to the architecture:

- Persist browsed content and tool outputs into a vector database (for example, Chroma) to enable retrieval-augmented generation (RAG) and longer-term memory across sessions.

- Injecting scraped pages into an embedding store allows:

  - Semantic retrieval of past results,

  - Reduced context window pressure,

  - Improved factual grounding of agent responses.
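
To show the store-then-retrieve idea without external services, here is a toy in-memory sketch. `toyEmbed` hashes words into a fixed-size count vector, standing in for a real embedding model: it only matches shared words, not meaning. The `ToyVectorStore` class stands in for a vector database such as Chroma. Retrieval ranks stored pages by cosine similarity to the query, and the top hits would be injected into the prompt instead of whole pages.

```typescript
// Toy stand-ins: a word-hashing "embedding" and an in-memory store.
const DIMS = 64;

function toyEmbed(text: string): number[] {
  const vec = new Array<number>(DIMS).fill(0);
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    let h = 0;
    for (let i = 0; i < word.length; i++) h = (h * 31 + word.charCodeAt(i)) % DIMS;
    vec[h] += 1; // count each word in its hashed bucket
  }
  return vec;
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

interface StoredDoc {
  id: string;
  text: string;
  vector: number[];
}

class ToyVectorStore {
  private docs: StoredDoc[] = [];

  add(id: string, text: string): void {
    this.docs.push({ id, text, vector: toyEmbed(text) });
  }

  // Return the k stored documents most similar to the question.
  query(question: string, k = 2): StoredDoc[] {
    const q = toyEmbed(question);
    return this.docs
      .map((doc) => ({ doc, score: cosine(q, doc.vector) }))
      .sort((a, b) => b.score - a.score)
      .slice(0, k)
      .map((r) => r.doc);
  }
}

const store = new ToyVectorStore();
store.add("page-1", "Restaurants near the park with outdoor dinner seating");
store.add("page-2", "How to build an agent loop with tools and memory");
store.add("page-3", "Museum opening hours and ticket prices");
console.log(store.query("agent memory and tools", 1).map((d) => d.id)); // [ 'page-2' ]
```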

Closing call to action:

- Experiment with the open-sourced code, contribute improvements, or provide feedback.

- The provided slides and code links plus contact information support follow-up collaboration.

- These next steps position the basic agent components for production-grade improvements such as better grounding, state management, and iterative evaluation.
