Agent 08 - Building Game Simulation Agents

- Course introduction and objectives

- Course lesson plan and structure

- Interactive simulation demo and learning motivation

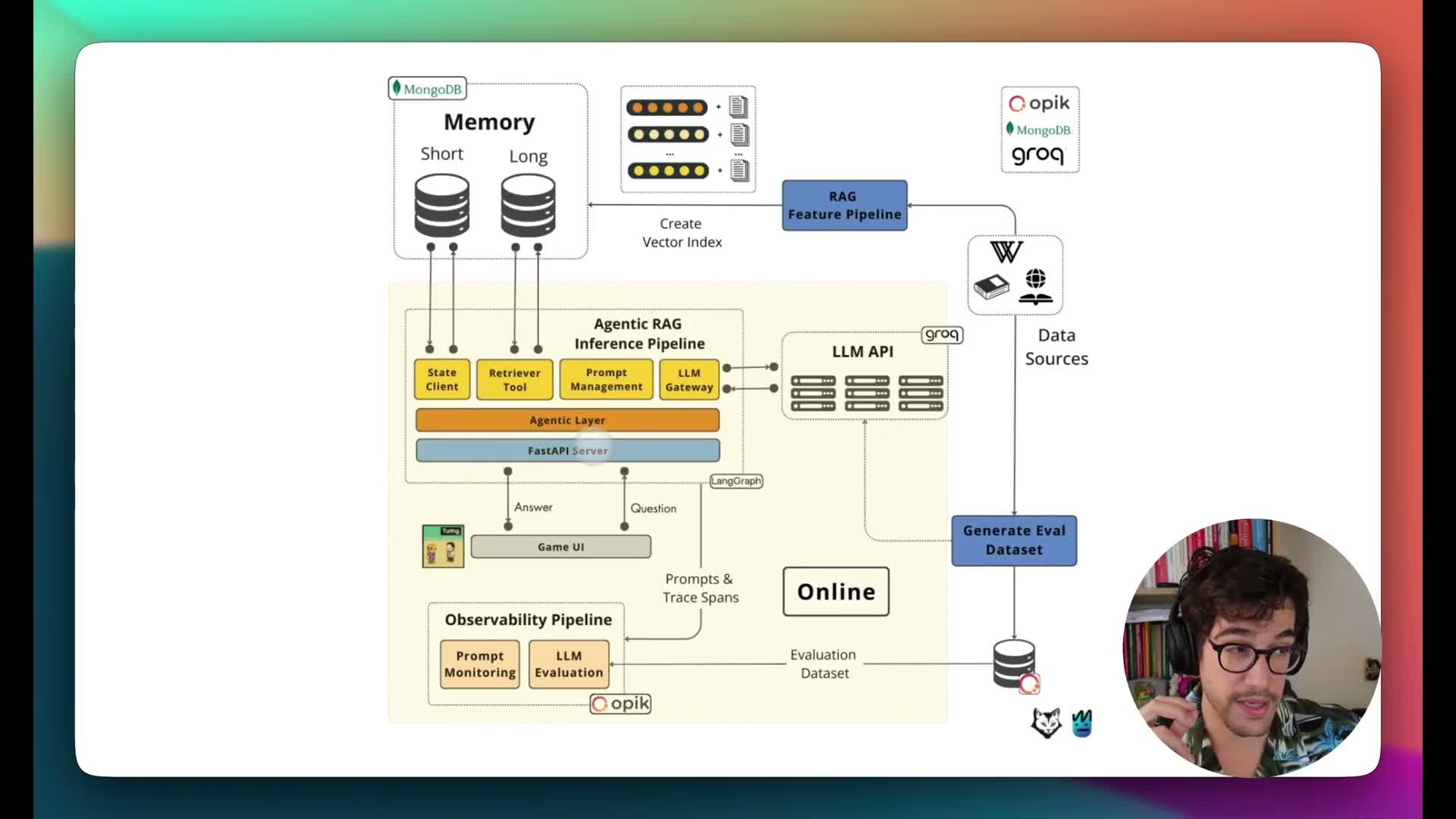

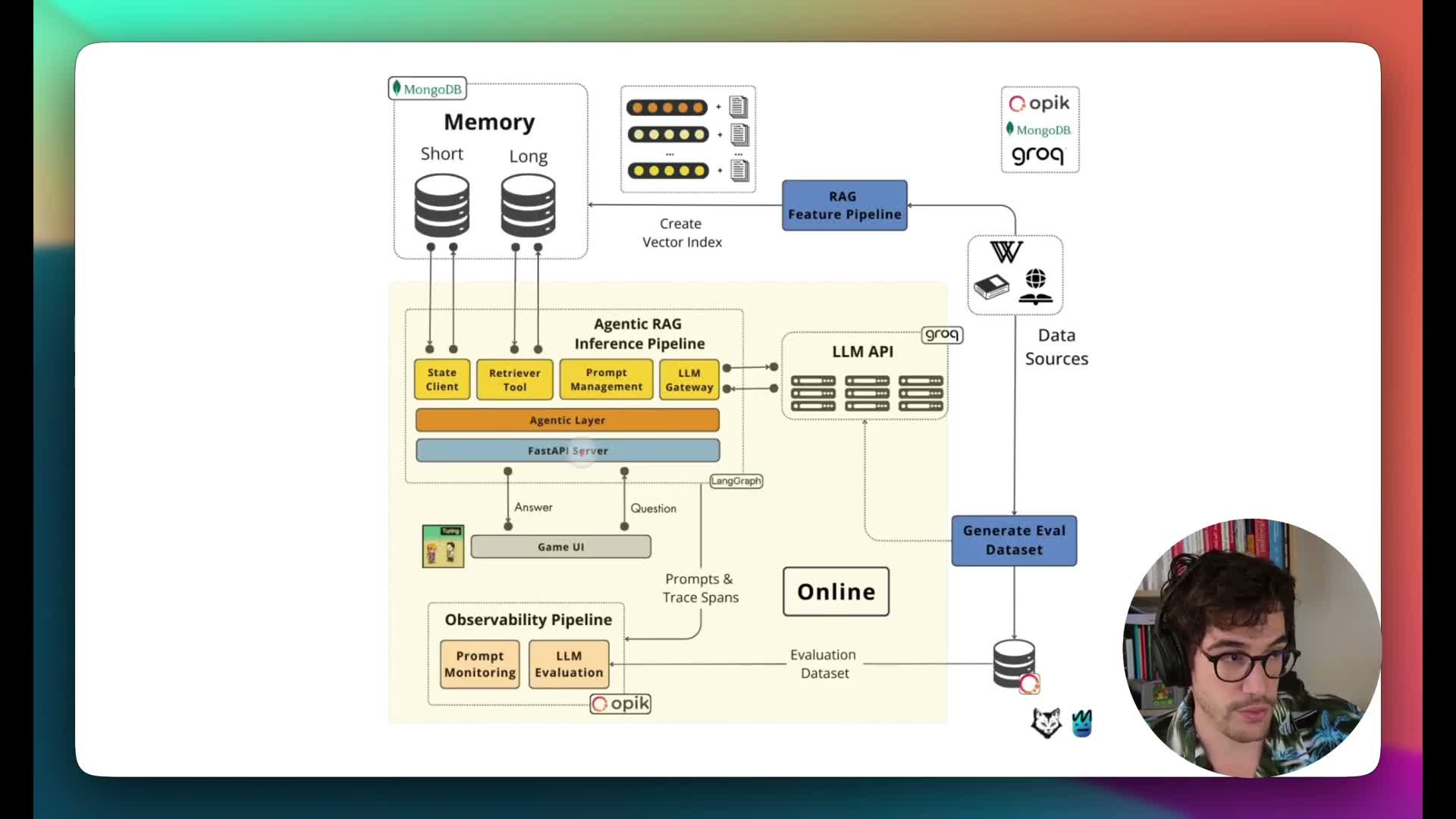

- Lesson 1 introduction and architecture overview

- Offline pipeline and long-term memory population

- Evaluation dataset generation and Opik integration

- Runtime components: UI, API, agentic layer and LLM gateway

- Three-component flow and tool-enabled response example

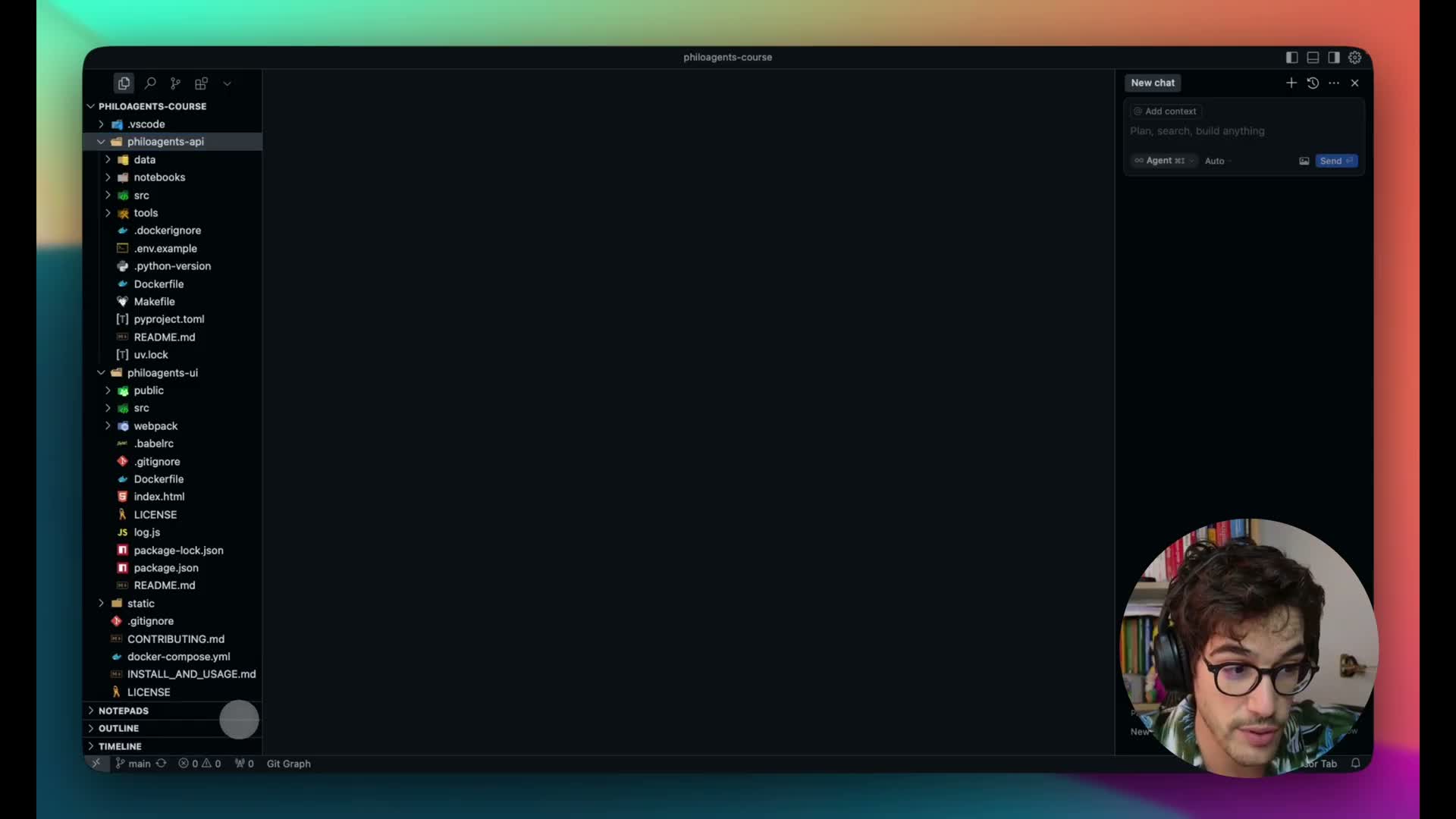

- Repository layout, cloning and development environment

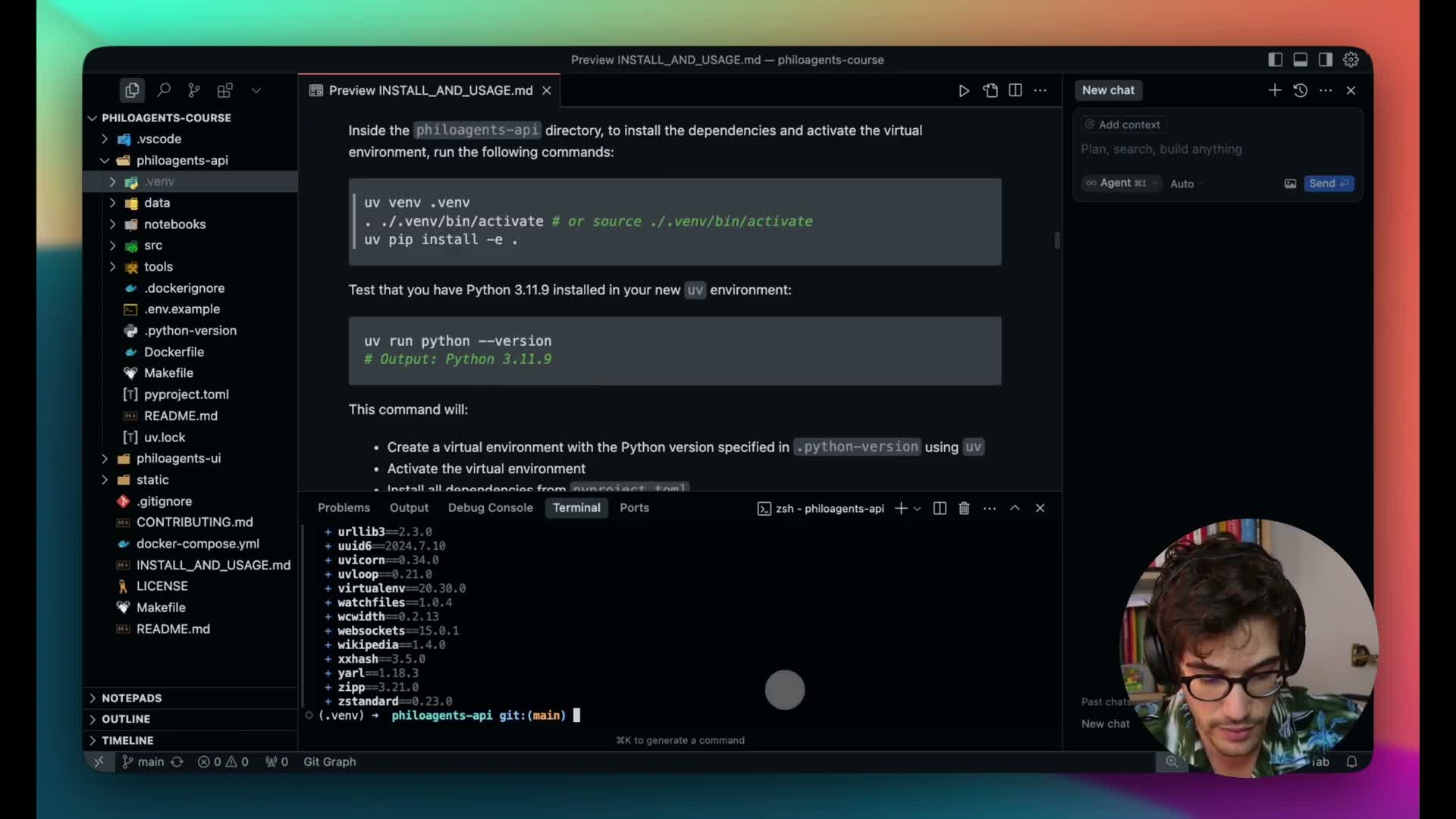

- Installing dependencies, environment variables and local infra

- Game UI walkthrough and interactive agent demo

- UI internals: dialogue manager, WebSocket service and API binding

- Philosopher domain model, prompt templates and state checkpointing

- LangGraph Studio visualization and conversation node behavior

- Implementation of nodes, chains and RAG loop in code

- Short-term memory concept and storage model

- Notebook demo comparing no-memory vs persisted memory

- Long-term memory purpose and ingestion pipeline

- Building the long-term memory toolchain and persistent index

- Runtime retrieval behavior and example queries

- WebSockets rationale for real-time agentic systems

- FastAPI WebSocket implementation and client integration

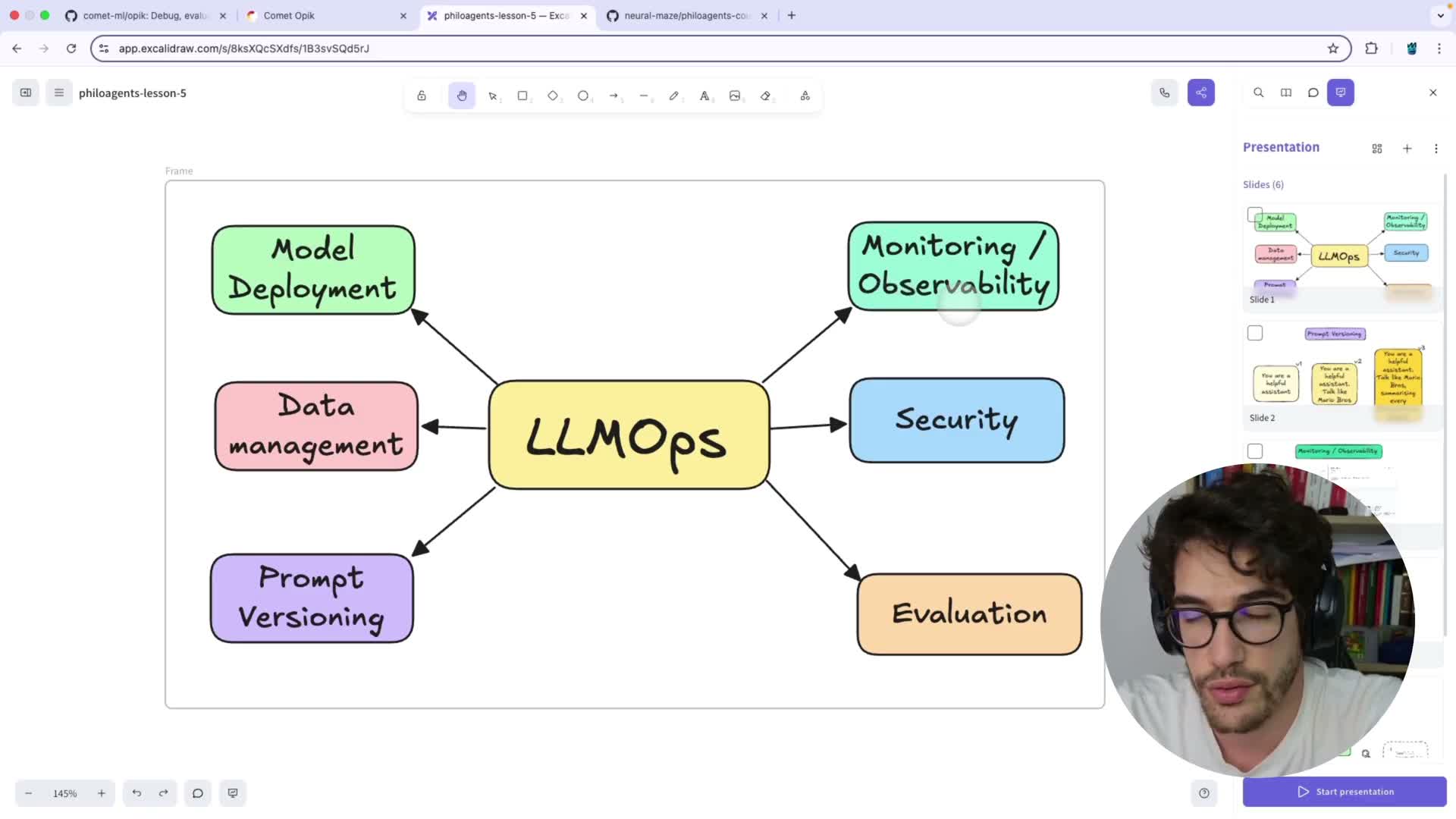

- LM-Ops definition and major components

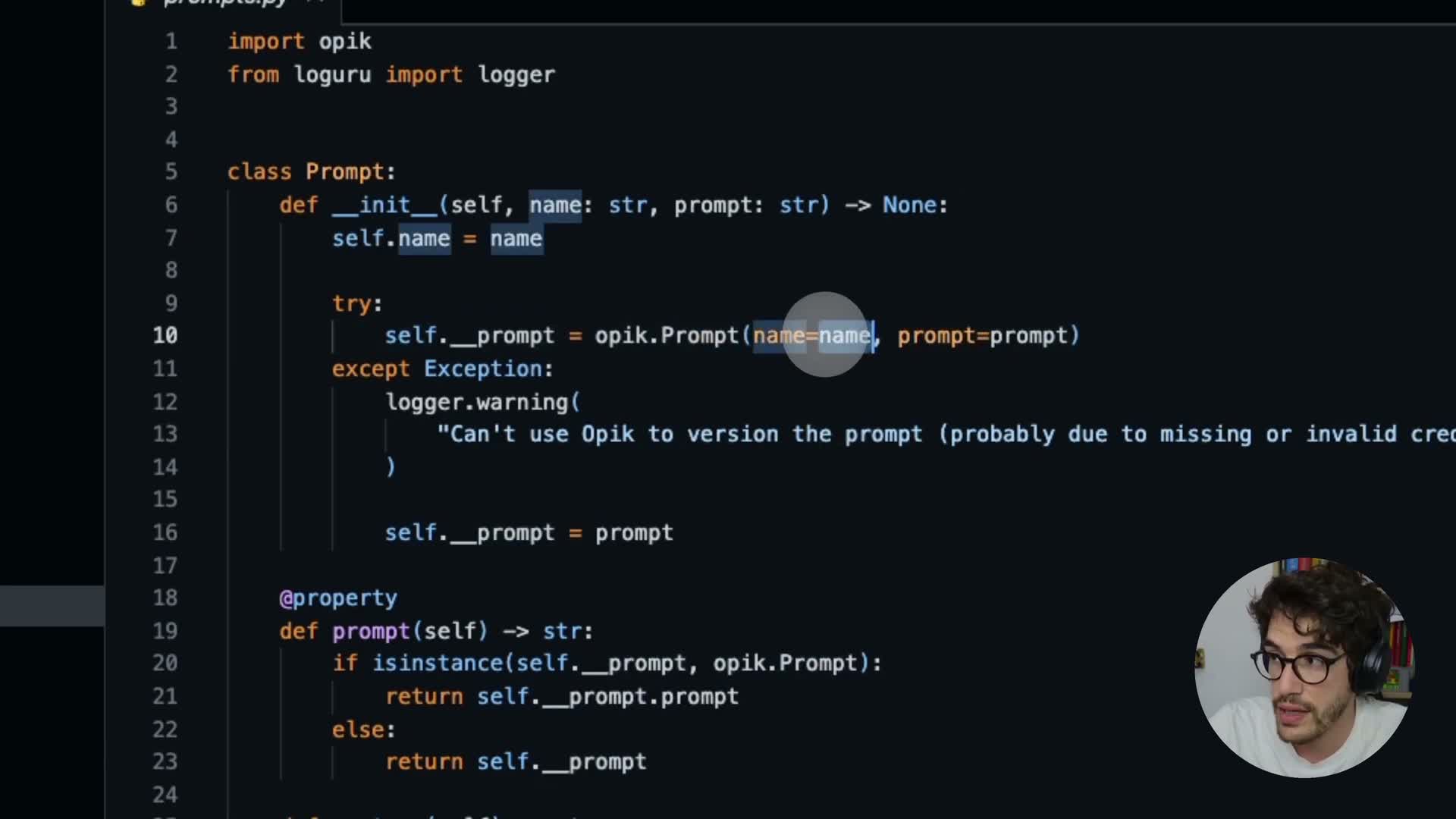

- Prompt versioning workflow with Opic

- Monitoring and observability via Opic traces

- Evaluation dataset generation pipeline using a large LLM

- Automated evaluation metrics and Opic-driven scoring

Course introduction and objectives

The course introduces an open-source project for building an AI agent simulation engine that brings historical figures to life inside an interactive game environment.

It emphasizes end-to-end engineering practices beyond pure model development, including:

- Robust memory systems with MongoDB for short- and long-term state

- Agentic workflow orchestration using LangGraph

- LLM inference via Groq (with Llama 3.3 70B used for dialogue)

- Deployment with FastAPI and WebSockets for real-time communication

- Observability and LLMOps tooling for tracing, evaluation, and monitoring

The curriculum targets production-ready concerns such as:

- API / UX integration and prompt/version management

- Containerization with Docker and local/cloud deployment practices

- Monitoring and reliability for real-world usage

Participants work through a complete, deployable agentic application stack rather than isolated toy examples.

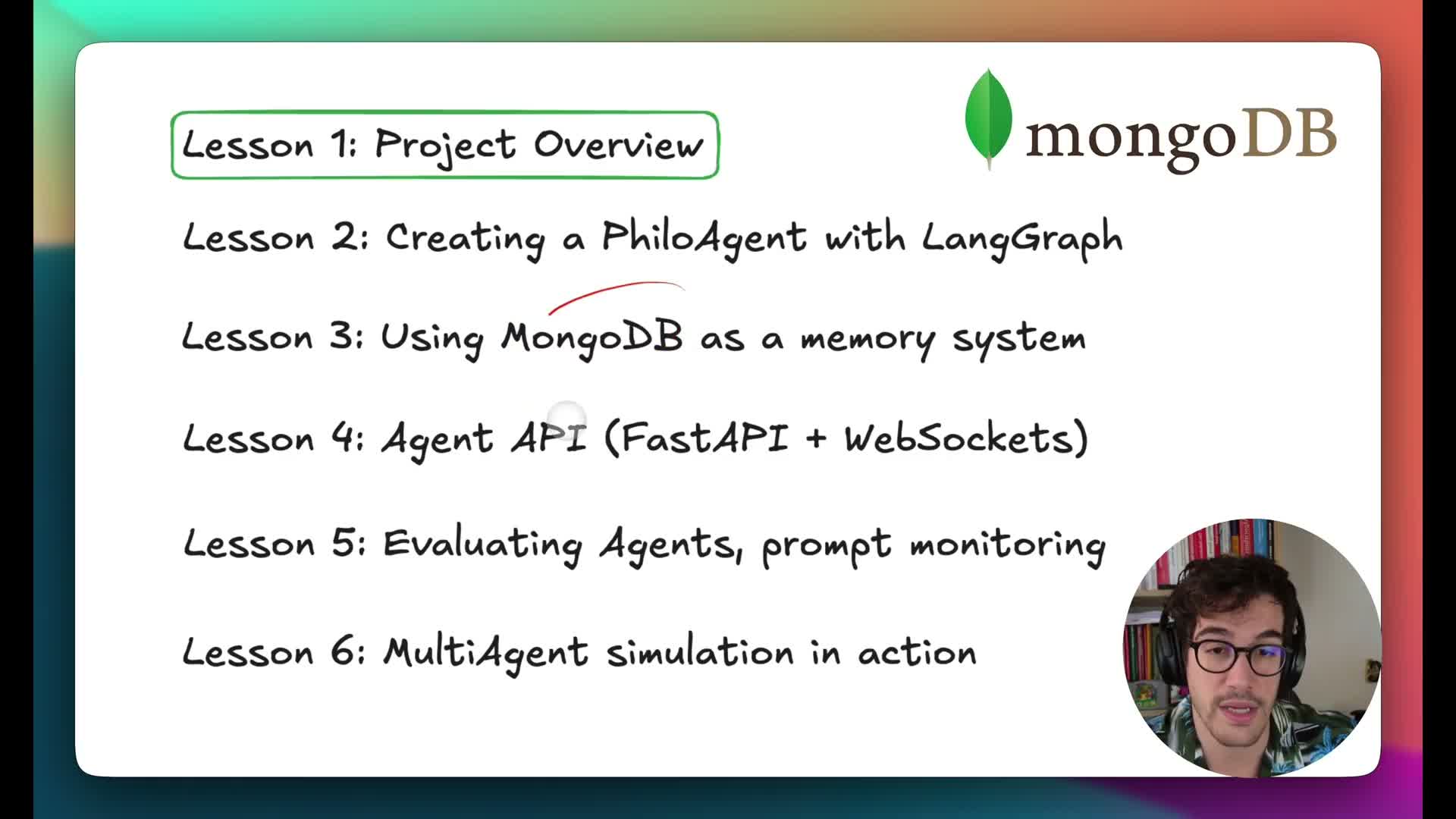

Course lesson plan and structure

The course is organized into a sequence of lessons that each focus on a specific system layer:

- Architecture & UI / API design: overall system separation and responsibilities

- Agent workflow construction with LangGraph: graph-based agent orchestration

- Short-term & long-term memory design (MongoDB): persistence and retrieval strategies

- Real-time API integration (FastAPI + WebSockets): streaming and low-latency interaction

- LLMOps evaluation and monitoring (Opik): tracing, prompt versioning, and metrics

Each lesson includes practical artifacts to support hands-on learning:

- Code, Jupyter notebooks, and guided exercises

- Local-first replication steps and cloud deployment pointers

- A modular structure that supports incremental validation of each component

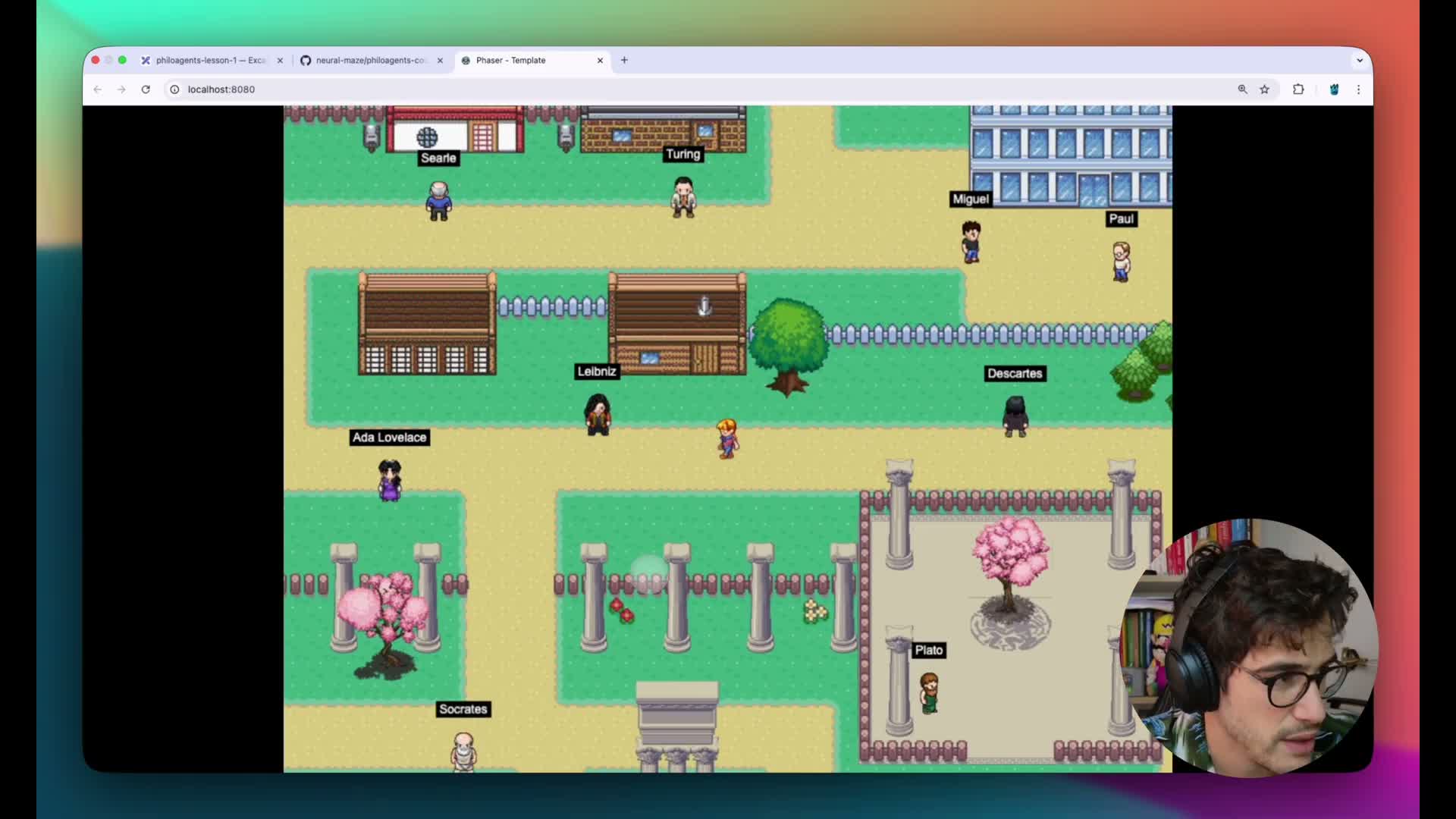

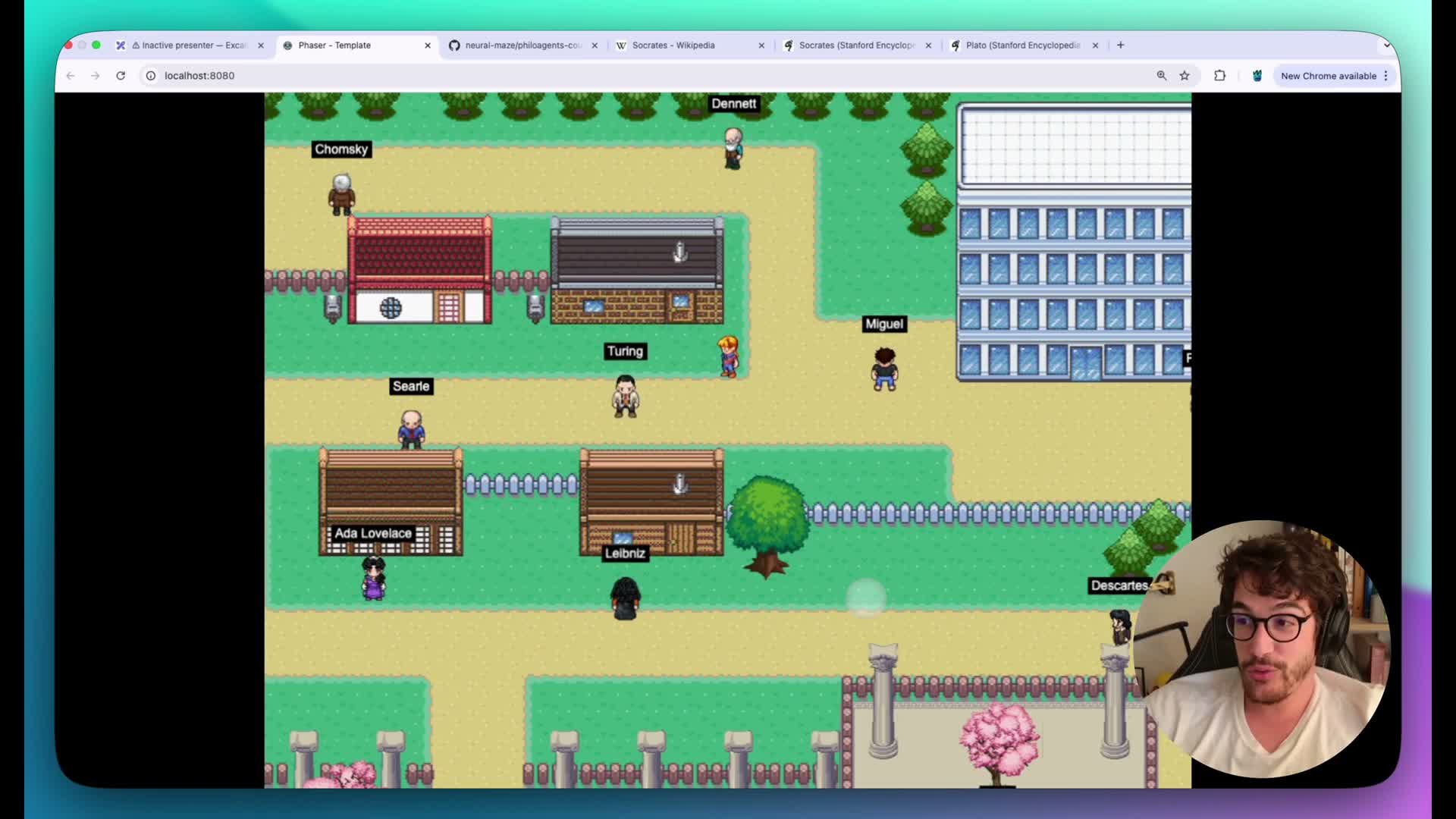

Interactive simulation demo and learning motivation

An interactive demo motivates the engineering concepts by showing AI agents impersonating philosophers in a browser-based game:

- Players interact with NPC philosophers (e.g., Plato, Aristotle, Turing) in a village scene

- The demo highlights core techniques: memory, retrieval-augmented generation (RAG), workflow orchestration, and real-time streaming

- Agents are grounded in authoritative sources to produce richer, historically coherent dialogues

- The demo sets expectations for the end-to-end learning outcome: a simulation that is fun, interactive, and technically realistic

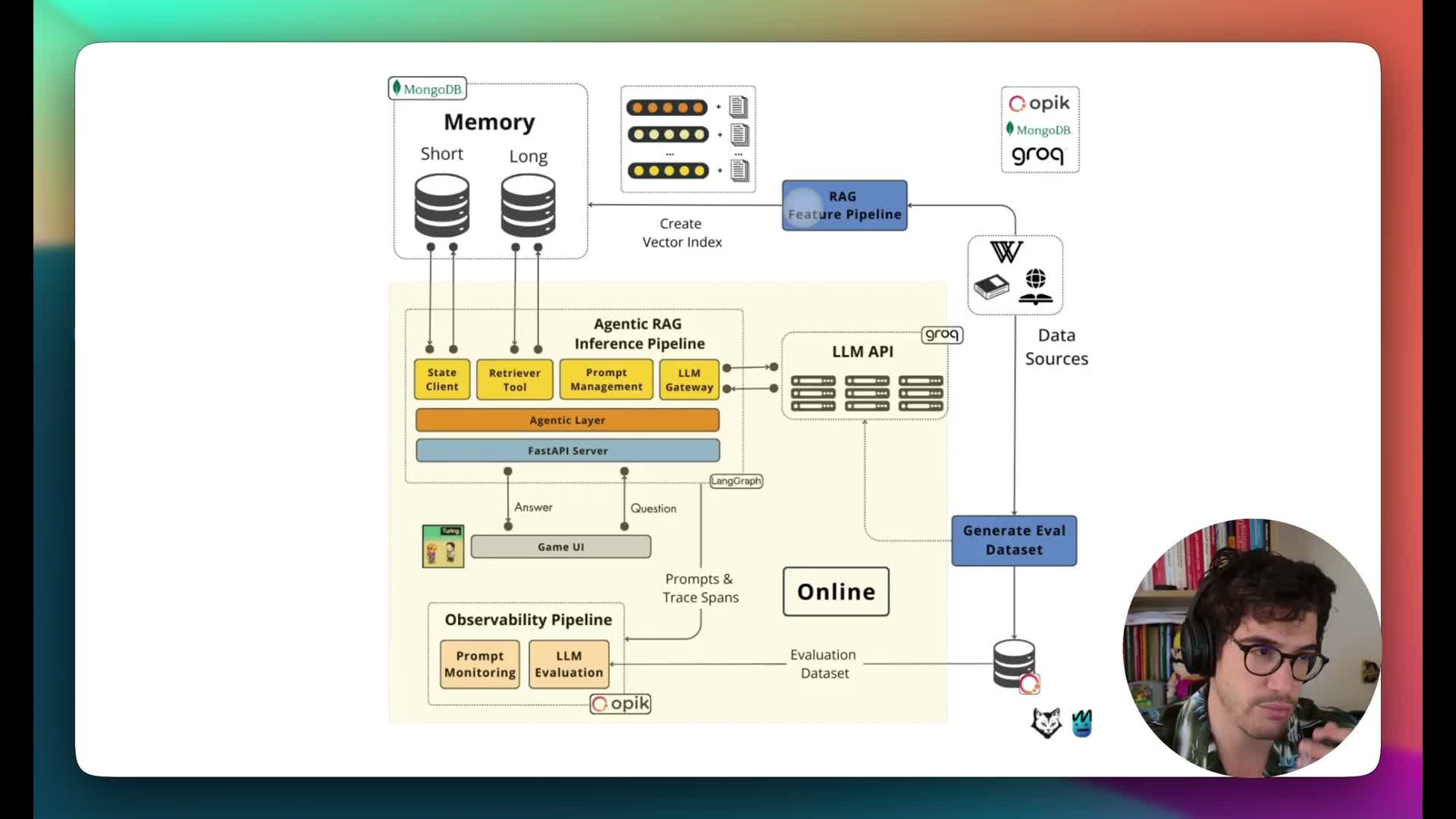

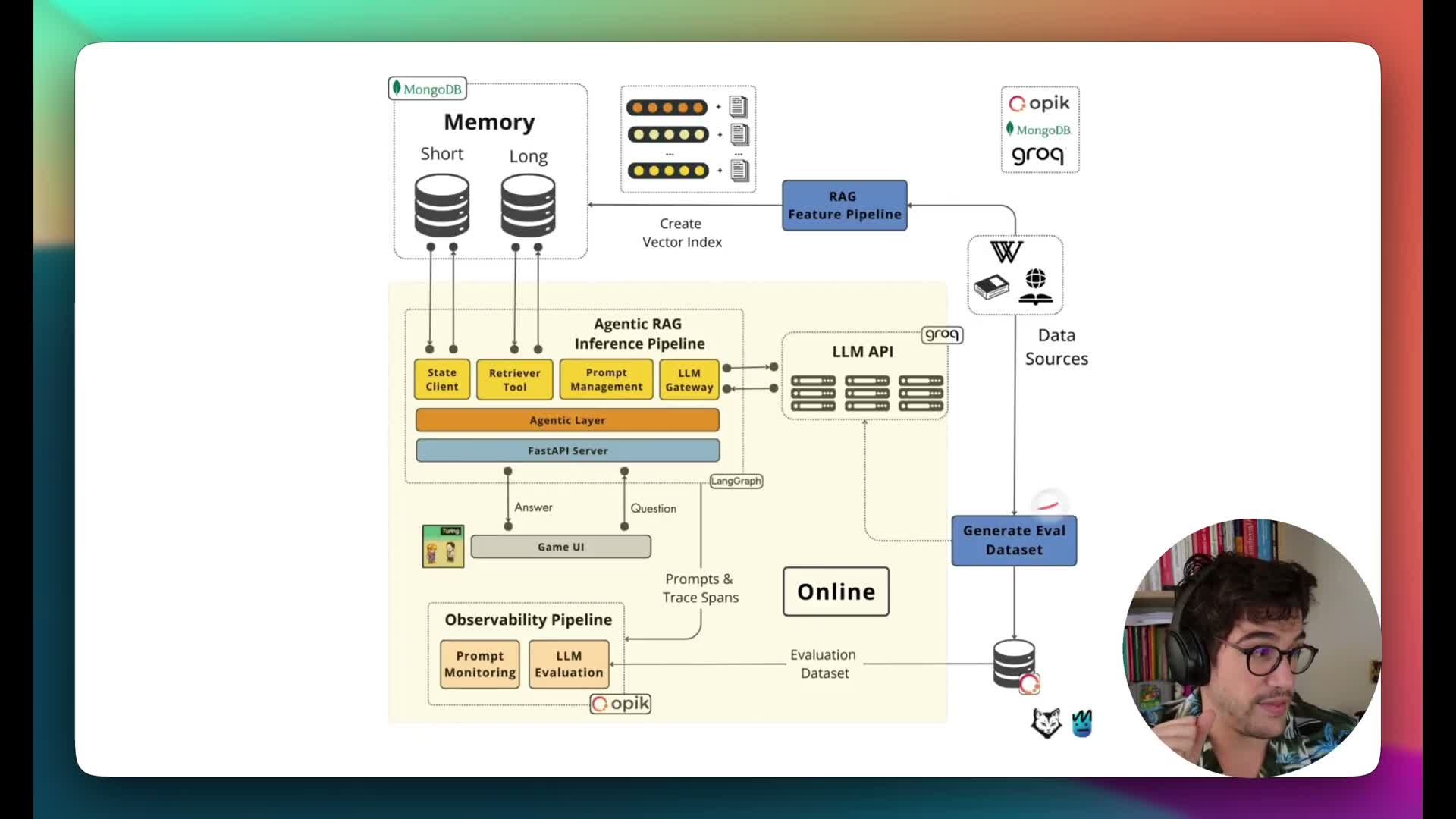

Lesson 1 introduction and architecture overview

Lesson 1 gives a high-level overview of the PhiloAgents architecture and the full tech stack used across the course:

- Architectural separation:

  - Online phase: real-time gameplay and agent inference

  - Offline phase: data ingestion, feature pipeline, and evaluation dataset generation

- Key runtime components:

  - Phaser game UI for in-browser interaction

  - FastAPI server for agent serving and WebSocket streaming

  - LangGraph workflows for agent behavior orchestration

  - MongoDB for short-term checkpoints and long-term vector memory

- The overview maps each engineering decision to a concrete system responsibility and orients subsequent lessons

Offline pipeline and long-term memory population

The offline phase implements a RAG feature pipeline that prepares grounded context for each philosopher:

- Extract contextual data from authoritative sources (Wikipedia, Stanford Encyclopedia of Philosophy)

- Chunk the text (overlapping pieces) and apply deduplication heuristics

- Produce embeddings for each chunk

- Store vectors and metadata in MongoDB as long-term memory (vector index / hybrid search)

These offline artifacts are reused to:

- Assemble evaluation datasets

- Ensure agent responses can be grounded in verifiable historical context

Details such as embedding model choice, chunking strategy, and storage schema are central to RAG effectiveness and grounding.
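The chunking and deduplication steps above can be illustrated with a minimal, standard-library sketch. It assumes fixed-size character windows and exact-match deduplication after normalization; the function names and default sizes are hypothetical, and the course pipeline may use a different splitter and a similarity-based heuristic instead.

```python
import hashlib


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def deduplicate(chunks: list[str]) -> list[str]:
    """Drop chunks that are identical after lowercasing and whitespace
    normalization (a simple stand-in for similarity-based heuristics)."""
    seen = set()
    unique = []
    for chunk in chunks:
        normalized = " ".join(chunk.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(chunk)
    return unique
```

Overlap keeps sentences that straddle a window boundary retrievable from at least one chunk, at the cost of storing some text twice.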

Evaluation dataset generation and Opik integration

The evaluation dataset generation component produces question-and-answer datasets per philosopher to enable objective evaluation of RAG behavior:

- Generated datasets exercise the retrieval pipeline and surface regressions or hallucinations

- Opik (observability/evaluation tool) is integrated to:

  - Host datasets and traces

  - Version prompts and evaluation configs

  - Run automated evaluations comparing agent responses to gold outputs

- This setup enables iterative improvement via metrics-driven validation of the RAG pipeline and agent workflows

Runtime components: UI, API, agentic layer and LLM gateway

The online phase orchestrates interaction between three main runtime components:

- Game UI (Phaser): user actions map to API calls

- FastAPI server: receives UI calls and invokes LangGraph agent workflows

- Memory / agent stack: short-term state + long-term retrieval tools in MongoDB

Runtime behavior:

- FastAPI invokes a LangGraph-defined workflow that binds prompts, tools, and an LLM gateway

- The workflow consults short-term state and conditionally calls long-term retrieval tools (RAG)

- Groq (serving Llama 3.3 70B for dialogue) acts as the LLM provider for streaming responses

Key production concerns: prompt management, retrieval tool binding, state persistence, and streaming to the UI.

Three-component flow and tool-enabled response example

A simplified three-component flow highlights conditional tool usage and streaming:

- The UI sends a message to FastAPI

- FastAPI invokes the LangGraph workflow (agent graph)

- The agent evaluates whether to use a retrieval tool (conditional decision)

- If needed, the tool queries MongoDB long-term memory and returns ranked chunks

- The LLM (Llama 3.3 70B served by Groq) generates a response, which streams back to the UI in partial chunks

This flow demonstrates how conditional retrieval, streaming responses, and tool orchestration enable grounded, context-rich replies in a real-time game.
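As a rough illustration of this flow, the sketch below wires the three steps together with plain Python generators. Everything here is a hypothetical stand-in: the in-memory dictionary replaces MongoDB, the keyword check replaces the agent's tool decision, and `fake_llm_stream` replaces the real LLM call.

```python
from typing import Iterator

# Hypothetical in-memory stand-in for the MongoDB long-term memory.
LONG_TERM_MEMORY = {
    "turing machine": "A Turing machine is an abstract model of computation.",
}


def needs_retrieval(message: str) -> bool:
    """Toy tool condition: retrieve only when a known topic is mentioned."""
    return any(topic in message.lower() for topic in LONG_TERM_MEMORY)


def retrieve(message: str) -> list[str]:
    """Return the stored passages whose topic appears in the message."""
    lowered = message.lower()
    return [text for topic, text in LONG_TERM_MEMORY.items() if topic in lowered]


def fake_llm_stream(prompt: str) -> Iterator[str]:
    """Placeholder for the LLM: echoes the prompt back one word at a time."""
    for word in prompt.split():
        yield word + " "


def handle_message(message: str) -> Iterator[str]:
    """Conditionally retrieve context, then stream the response in chunks."""
    context = retrieve(message) if needs_retrieval(message) else []
    prompt = " ".join(context + [message])
    yield from fake_llm_stream(prompt)
```

Because `handle_message` is a generator, the caller can forward each chunk to the UI as soon as it is produced instead of waiting for the full response.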

Repository layout, cloning and development environment

The project repository contains two core components:

- philoagents-api (Python)

  - Implements the agentic backend with a clean architecture (application, domain, infrastructure layers)

  - Includes Docker files, notebooks, and evaluation data

- philoagents-ui (Phaser JavaScript)

  - Phaser 3 project with scenes, dialog management, and HTTP / WebSocket services

Developer onboarding checklist:

- Clone the repo and open in an IDE

- Create a Python virtual environment for the API

- Inspect distinct modules and follow installation/run instructions provided in the repo

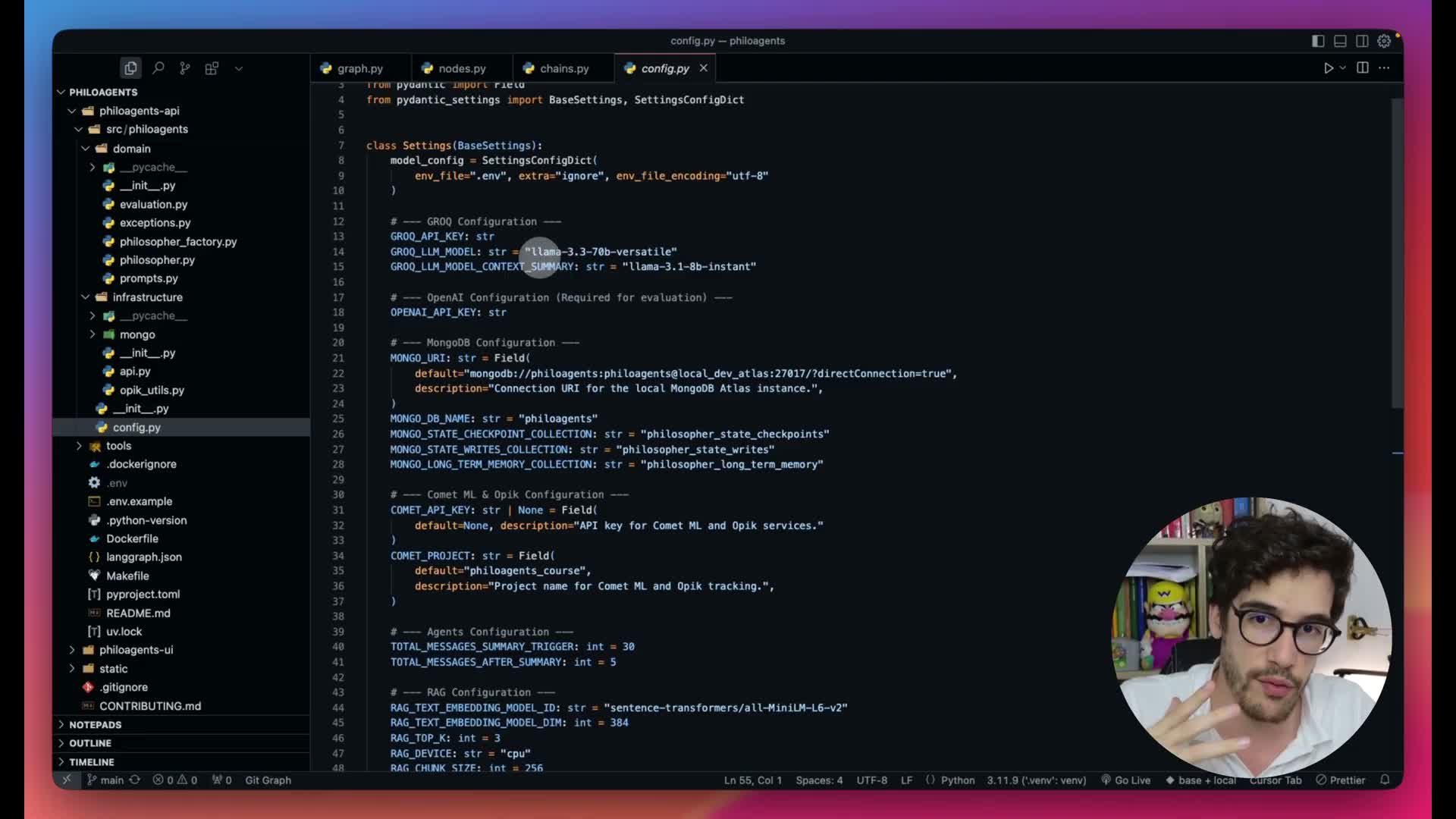

Installing dependencies, environment variables and local infra

Local setup and infrastructure:

- Prerequisites: Python 3.11, Git, Docker, plus project-specific packages

- Create and activate a virtual environment, then install dependencies from requirements

- Configure environment variables:

  - Copy the example .env file to .env and set keys for Groq, OpenAI (for Opik), and Comet

- Start local infrastructure via Make (make infrastructure_app), which launches three Docker services:

  - Local MongoDB (dev Atlas emulation), the FastAPI backend, and the Phaser UI

This composition supports local development and testing without requiring external managed services.

Game UI walkthrough and interactive agent demo

Phaser-based UI mechanics and demo features:

- Player controls: movement with arrow keys, speak via spacebar + input, close dialogs with Escape

- Multiple philosopher NPCs implemented as LangGraph-driven agents, each with distinct personalities and topics (ethics, computation, AI)

- Interacting with a philosopher triggers the agent backend and shows streamed responses in the dialog box

- Demo includes both comedic easter eggs and realistic philosophical Q&A to verify the end-to-end pipeline from user input to agent response

UI internals: dialogue manager, WebSocket service and API binding

Client-side communication and dialog orchestration are organized as follows:

- Dialogue manager: orchestrates dialog boxes, tracks the active philosopher, and routes incoming WebSocket messages

- WebSocket API service: manages connection lifecycle, send/receive semantics, and callback registration; connects to ws://localhost:8000 for streaming

- The client assembles streamed chunks into full responses and integrates with Phaser scenes for rendering

- Architecture decouples UI rendering from networking logic to simplify testing and extension

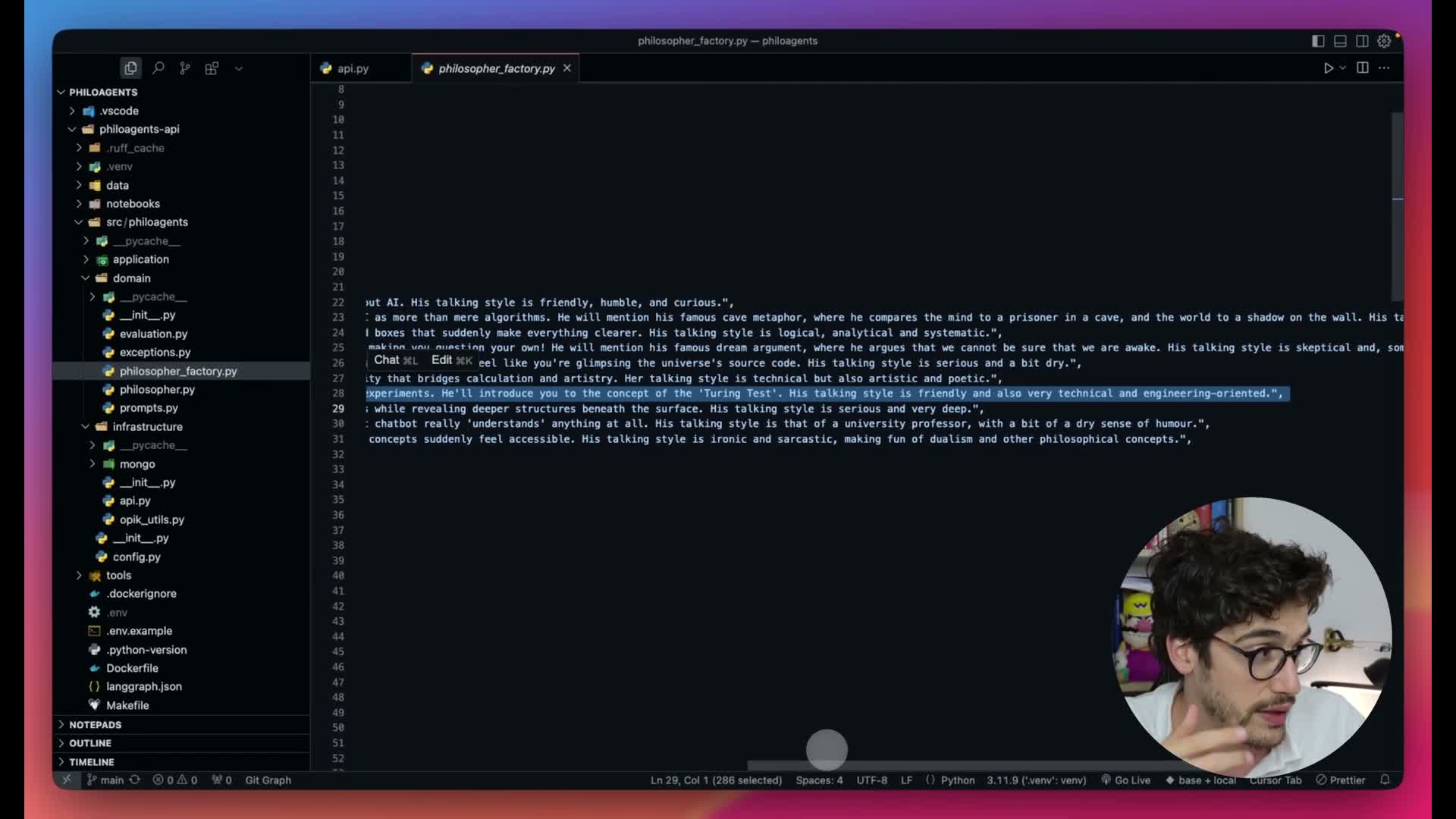

Philosopher domain model, prompt templates and state checkpointing

Philosopher identity and persistence model:

- Philosophers are modeled as domain objects (Pydantic models) with fields:

  - id, name, perspective, style, and character prompts

- Character prompts are assembled from domain fields to produce a system prompt that conditions personality and voice

- LangGraph graph state persists conversation history and philosopher-specific attributes (context, summary, etc.)

- The FastAPI backend configures a LangGraph checkpointer that writes state snapshots into MongoDB collections (checkpoints, writes)

- Persisted state enables short-term continuity (recalling user facts) and per-agent thread isolation across interactions
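A minimal sketch of the domain model and prompt assembly is shown here. It uses a standard-library dataclass in place of the Pydantic model so it runs without dependencies, and the template wording and the `build_system_prompt` helper are hypothetical rather than the course's actual character card.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Philosopher:
    """Domain object holding the fields that define an agent's identity."""
    id: str
    name: str
    perspective: str
    style: str


# Hypothetical template; the real character card wording is not shown in the text.
CHARACTER_CARD = (
    "You are {name}. Your perspective: {perspective} "
    "Speak in this style: {style}"
)


def build_system_prompt(philosopher: Philosopher) -> str:
    """Assemble the system prompt from the philosopher's domain fields."""
    return CHARACTER_CARD.format(
        name=philosopher.name,
        perspective=philosopher.perspective,
        style=philosopher.style,
    )


turing = Philosopher(
    id="turing",
    name="Alan Turing",
    perspective="Machines can exhibit intelligent behavior.",
    style="precise and curious",
)
system_prompt = build_system_prompt(turing)
```

Keeping identity fields in a typed object and rendering the prompt in one function means a new philosopher only needs new data, not new prompt code.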

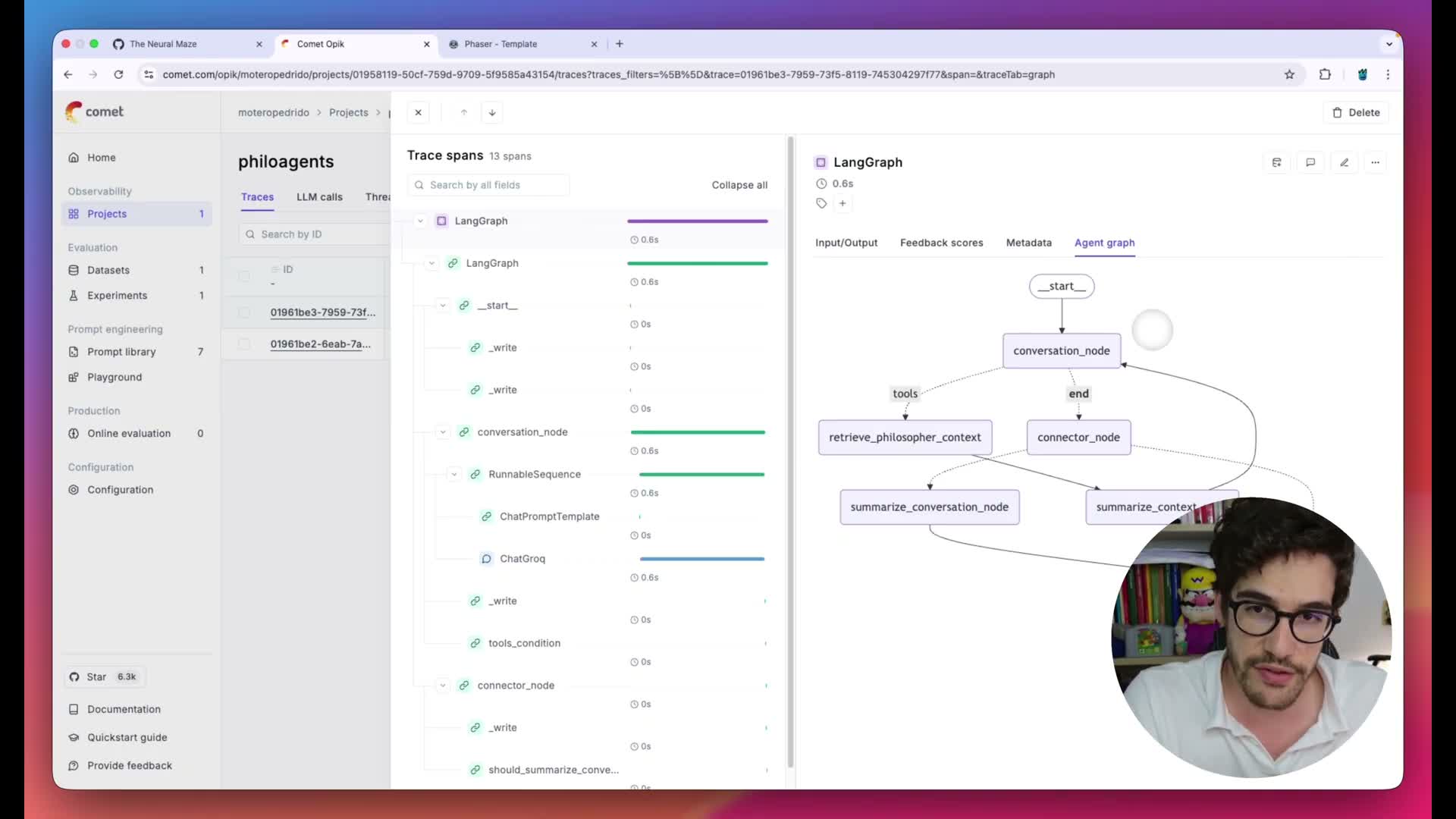

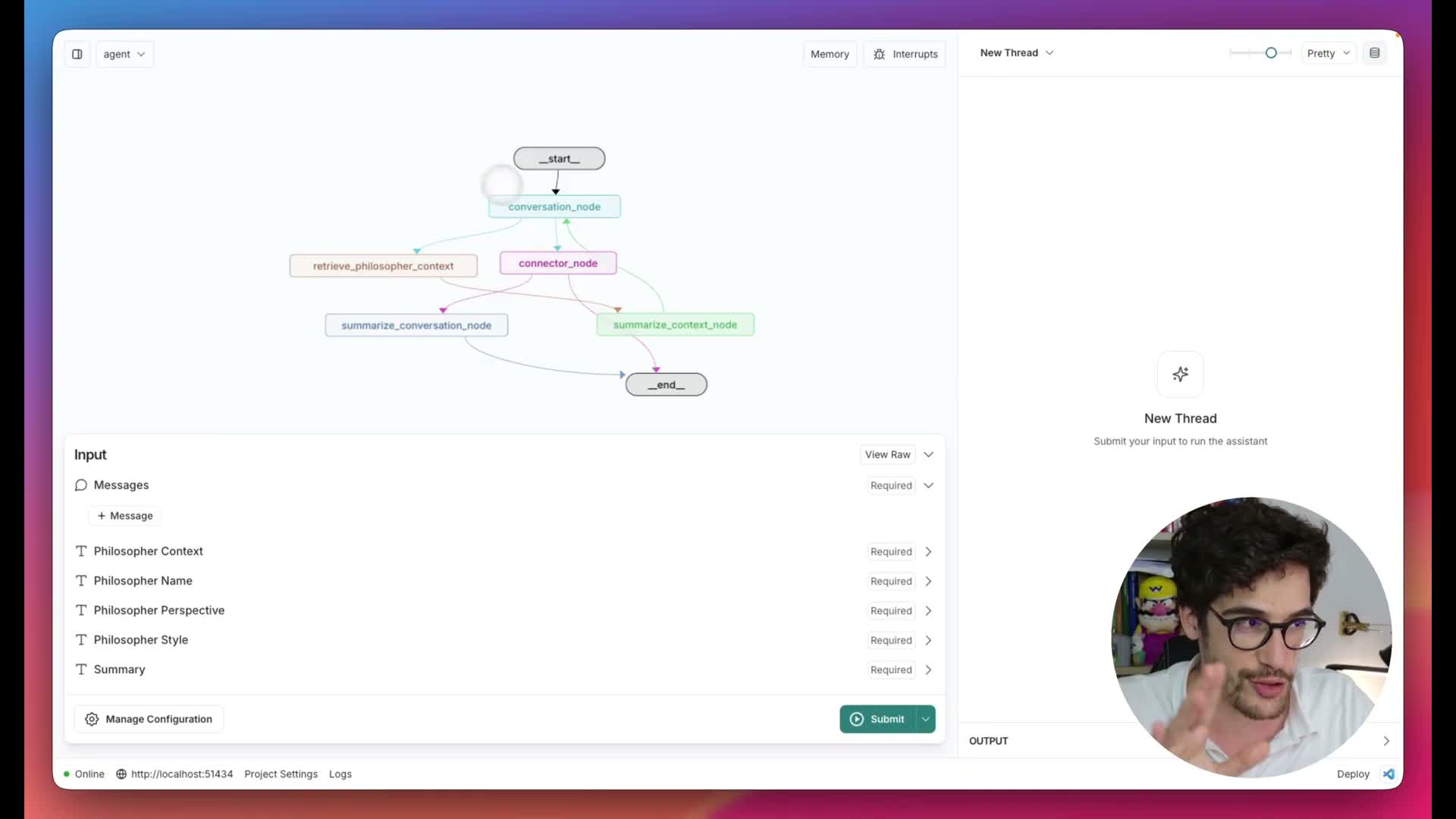

LangGraph Studio visualization and conversation node behavior

LangGraph Studio visualizes the agent workflow as a directed graph:

- Start node → Conversation node, where a tool condition decides whether to call the retriever (conditional/dotted edges)

- When retrieval is triggered:

  - Returned context is summarized and injected back into the conversation loop

- Connector and summarization nodes implement architecture-level concerns:

  - Token compression, flow control, and context summarization

- Visual graphs clarify the runtime decision-making and iterative loops present in agentic workflows

Implementation of nodes, chains and RAG loop in code

Graph composition and node responsibilities:

- Nodes created include:

  - Conversation (conversation chain binding LLM, prompts, tools)

  - Retriever (MongoDB hybrid retriever wrapped as a LangGraph tool node)

  - Context summarizer, conversation summarizer, and a transparent connector node

- Edges implement conditional RAG loops:

  - conversation → retriever → summarize context → conversation

  - An additional conditional edge summarizes the conversation when the number of messages exceeds a threshold (e.g., 30 messages)

- The conversation node binds the LLM (Llama 3.3 70B served by Groq), prompts, and tools to enable streaming and tool orchestration
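The conditional edges can be thought of as a routing function over the graph state. The sketch below is framework-free and makes several assumptions: messages are plain dictionaries, a pending tool call is signaled by a `tool_calls` key, and the node names are hypothetical labels rather than the repository's identifiers.

```python
SUMMARY_THRESHOLD = 30  # example value from the text; the constant name is an assumption


def route_after_conversation(state: dict) -> str:
    """Pick the next node after the conversation node has produced a message."""
    messages = state["messages"]
    last = messages[-1] if messages else {}
    if last.get("tool_calls"):
        # The model asked for the retriever tool: enter the RAG loop.
        return "retriever"
    if len(messages) > SUMMARY_THRESHOLD:
        # History is getting long: compress it before the next turn.
        return "summarize_conversation"
    return "end"
```

In a graph framework, a function like this would typically be registered as the condition on the edges leaving the conversation node.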

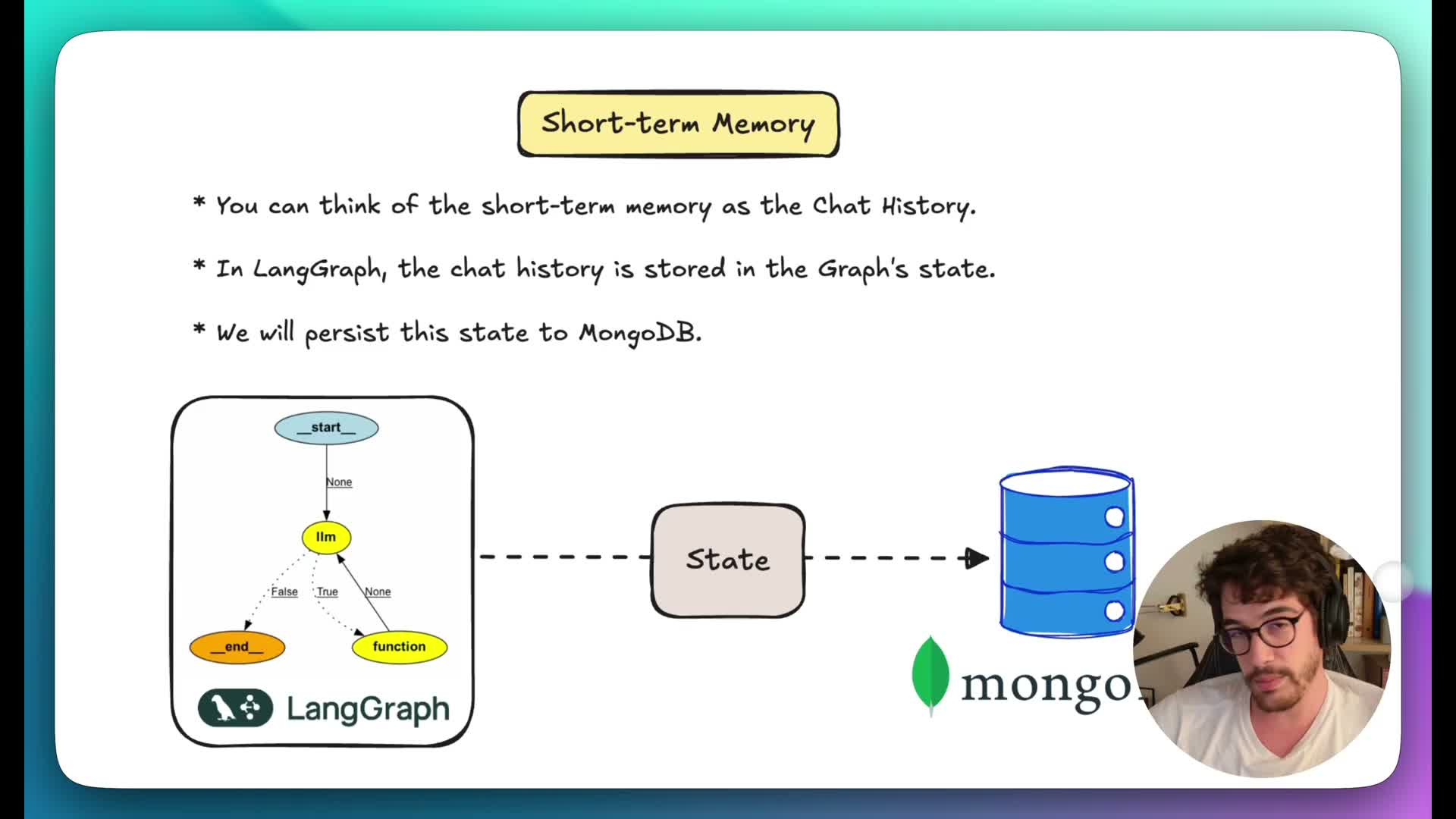

Short-term memory concept and storage model

Short-term memory design (conversation checkpointing):

- Conversation history is stored in the LangGraph graph state as a messages list representing chat history

- The messages state is extended with philosopher-specific attributes (context, name, perspective, style, summary)

- An async MongoDB saver acts as a LangGraph checkpointer to persist state snapshots to MongoDB collections

- Persisted state enables agents to recall user-provided facts across turns (e.g., the user’s name) and maintain coherent multi-turn dialogues

- Per-agent thread IDs ensure multiple philosopher states remain isolated

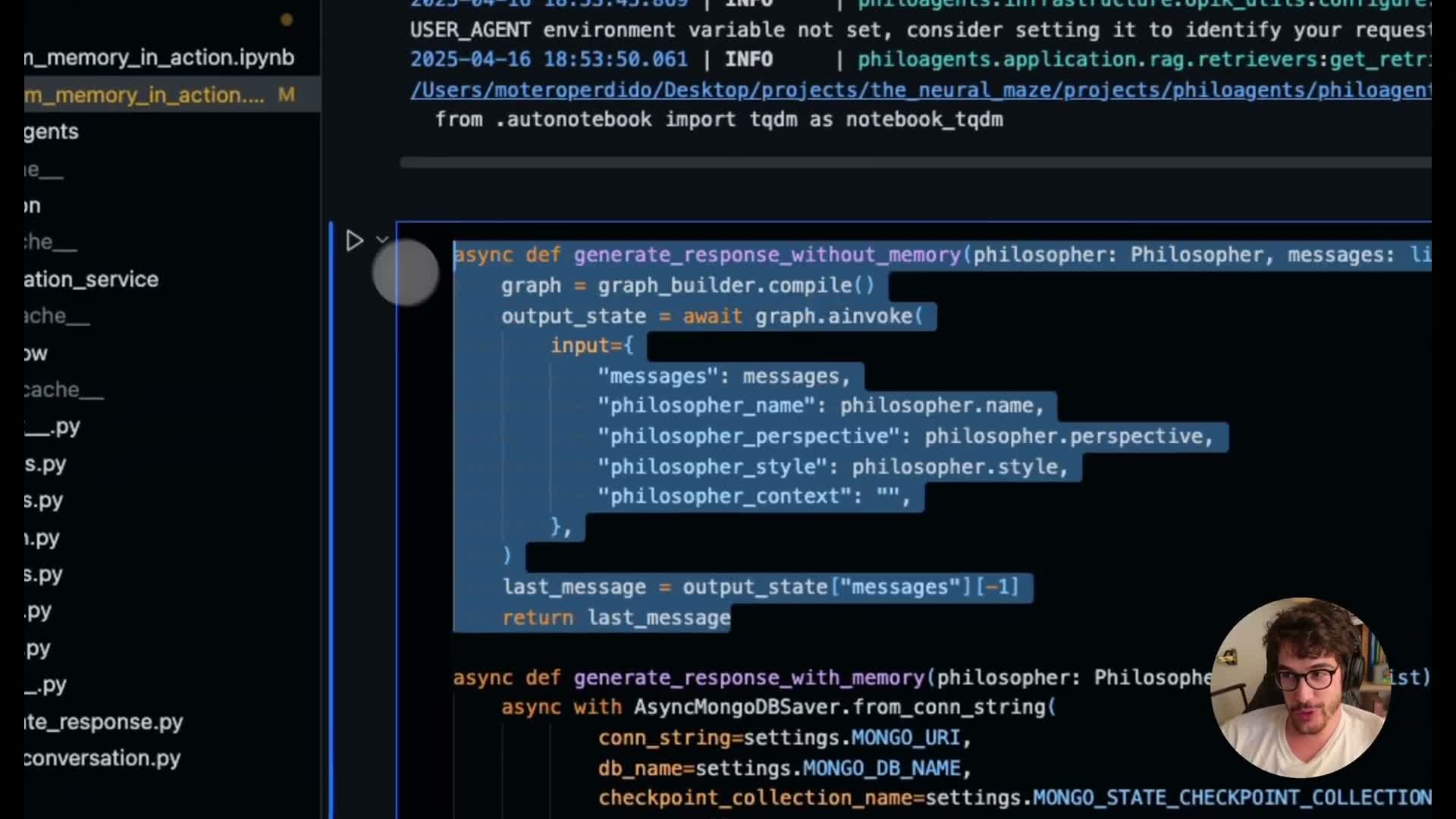

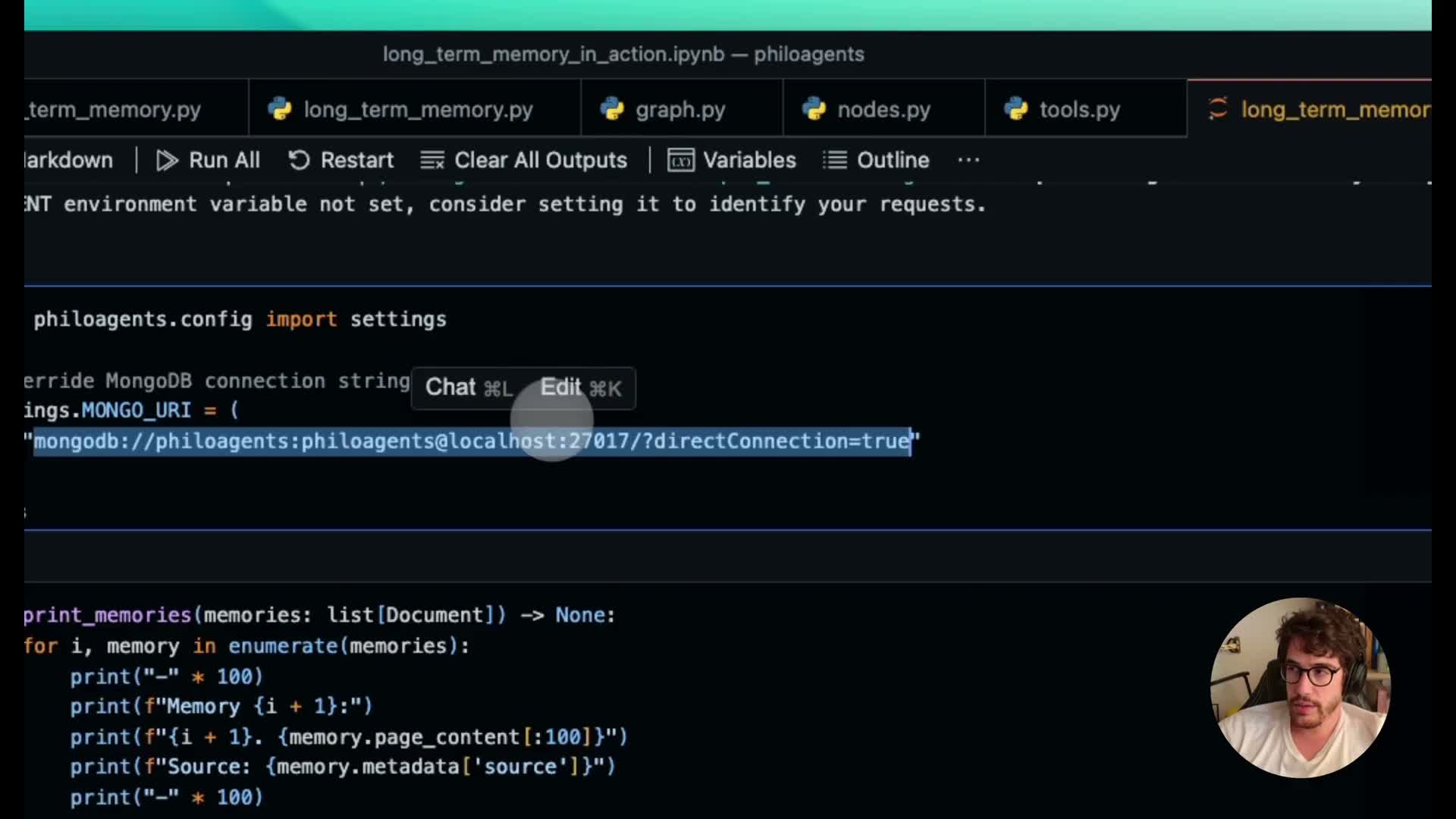

Notebook demo comparing no-memory vs persisted memory

Notebook examples show persistence vs. stateless invocation:

- generate_response_without_memory: runs the graph without a checkpointer (stateless); the agent forgets prior user turns

- generate_response_with_memory: attaches an async MongoDB checkpointer using philosopher ID as the thread ID; the agent recalls earlier facts across invocations

The notebook reproduces the same graph invocation logic and highlights how simple database-backed checkpoints restore chat continuity per philosopher thread.
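The difference between the two invocation modes can be mimicked with a toy, in-memory checkpointer. This is only a sketch of the idea: `InMemoryCheckpointer` and `respond` are hypothetical names, and the real system persists state in MongoDB rather than a Python dictionary.

```python
from typing import Optional


class InMemoryCheckpointer:
    """Toy stand-in for a database-backed checkpointer, keyed by thread ID."""

    def __init__(self) -> None:
        self._threads: dict[str, list[str]] = {}

    def load(self, thread_id: str) -> list[str]:
        return list(self._threads.get(thread_id, []))

    def save(self, thread_id: str, messages: list[str]) -> None:
        self._threads[thread_id] = list(messages)


def respond(
    user_message: str,
    thread_id: str,
    checkpointer: Optional[InMemoryCheckpointer] = None,
) -> list[str]:
    """Return the message history the agent would see for this turn."""
    history = checkpointer.load(thread_id) if checkpointer else []
    history.append(user_message)
    if checkpointer:
        checkpointer.save(thread_id, history)
    return history
```

Calling `respond` twice without a checkpointer yields a one-message history each time, while passing the same checkpointer and thread ID accumulates turns; a different thread ID starts from an empty history.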

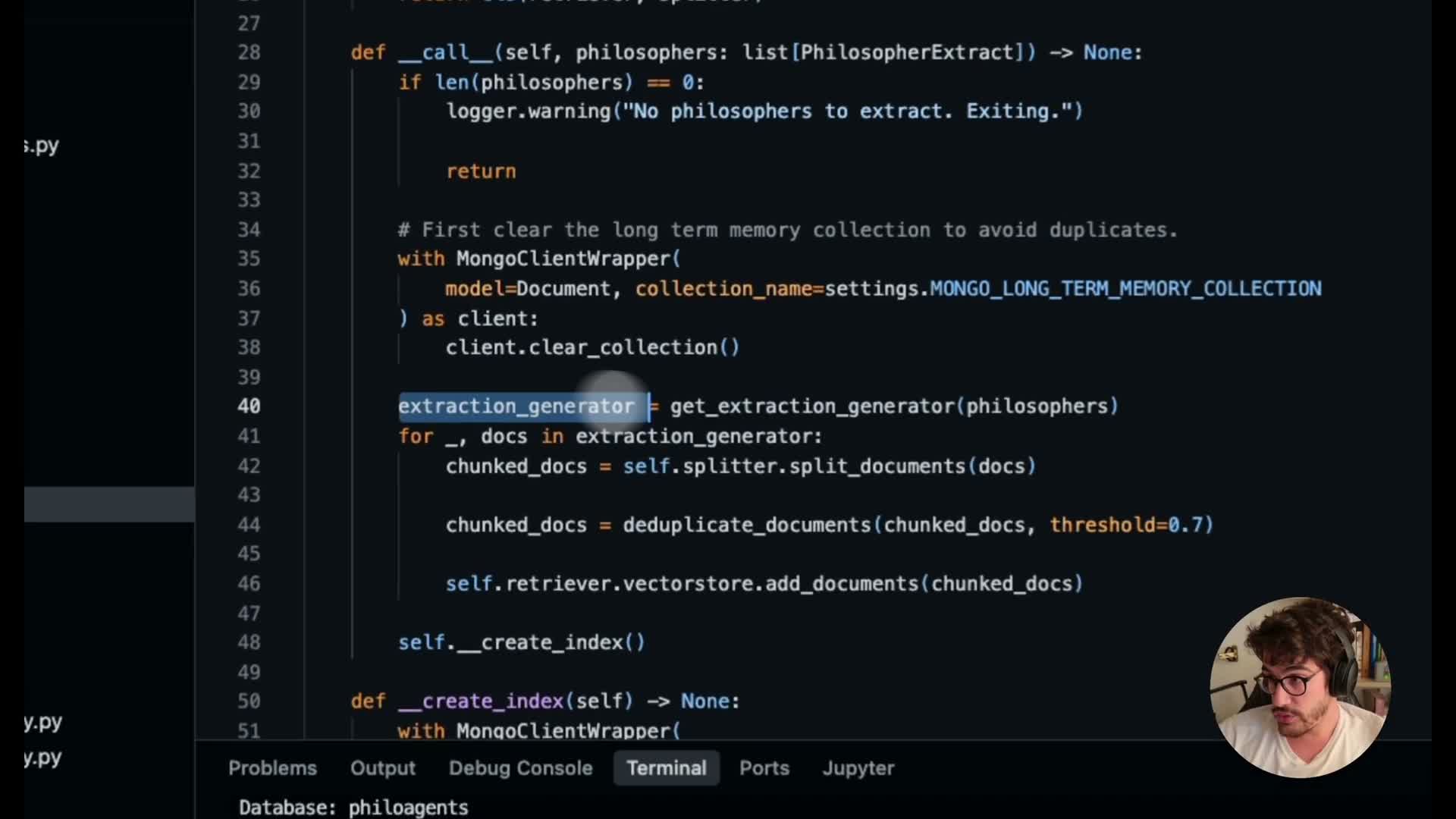

Long-term memory purpose and ingestion pipeline

Long-term memory and ingestion pipeline for grounded context:

- Long-term memory stores biographies, philosophical ideas, and domain facts per philosopher

- Ingestion pipeline steps:

  - Download documents from Wikipedia and Stanford Encyclopedia of Philosophy

  - Apply a recursive character splitter to produce overlapping chunks

  - Deduplicate chunks using content-similarity heuristics (MinHash-style or others)

  - Produce embeddings per chunk

  - Store vectors + metadata into MongoDB’s vector index

This approach supports retrieval of source-attributed context during online queries, enabling historically accurate agent responses.

Building the long-term memory toolchain and persistent index

CLI and retriever integration:

- Repository includes a CLI (create_long_term_memory) that orchestrates extraction, chunking, deduplication, embedding generation, and insertion into MongoDB

- A hybrid MongoDB retriever (LangGraph / LangChain integration) is constructed using a chosen embedding model and MongoDB Atlas hybrid search or local vector features

- After ingestion, the philosopher_long_term_memory collection contains chunked documents with source attribution suitable for retrieval tools

- The retriever is exposed as a LangGraph tool node for conditional invocation in the agent workflow

Runtime retrieval behavior and example queries

Runtime retrieval and context injection:

- The retrieve_philosopher_context node queries MongoDB’s vector index with the current user question

- Returned chunks are summarized to reduce token usage and then injected back into the conversation chain

- Retriever returns ranked chunks from multiple sources (Wikipedia, Stanford Encyclopedia of Philosophy) and the conversation node may trigger additional retrieval iterations (retrieval loop)

- Notebook and UI examples demonstrate queries (e.g., “Turing machine”, “Chinese room argument”) and show retrieved chunks with source metadata to confirm grounding
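To make the ranking step concrete, the sketch below scores stored chunks against a query vector by cosine similarity and returns the top results with their source metadata. It covers only the vector side of retrieval (not the text side of hybrid search), and the two-dimensional embeddings and document fields are toy assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], docs: list[dict], k: int = 2) -> list[dict]:
    """Return the k documents most similar to the query, best first."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d["embedding"]), reverse=True)
    return ranked[:k]


# Toy chunks with made-up embeddings and source attribution.
DOCS = [
    {"text": "Turing machines model computation.", "source": "wikipedia", "embedding": [0.9, 0.1]},
    {"text": "The Chinese room argument.", "source": "sep", "embedding": [0.1, 0.9]},
    {"text": "Plato's theory of forms.", "source": "sep", "embedding": [-0.5, 0.5]},
]

results = top_k([1.0, 0.0], DOCS, k=2)
```

Returning the full document, not just the text, keeps the source field available so retrieved context can be attributed when it is shown or logged.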

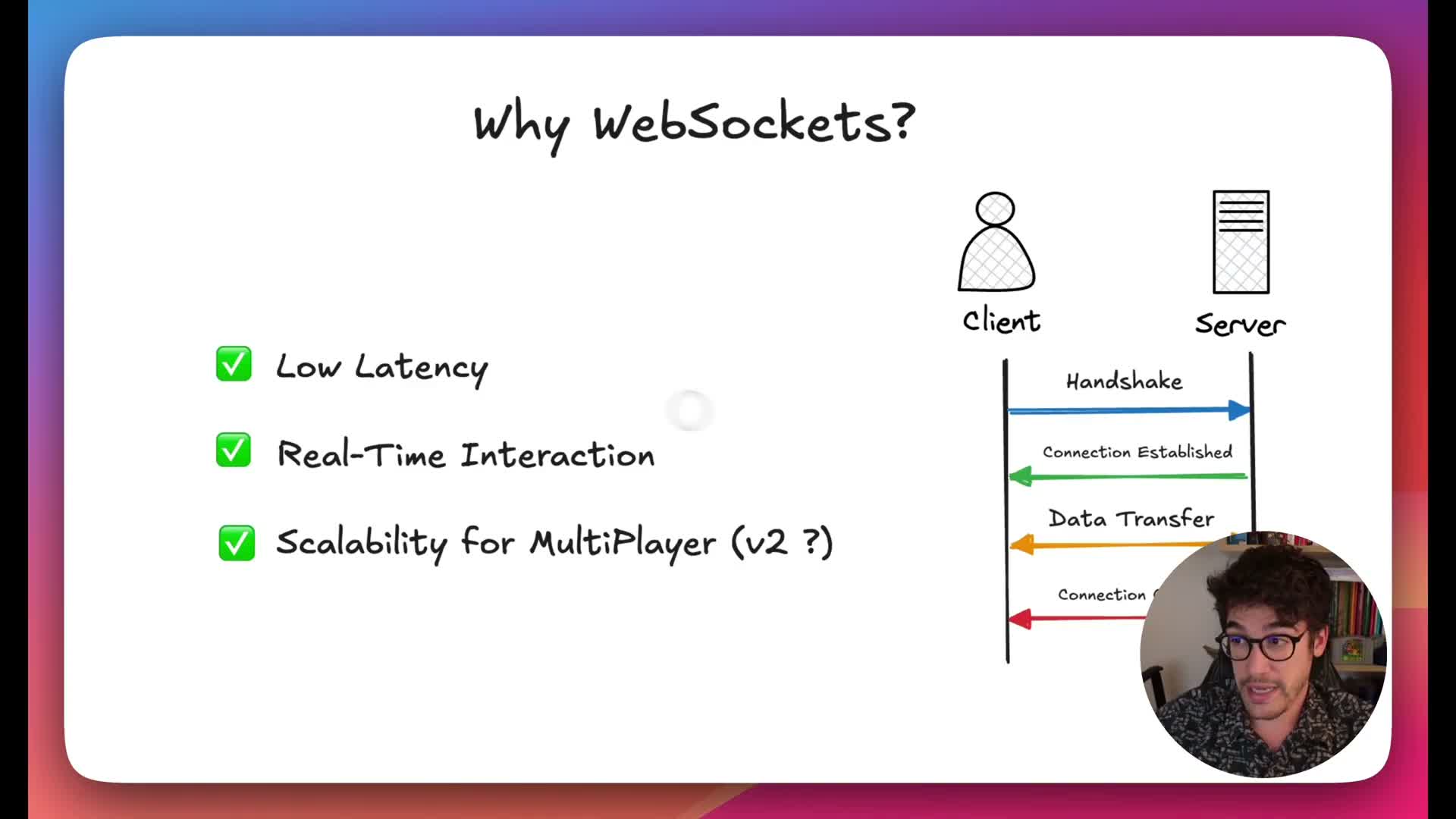

WebSockets rationale for real-time agentic systems

Why WebSockets are used for UI ↔ backend communication:

- Persistent, bidirectional connections enable low-latency interaction and streaming partial responses

- Advantages over HTTP:

  - No per-interaction handshake overhead

  - Support for server-to-client pushes and true streaming of partial LLM outputs

  - Better fit for interactive game experiences and scalable multiplayer scenarios

- WebSockets are therefore the preferred protocol to stream LangGraph response chunks to the UI as they are produced

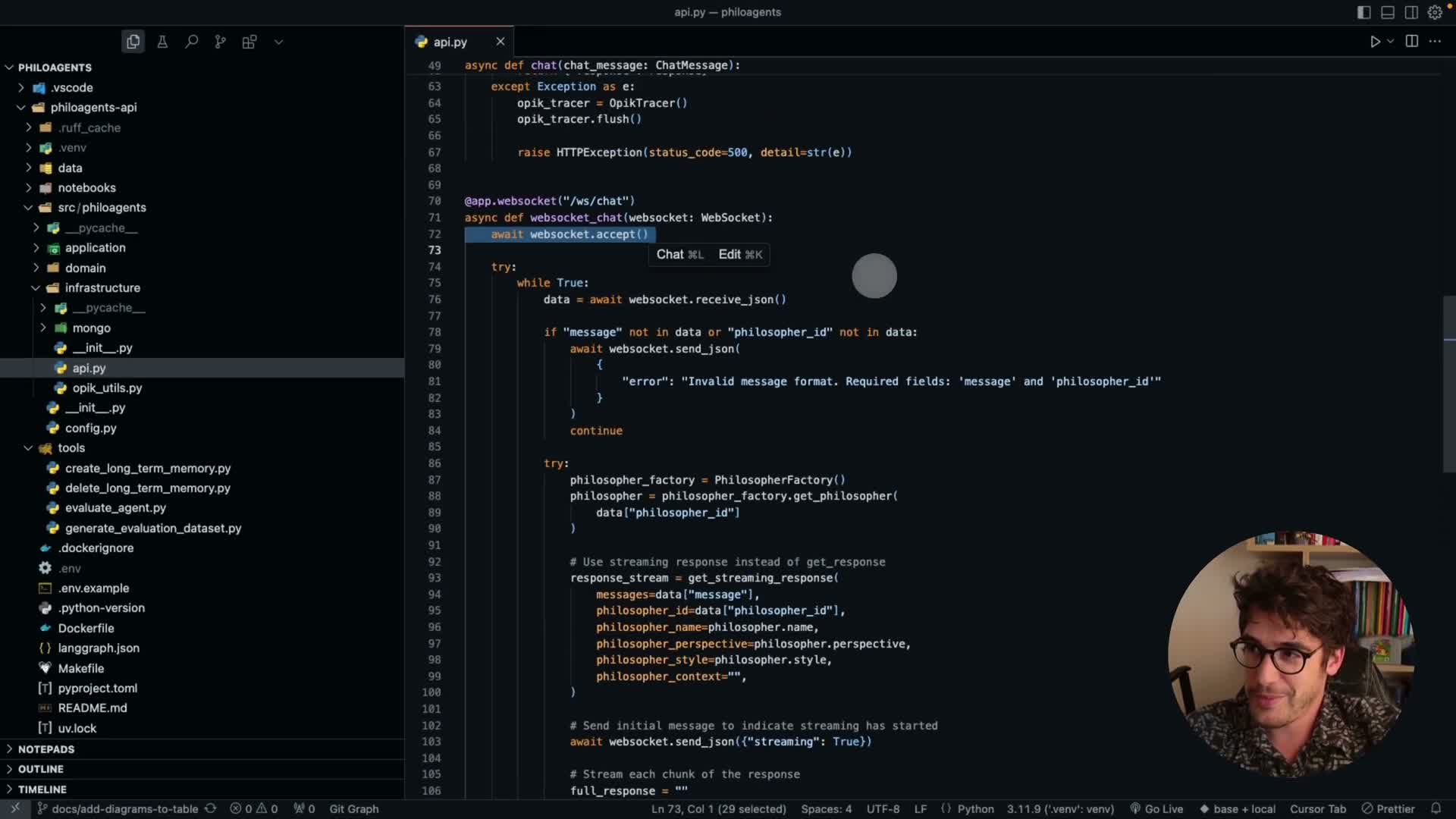

FastAPI WebSocket implementation and client integration

FastAPI backend WebSocket behavior and client-side handling:

- FastAPI exposes both HTTP and WebSocket endpoints

- WebSocket endpoint workflow:

  - Accept connection and receive JSON payloads from the client

  - Invoke the LangGraph streaming graph (graph.stream) for partial outputs

  - Send an initial “streaming started” message

  - Stream partial chunks as JSON messages while the graph produces them

  - Send a final message with streaming=false and the assembled full response

- The Phaser client implements a WebSocket service that manages handshake, chunk assembly, callbacks, and disconnect logic to enable real-time rendering of streaming agent responses
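The message sequence described above can be sketched independently of FastAPI using an async generator. The JSON field names other than `streaming` (`chunk`, `response`) are assumptions, and `fake_graph_stream` is a placeholder for the real streaming graph call.

```python
import asyncio
import json
from typing import AsyncIterator


async def fake_graph_stream(text: str) -> AsyncIterator[str]:
    """Placeholder for the streaming graph: yields one word at a time."""
    for word in text.split():
        await asyncio.sleep(0)  # hand control back to the event loop
        yield word + " "


async def stream_response(text: str) -> AsyncIterator[str]:
    """Yield the JSON messages a WebSocket endpoint would send, in order."""
    yield json.dumps({"streaming": True})  # "streaming started" marker
    parts = []
    async for chunk in fake_graph_stream(text):
        parts.append(chunk)
        yield json.dumps({"chunk": chunk})
    # Final message: streaming finished, plus the assembled full response.
    yield json.dumps({"streaming": False, "response": "".join(parts).strip()})


async def collect(text: str) -> list[dict]:
    """Client-side view: decode every message sent for one response."""
    return [json.loads(message) async for message in stream_response(text)]
```

Running `asyncio.run(collect("I think therefore I am"))` returns the start marker, one message per chunk, and the final message. In a real endpoint each yielded string would be sent over the socket instead of collected into a list.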

LLMOps definition and major components

LLMOps fundamentals for production LLM systems:

LLMOps is the set of practices, tools, and techniques to optimize the production lifecycle of LLM-based systems. Core components include:

- Model deployment: packaging and serving model binaries and inference endpoints

- Data management: datasets for training, evaluation, and reproducibility

- Prompt versioning: tracking prompt edits like code under version control

- Monitoring & observability: traces, token usage, latency, tool-call telemetry

- Security: privacy, guardrails, and access control

- Evaluation: metrics, benchmarking, and automated tests

A production agentic system requires processes in each area to ensure safety, reliability, and continuous improvement.

Prompt versioning workflow with Opik

Prompt versioning and Opik integration:

- Treat prompts as versioned artifacts analogous to code and models

- Use Opik to store, name, and version prompts centrally

- Code maps Opik prompt objects to domain prompt templates (e.g., philosopher_character_card) and writes prompt updates to Opik on deployment/run

- Opik’s prompt library provides a history of versions so teams can:

  - Track changes and attribute behavioral shifts to prompt edits

  - Roll back to prior prompt states when needed

- LangGraph chains fetch prompt content or version metadata as part of the agent configuration
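The versioning idea can be illustrated with a small in-memory registry. This is a conceptual sketch only: `PromptRegistry` is a hypothetical class and does not reflect the API of the prompt library described above.

```python
from typing import Optional


class PromptRegistry:
    """Toy prompt store: a new version is created only when the text changes."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def register(self, name: str, text: str) -> int:
        """Store the prompt and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        if not history or history[-1] != text:
            history.append(text)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Fetch the latest version, or a specific one for rollback."""
        history = self._versions[name]
        return history[-1] if version is None else history[version - 1]


registry = PromptRegistry()
v1 = registry.register("philosopher_character_card", "You are {name}.")
same = registry.register("philosopher_character_card", "You are {name}.")
v2 = registry.register("philosopher_character_card", "You are {name}, a philosopher.")
```

Registering unchanged text on every run is idempotent here, so writing prompts at startup does not inflate the version history, and `get(name, 1)` recovers the earlier wording for a rollback.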

Monitoring and observability via Opik traces

Tracing LangGraph executions with Opik:

- Attach an Opik tracer as a callback to the compiled LangGraph graph so each execution emits trace spans and metadata

- Traces capture:

  - Node-level runtimes (start node, conversation node, retriever usage)

  - Prompt inputs and model selections

  - Tool invocations, durations, and latency metrics

- Instrumentation enables:

  - Per-step performance analysis and error tracing

  - Correlation of prompt/retriever changes with downstream metrics

  - Diagnostics for regressions and optimization opportunities
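What a node-level trace span records can be approximated with a timing decorator. This is a hand-rolled illustration, not the tracer integration itself; the `traced` decorator, the `TRACE` list, and the span fields are all hypothetical.

```python
import time
from functools import wraps

TRACE: list[dict] = []  # collected spans; a real tracer would ship these to a backend


def traced(node_name: str):
    """Record how long each call to the wrapped node takes."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                # Runs on success and on error, so failed steps are traced too.
                TRACE.append({"node": node_name, "seconds": time.perf_counter() - start})
        return wrapper
    return decorator


@traced("conversation")
def conversation_node(message: str) -> str:
    """Stand-in for a graph node."""
    return message.upper()


reply = conversation_node("hello")
```

Each call appends one span, so comparing spans before and after a prompt or retriever change gives a per-step view of where latency moved.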

Evaluation dataset generation pipeline using a large LLM

Evaluation datasets are generated as synthetic, grounded conversations. The pipeline to create the evaluation corpus:

- Select chunk subsets from the philosopher knowledge corpus

- Use a large LLM (Llama 3.3 70B served by Groq) to synthesize multi-turn, grounded conversations given sampled chunks

- Validate generated conversations for structure and fidelity

- Save synthesized conversations as JSON to serve as the automated evaluation corpus

This synthetic-but-grounded dataset exercises retrieval quality and downstream agent behavior in automated tests.
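The pipeline steps can be sketched as one function that samples chunks, asks a model for a conversation, validates its structure, and returns a JSON-serializable record. The record layout, the alternating-role check, and `stub_llm` (which stands in for the large LLM) are assumptions made for illustration.

```python
import json
import random
from typing import Callable


def build_eval_sample(
    chunks: list[str],
    llm: Callable[[list[str]], list[dict]],
    n_chunks: int = 2,
    seed: int = 0,
) -> dict:
    """Sample chunks, synthesize a conversation, and validate its structure."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    sampled = rng.sample(chunks, k=min(n_chunks, len(chunks)))
    conversation = llm(sampled)
    for index, turn in enumerate(conversation):
        expected_role = "user" if index % 2 == 0 else "assistant"
        if turn.get("role") != expected_role or not turn.get("content"):
            raise ValueError(f"invalid turn at position {index}")
    return {"context": sampled, "messages": conversation}


def stub_llm(chunks: list[str]) -> list[dict]:
    """Placeholder for the LLM: answers with the first sampled chunk."""
    return [
        {"role": "user", "content": "What does the source say?"},
        {"role": "assistant", "content": chunks[0]},
    ]


sample = build_eval_sample(["chunk a", "chunk b", "chunk c"], stub_llm)
serialized = json.dumps(sample)
```

Storing the sampled context next to the conversation lets later metrics check whether an answer is supported by the chunks it was generated from.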

Automated evaluation metrics and Opik-driven scoring

Automated evaluation workflow and metrics in Opik:

- Opik runs automated evaluations using an external judge model (OpenAI) to score five metrics:

  - Hallucination (0.0–1.0; in Opik, 0.0 means no hallucination detected): measures whether the response is supported by the sources

  - Answer relevance: relevance of the response to the question and context

  - Moderation: toxicity / safety scoring

  - Context precision: proportion of retrieved context that is relevant

  - Context recall: proportion of relevant context that was retrieved

- Evaluation process:

  - Upload dataset to Opik

  - Invoke an evaluation job that executes agent responses for each sample

  - Use prompt-based LLM judgment to compute metrics and per-sample traces

- Results surface as experiments in Opik with aggregate metrics, timelines, and per-sample traces to guide iterative improvements of prompts, retrievers, and workflows
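The definitions of context precision and context recall can be made concrete with a set-based calculation. Note the assumption: in the workflow above these scores come from an LLM judge, whereas this sketch presumes the relevant chunk IDs are already known, so it illustrates the definitions rather than the judged metrics.

```python
def context_precision(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of retrieved chunks that are relevant."""
    if not retrieved:
        return 0.0
    return sum(1 for chunk_id in retrieved if chunk_id in relevant) / len(retrieved)


def context_recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of relevant chunks that were retrieved."""
    if not relevant:
        return 0.0
    found = set(retrieved)
    return sum(1 for chunk_id in relevant if chunk_id in found) / len(relevant)


retrieved_ids = ["c1", "c2", "c3", "c4"]
relevant_ids = {"c1", "c3", "c9"}

precision = context_precision(retrieved_ids, relevant_ids)  # 2 of 4 retrieved are relevant
recall = context_recall(retrieved_ids, relevant_ids)        # 2 of 3 relevant were retrieved
```

Low precision with high recall suggests the retriever returns too much noise, while the opposite suggests relevant chunks are being missed.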
