LLM Series - Part 5 - System Design for LLMs

In this blog post, I will discuss the system design for applications that use LLMs as a core component. However, the goal is to prepare for a technical interview rather than to build a real product :D.

Fundamental Concepts

Retrieval-augmented generation (RAG)

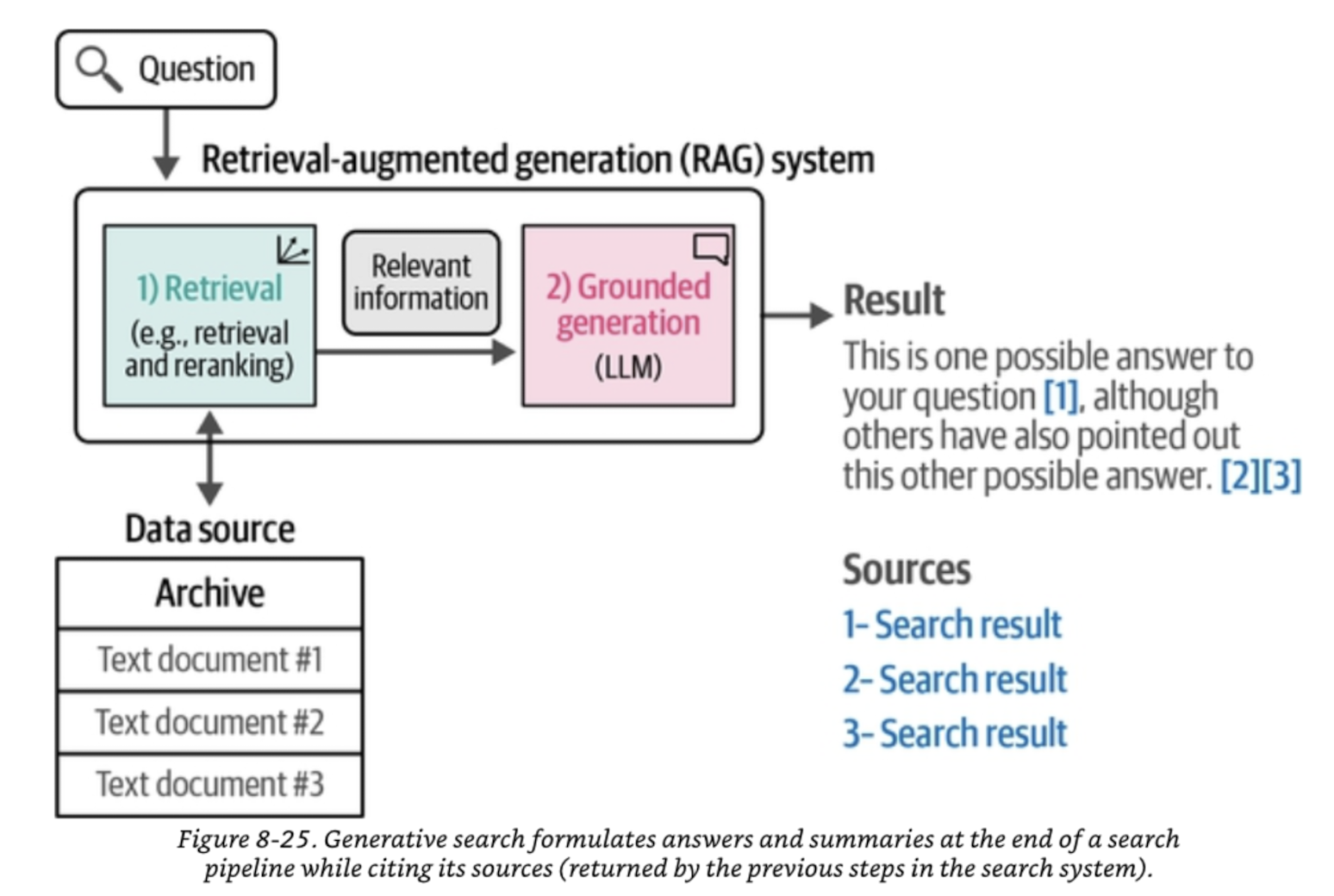

RAG is a technique that allows LLMs to access external knowledge sources to generate more accurate and relevant responses. It is a two-step process:

- Retrieve relevant documents from a knowledge base.

- Generate a response using the retrieved documents as additional context with grounded generation.

In some LLM APIs (such as Cohere’s), the chat endpoint accepts a documents argument for passing in the documents that are relevant to the conversation.

query = "What is the capital of France?"

results = search_api.search(query)

documents = [doc.page_content for doc in results]

response = llm.chat(message=query, documents=documents)

For local LLMs, we can use LangChain to inject relevant documents into the LLM’s context.

from langchain import PromptTemplate

# Create a prompt template

template = """<|user|>

Relevant documents: {context}

Provide a concise answer to the following question using the relevant documents provided above.

{question}

<|end|>

<|assistant|>"""

# The "stuff" chain injects the retrieved documents into the `context` variable

prompt = PromptTemplate(template=template, input_variables=["context", "question"])

from langchain.chains import RetrievalQA

# RAG pipeline

rag = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=db.as_retriever(), chain_type_kwargs={"prompt": prompt})

Advanced RAG

Query Rewriting: Using an LLM to rewrite the query to improve the retrieval results.

Multi-hop Retrieval: Using an LLM to break down the query into multiple sub-queries and retrieve relevant documents for each sub-query. For example, if the query is “What are the biggest mining companies in Australia? Are they profitable in 2025?”, we might first need to retrieve the list of the biggest mining companies in Australia, and then retrieve the financial data for each company to check whether it is profitable in 2025.
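To make the multi-hop idea concrete, here is a minimal sketch. The names `multi_hop_retrieve`, `fake_decompose`, and `fake_retrieve` are hypothetical stand-ins: a real system would call an LLM to decompose the query and a search index to retrieve. This simplified version runs the sub-queries independently, whereas a fuller version would feed the first hop's results (the company names) into the second hop.

```python
from typing import Callable, Dict, List


def multi_hop_retrieve(
    query: str,
    decompose: Callable[[str], List[str]],
    retrieve: Callable[[str], List[str]],
) -> Dict[str, List[str]]:
    """Retrieve documents for each sub-query produced by `decompose`."""
    results: Dict[str, List[str]] = {}
    for sub_query in decompose(query):
        results[sub_query] = retrieve(sub_query)
    return results


def fake_decompose(query: str) -> List[str]:
    # Assumption: splitting on "?" is a crude proxy for LLM-based decomposition.
    return [part.strip() + "?" for part in query.split("?") if part.strip()]


def fake_retrieve(sub_query: str) -> List[str]:
    # Assumption: returns a placeholder instead of querying a real index.
    return [f"document about: {sub_query}"]


hops = multi_hop_retrieve(
    "What are the biggest mining companies in Australia? Are they profitable in 2025?",
    fake_decompose,
    fake_retrieve,
)
for sub_query, docs in hops.items():
    print(sub_query, "->", docs)
```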

Semantic Search and Vector Database

The core component of RAG is the vector database, which stores document embeddings and retrieves relevant documents based on their semantic similarity to the query. Unlike a traditional search engine that relies on keyword-based or rule-based matching, semantic search is dense retrieval: queries and documents are mapped into a semantic space in which two similar documents lie close to each other. Contrastive learning is therefore a common technique for training the embedding model to improve semantic search performance.

In terms of system design, I care more about how to build a vector database in practice than about the theoretical details. More specifically, as discussed in [2], here are some considerations:
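The "similar items are close" property can be illustrated with cosine similarity. This is only a sketch: the three-dimensional vectors below are hand-made for illustration and do not come from a real embedding model.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Assumption: toy "embeddings" chosen by hand so related texts point the same way.
doc_vectors = {
    "laptop review": [0.9, 0.1, 0.0],
    "notebook computer guide": [0.8, 0.2, 0.1],
    "pasta recipe": [0.0, 0.1, 0.9],
}
query_vector = [1.0, 0.0, 0.0]

# Rank documents from most to least similar to the query.
ranked = sorted(
    doc_vectors,
    key=lambda name: cosine_similarity(query_vector, doc_vectors[name]),
    reverse=True,
)
print(ranked)
```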

- What if the vector database does not have the answer to the query? In this case, showing the distance between the query and the most similar documents in the vector database helps the user make a more informed decision. Many search systems present the best information they can find and leave the decision to the user.

- What if the query calls for an exact match rather than a semantically relevant one? In this case, hybrid search, which combines semantic search and keyword search, is a better approach.

- Domain shift: When the vector database is built on a domain that differs from the query domain, semantic search performance degrades. As with the first challenge, showing metadata might help.

- Chunking long documents: The best way to chunk long documents depends on the application. Options include one sentence per chunk, several sentences per chunk, and one paragraph per chunk. Adding the document title to each chunk or overlapping sentences between chunks might also help.
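The chunking options in the last item can be sketched as follows. The function `chunk_sentences` and its parameters are hypothetical, and the input is assumed to be already split into sentences.

```python
from typing import List


def chunk_sentences(sentences: List[str], size: int, overlap: int, title: str = "") -> List[str]:
    """Group sentences into chunks of `size`, sharing `overlap` sentences between neighbors."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks: List[str] = []
    for start in range(0, len(sentences), step):
        text = " ".join(sentences[start:start + size])
        # Optionally prefix each chunk with the document title for extra context.
        chunks.append(f"{title}: {text}" if title else text)
        if start + size >= len(sentences):
            break  # the last window already reached the end of the document
    return chunks


sentences = ["S1.", "S2.", "S3.", "S4.", "S5."]
chunks = chunk_sentences(sentences, size=3, overlap=1, title="Doc")
print(chunks)
```

With `size=3` and `overlap=1`, sentence S3 appears in both chunks, so a fact that straddles the boundary is less likely to be cut in half.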

FAISS

Indexing Vectors

FAISS creates an index to store and organize vectors efficiently. The indexing method affects performance:

- Flat Index (IndexFlatL2) → Exact k-NN search (slow but accurate).

- IVF (Inverted File Index) → Faster search with approximate results.

- HNSW (Hierarchical Navigable Small World) → Graph-based ANN search (fast & accurate).
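To show why an inverted file index is faster but approximate, here is a toy pure-Python sketch of the idea, not FAISS itself. The centroids are fixed by hand as an assumption (FAISS learns them during a training step), and `build_inverted_lists`, `ivf_search`, and `nprobe` as used here are illustrative names.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def l2(a: Vector, b: Vector) -> float:
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_inverted_lists(vectors: List[Vector], centroids: List[Vector]) -> Dict[int, List[int]]:
    """Assign each vector id to the list of its nearest centroid."""
    lists: Dict[int, List[int]] = {i: [] for i in range(len(centroids))}
    for vec_id, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: l2(vec, centroids[c]))
        lists[nearest].append(vec_id)
    return lists


def ivf_search(query: Vector, vectors: List[Vector], centroids: List[Vector],
               lists: Dict[int, List[int]], k: int, nprobe: int) -> List[Tuple[float, int]]:
    """Scan only the `nprobe` lists whose centroids are closest to the query."""
    probed = sorted(range(len(centroids)), key=lambda c: l2(query, centroids[c]))[:nprobe]
    candidates = [vec_id for c in probed for vec_id in lists[c]]
    return sorted((l2(query, vectors[i]), i) for i in candidates)[:k]


vectors = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [5.2, 4.9], [0.3, 0.1]]
centroids = [[0.0, 0.0], [5.0, 5.0]]  # assumption: hand-picked, not learned
lists = build_inverted_lists(vectors, centroids)
hits = ivf_search([0.2, 0.1], vectors, centroids, lists, k=2, nprobe=1)
print(hits)
```

With `nprobe=1` only three of the five vectors are compared against the query. The speedup comes from skipping the other lists, and the approximation comes from the chance that a true neighbor sits in a skipped list.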

import faiss

import numpy as np

# Generate random 512-dimension vectors for products

dimension = 512

num_vectors = 10000

vectors = np.random.random((num_vectors, dimension)).astype('float32')

# Create a FAISS index

index = faiss.IndexFlatL2(dimension) # L2 distance (Euclidean)

index.add(vectors) # Add vectors to the index

Searching for Similar Items

# Generate a random query vector

query_vector = np.random.random((1, dimension)).astype('float32')

# Find the top 5 nearest neighbors

k = 5

distances, indices = index.search(query_vector, k)

print("Nearest Neighbors:", indices)

print("Distances:", distances)
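The returned distances can also drive the "no answer" decision discussed earlier. Below is a minimal sketch that works on plain lists shaped like one row of `distances` and `indices`. The threshold value and the sample numbers are made up, and `filter_by_distance` is a hypothetical helper. As far as I know, `IndexFlatL2` reports squared L2 distances, so a threshold should be tuned on that scale.

```python
from typing import List, Tuple


def filter_by_distance(distances: List[float], indices: List[int],
                       max_distance: float) -> List[Tuple[int, float]]:
    """Keep only neighbors that are close enough to be trusted."""
    return [
        (idx, dist)
        for idx, dist in zip(indices, distances)
        if idx != -1 and dist <= max_distance  # -1 marks an empty result slot
    ]


# Assumption: made-up search output for a single query.
row_distances = [0.12, 0.35, 1.80, 2.40, 3.10]
row_indices = [42, 7, 311, 95, 18]

confident = filter_by_distance(row_distances, row_indices, max_distance=0.5)
answerable = len(confident) > 0
print(confident, answerable)
```

If `answerable` is false, the system can tell the user that nothing relevant was found instead of passing weak matches to the LLM.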

Prompt Engineering

Core components of an LLM system

Input handling and processing

Each application has its own input types (for example, code differs from clinical notes), so we need a module that handles the input types specific to the application.

Knowledge Base and Data Resources

The purpose of this module is to:

- Provide the necessary related knowledge to the LLM.

- Store user-specific data for personalized outputs and decisions.

This can be done by:

The core LLM-powered module

This is the main module that uses the LLM to generate the output/decision.

Prompting module

This can be integrated into the core LLM-powered module to improve the quality of the output/decision. We can have a sub-module to classify the input into different categories and use different prompt templates for each category.
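A sketch of such a routing sub-module is shown below. The categories, templates, and keyword rules are all assumptions for illustration; in practice the classifier could be a small model or an LLM call.

```python
from typing import Dict

# Assumption: three illustrative categories with one template each.
PROMPT_TEMPLATES: Dict[str, str] = {
    "code": "You are a code reviewer. Explain the following code:\n{user_input}",
    "clinical": "You are a clinical assistant. Summarize the following note:\n{user_input}",
    "general": "Answer the following question concisely:\n{user_input}",
}


def classify_input(user_input: str) -> str:
    """Hypothetical keyword classifier that picks a prompt category."""
    lowered = user_input.lower()
    if "def " in lowered or "import " in lowered:
        return "code"
    if "patient" in lowered or "diagnosis" in lowered:
        return "clinical"
    return "general"


def build_prompt(user_input: str) -> str:
    """Select the template for the detected category and fill it in."""
    category = classify_input(user_input)
    return PROMPT_TEMPLATES[category].format(user_input=user_input)


prompt_text = build_prompt("Patient reports mild headache for three days.")
print(prompt_text)
```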

We can also leverage the response from the LLM as well as the user feedback to improve the prompt.

Filtering and Validation

- Validation by rule-based logic checks, to make sure the input and output are correct and valid. However, rule-based checks are not always flexible enough to handle every case.

- Validation by another machine learning model, for example, another LLM or an uncertainty estimation model.

- Optional human-in-the-loop (HITL) validation by a human expert, especially in critical applications like medical diagnosis.
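The three layers above can be chained as in the sketch below. The specific rules, the `min_confidence` threshold, and the `model_check` callable (which stands in for a second LLM or an uncertainty estimator) are assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ValidationResult:
    passed: bool
    reason: str


def rule_check(output: str) -> ValidationResult:
    """Cheap rule-based checks that run first."""
    if not output.strip():
        return ValidationResult(False, "empty output")
    if len(output) > 2000:
        return ValidationResult(False, "output too long")
    return ValidationResult(True, "ok")


def validate(output: str, model_check: Callable[[str], float],
             min_confidence: float, critical: bool) -> str:
    """Return 'accepted', 'needs_human_review', or 'rejected: <reason>'."""
    rules = rule_check(output)
    if not rules.passed:
        return f"rejected: {rules.reason}"
    if model_check(output) < min_confidence:
        return "needs_human_review"  # the second model is unsure
    if critical:
        return "needs_human_review"  # critical domains always get a human check
    return "accepted"


# Assumption: a constant score replaces a real model-based validator.
decision = validate("Take 200 mg ibuprofen.", model_check=lambda text: 0.9,
                    min_confidence=0.7, critical=True)
print(decision)
```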

Safeguarding

Agentic Framework

Agent Tools

These are external tools or resources that the LLM can access to perform specific actions or gather information. This could be a calculator, a search API, or an external database.
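One simple way to expose tools is a name-to-function registry that the agent loop dispatches to. The sketch below assumes the LLM has already produced a tool name and a string argument. The `TOOLS` registry, `call_tool`, and the stubbed search are hypothetical, and the calculator only supports the four basic arithmetic operators.

```python
import ast
import operator
from typing import Callable, Dict

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Evaluate +, -, *, / on numbers without using eval()."""
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")
    return str(evaluate(ast.parse(expression, mode="eval").body))


def search_stub(query: str) -> str:
    # Assumption: placeholder for a real search API call.
    return f"(no real search performed for: {query})"


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "search": search_stub}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool call requested by the LLM."""
    if name not in TOOLS:
        return f"unknown tool: {name}"
    return TOOLS[name](argument)


result = call_tool("calculator", "2 * (3 + 4)")
print(result)
```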

Multi-agent system

Data Distribution Shifts and Monitoring

Continual Learning

Evaluation

Offline Evaluation

Test in Production

Deployment and Scaling

Case Study

Discussing the User Journey

The user journey describes how a user interacts with a product, from the first touchpoint (i.e., the user’s input) to the final interaction (i.e., displaying the result to the user).

Discussing the user journey helps us understand the flow of interaction between the user and the product, and identify the core components involved in that interaction.

Recommender System

User Interaction Layer

- Chat Interface: Users can describe their interests, ask questions, and discuss product features.

- Input Handling: Supports text-based and voice-based interactions.

NLP Module

- Intent Recognition: Extracts user intent (e.g., “I want a lightweight laptop for travel”).

- Entity Extraction: Identifies key product attributes (e.g., “lightweight,” “laptop,” “travel”).

- Sentiment Analysis: Understands user sentiment to refine recommendations.

Product Database

- Structure: Contains product details, including:

  - Name, Category, Price

  - Features & Specifications

  - User Reviews & Ratings

The most important component of this module is the embedding model to convert all the data into vectors so that we can use the vector database to store and search for similar items. The ideal vector space should be able to capture the semantic meaning of the data, i.e., the more similar the data is, the closer the vectors are in the vector space.

The most commonly used embedding models fall into three categories:

1️⃣ General-Purpose Text Embeddings (Best for Q&A, knowledge retrieval)

2️⃣ Domain-Specific Embeddings (Optimized for medical, legal, code, etc.)

3️⃣ Multimodal Embeddings (For text + images)

Recommendation Engine

- Content-Based Filtering: Matches user preferences with product attributes.

- Collaborative Filtering: Uses customer behavior data to suggest items others with similar preferences liked.

- Hybrid Approach: Combines content-based and collaborative filtering.
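The three approaches can be sketched with simple set arithmetic. The scoring functions, the `alpha` weight, and the sample catalog below are assumptions chosen for illustration. Production systems typically use learned embeddings or matrix factorization instead.

```python
from typing import Dict, Set


def content_score(user_prefs: Set[str], item_attrs: Set[str]) -> float:
    """Content-based: Jaccard overlap between preferences and item attributes."""
    union = user_prefs | item_attrs
    return len(user_prefs & item_attrs) / len(union) if union else 0.0


def collaborative_score(item: str, similar_users_likes: Dict[str, Set[str]]) -> float:
    """Collaborative: fraction of similar users who liked the item."""
    if not similar_users_likes:
        return 0.0
    liked = sum(1 for likes in similar_users_likes.values() if item in likes)
    return liked / len(similar_users_likes)


def hybrid_score(content: float, collaborative: float, alpha: float = 0.5) -> float:
    """Hybrid: weighted average of the two signals."""
    return alpha * content + (1 - alpha) * collaborative


prefs = {"lightweight", "laptop", "travel"}
catalog = {
    "ultrabook": {"lightweight", "laptop", "travel"},
    "gaming_laptop": {"laptop", "gaming", "heavy"},
}
similar_users = {"u1": {"ultrabook"}, "u2": {"ultrabook", "gaming_laptop"}}

scores = {
    name: hybrid_score(content_score(prefs, attrs), collaborative_score(name, similar_users))
    for name, attrs in catalog.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```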

RAG-based recommendation

RAG is built on two main components:

1️⃣ Retriever (Information Fetching)

- Dense Vector Search (FAISS, Annoy, Pinecone, Weaviate, ChromaDB) that uses embeddings (e.g., BERT, SBERT, DPR) to find semantically similar documents.

- Traditional Search (BM25, Elasticsearch, Google Search API) that retrieves documents using keyword-based matching.

2️⃣ Generator (Text Generation)

- Pre-trained LLMs (GPT, BART, T5, LLaMA) generate responses using the retrieved documents as additional context.

- Can use fine-tuned models for domain-specific responses (e.g., finance, medical).

Key Steps in RAG:

1️⃣ User Input → A query is given (e.g., “What are the latest gaming laptops?”).

2️⃣ Retrieval Module → Finds the most relevant documents using vector search or keyword search (FAISS, BM25, Elasticsearch, etc.).

3️⃣ Context Injection → The retrieved documents are passed to the generation model.

4️⃣ Response Generation → The model generates a final, coherent answer using both the query and retrieved documents.
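The four steps can be wired together as in the toy pipeline below. The keyword-overlap retriever stands in for vector or BM25 search, and the `generate` callable stands in for the LLM. Both are assumptions, as are the function names and the sample corpus.

```python
from typing import Callable, List


def retrieve(query: str, corpus: List[str], top_k: int = 2) -> List[str]:
    """Step 2: toy retriever that ranks documents by shared lowercase words."""
    query_terms = set(query.lower().split())
    scored = [(len(query_terms & set(doc.lower().split())), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_context_prompt(query: str, documents: List[str]) -> str:
    """Step 3: inject the retrieved documents into the prompt."""
    joined = "\n".join(f"- {doc}" for doc in documents)
    return f"Relevant documents:\n{joined}\n\nQuestion: {query}\nAnswer:"


def answer(query: str, corpus: List[str], generate: Callable[[str], str]) -> str:
    """Steps 1 to 4: take the query, retrieve, inject context, generate."""
    documents = retrieve(query, corpus)
    return generate(build_context_prompt(query, documents))


corpus = [
    "The Model A gaming laptop launched this year with a fast GPU",
    "A pasta recipe with tomato and basil",
    "Gaming laptops usually trade battery life for performance",
]
# Assumption: the generator just echoes the prompt; swap in a real LLM call.
reply = answer("latest gaming laptops", corpus, generate=lambda prompt: prompt)
print(reply)
```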

References

[1] Build your first LLM agent application: https://developer.nvidia.com/blog/building-your-first-llm-agent-application/

[2] Hands-on Large Language Models: Language Understanding and Generation
