Fantastic Targets for Concept Erasure in Diffusion Models and Where to Find Them

(Shameless plug) Our other papers on Concept Erasing/Unlearning:

Fantastic Targets for Concept Erasure in Diffusion Models and Where to Find Them,

Tuan-Anh Bui, Thuy-Trang Vu, Long Vuong, Trung Le, Paul Montague, Tamas Abraham, Junae Kim, Dinh Phung

ICLR 2025 (arXiv 2501.18950)

Erasing Undesirable Concepts in Diffusion Models with Adversarial Preservation,

Tuan-Anh Bui, Long Vuong, Khanh Doan, Trung Le, Paul Montague, Tamas Abraham, Dinh Phung

NeurIPS 2024 (arXiv 2410.15618)

Hiding and Recovering Knowledge in Text-to-Image Diffusion Models via Learnable Prompts,

Tuan-Anh Bui, Khanh Doan, Trung Le, Paul Montague, Tamas Abraham, Dinh Phung

ICLR 2025 DeLTa Workshop (arXiv 2403.12326)

Abstract

Concept erasure has emerged as a promising technique for mitigating the risk of harmful content generation in diffusion models by selectively unlearning undesirable concepts. Previous works typically remove a specific concept by mapping it to a fixed generic concept, such as a neutral concept or just an empty text prompt. In this paper, we demonstrate that this fixed-target strategy is suboptimal, as it fails to account for the impact of erasing one concept on the others. To address this limitation, we model the concept space as a graph and empirically analyze the effects of erasing one concept on the remaining concepts. Our analysis uncovers intriguing geometric properties of the concept space, where the influence of erasing a concept is confined to a local region. Building on this insight, we propose the Adaptive Guided Erasure (AGE) method, which dynamically selects optimal target concepts tailored to each undesirable concept, minimizing unintended side effects. Experimental results show that AGE significantly outperforms state-of-the-art erasure methods in preserving unrelated concepts while maintaining effective erasure performance.

Key Observations

Geometric Properties of the Concept Graph

- Locality: The concept graph is sparse and localized, which means that the impact of erasing one concept does not spread strongly to all other concepts, only to local concepts that are semantically related to the erased concept \(c_e\).

- Asymmetry: The concept graph is asymmetric such that the impact of erasing concept \(c_e\) on concept \(c_j\) is not the same as the impact of erasing concept \(c_j\) on concept \(c_e\).

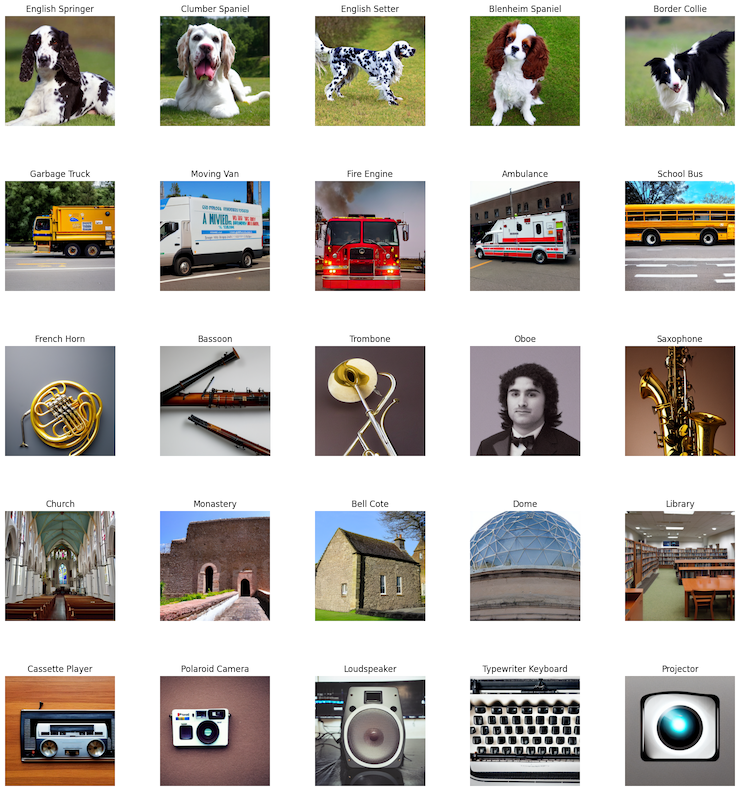

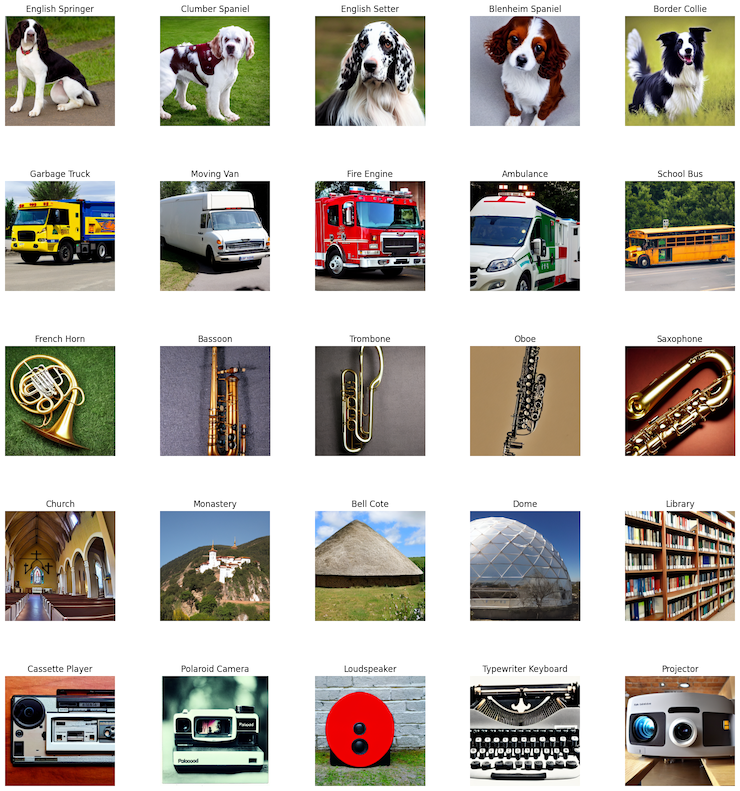

- Abnormal: The two abnormal concepts “Bell Cote” and “Oboe”, which have low generation capability to begin with, are sensitive to the erasure of any concept.
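Once the pairwise impacts have been measured, the three properties above can be checked mechanically. The sketch below is a minimal illustration, assuming \(\Delta(c_e, c_j)\) has already been computed (for example, as the drop in a generation score for \(c_j\) after erasing \(c_e\)) and stored in a nested dict. The function names, the threshold, and the toy concept names and values are hypothetical and are not results from the paper.

```python
from typing import Dict, List

# delta[e][j]: measured impact on concept j of erasing concept e (assumed precomputed).
ImpactMatrix = Dict[str, Dict[str, float]]


def local_neighbors(delta: ImpactMatrix, erased: str, threshold: float) -> List[str]:
    """Concepts noticeably affected by erasing `erased`, i.e. its local neighbourhood."""
    return sorted(j for j, d in delta[erased].items() if j != erased and d > threshold)


def edge_density(delta: ImpactMatrix, threshold: float) -> float:
    """Fraction of off-diagonal edges above the threshold; a small value suggests locality."""
    pairs = [(e, j) for e in delta for j in delta[e] if j != e]
    return sum(delta[e][j] > threshold for e, j in pairs) / len(pairs)


def asymmetry(delta: ImpactMatrix, a: str, b: str) -> float:
    """|Delta(a, b) - Delta(b, a)|; zero would mean the two impacts are symmetric."""
    return abs(delta[a][b] - delta[b][a])


def abnormal_concepts(delta: ImpactMatrix, threshold: float) -> List[str]:
    """Concepts affected above the threshold by the erasure of every other concept."""
    concepts = list(delta)
    return sorted(
        j for j in concepts
        if all(delta[e][j] > threshold for e in concepts if e != j)
    )


if __name__ == "__main__":
    # Toy values for illustration only (not measurements); "fragile" plays the
    # role of an abnormal concept such as "Bell Cote".
    toy = {
        "dog_a": {"dog_a": 0.9, "dog_b": 0.4, "truck": 0.0, "fragile": 0.3},
        "dog_b": {"dog_a": 0.2, "dog_b": 0.9, "truck": 0.0, "fragile": 0.35},
        "truck": {"dog_a": 0.0, "dog_b": 0.05, "truck": 0.9, "fragile": 0.25},
        "fragile": {"dog_a": 0.0, "dog_b": 0.0, "truck": 0.0, "fragile": 0.9},
    }
    print(local_neighbors(toy, "dog_a", 0.1))  # ['dog_b', 'fragile']
    print(abnormal_concepts(toy, 0.1))  # ['fragile']
```

Here, asymmetry shows up as `toy["dog_a"]["dog_b"]` differing from `toy["dog_b"]["dog_a"]`, and an abnormal concept is simply a column that stays above the threshold for every erased row.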

What are the Fantastic Concepts?

- Locality: Regardless of the choice of the target concept, the impact of erasing one concept is still sparse and localized.

- Abnormal: The two abnormal concepts “Bell Cote” and “Oboe” are still sensitive to the erasure of any concept, regardless of the choice of the target concept.

- Synonym ❌❌❌: Mapping to a synonym of the anchor concept leads to minimal change, as evidenced by the lowest \(\Delta(c_e, c_j)\) for all \(c_j\). However, it is also the least effective at erasing the undesirable concept \(c_e\).

- All Unrelated Concepts ❌❌: Mapping to semantically unrelated concepts yields performance similar to that of the generic concept “ ”, as evidenced by the similar \(\Delta(c_e, c_j)\) across the last three rows (\(5^{th}\) to \(7^{th}\)) of each subset.

- General concepts ❌: While choosing a general concept as the target concept is intuitive and reasonable, it does not necessarily lead to good preservation performance. For example, “English Springer” → “Dog” or “French Horn” → “Musical Instrument Horn” still causes a drop in related concepts in their respective subsets. Moreover, there are also small drops in erasure performance compared to other strategies, shown by DS-5 of 83%, 91%, and 78% when mapping “Garbage Truck” to “Truck”, “French Horn” to “Musical Instrument Horn”, and “Cassette Player” to “Audio Device”, respectively. These two observations indicate that choosing a general concept is not an optimal strategy.

- In-class ✅: The highest preservation performance, consistently observed across all five subsets, is achieved when the target concept is closely related to \(c_e\). For example, “English Springer” → “Clumber Spaniel”, or “Garbage Truck” → “Moving Van” or “School Bus” in the “Dog” and “Vehicle” subsets.

Analogy to the Concept Graph

The concept space of a generative model can be represented as a graph, like a spiderweb, where each node is a concept and each edge represents the impact of one concept on another. When we remove a concept, it’s like dropping a ball into this conceptual spiderweb, triggering a chain reaction. The impact of erasure spreads out, strongly affecting locally related concepts while weakly affecting those farther away or unrelated.

When we map a concept to a target concept, it’s like pulling two nodes of the spiderweb closer together; the impact of erasure is therefore proportional to the distance between the two nodes, i.e., the farther apart the two concepts are, the greater the impact of erasure on the whole concept space. The fantastic target concepts found in our paper are those that are locally connected to the concept to be erased but are not its synonyms; they are therefore the optimal target concepts for erasure, minimizing the impact on the whole concept space.

Implications of the Concept Graph

One of the most interesting contributions of this work is the introduction of the concept graph, which represents a generative model’s capability as a graph, where nodes are discrete visual concepts, and edges are the relationships between those concepts.

Understanding this graph structure is essential for many tasks, such as:

- Machine Unlearning 🗑️: The goal here is to remove the model’s knowledge of certain concepts while retaining its knowledge of others. The concept graph structure helps identify which concepts are critical to the model’s performance and should be preserved.

- Personalization 👤: The goal is to personalize the model’s knowledge for a specific user. For instance, changing “a photo of a cat before Vancouver Convention Center” to “a photo of a cat before the user’s house.” Traditional methods like DreamBooth, which fine-tune the model on a small user-specific dataset, often overfit to the specific concept and degrade the model’s general capability. Prior approaches address this by collecting large datasets of heuristically selected concepts; e.g., if the personalized concept is “a user’s house,” the preservation dataset would include a variety of house images. Our concept graph structure can help identify which concepts are specific to the user and should be preserved, improving the balance between personalization and generalization.

Where to Find the Fantastic Concepts?

Motivated by the above observations, we propose to select the target concept for erasure adaptively for each query concept to mitigate the side effects of erasing undesirable concepts in diffusion models.

More specifically, the ideal target concept should satisfy the following properties:

- It should not be a synonym of the query concept with a similar visual appearance (e.g., “nudity” to “naked” or “nude”, or “Garbage Truck” to “Waste Collection Vehicle”). This ensures that the erasure performance on the query concept remains effective.

- It should be closely related to the query concept but not identical (e.g., “English Springer” to “Clumber Spaniel”, or “Garbage Truck” to “Moving Van”). This helps preserve the model’s generation capabilities on other concepts. As suggested by the locality property of the concept graph, changes in the model’s output can be used to identify these locally related concepts.
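To make the two properties concrete, here is a hypothetical rule-based filter; it is not AGE, which replaces such hand-set rules with the optimization described next. The sketch assumes each concept has an embedding vector and uses cosine similarity as a stand-in for “closely related”: candidates above a synonym threshold are discarded (first property), and the most similar remaining candidate is returned (second property). The function names, the threshold of 0.95, and the toy vectors are all illustrative assumptions.

```python
import math
from typing import Dict, Optional, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def pick_target(
    query: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    synonym_threshold: float = 0.95,
) -> Optional[str]:
    """Most similar candidate that is NOT treated as a synonym of the query.

    Property 1: skip candidates whose similarity reaches `synonym_threshold`
    (likely synonyms, which would keep the erasure ineffective).
    Property 2: among the rest, return the closest one (a locally related concept).
    Returns None if every candidate is filtered out.
    """
    best_name: Optional[str] = None
    best_sim = -math.inf
    for name, emb in candidates.items():
        sim = cosine(query, emb)
        if sim >= synonym_threshold:
            continue
        if sim > best_sim:
            best_name, best_sim = name, sim
    return best_name


if __name__ == "__main__":
    query = [1.0, 0.0, 0.0]  # toy embedding of the concept to erase
    candidates = {
        "near_synonym": [0.99, 0.05, 0.0],
        "related": [0.8, 0.6, 0.0],
        "unrelated": [0.0, 0.0, 1.0],
    }
    print(pick_target(query, candidates))  # related
```

The weakness of this kind of rule is that both the similarity measure and the threshold must be chosen by hand for every erasing set, which is the scalability problem the optimization-based approach is meant to avoid.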

Although it is possible to manually select the ideal target concept for each query concept based on the above properties, this approach is not scalable for a large erasing set \(\mathbf{E}\). Therefore, we propose an optimization-based approach to automatically and adaptively find the optimal target concept for each query concept. Specifically, we aim to solve the following optimization problem:

\[\underset{\theta^{'}}{\min} \; \underset{c_e \in \mathbf{E}}{\mathbb{E}} \; \underset{c_{t} \in \mathcal{C}}{\max} \left[ \underbrace{\left\|\epsilon_{\theta^{'}}(\tau(c_e)) - \epsilon_{\theta}(\tau(c_{t})) \right\|_2^2}_{L_1} + \lambda \underbrace{\left\|\epsilon_{\theta^{'}}(\tau(c_{t})) - \epsilon_{\theta}(\tau(c_{t}))\right\|_2^2}_{L_2} \right]\]

where \(\lambda\) is a trade-off hyperparameter and \(\mathcal{C}\) is the search space of target concepts \(c_t\).

Interpretation of the optimization problem:

Minimizing the objective \(L_1\) w.r.t. \(\theta^{'}\) ensures that the output of the sanitized model for the query concept \(c_e\) is close to the output of the original model for the target concept \(c_t\), which serves the purpose of erasing the undesirable concept \(c_e\).

Meanwhile, minimizing the objective \(L_2\) w.r.t. \(\theta^{'}\) ensures that the outputs of the two models remain similar for the same input concept \(c_t\), preserving the model’s capability on the remaining concepts.

In summary, the outer minimization problem optimizes the sanitized model parameters \(\theta^{'}\) to simultaneously erase undesirable concepts and preserve the model’s functionality for other concepts.

On the other hand, maximizing the objective \(L_1\) w.r.t. \(c_t\) ensures that the solution \(c_t^*\) is not a synonym of the query concept \(c_e\), while maximizing the objective \(L_2\) w.r.t. \(c_t\) finds a concept that is sensitive to the change in the model’s parameters \(\theta \rightarrow \theta^{'}\).

This helps identify the most related concept to the query concept \(c_e\), as suggested by the locality property of the concept graph. By maximizing both \(L_1\) and \(L_2\) w.r.t. \(c_t\), we ensure that the solution \(c_t^*\) satisfies both key properties of the ideal target concept.
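For a small, finite candidate set, the min-max objective above can be evaluated directly. The sketch below assumes the original and sanitized noise predictors are plain callables that map a concept name to a predicted-noise vector at one fixed noisy latent and timestep, a hypothetical simplification of \(\epsilon_{\theta}(\tau(c))\) and \(\epsilon_{\theta^{'}}(\tau(c))\). It computes \(L_1 + \lambda L_2\), the inner max over \(\mathcal{C}\) by enumeration, and the outer average over \(\mathbf{E}\). It only evaluates the objective and does not update \(\theta^{'}\); enumeration like this is only practical for a handful of candidates. All names and toy vectors are illustrative.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Vec = Sequence[float]
# Hypothetical stand-in for epsilon(tau(c)) at a fixed noisy latent z_t and timestep t.
NoisePredictor = Callable[[str], Vec]


def sq_dist(a: Vec, b: Vec) -> float:
    """Squared L2 distance ||a - b||_2^2."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def inner_objective(eps_new: NoisePredictor, eps_old: NoisePredictor,
                    c_e: str, c_t: str, lam: float) -> float:
    """L1 + lambda * L2 for one (query, target) pair."""
    l1 = sq_dist(eps_new(c_e), eps_old(c_t))  # erase: new model on c_e mimics old model on c_t
    l2 = sq_dist(eps_new(c_t), eps_old(c_t))  # preserve: new model unchanged on c_t
    return l1 + lam * l2


def worst_case_target(eps_new: NoisePredictor, eps_old: NoisePredictor, c_e: str,
                      candidates: Iterable[str], lam: float) -> Tuple[str, float]:
    """Inner max over a finite candidate set C, found by enumeration."""
    return max(
        ((c_t, inner_objective(eps_new, eps_old, c_e, c_t, lam)) for c_t in candidates),
        key=lambda pair: pair[1],
    )


def outer_objective(eps_new: NoisePredictor, eps_old: NoisePredictor,
                    erase_set: List[str], candidates: List[str], lam: float) -> float:
    """Average over c_e in E of the inner max; theta' would be updated to minimize this."""
    total = sum(worst_case_target(eps_new, eps_old, c_e, candidates, lam)[1]
                for c_e in erase_set)
    return total / len(erase_set)


if __name__ == "__main__":
    old = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    new = {"a": [0.0, 1.0], "b": [0.0, 1.5], "c": [1.0, 1.0]}
    print(worst_case_target(new.get, old.get, "a", ["b", "c"], lam=1.0))  # ('c', 1.0)
```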

Comparison with Adversarial Preservation

The definition of adversarial concepts (those most affected by changes in model parameters when a concept is erased) was first introduced in our previous work, “Erasing Undesirable Concepts in Diffusion Models with Adversarial Preservation”.

Building on empirical observations that erasing different concepts impacts the remaining ones in various ways and that preserving a neutral concept alone is insufficient to maintain the model’s capabilities, we propose a method for preserving sensitive concepts while erasing undesirable ones. This approach is similar to the adversarial training principle, which has been successfully applied to improve the robustness of models against adversarial attacks.

Our ICLR 2025 paper is inspired by the idea of adversarial concepts but with several key differences:

- Instead of focusing on the to-be-preserved concept, we investigate the impact of the target concept on the remaining concepts, offering new insights into the selection and importance of the target concept in the erasure problem.

- We propose an improved optimization method that more accurately identifies adversarial concepts, explicitly excluding those identical to the to-be-erased concept, an aspect not considered in our NeurIPS 2024 paper.

- For the first time, we introduce the notion of a concept graph and its properties, providing a deeper understanding of concept relationships in the latent space.

Experiments

We conducted comprehensive experiments on three erasure tasks to demonstrate the effectiveness of our method. Please refer to the paper for more details.

Besides the experiments that support the superiority of our method (of course, as in all other papers :D), in this blog post (in a more relaxed style) we would like to highlight some interesting observations discussed in Appendix D of our paper that we think are worthy of future research.

Abnormal Concepts

One interesting observation is that there are some special concepts that are sensitive to the erasure of any concept, such as “Bell Cote” and “Oboe”. We call them abnormal concepts. It is interesting to see that the abnormal concepts are always the ones that already have low generation capability to begin with, i.e., the lowest DS-5 score (red + blue bar) in the bar chart of the figure below.

Mixture of Concepts

Why mixture of concepts?

Given that the concept space \(\mathcal{C}\) is discrete and finite, a natural approach to solving Equation 3 would be to enumerate all the concepts in \(\mathcal{C}\) and select the most sensitive concept \(c_t\) that maximizes the total loss at each optimization step of the outer minimization problem. However, this method is computationally impractical due to the large number of concepts in \(\mathcal{C}\). Moreover, many concepts are inherently complex, often composed of multiple attributes, and cannot always be interpreted as singular, isolated concepts within the space \(\mathcal{C}\).

Since the concepts are represented textually and the output of Latent Diffusion Models is controlled by textual prompts, the most intuitive way to create a mixture of concepts, such as combining \(c_1\) and \(c_2\), is through a textual template. For example, using a prompt like “a photo of a {\(c_1\)} and a {\(c_2\)}” ensures that both concepts appear in the generated image. However, this approach has a significant limitation: selecting words to fill a template is a discrete operation that provides no gradients, preventing us from using standard backpropagation to learn and fine-tune the target concept \(c_t\).

To address this issue, we employ the Gumbel-Softmax operator to approximate a mixture of concepts. We set the temperature to a value below 1, ensuring that the resulting target concept is a combination of a few dominant concepts, rather than an indiscriminate mixture of all the concepts in \(\mathcal{C}\).
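A minimal, framework-free sketch of this relaxation follows. It assumes the learnable parameters are one logit per concept in a small candidate set and that mixing happens at the text-embedding level, as in the interpolation \((1 - \alpha)\tau(c_1) + \alpha\tau(c_2)\) used in the Visualization section. In a real training loop the logits would be tensors updated by backpropagation (PyTorch, for example, provides `torch.nn.functional.gumbel_softmax`); the function names and toy logits here are hypothetical. For the same Gumbel noise, lowering the temperature can only sharpen the weights, which is why a temperature below 1 concentrates the mixture on a few dominant concepts.

```python
import math
import random
from typing import List, Sequence


def sample_gumbels(n: int, rng: random.Random) -> List[float]:
    """Draw n samples of Gumbel(0, 1) noise: g = -log(-log(u)), u ~ Uniform(0, 1)."""
    # Clamp u away from 0 so that log(u) is always defined.
    return [-math.log(-math.log(max(rng.random(), 1e-12))) for _ in range(n)]


def gumbel_softmax(logits: Sequence[float], gumbels: Sequence[float], tau: float) -> List[float]:
    """Relaxed one-hot weights: softmax((logits + gumbels) / tau)."""
    z = [(l + g) / tau for l, g in zip(logits, gumbels)]
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def mix_embeddings(weights: Sequence[float],
                   embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Weighted sum of concept embeddings, i.e. a soft target concept."""
    dim = len(embeddings[0])
    return [sum(w * emb[d] for w, emb in zip(weights, embeddings)) for d in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [2.0, 1.5, 0.1, -1.0]  # hypothetical learnable scores over 4 concepts
    g = sample_gumbels(len(logits), rng)
    sharp = gumbel_softmax(logits, g, tau=0.5)
    soft = gumbel_softmax(logits, g, tau=5.0)
    # With the same noise, max(sharp) is always >= max(soft).
    print(max(sharp), max(soft))
```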

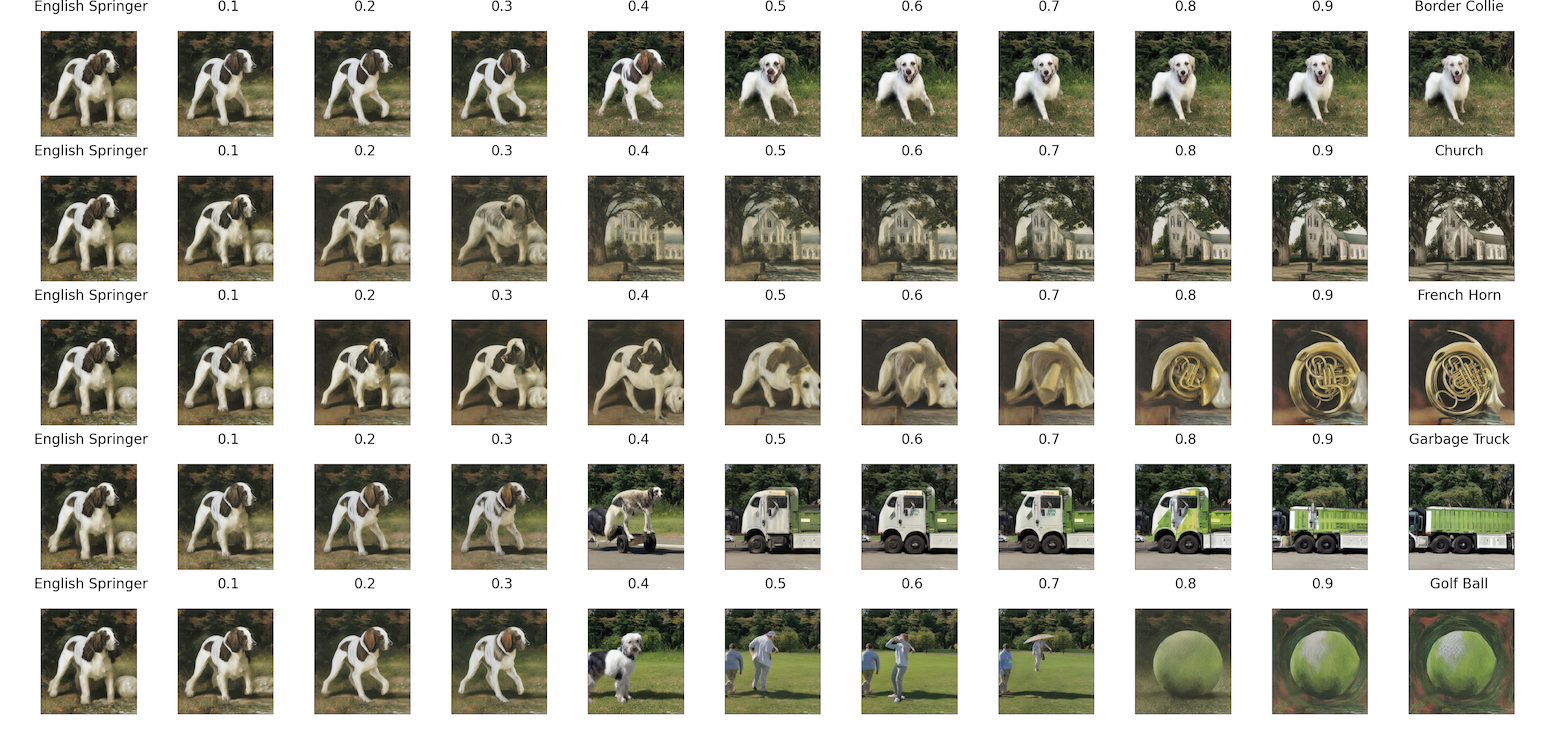

Visualization

To illustrate the effect of concept mixtures as described in Equation 4, we visualize the generated images \(g(z_T, (1 - \alpha) \tau(c_1) + \alpha \tau(c_2))\) in the figure below, where \(g(\cdot)\) represents the image generation function (the diffusion backward process), \(z_T\) is the initial noise input, and \(\tau\) is the textual encoder. We fix \(c_1\) as “English Springer” and vary \(\alpha\) from 0 to 1 with different \(c_2\) values to simulate the mixture of concepts \(G(\pi) \cdot \mathcal{C}\) in Equation 3. Intuitively, we expect the generated images to gradually transition from concept \(c_1\) to concept \(c_2\) as \(\alpha\) increases.
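The conditioning sweep behind this visualization can be sketched as follows, assuming text embeddings are represented as flat lists of floats (real encoders such as the one in Stable Diffusion output a sequence of token embeddings, and the interpolation would be applied element-wise in the same way). The generator \(g\) is not implemented here; the commented call and the toy embedding values are hypothetical placeholders.

```python
from typing import List, Sequence


def interpolate(e1: Sequence[float], e2: Sequence[float], alpha: float) -> List[float]:
    """(1 - alpha) * tau(c1) + alpha * tau(c2), element-wise."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(e1, e2)]


def alpha_schedule(steps: int) -> List[float]:
    """Evenly spaced alpha values from 0 to 1 inclusive (requires steps >= 2)."""
    return [i / (steps - 1) for i in range(steps)]


if __name__ == "__main__":
    tau_c1 = [2.0, 0.0]  # hypothetical stand-in for tau("English Springer")
    tau_c2 = [0.0, 4.0]  # hypothetical stand-in for tau(c_2)
    for alpha in alpha_schedule(5):
        cond = interpolate(tau_c1, tau_c2, alpha)
        # image = g(z_T, cond)  # hypothetical generator call with the same fixed z_T
        print(alpha, cond)
```

Keeping \(z_T\) fixed across the whole sweep is what makes the frames comparable: with the noise held constant, any abrupt jump between neighbouring \(\alpha\) values comes from the conditioning rather than from the sampling noise.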

In cases like “Church,” “French Horn,” and “Garbage Truck,” this gradual transformation is indeed observable, where the image transitions smoothly from “English Springer” to the target concept as \(\alpha\) increases. However, for other concepts like “Border Collie” and “Golf Ball,” the transformation is less smooth. For instance, the image shifts abruptly from “English Springer” to “Border Collie” when \(\alpha\) increases from 0.4 to 0.5, or from “English Springer” to “Golf Ball” as \(\alpha\) changes from 0.4 to 0.7.

This phenomenon suggests that mixing concepts is not merely a linear interpolation between two target concepts, but is influenced by the intrinsic nature of the concepts \(c_1\) and \(c_2\).

Citation

If you find this work useful in your research, please consider citing our paper (or our other papers):

@inproceedings{bui2024erasing,
  title={Erasing Undesirable Concepts in Diffusion Models with Adversarial Preservation},
  author={Bui, Anh and Vuong, Long and Doan, Khanh and Le, Trung and Montague, Paul and Abraham, Tamas and Phung, Dinh},
  booktitle={NeurIPS},
  year={2024}
}

@inproceedings{bui2025fantastic,
  title={Fantastic Targets for Concept Erasure in Diffusion Models and Where to Find Them},
  author={Bui, Anh and Vu, Thuy-Trang and Vuong, Long and Le, Trung and Montague, Paul and Abraham, Tamas and Kim, Junae and Phung, Dinh},
  booktitle={ICLR},
  year={2025}
}

@inproceedings{bui2024hiding,
  title={Hiding and recovering knowledge in text-to-image diffusion models via learnable prompts},
  author={Bui, Anh Tuan and Doan, Khanh and Le, Trung and Montague, Paul and Abraham, Tamas and Phung, Dinh},
  booktitle={ICLR 2025 Workshop on Deep Generative Model in Machine Learning: Theory, Principle and Efficacy},
  year={2024}
}