Hiding and Recovering Knowledge in Text-to-Image Diffusion Models via Learnable Prompts

(Shameless plug) Our other papers on Concept Erasing/Unlearning:

Fantastic Targets for Concept Erasure in Diffusion Models and Where to Find Them,

Tuan-Anh Bui, Thuy-Trang Vu, Long Vuong, Trung Le, Paul Montague, Tamas Abraham, Junae Kim, Dinh Phung

ICLR 2025 (arXiv 2501.18950)

Erasing Undesirable Concepts in Diffusion Models with Adversarial Preservation,

Tuan-Anh Bui, Long Vuong, Khanh Doan, Trung Le, Paul Montague, Tamas Abraham, Dinh Phung

NeurIPS 2024 (arXiv 2410.15618)

Hiding and Recovering Knowledge in Text-to-Image Diffusion Models via Learnable Prompts,

Tuan-Anh Bui, Khanh Doan, Trung Le, Paul Montague, Tamas Abraham, Dinh Phung

ICLR 2025 DeLTa Workshop (arXiv 2403.12326)

Abstract

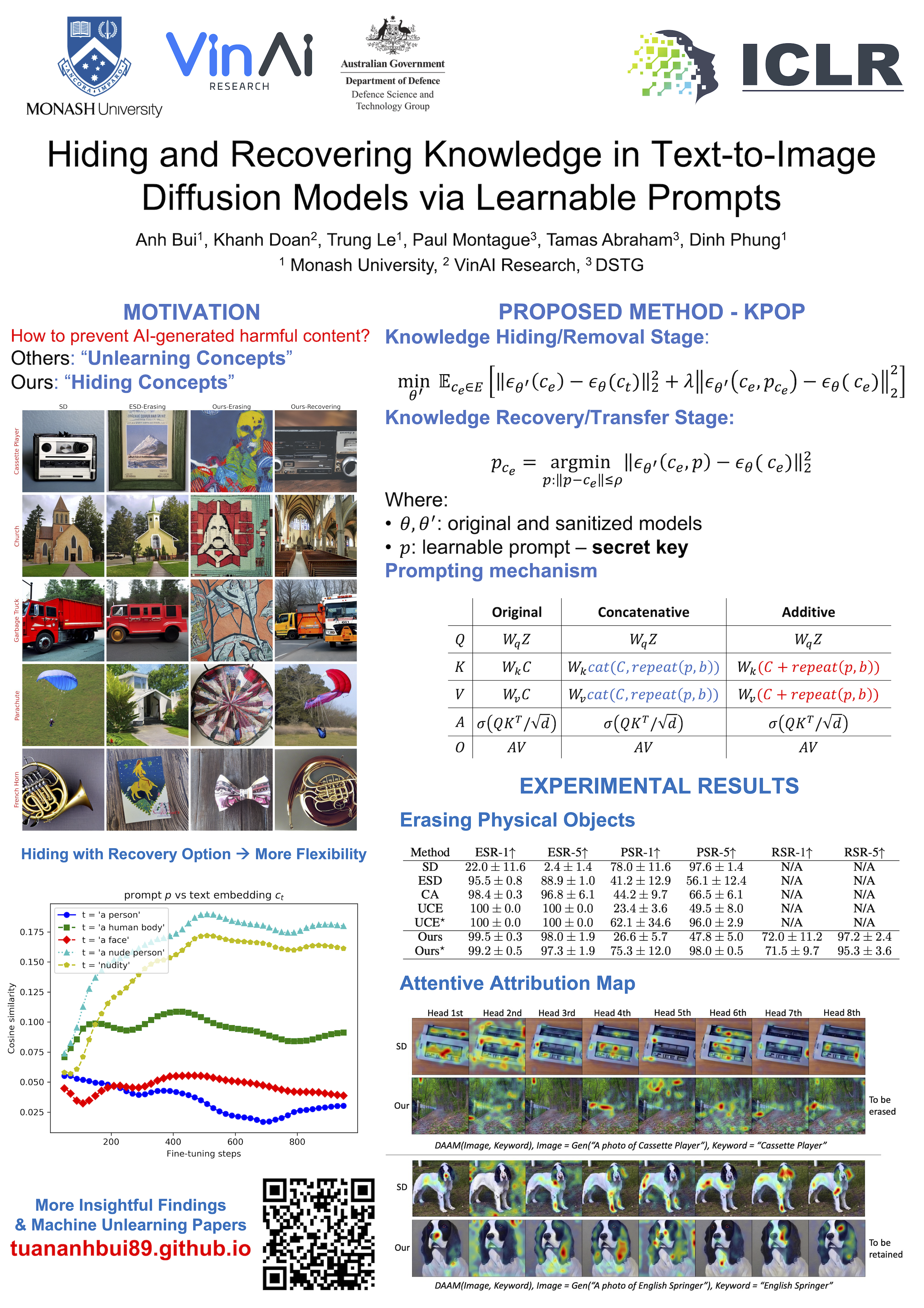

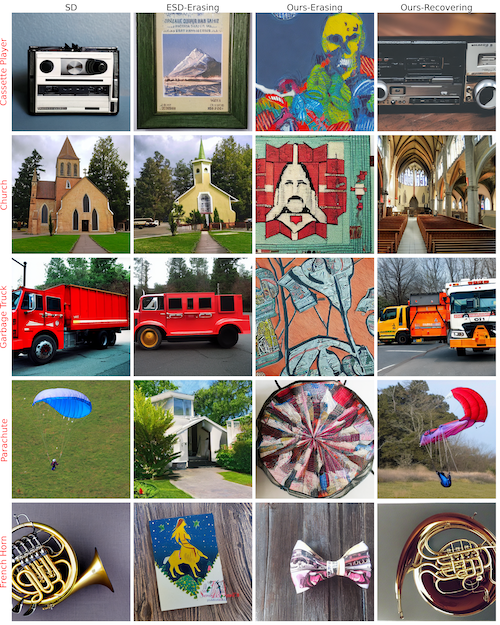

Diffusion models have demonstrated remarkable capability in generating high-quality visual content from textual descriptions. However, since these models are trained on large-scale internet data, they inevitably learn undesirable concepts, such as sensitive content, copyrighted material, and harmful or unethical elements. While previous works focus on permanently removing such concepts, this approach is often impractical, as it can degrade model performance and lead to irreversible loss of information. In this work, we introduce a novel concept-hiding approach that makes unwanted concepts inaccessible to public users while allowing controlled recovery when needed. Instead of erasing knowledge from the model entirely, we incorporate a learnable prompt into the cross-attention module, acting as a secure memory that suppresses the generation of hidden concepts unless a secret key is provided. This enables flexible access control, ensuring that undesirable content cannot be easily generated while preserving the option to reinstate it under restricted conditions. Our method introduces a new paradigm where concept suppression and controlled recovery coexist, which was not feasible in prior works. We validate its effectiveness on the Stable Diffusion model, demonstrating that hiding concepts mitigates the risks of permanent removal while maintaining the model’s overall capability.
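To make the idea of a learnable prompt inside cross-attention more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation. It assumes the prompt is a small block of extra token embeddings concatenated to the text embeddings before the key/value projections, and that supplying this block plays the role of the secret key. The function `cross_attention`, the variable `secret_prompt`, and all dimensions are hypothetical, and the random weights stand in for trained parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(latents, text_emb, W_q, W_k, W_v, prompt=None):
    """Single-head cross-attention from image latents to text tokens.

    `prompt` is a hypothetical block of extra learnable tokens; when given,
    it is appended to the text embeddings (an assumption of this sketch).
    """
    context = text_emb if prompt is None else np.concatenate([text_emb, prompt], axis=0)
    q = latents @ W_q            # (n_latents, d_head)
    k = context @ W_k            # (n_context, d_head)
    v = context @ W_v            # (n_context, d_head)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return weights @ v, weights

rng = np.random.default_rng(0)
d_latent, d_text, d_head = 8, 6, 4
latents = rng.normal(size=(5, d_latent))     # 5 latent positions
text_emb = rng.normal(size=(3, d_text))      # 3 text tokens
W_q = rng.normal(size=(d_latent, d_head))
W_k = rng.normal(size=(d_text, d_head))
W_v = rng.normal(size=(d_text, d_head))
secret_prompt = rng.normal(size=(2, d_text))  # 2 extra tokens (untrained stand-in)

# Public path: no prompt, attention only sees the text tokens.
public_out, public_w = cross_attention(latents, text_emb, W_q, W_k, W_v)
# Keyed path: the prompt tokens join the context and can receive attention.
keyed_out, keyed_w = cross_attention(latents, text_emb, W_q, W_k, W_v, prompt=secret_prompt)
```

In this sketch the output shape is the same on both paths, so the rest of the network is unaffected structurally. Only the set of tokens the latents can attend to changes, which is what would let a trained prompt carry the hidden concept.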

Citation

If you find this work useful in your research, please consider citing our paper (or our other papers):

@inproceedings{bui2024erasing,

title={Erasing Undesirable Concepts in Diffusion Models with Adversarial Preservation},

author={Bui, Anh and Vuong, Long and Doan, Khanh and Le, Trung and Montague, Paul and Abraham, Tamas and Phung, Dinh},

booktitle={NeurIPS},

year={2024}

}

@inproceedings{bui2025fantastic,

title={Fantastic Targets for Concept Erasure in Diffusion Models and Where to Find Them},

author={Bui, Anh and Vu, Thuy-Trang and Vuong, Long and Le, Trung and Montague, Paul and Abraham, Tamas and Kim, Junae and Phung, Dinh},

booktitle={ICLR},

year={2025}

}

@inproceedings{bui2024hiding,

title={Hiding and recovering knowledge in text-to-image diffusion models via learnable prompts},

author={Bui, Anh Tuan and Doan, Khanh and Le, Trung and Montague, Paul and Abraham, Tamas and Phung, Dinh},

booktitle={ICLR 2025 Workshop on Deep Generative Model in Machine Learning: Theory, Principle and Efficacy},

year={2024}

}