Mitigating Semantic Collapsing Problem in Generative Personalization with Test-time Embedding Adjustment

Table of Contents

- Abstract

- Motivation

- Contributions

- Semantic Collapsing Problem (Witch Hunting)

- Test-Time Embedding Adjustment

- Surprising Impact of TEA on Anti-DreamBooth

- Experiments

- Citation

- References

Abstract

In this paper, we investigate the semantic collapsing problem in generative personalization, an under-explored topic where the learned visual concept (\(V^*\)) gradually shifts from its original textual meaning and comes to dominate other concepts in multi-concept input prompts. This issue not only collapses complex input prompts like “a photo of \(V^*\) wearing glasses and playing guitar” into simpler, less contextually rich forms such as “a photo of \(V^*\)”, but also leads to simplified output images that fail to capture the intended concept. We identify the root cause as unconstrained optimisation, which allows the learned embedding \(V^*\) to drift arbitrarily in the embedding space, both in direction and magnitude. To address this, we propose a simple yet effective training-free method that adjusts the magnitude and direction of the pre-trained embedding at inference time, effectively mitigating the semantic collapsing problem. Our method is broadly applicable across different personalization methods and demonstrates significant improvements in text-image alignment in diverse use cases. Our code is published at https://github.com/tuananhbui89/Embedding-Adjustment.

Motivation

Misalignment between the input prompt and the generated output is a grand challenge in generative personalization. While the goal is to faithfully preserve the personalized concept and simultaneously respect the semantic content of the prompt, existing methods often fail to achieve this balance. For instance, DreamBooth [1] introduces a class-specific prior preservation loss to mitigate overfitting, while other approaches seek to disentangle the personalized concept from co-occurring or background elements in the reference set [6, 7]. Recent works further attempt to regulate semantic fidelity through regularization strategies [4, 5] or compositional disentanglement [2, 3].

Contributions

In this paper, we make three contributions that I am personally really proud of:

- We first highlight the semantic collapsing problem (SCP) in generative personalization, which is under-explored in the literature. We show that this phenomenon is driven by unconstrained optimization during personalization fine-tuning. To the best of our knowledge, this is the first work that explicitly points out this problem.

- We propose Test-time Embedding Adjustment (TEA), a training-free method that adjusts the embedding of the personalized concept at inference time, effectively mitigating the semantic collapsing problem. This is a simple, general, and yet very effective approach, and the first of its kind :D.

- We connect SCP to Anti-DreamBooth, showing why Anti-DreamBooth works and how TEA can be applied to partially reverse the Anti-DreamBooth effect and restore the protected concept. While several works have exposed the weak security of Anti-Personalization frameworks, most of them focus on the preprocessing phase (i.e., removing the invisible mask from the protected data so that it can still be personalized). Our work is the first to show the vulnerability of Anti-Personalization frameworks in the post-processing phase (i.e., given a model thought to be protected, we can still recover the protected concept).

Semantic Collapsing Problem (Witch Hunting)

The flow of the paper is a bit unusual: I start by hypothesizing the problem, then propose a method to show its existence, and finally propose the solution (TEA). Although my colleagues argued for a different flow (problem, solution, explanation), I decided to keep mine (problem, explanation, solution) because I believe that once everyone understands the problem, the solution becomes very straightforward.

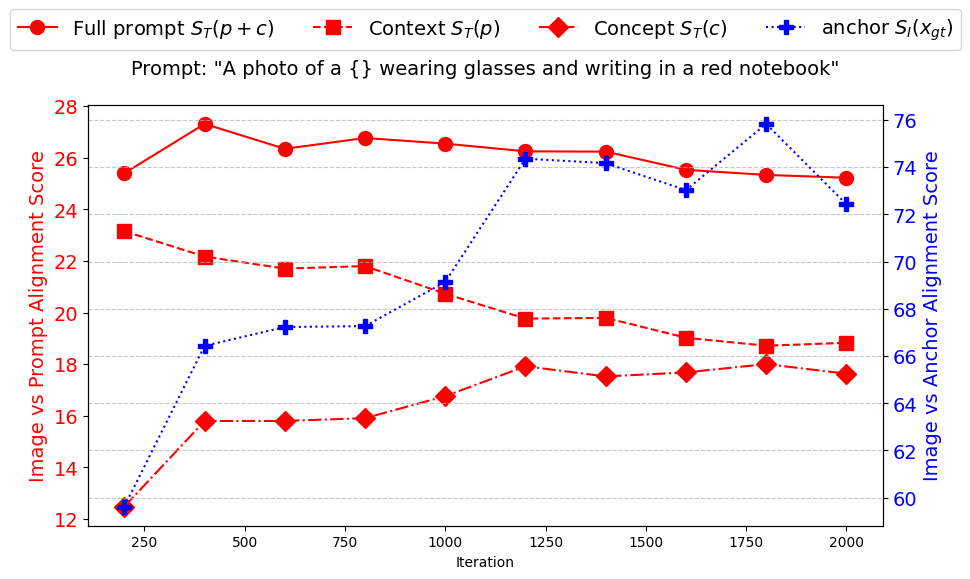

In this section, we present empirical evidence supporting the existence of the semantic collapsing problem and its impact on generation quality. Our key findings are as follows:

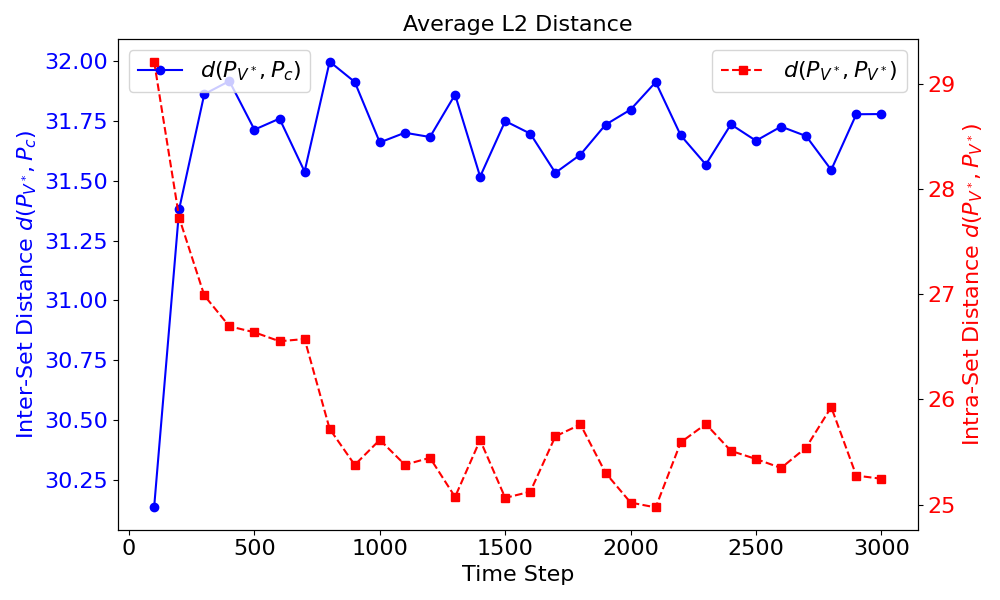

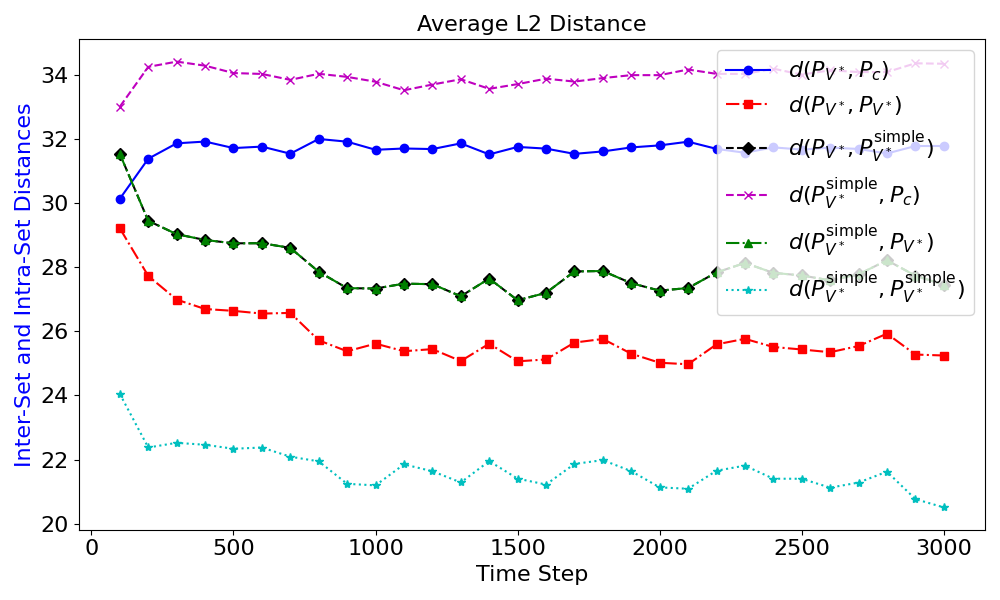

#1. Existence of SCP. SCP exists in the textual domain: in a prompt \(\lfloor p, V^* \rfloor\) that combines a context \(p\) with the learned token \(V^*\), the learned embedding \(V^*\) dominates and the semantic meaning of the entire prompt gradually collapses to that of \(V^*\) alone, i.e., \(\tau(\lfloor p, V^* \rfloor) \rightarrow \tau(V^*)\), where \(\tau\) is the text encoder.

#2. Negative Impact on Generation Quality. SCP degrades generation quality and causes misalignment in the image space, i.e., \(G(\lfloor p, V^* \rfloor) \rightarrow G(V^*)\), where \(G\) denotes the generator, particularly for prompts with complex semantic structures.

#3. Surprisingly Positive Impact. SCP can also have a positive impact on generation quality, particularly for prompts where the concept \(c\) requires a strong visual presence to be recognisable.

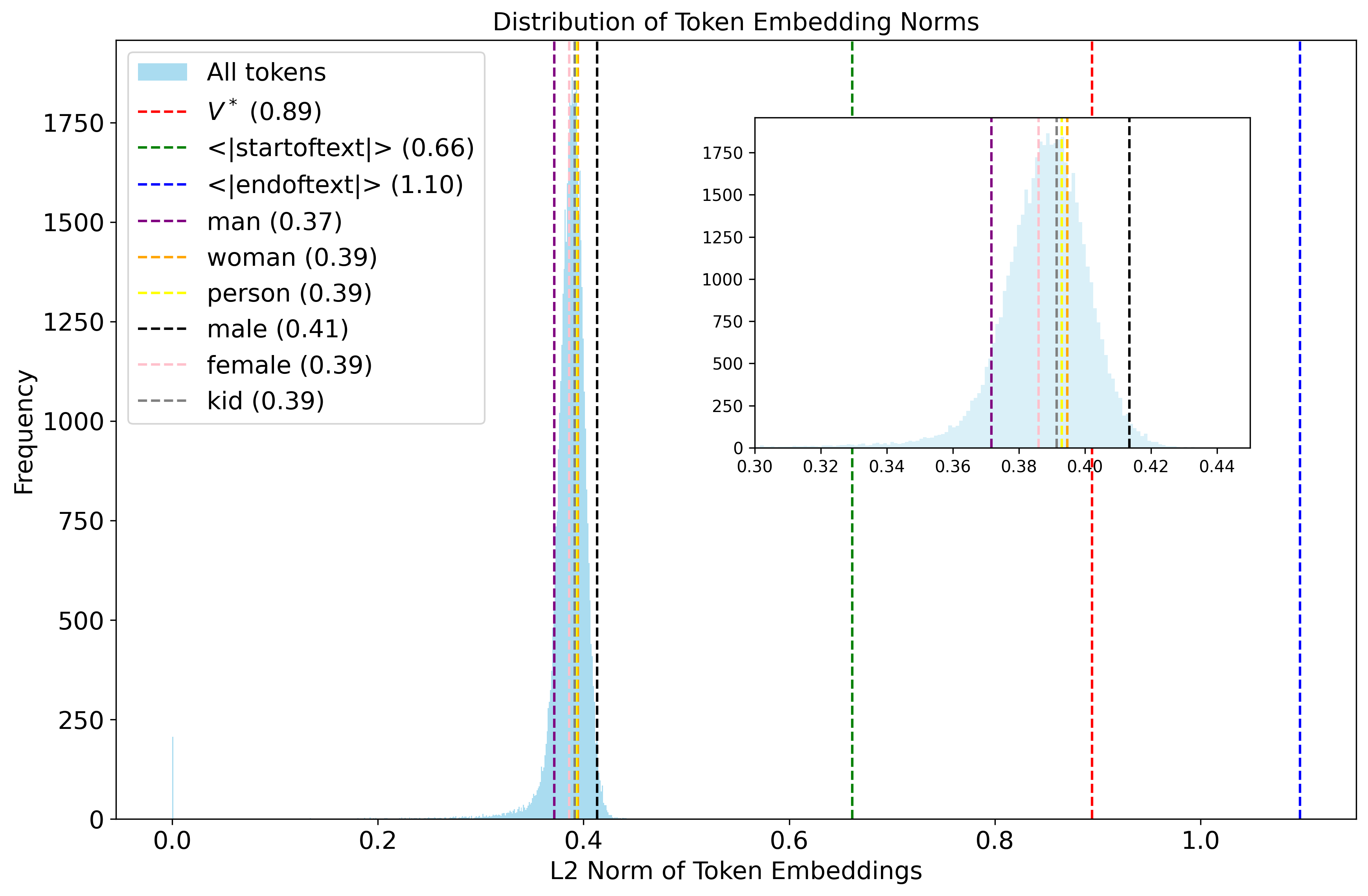

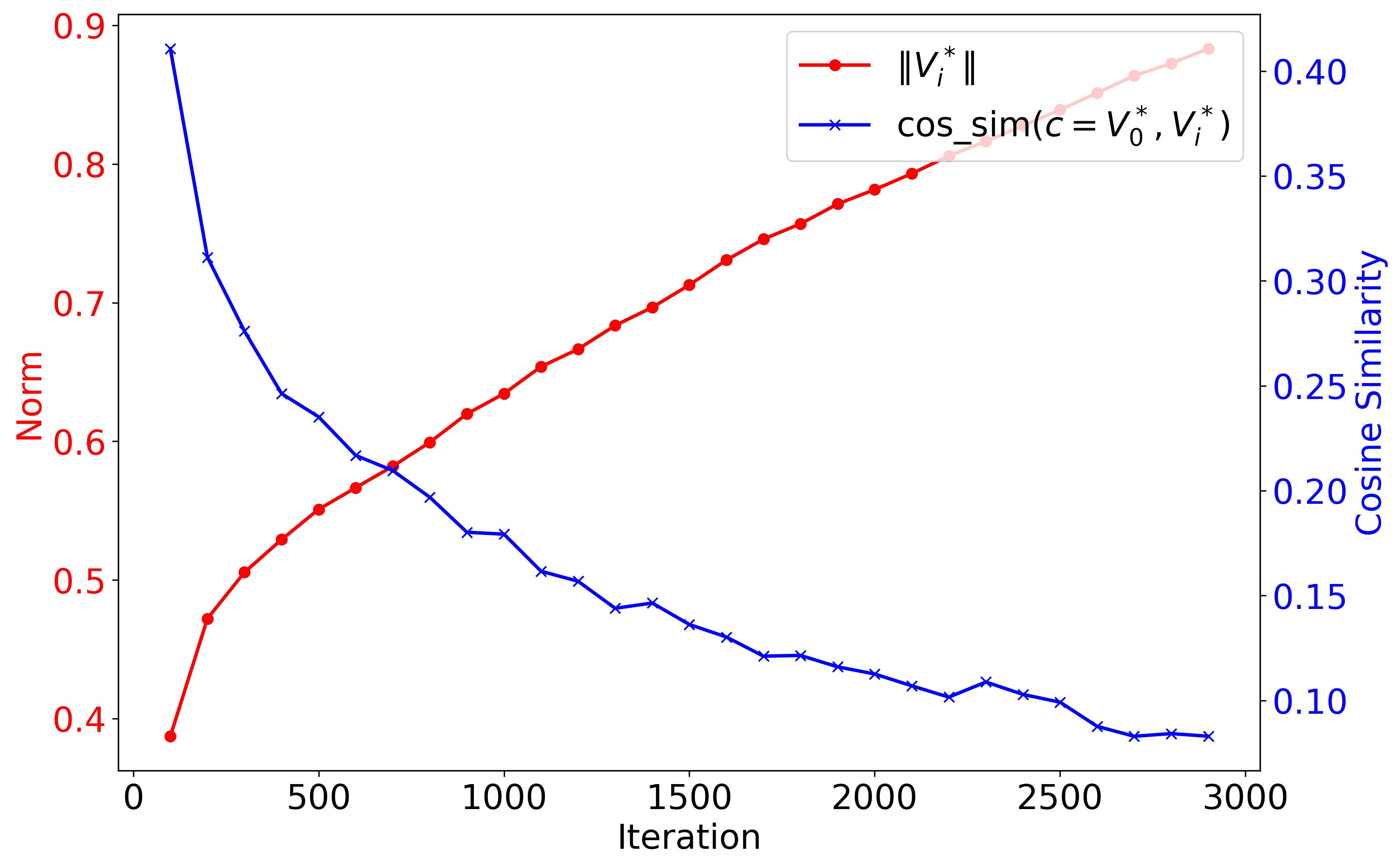

#4. Root Cause of SCP. SCP arises from unconstrained optimisation during personalization, which leads to arbitrary shifts (both in magnitude and direction) in the embedding of \(V^*\) away from its original semantic concept \(c\).
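Findings #1 and #4 both describe measurable quantities: how close the full prompt embedding \(\tau(\lfloor p, V^* \rfloor)\) gets to \(\tau(V^*)\), and how far \(M_{V^*}\) has moved from \(M_c\) in magnitude and direction. The sketch below shows one simple way to inspect them with plain vector statistics. It is an illustration under our own assumptions, not the measurement protocol used in the paper: it assumes the embeddings have already been extracted as NumPy arrays, and the helpers `cosine`, `collapse_score`, and `embedding_drift` are hypothetical names.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two embeddings (flattened to 1-D)."""
    a, b = np.ravel(a), np.ravel(b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def collapse_score(emb_p_vstar: np.ndarray, emb_vstar: np.ndarray,
                   emb_p_c: np.ndarray, emb_c: np.ndarray) -> float:
    """Hypothetical SCP indicator for finding #1.

    Compares how close tau([p, V*]) is to tau(V*) with how close the
    reference prompt tau([p, c]) is to tau(c). A positive value means the
    personalized prompt is pulled towards its bare token more strongly than
    the reference prompt is, i.e. the context p is being collapsed.
    """
    return cosine(emb_p_vstar, emb_vstar) - cosine(emb_p_c, emb_c)


def embedding_drift(m_vstar: np.ndarray, m_c: np.ndarray) -> tuple[float, float]:
    """Finding #4: magnitude ratio ||M_V*|| / ||M_c|| and angle (degrees) between them."""
    norm_ratio = np.linalg.norm(m_vstar) / np.linalg.norm(m_c)
    cos_theta = np.clip(cosine(m_vstar, m_c), -1.0, 1.0)
    return float(norm_ratio), float(np.degrees(np.arccos(cos_theta)))


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real high-dimensional embeddings.
    m_c = np.array([1.0, 0.0])
    m_vstar = np.array([0.0, 3.0])
    print(embedding_drift(m_vstar, m_c))
```

In this toy case the learned token is three times longer than the reference and orthogonal to it, which is the kind of unconstrained drift in both magnitude and direction that finding #4 describes.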

Test-Time Embedding Adjustment

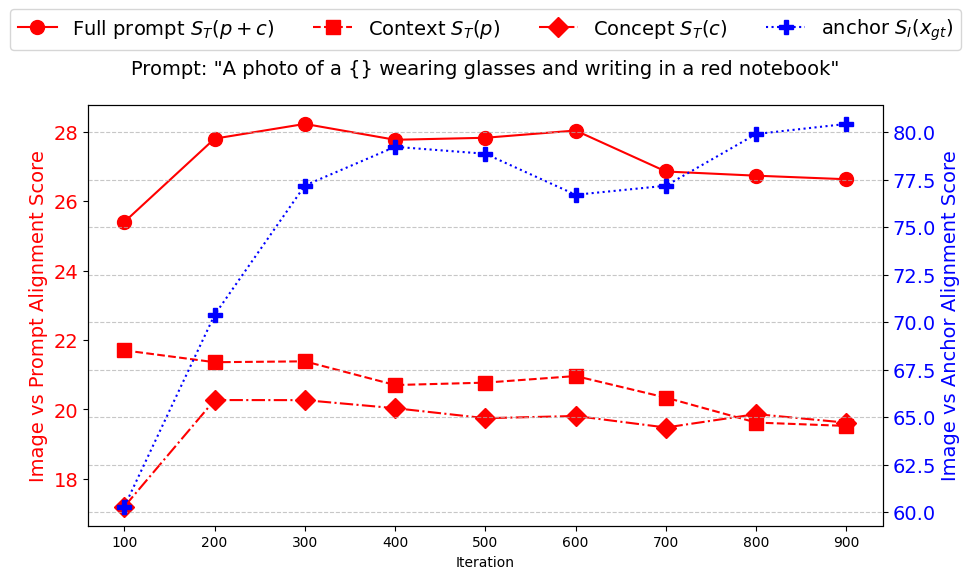

Given the root cause of SCP, a natural question arises: can we reverse this semantic shift at test time by adjusting \(V^*\), without modifying the personalization method? Surprisingly, the answer is yes, with a remarkably simple, general, and yet very effective approach.

Embedding Adjustment

Given a pre-trained embedding matrix \(M\) that includes a learned token \(V^*\) (as in Textual Inversion), and a target concept \(c\) toward which we wish to regularise, we propose to adjust \(M_{V^*}\) by aligning both its magnitude and direction with \(M_c\). This is achieved by first normalising the vectors and then applying Spherical Linear Interpolation (SLERP) to rotate the direction of \(M_{V^*}\) towards \(M_c\). SLERP is well suited to high-dimensional vector spaces because it moves along the arc between two vectors of equal norm, so the interpolated vector keeps that norm instead of shrinking as it would under straight-line (linear) interpolation.

\[\hat{M}_{V^*} = \frac{\sin((1-\alpha)\theta)}{\sin(\theta)} \tilde{M}_{V^*} + \frac{\sin(\alpha\theta)}{\sin(\theta)} \tilde{M}_c\]

Here, \(\theta\) is the angle between the normalised vectors \(\tilde{M}_c\) and \(\tilde{M}_{V^*}\), and \(\alpha \in [0, 1]\) is the rotation factor: the larger \(\alpha\) is, the further the embedding is rotated towards \(M_c\) (\(\alpha = 0\) keeps \(\tilde{M}_{V^*}\), while \(\alpha = 1\) gives \(\tilde{M}_c\)).

The normalised vectors are defined as:

- \[\tilde{M}_{V^*} = \beta \left\| M_c \right\| \frac{M_{V^*}}{\left\| M_{V^*} \right\|}\]

- \[\tilde{M}_c = \beta \left\| M_c \right\| \frac{M_c}{\left\| M_c \right\|}\]

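where \(\beta\) is a scaling factor that controls the magnitude of the adjusted embedding relative to the reference concept \(c\). Since both normalised vectors have the same norm \(\beta \left\| M_c \right\|\), the SLERP output keeps this norm for every \(\alpha\), so \(\beta\) alone sets the final magnitude while \(\alpha\) sets the direction.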
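The token-level adjustment can be sketched in a few lines of NumPy. This is a minimal illustration written for this post, not the released implementation: the function name `tea_token`, the default \(\beta = 1\), and the fallback to linear interpolation when \(\sin\theta \approx 0\) (where the SLERP weights are undefined) are our own assumptions.

```python
import numpy as np


def tea_token(m_vstar: np.ndarray, m_c: np.ndarray, alpha: float,
              beta: float = 1.0, eps: float = 1e-7) -> np.ndarray:
    """Rotate the learned token embedding M_{V*} towards M_c with SLERP.

    alpha in [0, 1]: 0 keeps the direction of M_{V*}, 1 returns M_c's direction.
    beta > 0: the output magnitude is beta * ||M_c||.
    """
    target_norm = beta * np.linalg.norm(m_c)
    # Normalised vectors: original directions, common norm beta * ||M_c||
    v = target_norm * m_vstar / np.linalg.norm(m_vstar)
    c = target_norm * m_c / np.linalg.norm(m_c)
    # theta: angle between the two normalised vectors
    cos_theta = np.clip(np.dot(v, c) / target_norm**2, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    sin_theta = np.sin(theta)
    if sin_theta < eps:
        # Degenerate case (parallel or anti-parallel): the SLERP weights are
        # undefined, so fall back to linear interpolation
        return (1.0 - alpha) * v + alpha * c
    return (np.sin((1.0 - alpha) * theta) / sin_theta) * v \
        + (np.sin(alpha * theta) / sin_theta) * c
```

For example, with \(M_c = (2, 0)\), \(M_{V^*} = (0, 5)\), \(\beta = 1\) and \(\alpha = 0.5\), the sketch returns \((\sqrt{2}, \sqrt{2})\), which has the same norm 2 as \(\beta \left\| M_c \right\|\). In a Textual Inversion setup, the adjusted vector would then replace the row of \(M\) that corresponds to \(V^*\) before the prompt is encoded.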

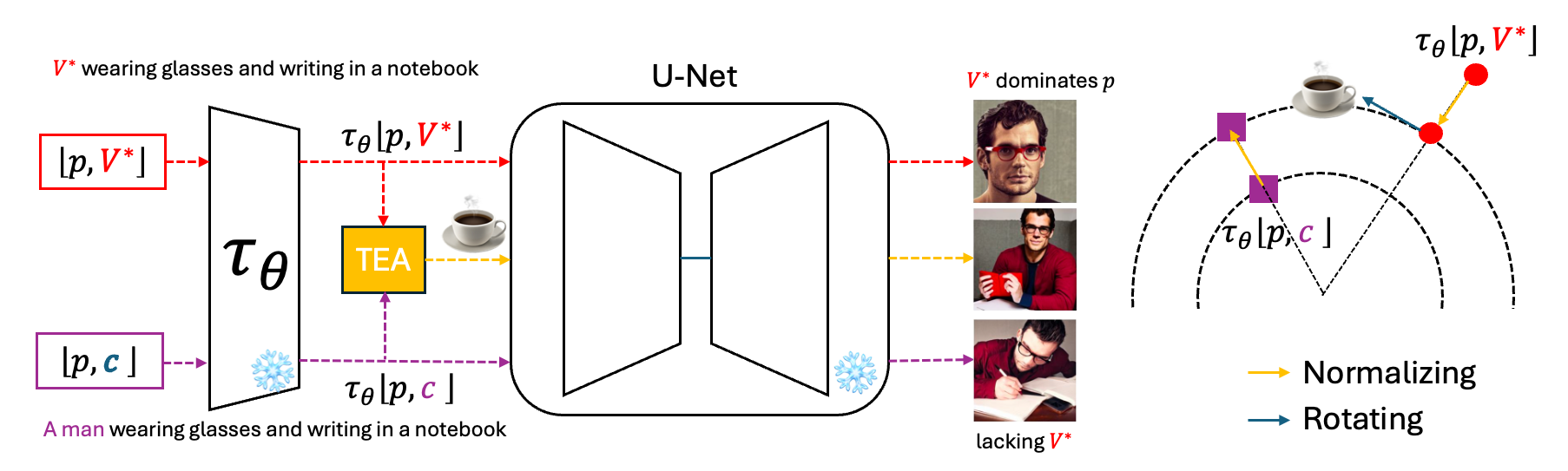

In DreamBooth-based personalization, the embedding matrix \(M\) is not updated during optimisation, so we propose to adjust at the prompt level instead of the token level, as illustrated in the figure above. More specifically, given a prompt \(\lfloor p, V^* \rfloor\) and a target prompt \(\lfloor p, c \rfloor\), we obtain the two embeddings \(\tau(\lfloor p, V^* \rfloor)\) and \(\tau(\lfloor p, c \rfloor)\) from the text encoder \(\tau_{\phi}\) and then adjust the embedding of \(\lfloor p, V^* \rfloor\) by applying the above equation to every token in the prompt.

\[\hat{\tau}(\lfloor p, V^* \rfloor)[i] = \frac{\sin((1-\alpha)\theta_i)}{\sin(\theta_i)} \tilde{\tau}(\lfloor p, V^* \rfloor)[i] + \frac{\sin(\alpha\theta_i)}{\sin(\theta_i)} \tilde{\tau}(\lfloor p, c \rfloor)[i]\]

where \(i\) indexes each token in the prompt, and \(\theta_i\) is the angle between the \(i\)-th token embeddings of the two prompts after normalisation.
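The prompt-level variant applies the same SLERP rule independently at every token position. The vectorised sketch below is a minimal illustration under stated assumptions, not the released code: it assumes both prompts are tokenised and padded to the same length, so \(\tau(\lfloor p, V^* \rfloor)\) and \(\tau(\lfloor p, c \rfloor)\) are arrays of shape `(seq_len, dim)`, and it assumes each token is rescaled to \(\beta\) times the norm of the matching token of \(\tau(\lfloor p, c \rfloor)\), mirroring the token-level normalisation. The name `tea_prompt` is hypothetical.

```python
import numpy as np


def tea_prompt(emb_vstar: np.ndarray, emb_c: np.ndarray, alpha: float,
               beta: float = 1.0, eps: float = 1e-7) -> np.ndarray:
    """Per-token SLERP from tau([p, V*]) towards tau([p, c]).

    Both inputs have shape (seq_len, dim); the output has the same shape.
    """
    if emb_vstar.shape != emb_c.shape:
        raise ValueError("prompt embeddings must share the same (seq_len, dim) shape")
    # Assumed per-token target magnitude: beta * ||tau([p, c])[i]||
    target = beta * np.linalg.norm(emb_c, axis=-1, keepdims=True)
    v = target * emb_vstar / np.linalg.norm(emb_vstar, axis=-1, keepdims=True)
    c = target * emb_c / np.linalg.norm(emb_c, axis=-1, keepdims=True)
    # theta_i: angle between the i-th normalised token embeddings
    cos_theta = np.clip(np.sum(v * c, axis=-1, keepdims=True) / target**2, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    sin_theta = np.sin(theta)
    ok = sin_theta > eps
    denom = np.where(ok, sin_theta, 1.0)  # avoid dividing by ~0 on degenerate tokens
    # SLERP weights where defined, plain linear weights where theta_i is ~0 or ~pi
    w_v = np.where(ok, np.sin((1.0 - alpha) * theta) / denom, 1.0 - alpha)
    w_c = np.where(ok, np.sin(alpha * theta) / denom, alpha)
    return w_v * v + w_c * c


if __name__ == "__main__":
    # Token 0 differs between the two prompts; token 1 is identical in both.
    e_vstar = np.array([[0.0, 5.0], [1.0, 0.0]])
    e_c = np.array([[2.0, 0.0], [1.0, 0.0]])
    print(tea_prompt(e_vstar, e_c, alpha=0.5))
```

The degenerate branch matters in practice: with a causal text encoder such as the CLIP text encoder used by Stable Diffusion, positions before \(V^*\) see identical inputs in both prompts, so their outputs coincide (\(\theta_i = 0\)) and the adjustment leaves them unchanged apart from the \(\beta\) rescaling.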

This enables test-time correction of semantic drift without retraining, making TEA a lightweight and broadly applicable way to mitigate SCP.

Surprising Impact of TEA on Anti-DreamBooth

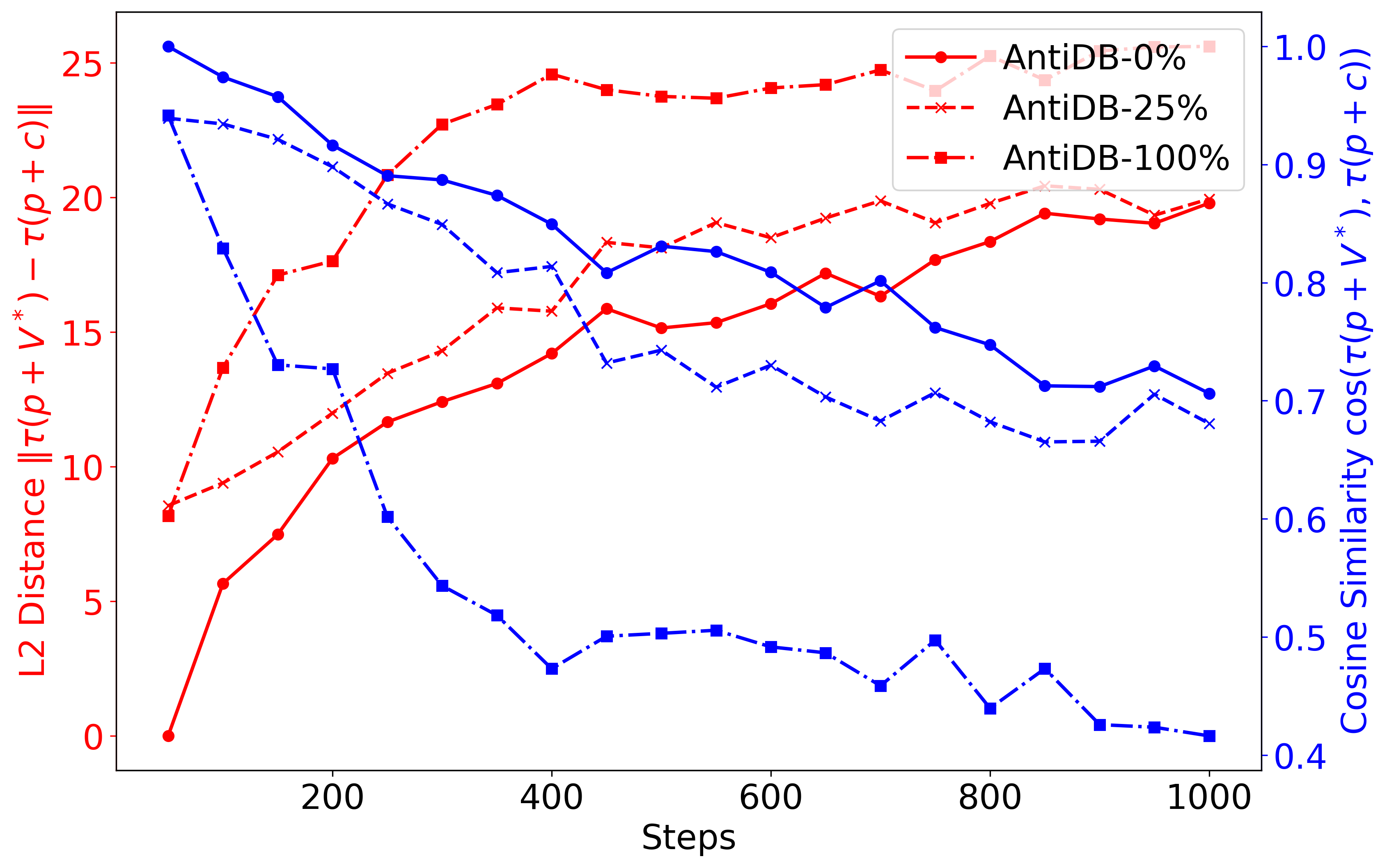

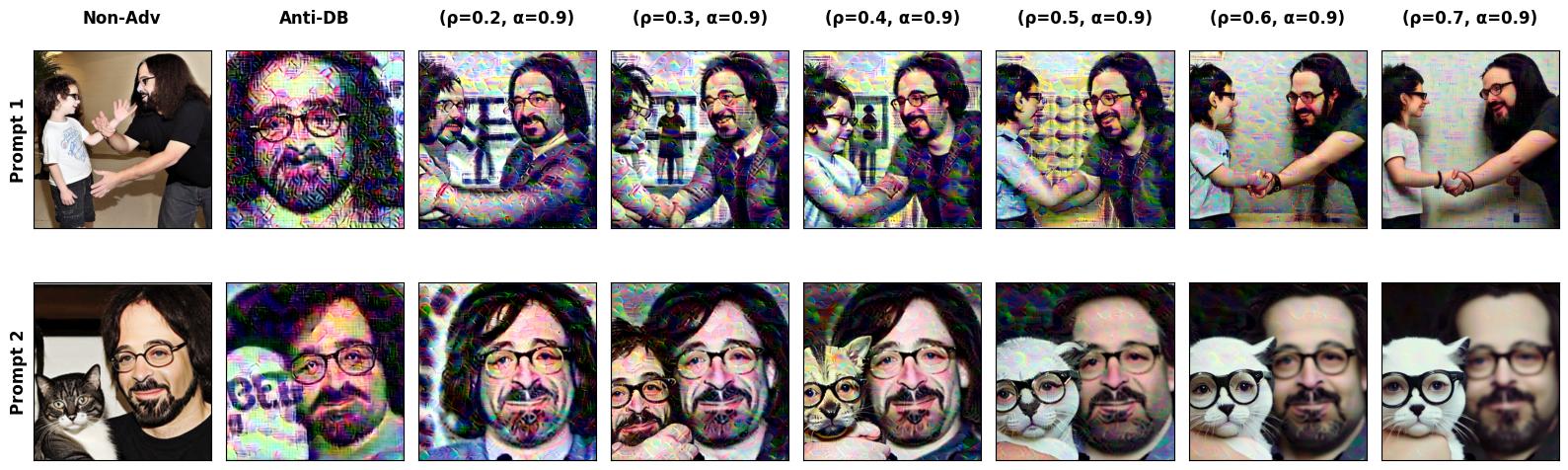

We hypothesize that the adversarial learning process of Anti-DreamBooth actually amplifies the dominance of the personalized concept \(V^*\), in a way that benefits user privacy: it causes the prompt embedding \(\tau(\lfloor p, V^* \rfloor)\) to drift even further from its original concept \(\tau(\lfloor p, c \rfloor)\), resulting in distorted generations of the protected concept \(V^*\).

Surprisingly, when we apply TEA to DreamBooth models poisoned by Anti-DreamBooth, we observe a mitigation effect such that

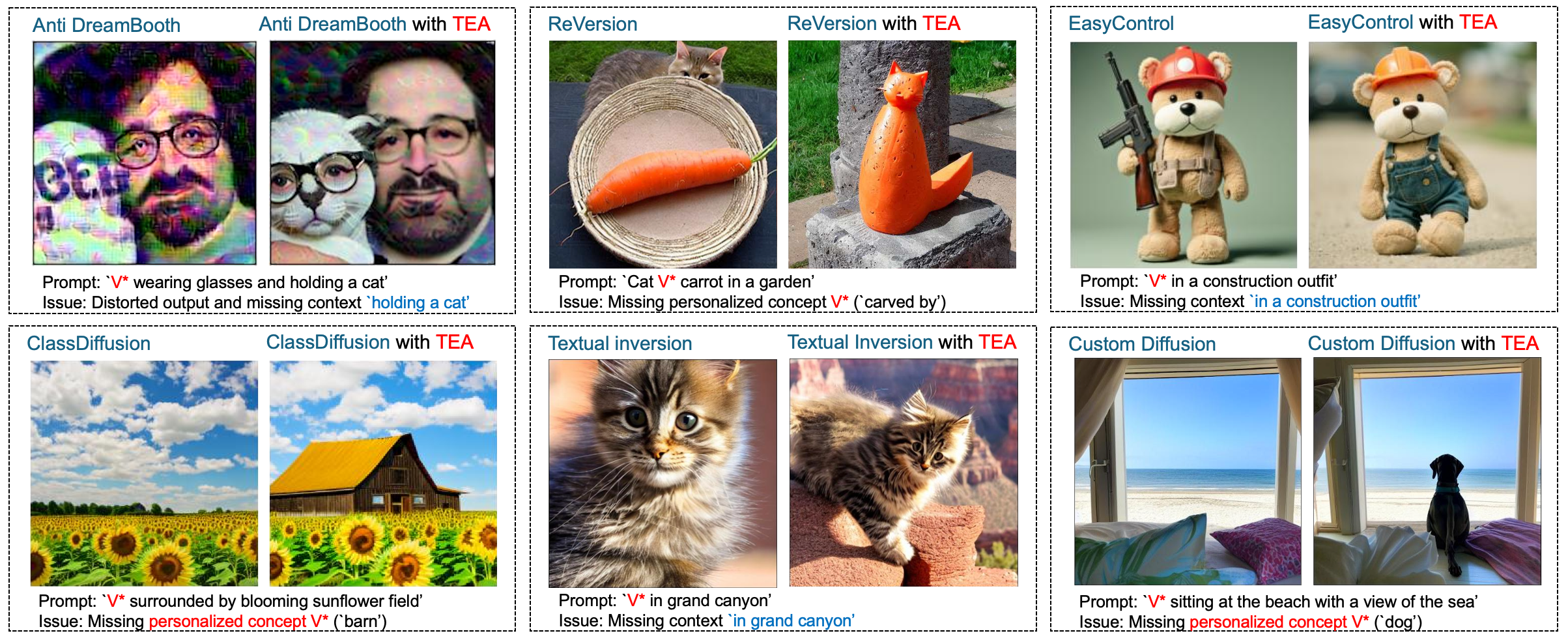

the generated images by TEA are less distorted and more aligned with the to-be-protected concept \(V^*\) as shown in Figure below.

This surprising result reveals an intriguing false sense of security of Anti-DreamBooth, such that despite adversarial masking,

the poisoned personalized model still retains traces of the correct/to-be-protected concept \(V^*\),

which can be recovered partially with our TEA. To the best of our knowledge, this is the first work to uncover such a counter-intuitive vulnerability of Anti-DreamBooth.

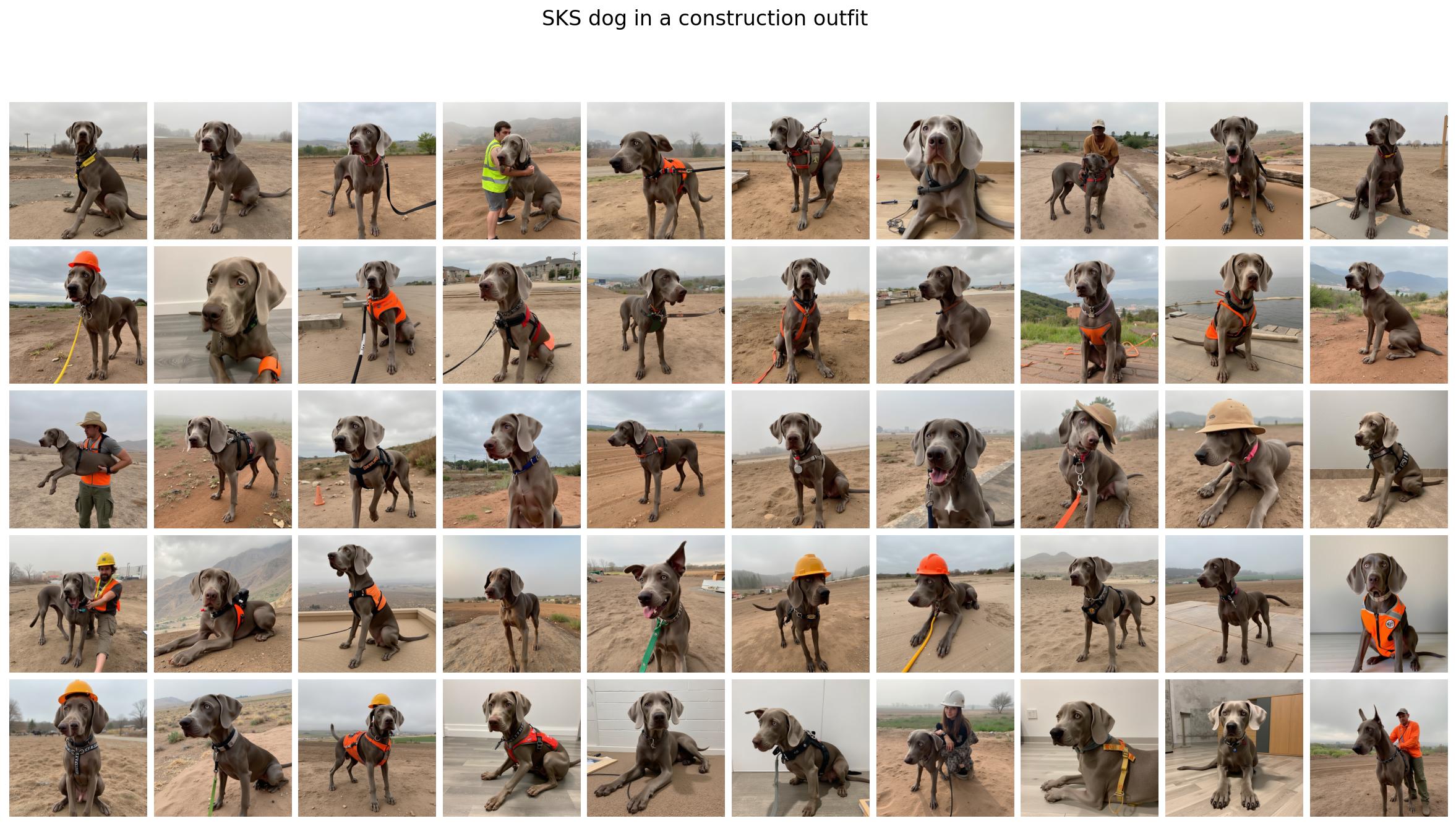

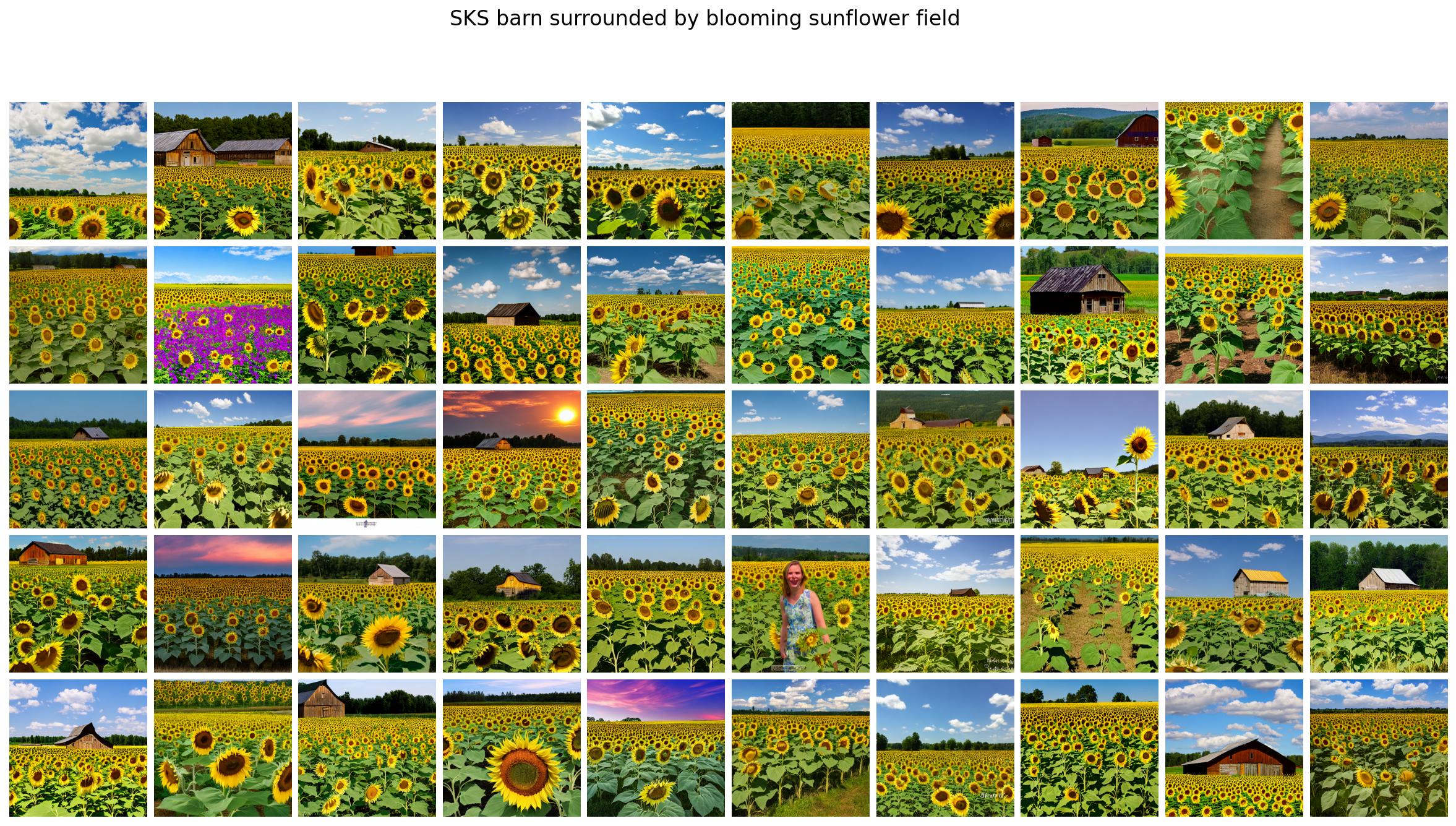

Experiments

We demonstrate the effectiveness of TEA in addressing SCP across six recent, representative personalization methods, two architectures (Stable Diffusion and Flux), and three datasets (CS101, CelebA, and Relationship) comprising 22 concepts in total. Please refer to the paper for more details. I am really proud of the results and of the simplicity, generality, and effectiveness of TEA :D.

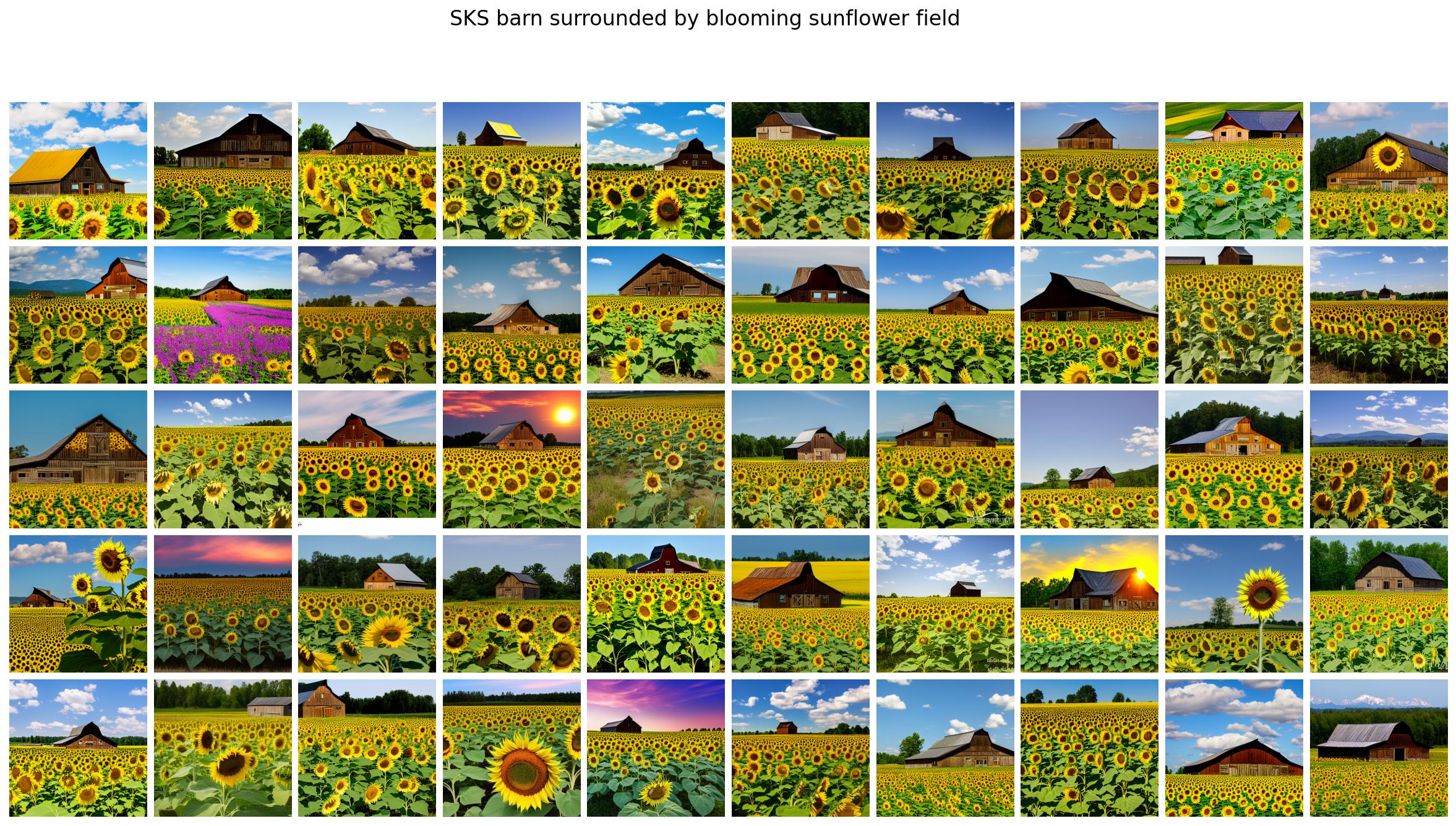

Some qualitative results of applying TEA to SOTA personalization methods are shown below.

Citation

If you find this work useful in your research, please consider citing our paper:

@article{bui2025mitigating,
  title={Mitigating Semantic Collapse in Generative Personalization with a Surprisingly Simple Test-Time Embedding Adjustment},
  author={Bui, Anh and Vu, Trang and Le, Trung and Kim, Junae and Abraham, Tamas and Omari, Rollin and Kaur, Amar and Phung, Dinh},
  journal={arXiv preprint arXiv:2506.22685},
  year={2025}
}

References

[1] Ruiz, Nataniel, et al. “DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.

[2] Motamed, Saman, Danda Pani Paudel, and Luc Van Gool. “Lego: Learning to Disentangle and Invert Personalized Concepts Beyond Object Appearance in Text-to-Image Diffusion Models.” arXiv preprint arXiv:2311.13833 (2023).

[3] Huang, Jiannan, et al. “ClassDiffusion: More Aligned Personalization Tuning with Explicit Class Guidance.” arXiv preprint arXiv:2405.17532 (2024).

[4] Han, Ligong, et al. “SVDiff: Compact Parameter Space for Diffusion Fine-Tuning.” Proceedings of the IEEE/CVF International Conference on Computer Vision. 2023.

[5] Qiu, Zeju, et al. “Controlling Text-to-Image Diffusion by Orthogonal Finetuning.” Advances in Neural Information Processing Systems 36 (2023): 79320-79362.

[6] Avrahami, Omri, et al. “Break-A-Scene: Extracting Multiple Concepts from a Single Image.” SIGGRAPH Asia 2023 Conference Papers. 2023.

[7] Jin, Chen, et al. “An Image Is Worth Multiple Words: Discovering Object Level Concepts Using Multi-Concept Prompt Learning.” Forty-first International Conference on Machine Learning. 2024.