DreamStyler - Paint by Style Inversion with Text-to-Image Diffusion Models (AAAI-2024)

Overview

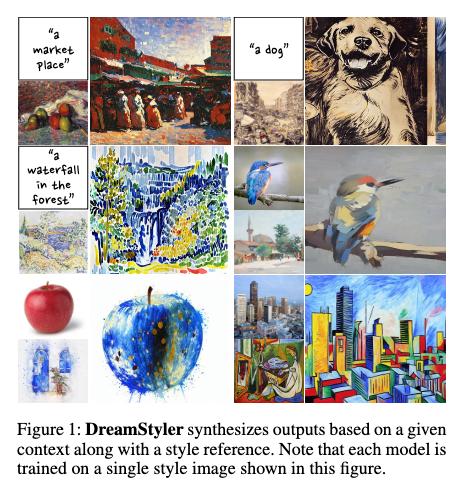

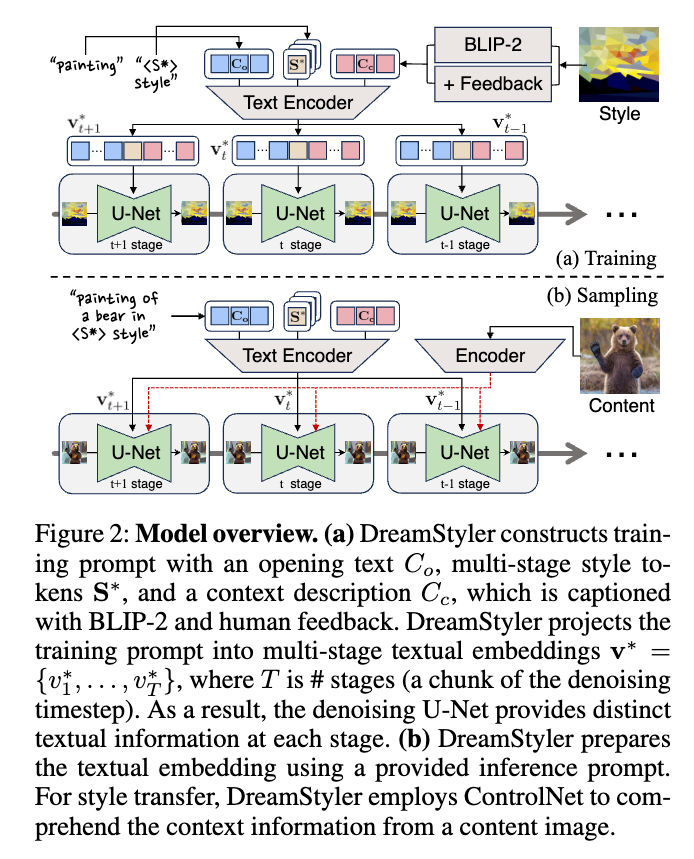

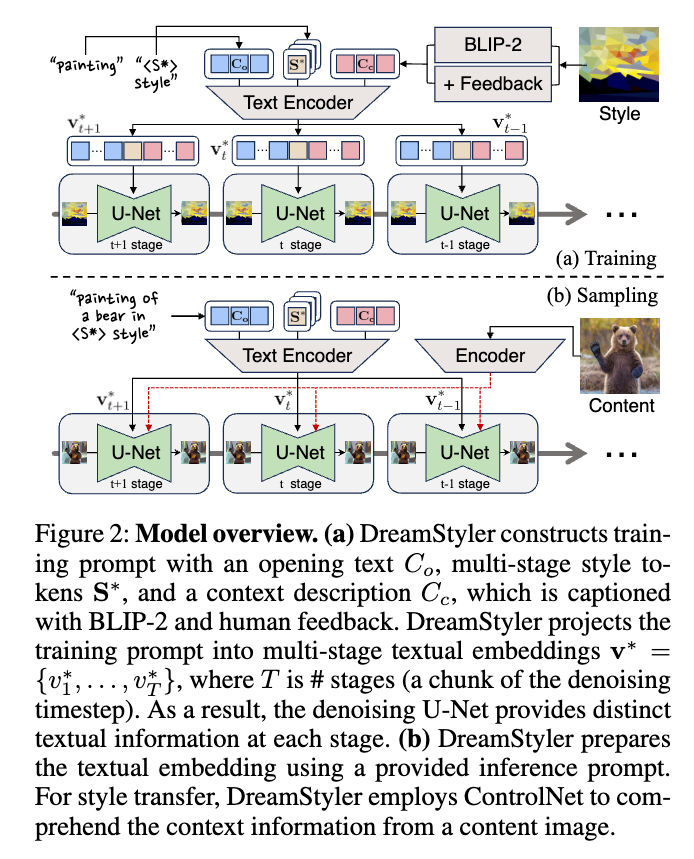

DreamStyler introduces a novel approach to style transfer by leveraging a multi-stage textual embedding combined with a context-aware text prompt. The method aims to enhance the generation of images in a specific artistic style using text-to-image diffusion models.

Key Contributions

Problem Setting

Given a set of style images with an implicit personal style (e.g., Van Gogh’s style), the goal is to fine-tune a foundation model to mimic the style \(S^*\) so that it can generate images in that style from a text prompt (e.g., “A painting of a bear in \(S^*\) style”). This is traditionally done with personalization methods such as DreamBooth and Textual Inversion.

Limitations of Existing Methods

Current methods face several challenges:

-

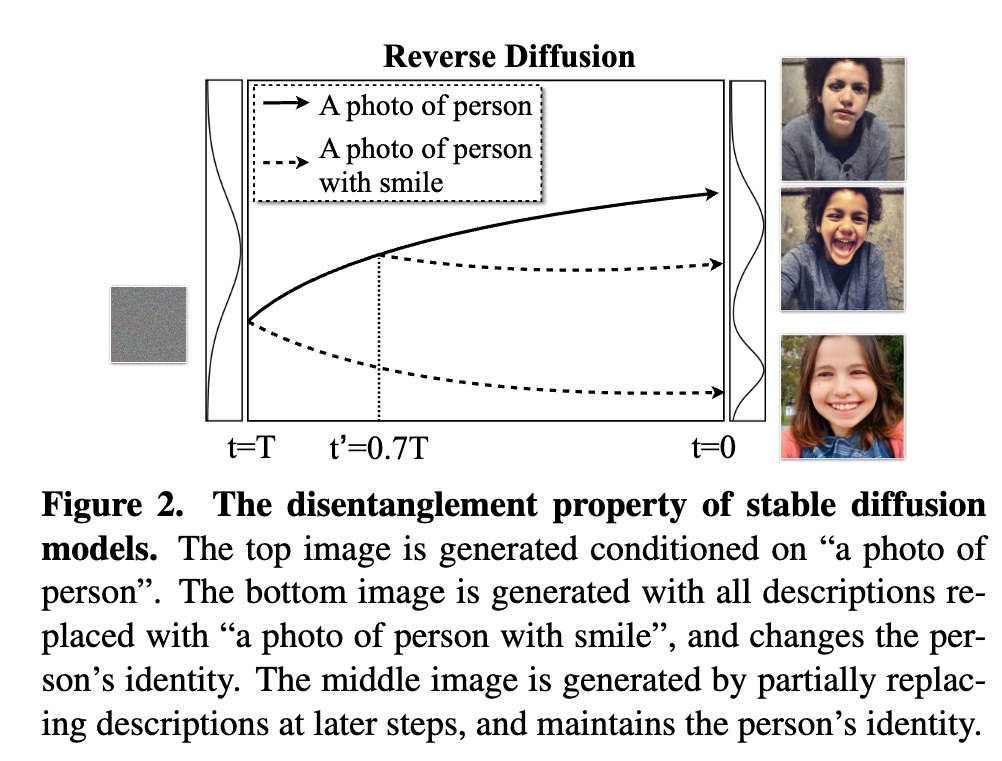

Dynamic Style Representation: Diffusion models require different capacities at various denoising steps, making it difficult for a single embedding vector to capture an entire style.

-

Local to Global Features: The denoising process moves from coarse to fine synthesis, meaning both global artistic elements (color tone) and fine-grained details (texture) need to be represented effectively.

-

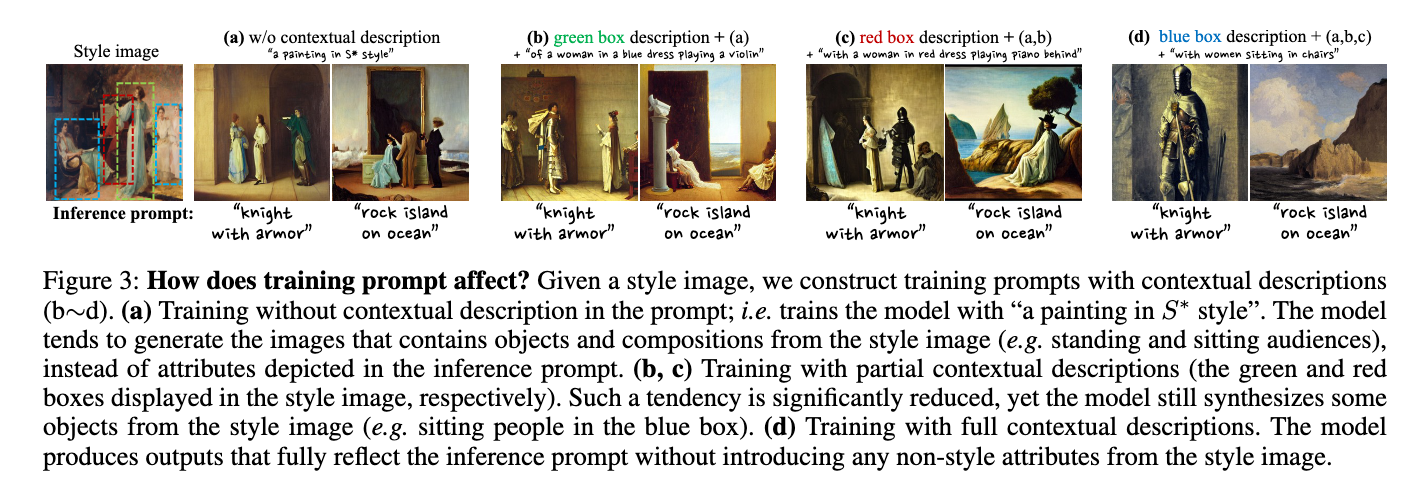

Style-Content Separation: Without a structured way to distinguish style from content, generated images may unintentionally incorporate unwanted elements from reference images.

Proposed Solution

Context-Aware Text Prompt

A style is often intertwined with content in a reference painting, making it difficult to extract only the stylistic elements. To address this, DreamStyler utilizes BLIP-2 and Human-in-the-loop methods to create a context-aware text prompt that explicitly describes non-style components (e.g., objects, composition, background).

Benefits

- Improved Style-Content Disentanglement: By explicitly describing the non-style elements of the reference image, the model can better focus on learning the stylistic features, leading to outputs that are more faithful to the user’s intent.

- Reduced Unwanted Elements: The inclusion of context descriptions helps to prevent the model from incorporating irrelevant objects, compositions, or backgrounds from the reference image into the generated images.

Implementation

The context-aware text prompt is manually assigned as an input argument:

context_prompt = "A painting of pencil, pears and apples on a cloth, in the style of {}"

self.prompt = self.template if context_prompt is None else context_prompt
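To make the fallback logic above concrete, here is a minimal, self-contained sketch. The class name `StylePromptBuilder`, the default template string, and the `<sks>` placeholder token are assumptions made for illustration; they are not taken from the DreamStyler code base.

```python
from typing import Optional

# Assumed default template, used when no context-aware prompt is supplied.
DEFAULT_TEMPLATE = "A painting in the style of {}"


class StylePromptBuilder:
    """Hypothetical helper that picks the training prompt for one style image."""

    def __init__(self, placeholder_token: str, context_prompt: Optional[str] = None):
        self.template = DEFAULT_TEMPLATE
        # Same fallback as in the snippet above: prefer the context-aware prompt.
        self.prompt = self.template if context_prompt is None else context_prompt
        self.placeholder_token = placeholder_token

    def build(self) -> str:
        # Fill the "{}" slot with the learnable style token.
        return self.prompt.format(self.placeholder_token)


builder = StylePromptBuilder(
    "<sks>",
    context_prompt="A painting of pencil, pears and apples on a cloth, in the style of {}",
)
print(builder.build())
```

Because the context prompt already names the objects in the reference painting, the only part of the prompt left to explain the image is the style token.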

Multi-Stage Textual Embedding

Motivation

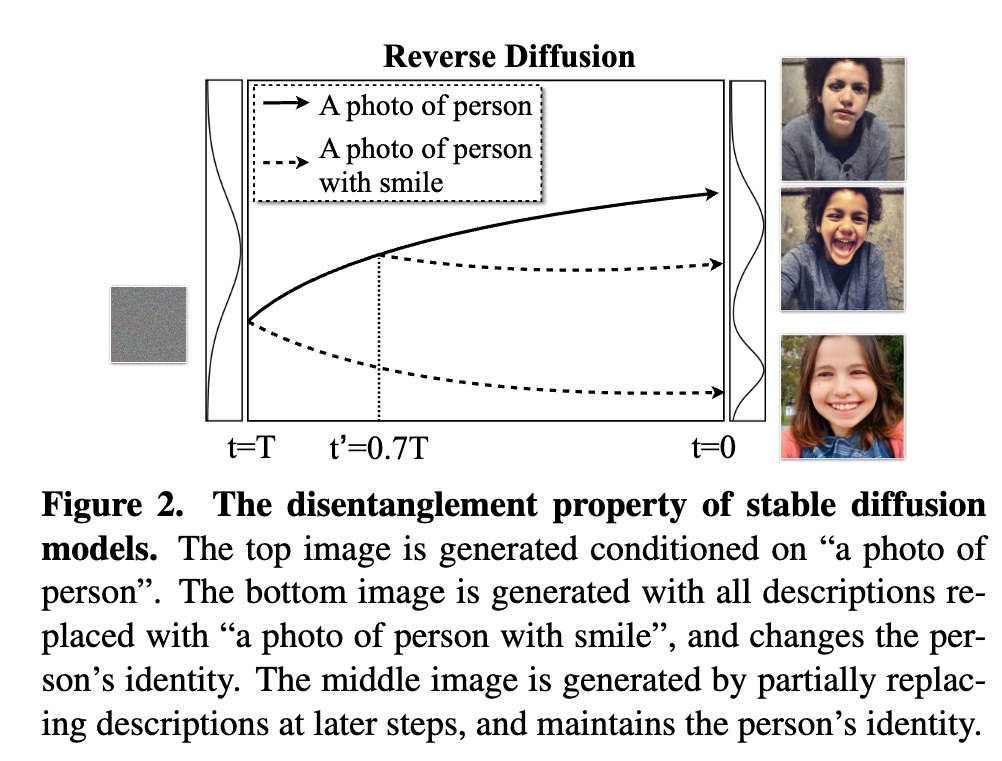

Traditional Textual Inversion (TI) relies on a single embedding vector, which may not effectively represent complex artistic styles across the entire diffusion process. Prior work shows that the diffusion process is dynamic and requires different representational capacities at different steps [2]. Sharing one embedding vector across all diffusion steps is therefore not ideal, especially for artistic styles that involve both global elements (like color tone) and fine-grained details (like texture).

Proposed Approach

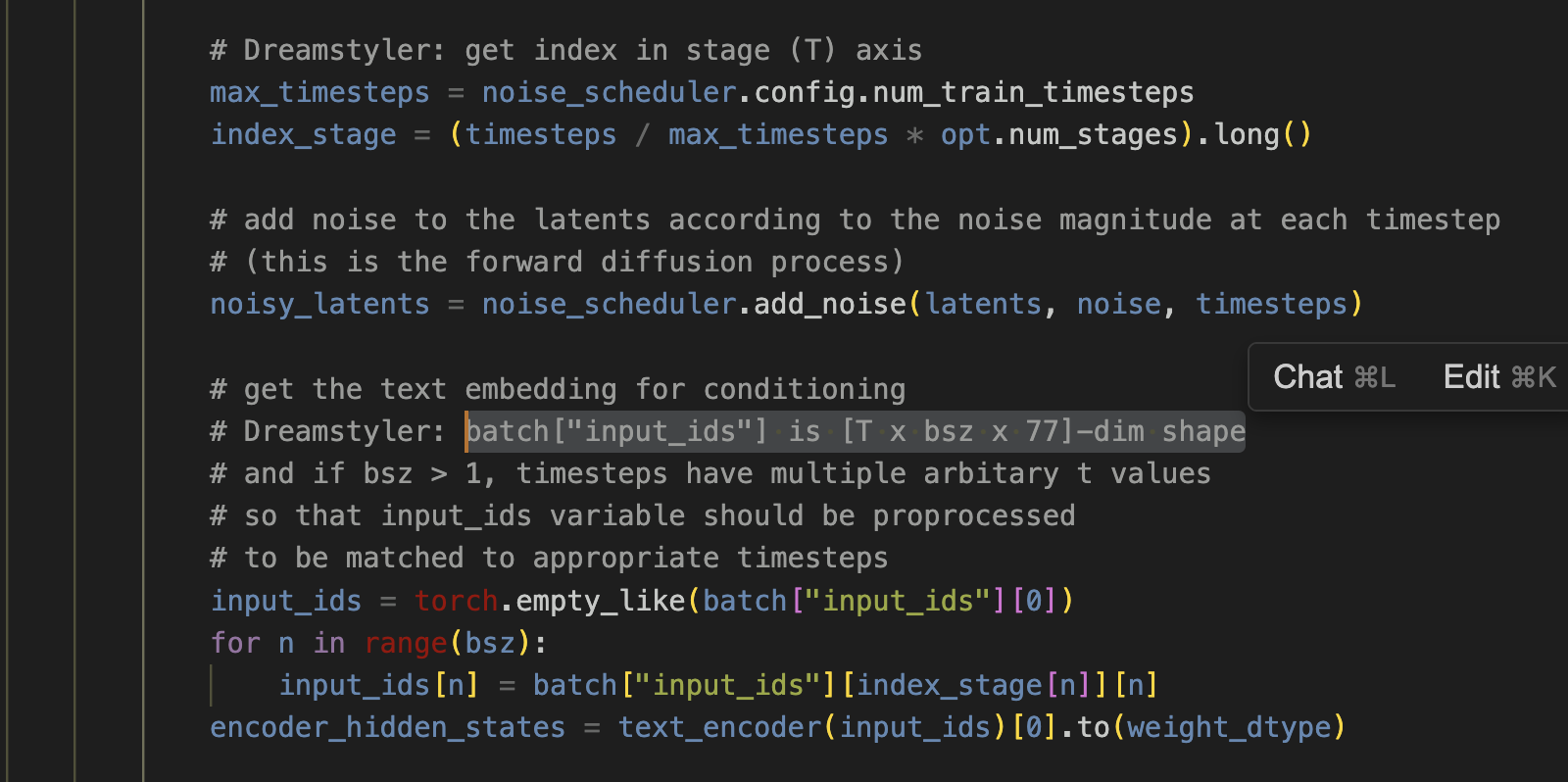

DreamStyler introduces a multi-stage textual embedding by utilizing multiple embedding vectors/tokens, each corresponding to a specific stage of the diffusion process. More specifically, the entire diffusion process is broken down into \(T\) distinct stages, and a set of \(T\) style tokens \(S_1, S_2, \cdots, S_T\) are used to represent the style at each stage.

Benefits

- Enhanced Expressiveness: Captures both global elements (e.g., color tone) and fine details (e.g., brushstrokes, textures).

- Better Adaptability: Adjusts to the changing nature of style representation during the denoising process.

Implementation of Multi-Stage Textual Embedding
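The original implementation is not reproduced here. As a rough sketch of the idea, the code below maps a diffusion timestep to one of \(T\) stages and substitutes the matching style token into the prompt. The function names, the token naming scheme `<style-i>`, the equal-width split of timesteps, and the defaults of 1000 timesteps and 6 stages are all assumptions for illustration. Stage indices are zero-based here, whereas the text above numbers the tokens \(S_1, \cdots, S_T\).

```python
def stage_index(timestep: int, num_train_timesteps: int = 1000, num_stages: int = 6) -> int:
    """Map a timestep in [0, num_train_timesteps) to a stage in [0, num_stages)."""
    if not 0 <= timestep < num_train_timesteps:
        raise ValueError("timestep out of range")
    # Equal-width buckets: each stage covers num_train_timesteps / num_stages steps.
    return timestep * num_stages // num_train_timesteps


def stage_prompt(prompt_template: str, timestep: int, num_stages: int = 6) -> str:
    """Insert the style token of the stage that the timestep falls into."""
    stage = stage_index(timestep, num_stages=num_stages)
    # Hypothetical token naming; each token would have its own learnable embedding.
    return prompt_template.format(f"<style-{stage}>")


template = "A painting of a bear in the style of {}"
for t in (999, 500, 0):
    print(t, stage_prompt(template, t))
```

During training, only the embedding of the token selected for the sampled timestep would receive gradients for that step, so each token specializes in its own part of the denoising trajectory.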

Style and Context Guidance with Classifier-Free Guidance

Classifier-Free Guidance

Classifier-Free Guidance is a widely used technique for improving the quality of images generated by diffusion models. It is simple yet effective: it strengthens alignment with the prompt, typically at the cost of some sample diversity.

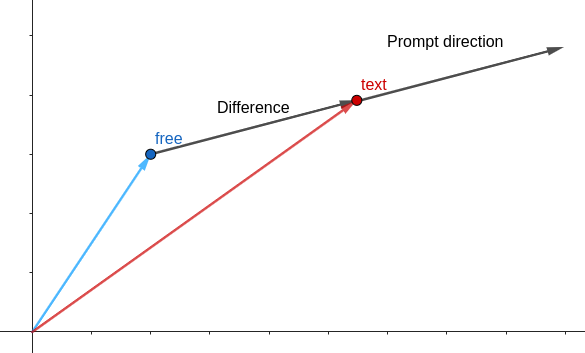

\[\hat{\epsilon}(v) = \epsilon(\emptyset) + \lambda(\epsilon(v) - \epsilon(\emptyset))\]

where:

- \(\epsilon\) is the denoising function

- \(\lambda\) is the coefficient for guidance

- \(\emptyset\) is the null prompt

- \(v\) is the text prompt
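The guidance formula can be sketched in a few lines. This is an illustration only: noise predictions are represented as plain lists of floats rather than tensors, and the function name is an assumption.

```python
from typing import List


def classifier_free_guidance(
    eps_uncond: List[float], eps_cond: List[float], scale: float
) -> List[float]:
    """Return eps(null) + scale * (eps(v) - eps(null)), element-wise."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


eps_null = [0.0, 1.0]  # prediction for the null prompt
eps_text = [1.0, 3.0]  # prediction for the text prompt v
print(classifier_free_guidance(eps_null, eps_text, 2.0))
```

With a scale of 0 the result is the unconditional prediction, with 1 it is the conditional prediction, and larger values extrapolate further in the direction of the prompt.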

Style and Context Guidance

DreamStyler introduces a style and context guidance mechanism by incorporating separate style and context prompts into Classifier-Free Guidance:

\[\hat{\epsilon}(v) = \epsilon(\emptyset) + \lambda_{s}\left[ \epsilon(v) - \epsilon(v_c) \right] + \lambda_{c}\left[ \epsilon(v_c) - \epsilon(\emptyset) \right] + \lambda_{c}\left[ \epsilon(v) - \epsilon(v_s) \right] + \lambda_{s}\left[ \epsilon(v_s) - \epsilon(\emptyset) \right]\]

where:

- \(v_c\) is the context prompt and \(v_s\) is the style prompt that are decomposed from the text prompt \(v\) as \(v = v_c + v_s\).

- \(\lambda_c\) is the coefficient for context guidance. Increasing \(\lambda_c\) encourages the model to generate images that are more faithful to the context prompt.

- \(\lambda_s\) is the coefficient for style guidance. Increasing \(\lambda_s\) encourages the model to generate images that are more aligned with the style prompt.
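The style and context guidance formula can be written out term by term as below. As an illustrative sketch, noise predictions are plain lists of floats, and the function and argument names are assumptions rather than identifiers from the DreamStyler code.

```python
from typing import List


def style_context_guidance(
    eps_null: List[float],     # eps(null prompt)
    eps_full: List[float],     # eps(v), full prompt
    eps_context: List[float],  # eps(v_c), context prompt only
    eps_style: List[float],    # eps(v_s), style prompt only
    lambda_s: float,
    lambda_c: float,
) -> List[float]:
    """Combine four noise predictions using the formula above, element-wise."""
    return [
        n
        + lambda_s * (f - c)  # style direction, measured from the context prompt
        + lambda_c * (c - n)  # context direction, measured from the null prompt
        + lambda_c * (f - s)  # context direction, measured from the style prompt
        + lambda_s * (s - n)  # style direction, measured from the null prompt
        for n, f, c, s in zip(eps_null, eps_full, eps_context, eps_style)
    ]


print(style_context_guidance([0.0], [4.0], [1.0], [2.0], lambda_s=2.0, lambda_c=0.5))
```

When \(\lambda_s = \lambda_c = \lambda\), the \(\epsilon(v_c)\) and \(\epsilon(v_s)\) terms cancel and the expression, as written, collapses to \(\epsilon(\emptyset) + 2\lambda(\epsilon(v) - \epsilon(\emptyset))\), so the two coefficients only change the result when they differ.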

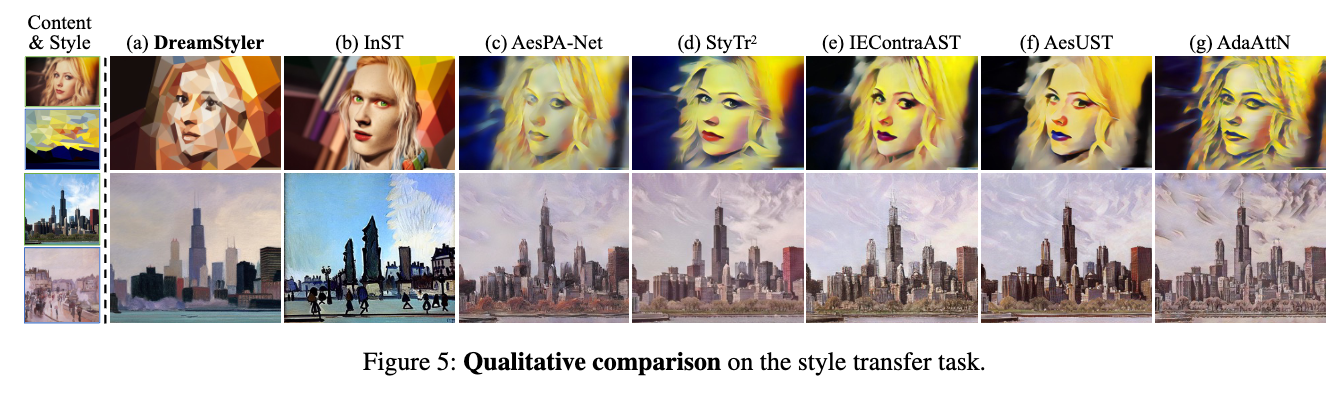

Utilizing ControlNet for Structure Preservation

DreamStyler also uses ControlNet to preserve the structure of the input content image in the image-to-image style transfer setting.

Results

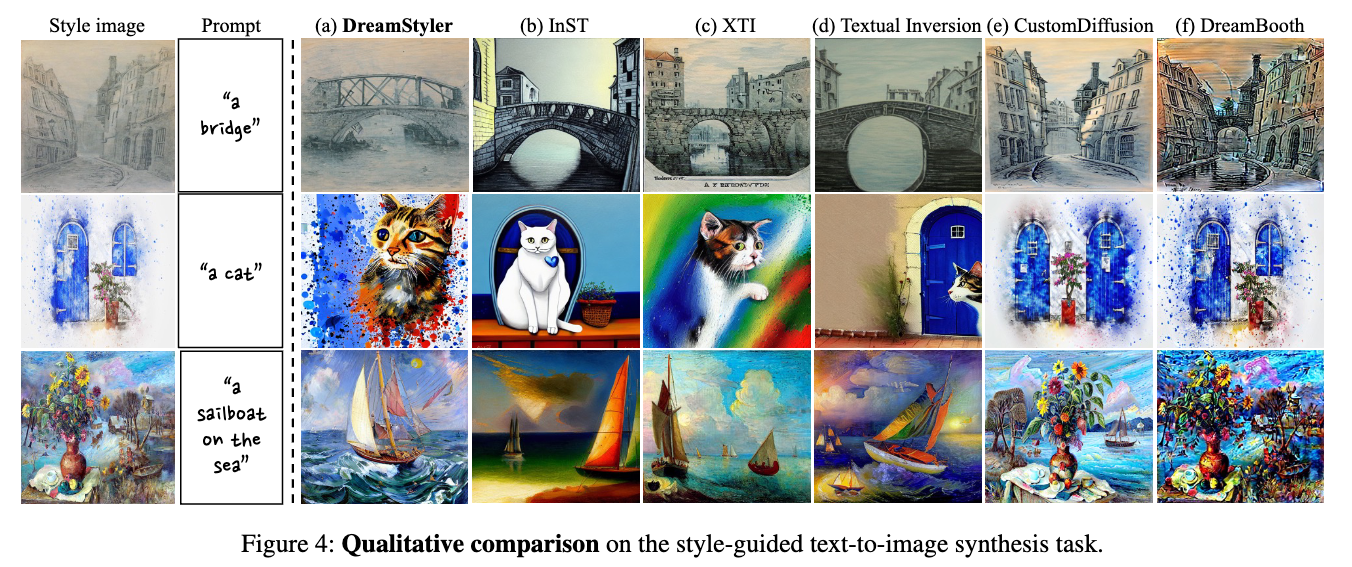

Qualitative Results

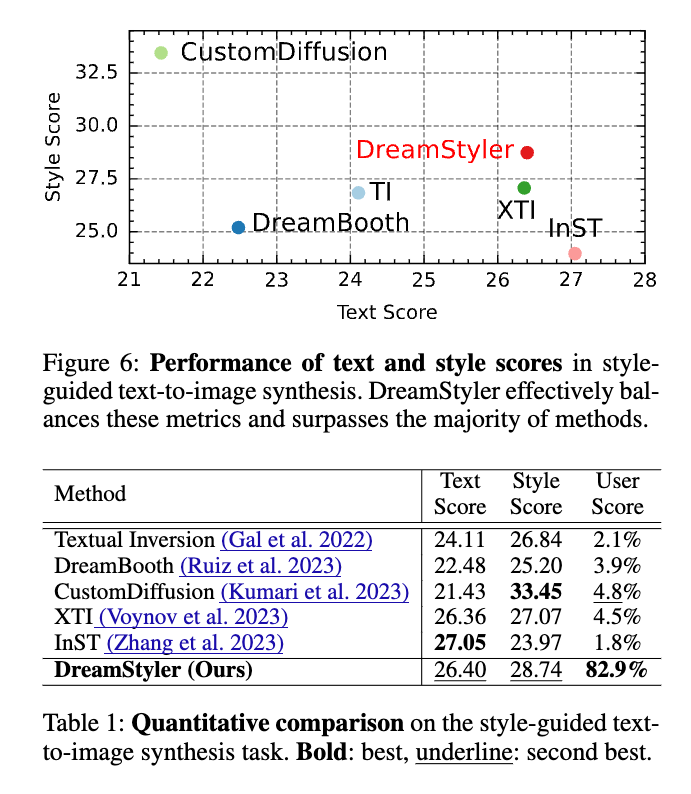

Quantitative Results

The authors use four scores to evaluate the proposed method:

- Text Score and Image Score: Measure how well the generated image aligns with the given text prompt and with the reference image, respectively.

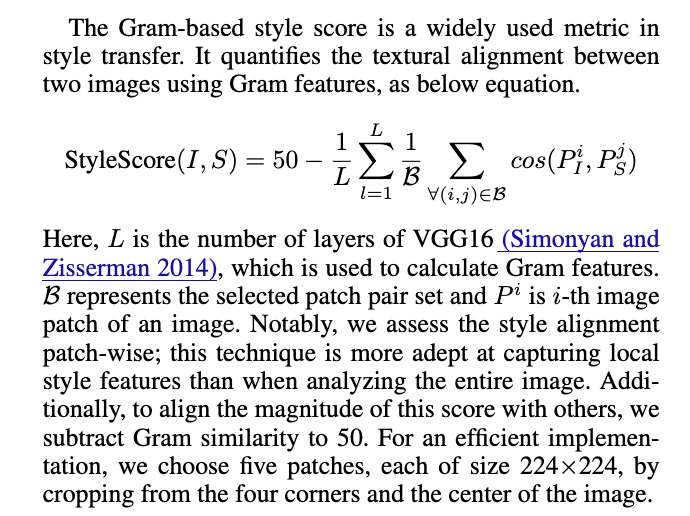

- Style Score: Assesses the style consistency by calculating the similarity of Gram features between the style and generated images.

- User Score: Human evaluation score.
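To illustrate what a Gram-based style score involves, the sketch below computes Gram matrices from two feature maps and compares them. Several details are assumptions, since this article does not specify them: features are given as a channels-by-positions matrix (in practice they would come from a pretrained feature extractor), and cosine similarity is used as the similarity measure.

```python
import math
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """features has C rows (channels) and N columns (spatial positions)."""
    n = len(features[0])
    # Entry (i, j) is the average product of channel i and channel j over positions.
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def cosine_similarity(a: Matrix, b: Matrix) -> float:
    flat_a = [x for row in a for x in row]
    flat_b = [x for row in b for x in row]
    dot = sum(x * y for x, y in zip(flat_a, flat_b))
    norm = math.sqrt(sum(x * x for x in flat_a)) * math.sqrt(sum(y * y for y in flat_b))
    return dot / norm if norm else 0.0


def style_score(style_features: Matrix, generated_features: Matrix) -> float:
    """Similarity of Gram matrices (assumed measure: cosine similarity)."""
    return cosine_similarity(gram_matrix(style_features), gram_matrix(generated_features))


style = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
shuffled = [[3.0, 1.0, 2.0], [6.0, 4.0, 5.0]]  # same features, positions permuted
print(style_score(style, shuffled))
```

Because the Gram matrix sums over spatial positions, rearranging where features occur does not change it. This is why Gram features are used as a layout-independent description of style.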
