FineStyle - Fine-grained Controllable Style Personalization for Text-to-image Models (NeurIPS 2024)


Overview

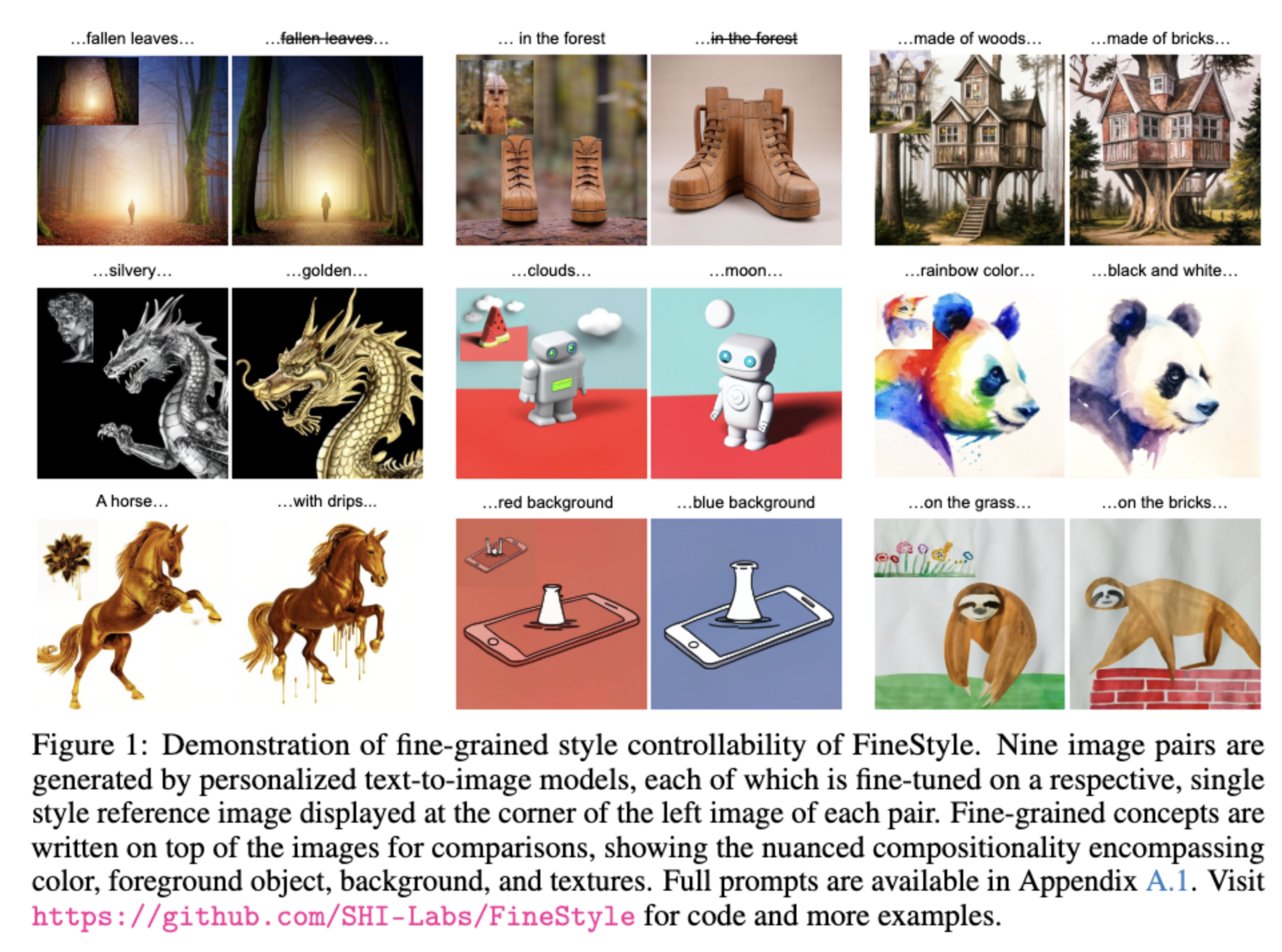

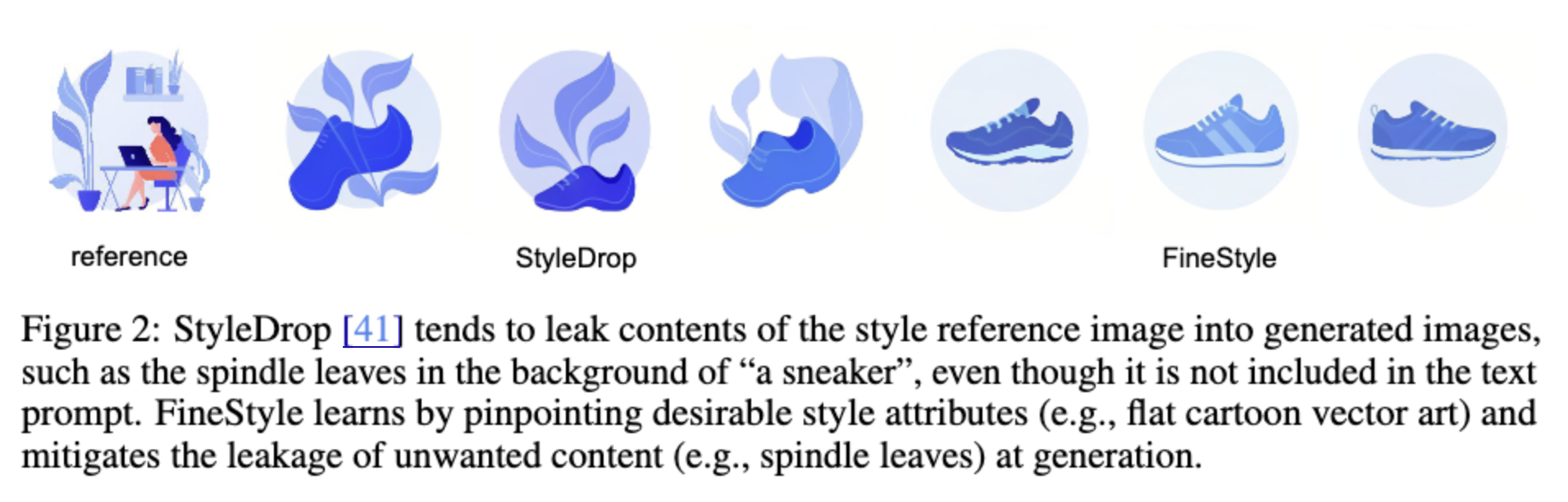

The FineStyle method proposed in the paper addresses the content leakage problem in few-shot or one-shot fine-tuning by introducing concept-oriented data scaling, which decomposes a single reference image into multiple sub-image-text pairs, each focusing on different fine-grained concepts. This approach improves the model’s ability to separate content and style while reducing leakage.

Content Leakage Problem

Content leakage in few-shot or one-shot fine-tuning happens because the model struggles to correctly associate visual concepts with corresponding text phrases when trained on only a few or a single image-text pair. The key reasons are:

- Concept Entanglement: In large-scale training, models learn to decompose and associate individual visual concepts with text through extensive data diversity. However, with few-shot fine-tuning, the limited number of training examples makes it difficult to disentangle different visual elements, leading to unwanted content appearing in generated images.

- Lack of Concept Alignment: When fine-tuning with only one or a few images, the model cannot effectively learn which parts of the image represent style versus specific objects. As a result, it may misinterpret background elements as essential style features, causing them to reappear in generated images even when not prompted.

- Overfitting to Reference Image: The model tends to memorize the entire reference image, leading to a high risk of directly copying unwanted elements into generated images instead of generalizing style attributes properly.

Limitation of Existing Methods

Some approaches, like StyleDrop, attempt to mitigate content leakage through iterative fine-tuning with synthetic images curated by human or automated feedback. However, this process is computationally expensive and does not fully solve the underlying issue of disentangling style from content.

Key Contributions

- Concept-Oriented Data Scaling: Decomposes a single reference image into multiple sub-image-text pairs, each focusing on different fine-grained concepts. This helps disentangle style attributes from content.

- Parameter-Efficient Fine-Tuning via Cross-Attention Adapters: FineStyle modifies only the key and value kernels in cross-attention layers. This improves fine-grained style control and better aligns visual concepts with textual prompts while keeping the model lightweight.
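In a text-conditioned cross-attention layer, the queries come from the visual tokens while the keys and values are projections of the text embeddings, so restricting training to the key and value kernels changes how the visual tokens read the text while the rest of the network stays frozen. The sketch below only illustrates which weights would be updated; the parameter naming scheme (`layer_<i>/cross_attention/key_kernel`, etc.) and the helper `select_trainable` are hypothetical, and the adapter architecture itself is not modeled.

```python
def select_trainable(param_names):
    """Return the parameters to fine-tune: only the key and value kernels of
    cross-attention layers. Every other parameter stays frozen.

    The "<layer>/<block>/<kernel>" naming scheme is a hypothetical convention."""
    trainable = []
    for name in param_names:
        *scope, leaf = name.split("/")
        if "cross_attention" in scope and leaf in ("key_kernel", "value_kernel"):
            trainable.append(name)
    return trainable


# Hypothetical parameter names for a two-layer transformer.
params = [
    f"layer_{i}/{block}/{kernel}"
    for i in range(2)
    for block in ("self_attention", "cross_attention")
    for kernel in ("query_kernel", "key_kernel", "value_kernel", "output_kernel")
]
print(select_trainable(params))
```

Of the 16 kernels listed, only the four cross-attention key/value kernels are selected, which is where the parameter efficiency comes from.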

FineStyle Framework

Background

Muse, the foundation model of FineStyle, is a masked generative transformer for text-to-image generation. It consists of four main components:

- A pre-trained text encoder \(T\): encodes a text prompt into the textual token space \(\tau\)

- An image encoder \(E\): encodes an image from pixel space into a sequence of discrete visual tokens \(v \in \mathcal{E}\)

- A decoder \(D\): decodes the visual tokens back to pixel space

- A generative transformer \(G\): predicts logits for the visual tokens from (partially masked) visual tokens and the text embedding, \(G: \mathcal{E} \times \tau \rightarrow \mathcal{L}\), where \(\mathcal{L}\) is the space of logits

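\(G\) is trained to predict masked visual tokens by minimizing

\[\mathbb{E}_{(x, t) \sim \mathcal{D},\, m \sim \mathcal{M}}\Big[\text{CE}\big(E(x),\, G(\mathcal{M}(E(x), m), T(t))\big)\Big]\]

where \(\mathcal{D}\) is the training set of image-text pairs \((x, t)\), \(\mathcal{M}\) is a uniformly distributed mask sampling strategy with the mask ratio \(m\) as a coefficient, and \(\text{CE}\) is the weighted cross-entropy loss computed over the masked tokens.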
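The masked-token objective can be sketched in plain Python as follows. This is a minimal illustration, not Muse's implementation: it assumes the transformer's output is already available as per-position logit lists, samples the mask ratio uniformly, and uses an unweighted mean cross-entropy because the weighting scheme is not specified here. The helper names `random_mask` and `masked_token_loss` are hypothetical.

```python
import math
import random


def log_softmax(logits):
    """Numerically stable log-softmax over one position's vocabulary logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def random_mask(num_tokens, rng):
    """Sample a mask ratio uniformly from [0, 1) and mask that fraction of
    positions (at least one, so the loss is always defined)."""
    ratio = rng.random()
    num_masked = max(1, math.ceil(ratio * num_tokens))
    return set(rng.sample(range(num_tokens), num_masked))


def masked_token_loss(target_tokens, logits, masked_positions):
    """Mean cross-entropy between the true visual tokens E(x) and the
    predicted logits, evaluated only at masked positions (unweighted)."""
    total = 0.0
    for i in masked_positions:
        total -= log_softmax(logits[i])[target_tokens[i]]
    return total / len(masked_positions)


# Toy usage: 8 visual tokens from a vocabulary of 4, with random stand-in logits.
rng = random.Random(0)
targets = [rng.randrange(4) for _ in range(8)]
fake_logits = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
mask = random_mask(len(targets), rng)
print(len(mask), masked_token_loss(targets, fake_logits, mask))
```

Unmasked positions contribute nothing to the loss, so the model is only ever graded on tokens it could not see.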

Sampling Strategy in Muse

During image synthesis, the model uses iterative decoding to generate images given a text prompt and initial visual tokens. The synthesis process is defined as:

\[\mathcal{I} = \text{D}(v_K), \quad v_k = \text{S}\Big(\text{G}(v_{k-1}, \text{T}(t)) + \lambda\big(\text{G}(v_{k-1}, \text{T}(t)) - \text{G}(v_{k-1}, \text{T}(n))\big)\Big)\]

where:

- \(k \in [1, K]\) is the sampling step

- \(t\) is the text prompt

- \(n\) is the null prompt

- \(\text{S}\) is a sampling strategy for visual tokens

- \(\lambda\) represents the coefficient for classifier-free guidance

- \(\text{D}\) maps the final visual tokens to pixel space
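The guidance term inside \(\text{S}\) is elementwise arithmetic on the transformer's logits. A minimal sketch, assuming the conditional and unconditional logits are given as one list of vocabulary logits per visual-token position (the function name `cfg_logits` is hypothetical):

```python
def cfg_logits(cond, uncond, lam):
    """Classifier-free guidance on per-token logits:
    G(v, T(t)) + lam * (G(v, T(t)) - G(v, T(n))).

    cond / uncond: one list of vocabulary logits per visual-token position,
    computed with the prompt t and the null prompt n respectively."""
    return [
        [c + lam * (c - u) for c, u in zip(row_c, row_u)]
        for row_c, row_u in zip(cond, uncond)
    ]


print(cfg_logits([[1.0, 2.0]], [[0.0, 2.0]], lam=3.0))  # [[4.0, 2.0]]
```

With \(\lambda = 0\) this reduces to the plain conditional prediction; larger \(\lambda\) pushes the logits further away from the null-prompt prediction.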

The sampling strategy \(\text{S}\) is an iterative masked decoding strategy in which visual tokens are progressively predicted and refined. The model starts from an initial sequence of visual tokens, some or all of which are masked (for plain text-to-image generation, all of them). At each step it predicts the masked tokens, keeps the most confident predictions, and re-masks the rest, so that fewer tokens remain masked after every step until the sequence is complete after \(K\) steps.
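The loop below sketches this procedure in plain Python. It follows the MaskGIT-style parallel decoding recipe that Muse builds on, but simplifies it: predictions are greedy (argmax) rather than sampled, confidence is the predicted token's probability, and a cosine schedule (our choice here) decides how many tokens stay masked after each step. `predict_logits` is a hypothetical stand-in for the (guided) transformer \(\text{G}\).

```python
import math

MASK = -1  # sentinel for a masked visual token


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def iterative_decode(predict_logits, num_tokens, num_steps):
    """Greedy iterative masked decoding (simplified MaskGIT-style sketch).

    predict_logits(tokens) -> one list of vocabulary logits per position."""
    tokens = [MASK] * num_tokens  # start fully masked
    for step in range(1, num_steps + 1):
        logits = predict_logits(tokens)
        # Greedy prediction and its confidence for every still-masked position.
        candidates = []
        for i, tok in enumerate(tokens):
            if tok == MASK:
                probs = softmax(logits[i])
                best = max(range(len(probs)), key=probs.__getitem__)
                candidates.append((probs[best], i, best))
        # Cosine schedule: how many tokens remain masked after this step
        # (reaches 0 at the final step).
        remaining = math.floor(num_tokens * math.cos(math.pi / 2 * step / num_steps))
        num_to_fix = len(candidates) - remaining
        # Commit the most confident predictions; the rest stay masked.
        candidates.sort(reverse=True)
        for _, i, best in candidates[:num_to_fix]:
            tokens[i] = best
    return tokens


def dummy_predict(tokens):
    # Stand-in model: position i always prefers token i % 3.
    return [[2.0 if k == i % 3 else 0.0 for k in range(3)] for i in range(len(tokens))]


print(iterative_decode(dummy_predict, num_tokens=6, num_steps=4))  # [0, 1, 2, 0, 1, 2]
```

Committing only the most confident tokens early lets later steps condition on them, which is the "refinement" part of the procedure.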

StyleDrop

StyleDrop extends Muse by adding an adapter to the generative transformer \(G\) for better style control.

Proposed Method

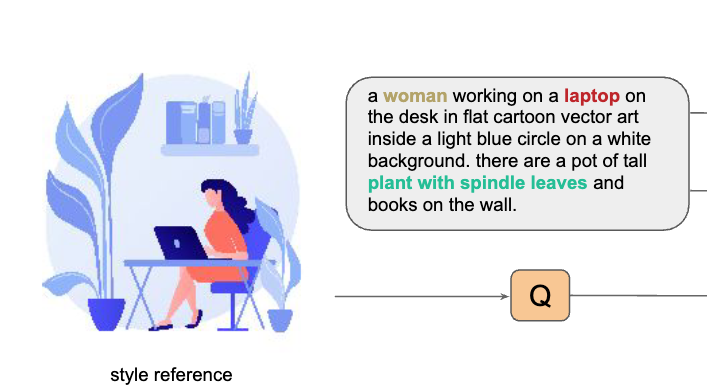

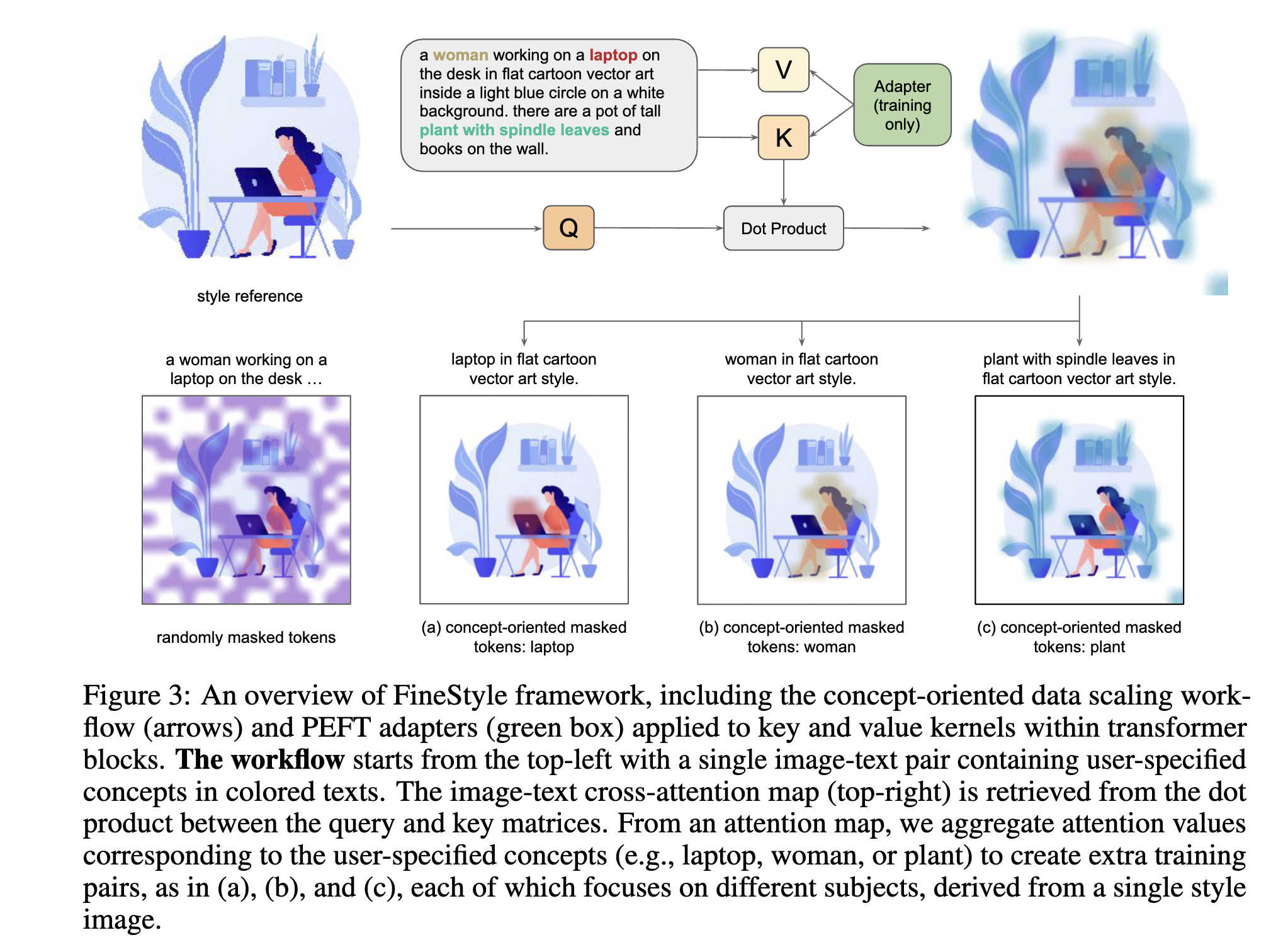

Concept-Oriented Data Scaling

Idea: Decompose the text prompt describing the reference image into multiple sub-prompts, each focusing on a different fine-grained concept. For example:

- “woman”, “laptop”, “a pot of plant with spindle leaves”, and “bookshelf” for foreground subjects

- “flat cartoon vector art”, “a light blue circle”, and “white background” for style and background attributes

Each concept phrase is then combined with the style phrase using the template "{concept phrase} in {style phrase} style" borrowed from StyleDrop, giving one text prompt per concept.
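Building the per-concept prompts is simple string templating. A minimal sketch, assuming a single shared style phrase and plain-string concept phrases (the helper `build_prompts` is hypothetical; pairing each prompt with its cropped sub-image is not shown):

```python
def build_prompts(concept_phrases, style_phrase):
    """Pair every fine-grained concept with the shared style phrase using
    the '{concept phrase} in {style phrase} style' template."""
    return [f"{concept} in {style_phrase} style" for concept in concept_phrases]


concepts = ["woman", "laptop", "a pot of plant with spindle leaves", "bookshelf"]
print(build_prompts(concepts[:2], "flat cartoon vector art"))
# ['woman in flat cartoon vector art style', 'laptop in flat cartoon vector art style']
```

One reference image thus yields several training prompts, one per concept, instead of a single caption.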

Training with Concept-oriented Masking

- Cropping around the area of interest associated with each concept-style text phrase

- Using a pre-trained Muse model to create a segmentation mask for the concept (as shown in Fig. 3 a-c)
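Once a binary segmentation mask for a concept is available, cropping around it reduces to finding the mask's bounding box. The sketch below assumes the mask and image are 2-D lists of the same size and adds an optional margin; how the mask is obtained from the pre-trained Muse model is not modeled, and the helpers `crop_box_from_mask` and `crop` are hypothetical.

```python
def crop_box_from_mask(mask, margin=0):
    """Bounding box (top, left, bottom, right) of the True cells in a 2-D
    binary mask, with exclusive bottom/right, expanded by `margin` and
    clipped to the mask size. Returns None if the mask is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    h, w = len(mask), len(mask[0])
    cols = [c for c in range(w) if any(row[c] for row in mask)]
    top = max(0, rows[0] - margin)
    left = max(0, cols[0] - margin)
    bottom = min(h, rows[-1] + 1 + margin)
    right = min(w, cols[-1] + 1 + margin)
    return top, left, bottom, right


def crop(image, box):
    """Slice a 2-D image (list of rows) to the given bounding box."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


# Toy 4x4 example: the concept occupies cells (1, 1) and (2, 2).
mask = [[False] * 4 for _ in range(4)]
mask[1][1] = mask[2][2] = True
image = [[r * 4 + c for c in range(4)] for r in range(4)]
box = crop_box_from_mask(mask)
print(box, crop(image, box))  # (1, 1, 3, 3) [[5, 6], [9, 10]]
```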

Classifier-Free Guidance for Style Control

FineStyle modifies Muse’s masked visual token prediction approach by introducing style and semantic guidance. The sampling strategy helps balance text fidelity and style adherence, mitigating content leakage.

Tunable parameters (\(\lambda_1, \lambda_2\)) allow users to control the strength of style influence versus prompt adherence, making the generation more flexible and controllable.

The sampling formula for visual tokens in FineStyle is

\[v_k = \text{S}\Big(\hat{G}(v_{k-1}, \text{T}(t)) + \lambda_1\big(\hat{G}(v_{k-1}, \text{T}(t)) - G(v_{k-1}, \text{T}(t))\big) + \lambda_2\big(\hat{G}(v_{k-1}, \text{T}(t)) - \hat{G}(v_{k-1}, \text{T}(n))\big)\Big)\]

where:

- \(\hat{G}\) is the FineStyle-adapted model

- \(G\) is the original Muse model

- \(t\) is the text prompt

- \(n\) is the null prompt for guidance

- \(\lambda_1\) is the coefficient for style guidance: it adjusts how strongly the generated image follows the reference style.

- \(\lambda_2\) is the coefficient for semantic guidance: it helps prevent content leakage by reinforcing adherence to the text prompt.
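Per sampling step, the guided logits are a linear combination of three transformer outputs: the adapted model with the prompt, the original Muse model with the prompt, and the adapted model with the null prompt. A minimal sketch of that combination, assuming per-token logit lists as inputs (the function name `finestyle_guided_logits` is hypothetical); the result would then be passed to the token sampling strategy \(\text{S}\):

```python
def finestyle_guided_logits(adapted_cond, base_cond, adapted_null, lam1, lam2):
    """Per-logit combination:
    G_hat(t) + lam1 * (G_hat(t) - G(t)) + lam2 * (G_hat(t) - G_hat(n)).

    adapted_cond: adapted model, text prompt t
    base_cond:    original Muse model, text prompt t
    adapted_null: adapted model, null prompt n"""
    return [
        [a + lam1 * (a - b) + lam2 * (a - n) for a, b, n in zip(ra, rb, rn)]
        for ra, rb, rn in zip(adapted_cond, base_cond, adapted_null)
    ]


print(finestyle_guided_logits([[2.0, 1.0]], [[1.0, 1.0]], [[0.0, 1.0]], lam1=0.5, lam2=2.0))
# [[6.5, 1.0]]
```

Setting both coefficients to zero recovers the adapted model's conditional prediction. Because each step needs three forward passes instead of the two used by standard classifier-free guidance, sampling is somewhat more expensive.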

Results

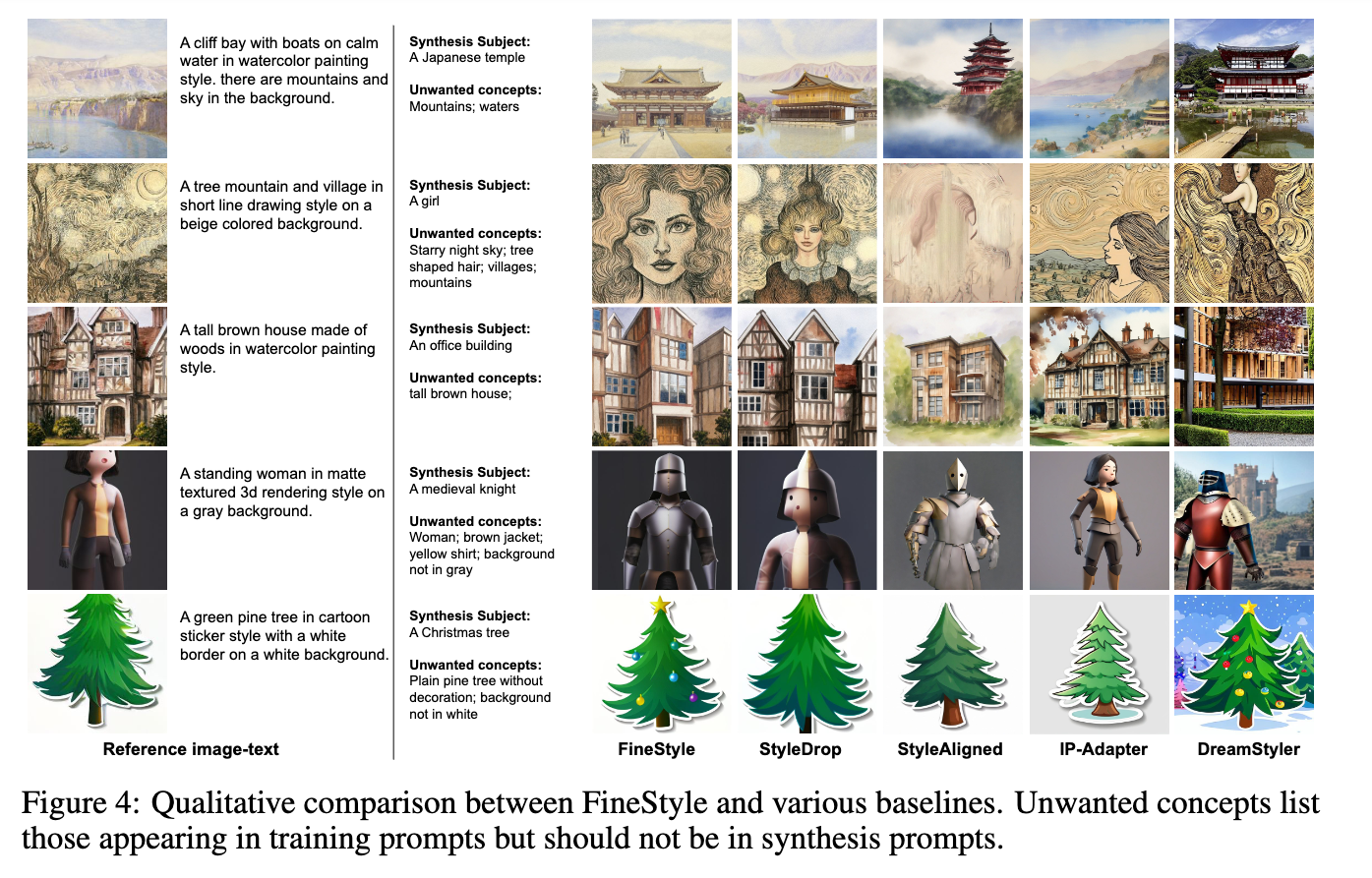

Qualitative Results

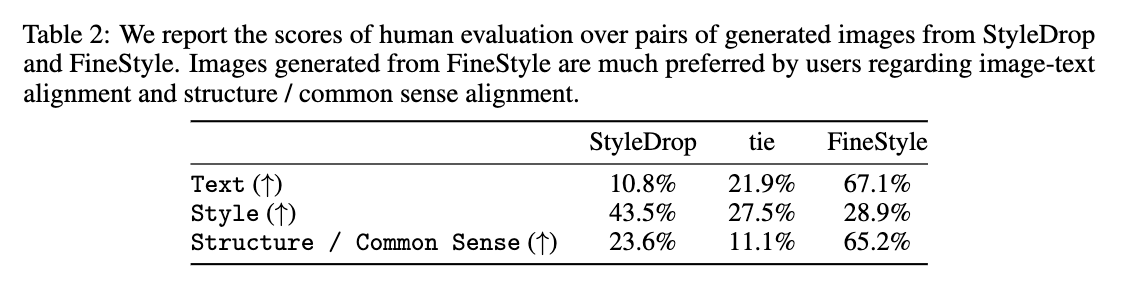

Quantitative Results

