LLM Series - Part 1 - Important Concepts in NLP

- NLP Foundations

- Fundamental Tasks in NLP

- Evaluation

- Tokenization

- Transformer

- KV Caching

- BERT

- How to train a LLM

- LoRA and Adapters

- Prompt Engineering

- Code and Frameworks for LLMs

- Fine-tuning LLMs

- RAG

- References

NLP Foundations

References:

- https://www.geeksforgeeks.org/nlp-interview-questions/

- Stanford CS224N: Natural Language Processing with Deep Learning (course schedule, Lecture 1)

- 63 Must-Know LLMs Interview Questions (GitHub repository)

Corpus

In NLP, a corpus is a large collection of texts or documents. It is a structured dataset that serves as a sample of a specific language, domain, or topic. A corpus can include a variety of texts, including books, essays, web pages, and social media posts.

Popular corpora used for training and evaluating NLP models:

- Common Crawl: A corpus of web pages collected by the Common Crawl project.

- Wikipedia: A corpus of articles from the English Wikipedia.

- Books: A corpus of books from various genres and languages.

- News Articles: A corpus of news articles from various sources.

- Social Media: A corpus of social media posts from various platforms.

Common Pre-processing Techniques

- Tokenization: The process of splitting a text into individual words or tokens.

- Normalization: The process of converting a text into a standard form, for example:

  - Lowercasing: Converting a text into lowercase.

  - Stemming: Heuristically stripping affixes to reduce a word to its stem (e.g., “studies” → “studi”).

  - Lemmatization: Reducing a word to its dictionary base form, or lemma (e.g., “studies” → “study”).

  - Date and Time Normalization: Converting a date or time into a standard format.

- Stopword Removal: The process of removing common words that do not carry much meaning (e.g., “the”, “is”, “at”).

- Removal of Special Characters and Punctuation

- Removing HTML Tags or Markup

- Spell Correction

- Sentence Segmentation
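Several of these steps can be chained into a small pipeline. The sketch below is a minimal illustration, assuming a tiny hand-written stopword list (`STOPWORDS` is hypothetical; real pipelines usually take one from a library such as NLTK or spaCy) and plain whitespace tokenization:

```python
import re

# Hypothetical, deliberately tiny stopword list for illustration only
STOPWORDS = {"the", "is", "at", "a", "an", "on", "of", "and"}


def preprocess(text: str) -> list[str]:
    """Apply a handful of common pre-processing steps in order."""
    text = re.sub(r"<[^>]+>", " ", text)   # remove HTML tags / markup
    text = text.lower()                     # lowercasing (normalization)
    text = re.sub(r"[^\w\s]", " ", text)    # remove punctuation and special characters
    tokens = text.split()                   # naive whitespace tokenization
    return [t for t in tokens if t not in STOPWORDS]  # stopword removal


print(preprocess("<p>The cat sat on the mat!</p>"))  # ['cat', 'sat', 'mat']
```

The order matters: stripping markup before removing punctuation keeps tag names such as `p` from leaking into the tokens.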

What is named entity recognition in NLP?

Named entity recognition (NER) is the task of identifying and classifying named entities in a text into predefined categories such as person, organization, location, etc.

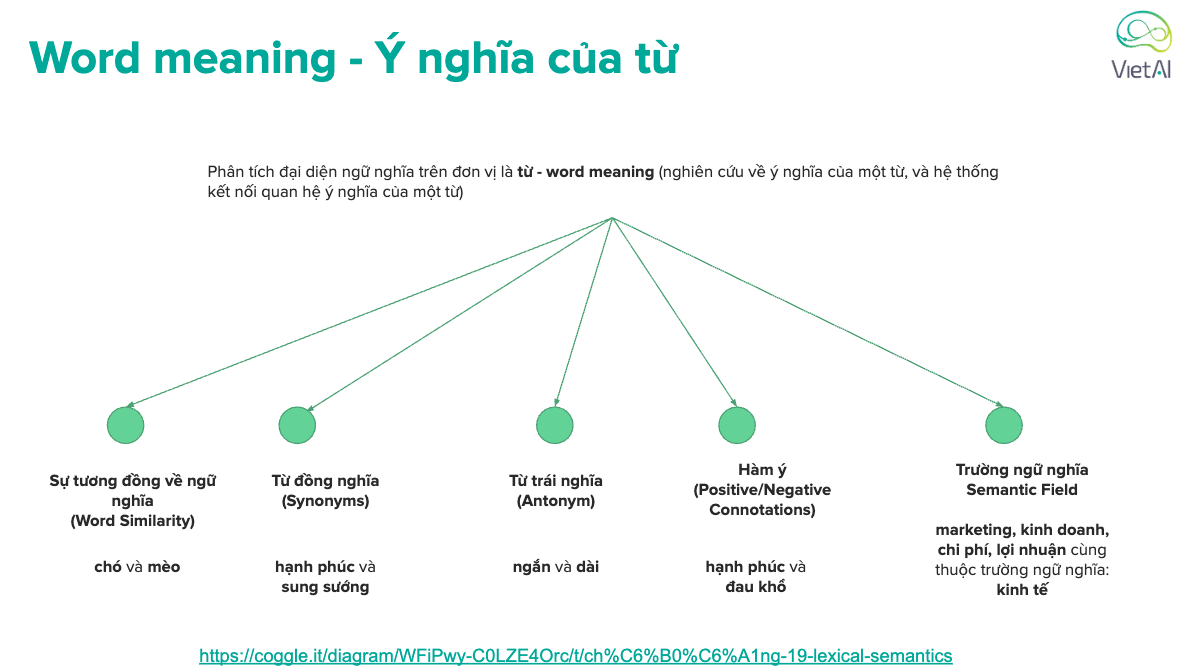

Word Meaning and Word Sense Disambiguation

- Word meaning: The meaning of a word is the concept it refers to. For example, the word “dog” refers to the concept of a four-legged animal that barks.

- Word sense: The sense of a word is the particular meaning it has in a specific context. For example, the word “bank” can refer to a financial institution or the side of a river.

- Word sense disambiguation: The task of determining which sense of a word is used in a particular context.

- Lexical semantics: The study of word meaning, including the senses of words and the relations between them.

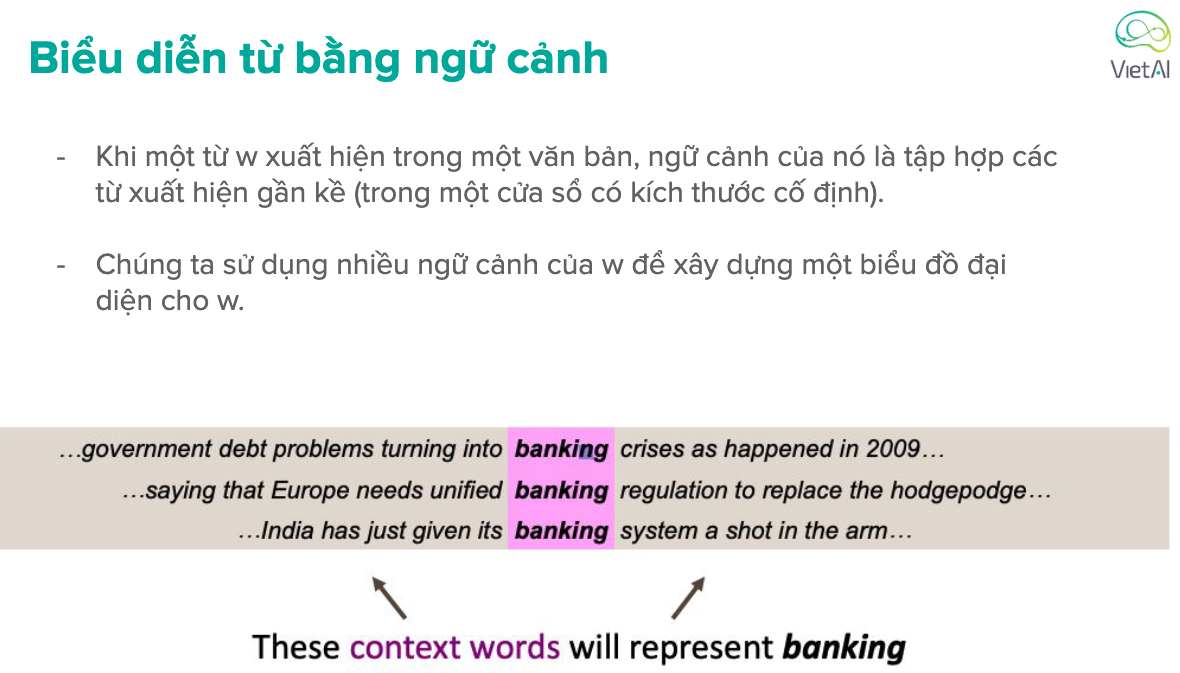

- Distributional semantics: The study of word meaning based on the distributional properties of words in text.

- Word embeddings: Dense vector representations of words that capture their meaning based on the distributional properties of words in text.

- Word similarity: The similarity between words based on their meaning, often measured using word embeddings.

- Word analogy: The relationship between words based on their meaning, often captured using word embeddings.

- Word sense induction: The task of automatically identifying the different senses of a word in a corpus of text.

Distributional semantics is based on the idea that words that occur in similar contexts tend to have similar meanings.
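This idea can be demonstrated with nothing more than context counts: represent each word by how often other words appear within a small window around it, then compare words with cosine similarity. The toy corpus and the window size below are made up for illustration:

```python
import math
from collections import Counter

# Made-up toy corpus for illustration
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the ball",
    "stocks fell on the market",
]


def context_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            ctx = vectors.setdefault(word, Counter())
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    ctx[tokens[j]] += 1
    return vectors


def cosine(u, v):
    dot = sum(count * v[word] for word, count in u.items())  # missing words count as 0
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


vecs = context_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 2))     # 0.92
print(round(cosine(vecs["cat"], vecs["stocks"]), 2))  # 0.0
```

Here “cat” and “dog” share most of their contexts, while “cat” and “stocks” share none. Dense word embeddings compress this kind of sparse context information into a few hundred dimensions.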

TF-IDF

TF-IDF is a statistical measure that evaluates how important a word is to a document in a collection or corpus. It is the product of two metrics: term frequency (TF) and inverse document frequency (IDF).

- Term Frequency (TF): The frequency of a term in a document.

- Inverse Document Frequency (IDF): The logarithm of the ratio of the total number of documents to the number of documents containing the term to measure how rare or unique a term is across the corpus.

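\[\text{TF-IDF}(t, d, D) = \text{TF}(t, d) \times \text{IDF}(t, D), \qquad \text{IDF}(t, D) = \log \frac{|D|}{|\{d \in D : t \in d\}|}\]

Where \(t\) is the term, \(d\) is the document, and \(D\) is the corpus.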

Key insights:

- Words that occur frequently in a document but are rare across the corpus will have high TF-IDF scores, making them important for identifying the document’s topic.

- Common words like “the,” “and,” or “is” (stopwords) will have low TF-IDF scores due to their high occurrence across documents.
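A minimal TF-IDF sketch in plain Python, assuming TF is the relative frequency of the term in the document (raw counts are another common choice) and IDF is the logarithm of the number of documents divided by the number of documents containing the term:

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: score} dict per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # document frequency: in how many documents each term appears
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


docs = ["the cat sat on the mat", "the dog sat on the log", "the cat chased the dog"]
scores = tf_idf(docs)
print(scores[0]["the"])                     # 0.0
print(scores[0]["mat"] > scores[0]["cat"])  # True
```

“the” occurs in every document, so its IDF (and therefore its TF-IDF) is 0, while “mat” (only in the first document) outscores “cat” (in two documents) even though both appear once in the first document.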

Word Embeddings

- Word embeddings: Dense vector representations of words that capture their meaning based on the distributional properties of words in text.

- Word2Vec: A word embedding model that uses a neural network to learn word embeddings by predicting the context of words in a corpus of text.

- GloVe: A word embedding model that learns word vectors from global word co-occurrence statistics, fitting the dot product of two word vectors to the logarithm of their co-occurrence count (a weighted matrix factorization).

- FastText: An extension of Word2Vec that represents each word as a bag of character n-grams, so it can build embeddings for rare and out-of-vocabulary words from their subword pieces.

- BERT: A transformer-based model that produces contextual word embeddings, pre-trained by predicting masked words in a corpus of text.

Word2Vec

- CBOW (Continuous Bag of Words): Predicts the center word based on the context of the surrounding words.

- Skip-Gram: Predicts the context of the center word based on the center word.

The training objective of CBOW is:

\[P(w_t = w \mid w_{t-n}, \ldots, w_{t-1}, w_{t+1}, \ldots, w_{t+n})\]

Where \(w_t\) is the center word and \(w_{t-n}, \ldots, w_{t-1}, w_{t+1}, \ldots, w_{t+n}\) are the context words.

The training objective of Skip-Gram is:

\[P(w_{t-n}, \ldots, w_{t-1}, w_{t+1}, \ldots, w_{t+n} \mid w_t)\]

Computing these probabilities with a softmax requires normalizing over the entire vocabulary, which is too expensive for large vocabularies. Negative sampling avoids this cost: each update touches only the embeddings of the true context word and a few randomly sampled negative words rather than the entire vocabulary. It also replaces the softmax with a sigmoid, turning the problem into binary classification:

- Given a real word pair, we want the model to output 1.

- Given a random negative sample word pair, we want the model to output 0.
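A minimal NumPy sketch of the negative-sampling objective for a single (center, context) pair, \(-\log \sigma(u_o^\top v_c) - \sum_{k} \log \sigma(-u_k^\top v_c)\), where \(v_c\) is the center word's input vector, \(u_o\) is the true context word's output vector, and \(u_k\) are the output vectors of the sampled negatives. The vectors are random stand-ins, and drawing the negatives themselves (usually from a smoothed unigram distribution) is omitted:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sgns_loss(v_center, u_context, u_negatives):
    """v_center: (d,), u_context: (d,), u_negatives: (k, d)."""
    positive = np.log(sigmoid(u_context @ v_center))           # real pair -> push toward 1
    negative = np.log(sigmoid(-u_negatives @ v_center)).sum()  # fake pairs -> push toward 0
    return -(positive + negative)


def sgd_step(v_center, u_context, u_negatives, lr=0.1):
    """One gradient-descent step; only k + 2 vectors change, not the whole vocabulary."""
    s_pos = sigmoid(u_context @ v_center)    # scalar
    s_neg = sigmoid(u_negatives @ v_center)  # (k,)
    grad_center = (s_pos - 1.0) * u_context + s_neg @ u_negatives
    grad_context = (s_pos - 1.0) * v_center
    grad_negatives = np.outer(s_neg, v_center)
    return (v_center - lr * grad_center,
            u_context - lr * grad_context,
            u_negatives - lr * grad_negatives)


rng = np.random.default_rng(0)
dim, k = 8, 5
v_c = rng.normal(scale=0.1, size=dim)
u_o = rng.normal(scale=0.1, size=dim)
u_neg = rng.normal(scale=0.1, size=(k, dim))

before = sgns_loss(v_c, u_o, u_neg)
after = sgns_loss(*sgd_step(v_c, u_o, u_neg))
print(before, after)  # the loss decreases after one step
```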

GloVe

- Co-occurrence Matrix: Represents the co-occurrence of words in a corpus of text.

- Global Matrix Factorization: Decomposes the co-occurrence matrix into two lower-dimensional matrices, which represent the word embeddings.
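A minimal sketch of building the co-occurrence matrix \(X\) with NumPy, assuming whitespace-tokenized sentences and a symmetric context window. Following the GloVe paper, a pair of words \(d\) positions apart contributes \(1/d\) to the count instead of 1:

```python
import numpy as np


def cooccurrence_matrix(sentences, window=2):
    """Symmetric, distance-weighted word co-occurrence counts X[i, j]."""
    vocab = sorted({w for s in sentences for w in s.split()})
    index = {w: i for i, w in enumerate(vocab)}
    X = np.zeros((len(vocab), len(vocab)))
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    # a pair d positions apart adds 1/d to the count
                    X[index[word], index[tokens[j]]] += 1.0 / abs(i - j)
    return vocab, X


vocab, X = cooccurrence_matrix(["the cat sat on the mat"])
print(vocab)
print(X)
```

GloVe then learns vectors whose dot products approximate \(\log X_{ij}\) for the nonzero entries of this matrix.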

The objective function of GloVe is:

\[J = \sum_{i,j=1}^V f(X_{ij})(w_i^T\tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij})^2\]

where \(V\) is the size of the vocabulary and \(X_{ij}\) is the co-occurrence count between word \(i\) and word \(j\). \(w_i\) and \(\tilde{w}_j\) are the word embeddings of word \(i\) and word \(j\), \(b_i\) and \(\tilde{b}_j\) are the bias terms of word \(i\) and word \(j\). The weighting function \(f(x)\) should satisfy the following properties:

- \(f(0) = 0\). If \(f\) is viewed as a continuous function, it should vanish as \(x \to 0\) fast enough that \(\lim_{x \to 0} f(x)\log^2 x\) is finite.

- \(f(x)\) should be non-decreasing so that rare co-occurrences are not overweighted.

- \(f(x)\) should be relatively small for large values of \(x\), so that frequent co-occurrences are not overweighted.

One class of functions that satisfies these properties is:

\[f(x) = \begin{cases} (x/x_{\text{max}})^\alpha & \text{if } x < x_{\text{max}} \\ 1 & \text{otherwise} \end{cases}\]

The GloVe paper uses \(x_{\text{max}} = 100\) and \(\alpha = 3/4\).

How to represent sentences

- Bag of Words: Represents a sentence as a bag of words, ignoring the order of the words.

- TF-IDF: Represents a sentence as a bag of words, but weights each word by its frequency in the sentence multiplied by its inverse document frequency across the corpus, so that common words contribute less.

- N-grams: Represents a sentence by the counts of its sequences of N consecutive words, which preserves local word order.

- Word Embeddings: Represents a sentence by aggregating (e.g., averaging) the dense embeddings of its words. However, static word embeddings are context-independent, i.e., the same word has the same embedding regardless of its usage.

- Contextual Embeddings: Uses models such as ELMo or BERT that generate word embeddings based on the context within the sentence. The sentence embedding can be the output of the [CLS] token or a pooling (e.g., the mean) of all word embeddings.

- Direct Sentence Embedding: Directly encode entire sentences into a fixed-length vector, such as Sentence-BERT or Universal Sentence Encoder.
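The difference between a bag of words and a bag of n-grams is easy to see on two sentences that use the same words in a different order. A minimal sketch with whitespace tokenization (function names are illustrative):

```python
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    return Counter(sentence.lower().split())


def bag_of_ngrams(sentence: str, n: int = 2) -> Counter:
    tokens = sentence.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


a = "The guard arrived late because of the rain"
b = "The rain arrived late because of the guard"
print(bag_of_words(a) == bag_of_words(b))    # True: word order is ignored
print(bag_of_ngrams(a) == bag_of_ngrams(b))  # False: ('guard', 'arrived') vs ('rain', 'arrived')
```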

N-gram model vs Neural model in terms of sentence representation:

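- Neural models can capture the meaning of a sentence by learning the context of its words, whereas N-gram models only capture local context within a fixed window of N words and suffer from data sparsity for N-grams never seen in training.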

How to represent documents

Besides embedding each sentence in a document with the methods above and then aggregating (e.g., averaging) the sentence embeddings, we can also use the following methods:

- Doc2Vec (Paragraph Vector): Extends Word2Vec by learning a dense vector for each document jointly with the word vectors.

- Topic Modeling: Extracts the topics from the document, such as Latent Dirichlet Allocation (LDA), Non-Negative Matrix Factorization (NMF), etc.

Data Augmentation

- Synonym Replacement: Replaces words in the document with their synonyms. E.g., “The cat sat on the mat.” -> “The feline rested on the rug.”

- Random Insertion: Randomly inserts words into the document. E.g., “The dog barked loudly.” -> “The big dog barked loudly.”

- Random Deletion: Randomly deletes words from the document. E.g., “The cat sat on the mat.” -> “The cat on the mat.”

- Random Swap: Randomly swaps words in the document. E.g., “The weather is very nice today.” -> “The weather today is very nice.”

- Back Translation: Translates the document to another language and then translates it back to the original language.

- Paraphrase: Generates a new sentence that has the same meaning as the original sentence.

- Contextual Augmentation: Uses a contextual language model (e.g., BERT) to replace words with alternatives predicted from the surrounding context. E.g., “The car is fast.” -> “The vehicle is speedy.”

- CutMix: Mixes two documents by randomly selecting a segment from one document and replacing it with a segment from another document. E.g., “The sky is blue.” and “The grass is green.” -> “The sky is green.” and “The grass is blue.”

- Entity Replacement: Replace named entities with similar entities from a predefined set or dictionary. E.g., “John went to Paris last summer.” -> “Mary traveled to London last summer.”

- Generate Synthetic Data: Generate synthetic data using generative models. E.g., Prompt: “Describe a sunny day.”, Generate: “The sun shone brightly, warming the fields with golden light.”
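Two of the simplest operations above, random deletion and random swap, can be sketched with the standard library alone. The function names and the deletion probability `p` are illustrative choices; passing a seeded `random.Random` makes the output reproducible:

```python
import random


def random_deletion(tokens, p=0.1, rng=None):
    """Drop each token independently with probability p (keep at least one)."""
    rng = rng or random.Random()
    kept = [t for t in tokens if rng.random() >= p]
    return kept or [rng.choice(tokens)]


def random_swap(tokens, n_swaps=1, rng=None):
    """Swap the positions of two randomly chosen tokens, n_swaps times."""
    rng = rng or random.Random()
    tokens = list(tokens)
    if len(tokens) < 2:
        return tokens
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(tokens)), 2)
        tokens[i], tokens[j] = tokens[j], tokens[i]
    return tokens


sentence = "The cat sat on the mat .".split()
rng = random.Random(42)
print(random_deletion(sentence, p=0.2, rng=rng))
print(random_swap(sentence, n_swaps=1, rng=rng))
```

Both operations can produce ungrammatical text, so they are usually applied sparingly and checked against the task (e.g., deleting “not” flips the sentiment of a review).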

Fundamental Tasks in NLP

Low-level Tasks

Tokenization

Definition: Splitting text into smaller units (words, subwords, or sentences).

Examples: “Dr. Anh Bui’s research focuses on AI.” -> [“Dr.”, “Anh”, “Bui”, “’s”, “research”, “focuses”, “on”, “AI”, “.”]

Challenges:

- Out-of-Vocabulary (OOV) words

- Misspelled words

- Tokenizing agglutinative languages (e.g., Japanese, Finnish), where a single word can combine many morphemes

- Handling abbreviations (e.g., “U.S.A. vs USA”)

- Dealing with contractions (“don’t → do + not”)
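A rule-based tokenizer for the example above can be sketched with a single regular expression. This is a minimal illustration, assuming a hypothetical hand-maintained `ABBREVIATIONS` list and an ASCII apostrophe; it does not split contractions such as “don’t”, which shows how quickly such rules pile up:

```python
import re

# Hypothetical abbreviation list; real tokenizers ship much larger rule sets
ABBREVIATIONS = ["U.S.A.", "Mrs.", "Mr.", "Dr."]

_abbr = "|".join(re.escape(a) for a in sorted(ABBREVIATIONS, key=len, reverse=True))
TOKEN_PATTERN = re.compile(
    rf"(?<!\w)(?:{_abbr})"  # known abbreviations keep their periods
    r"|'s\b"                # possessive clitic split off as its own token
    r"|\w+"                 # runs of word characters
    r"|[^\w\s]"             # any other single non-space symbol (punctuation)
)


def tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text)


print(tokenize("Dr. Anh Bui's research focuses on AI."))
# ['Dr.', 'Anh', 'Bui', "'s", 'research', 'focuses', 'on', 'AI', '.']
print(tokenize("U.S.A. vs USA"))  # ['U.S.A.', 'vs', 'USA']
```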

Part-of-Speech Tagging

Definition: Assigning grammatical categories (noun, verb, adjective) to words.

Examples: “Dr. Anh Bui’s research focuses on AI.”

- “Dr.”: NNP (Proper noun, singular)

- “’s”: POS (Possessive ending)

- “research”: NN (Noun, Singular)

- “focuses”: VBZ (Verb, 3rd person singular present)

- “on”: IN (Preposition)

Challenges:

- Ambiguous words (e.g., “bank” can be a noun or a verb)

- Handling domain-specific terminology (e.g., medical or legal terms)

Named Entity Recognition

Definition: Identifying and classifying named entities in text into predefined categories such as person, organization, location, etc.

Examples: “Dr. Anh Bui is a research fellow at Monash University, Australia.”

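- “Dr. Anh Bui”: PERSON

- “Monash University”: ORGANIZATION

- “Australia”: LOCATION

In the BIO tagging scheme, the first token of an entity is tagged B-TYPE, every following token of the same entity I-TYPE, and all other tokens O. For example, “Monash” → B-ORGANIZATION and “University” → I-ORGANIZATION.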

Challenges:

- Ambiguous entities (e.g., “apple” can be a fruit or a technology company)

- Handling nested entities (e.g., the location “San Francisco” nested inside the larger location “San Francisco Bay Area”)
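Converting entity spans into BIO tags is mechanical. A minimal sketch, where `spans_to_bio` is a hypothetical helper that takes `(start, end, label)` token spans with an exclusive end:

```python
def spans_to_bio(tokens, spans):
    """Turn (start, end, label) token spans (end exclusive) into BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # tokens inside the entity
    return tags


tokens = ["Dr.", "Anh", "Bui", "is", "a", "research", "fellow", "at",
          "Monash", "University", ",", "Australia", "."]
spans = [(0, 3, "PERSON"), (8, 10, "ORGANIZATION"), (11, 12, "LOCATION")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(token, tag)
```

Flat BIO tags cannot represent the nested entities mentioned above, since each token receives exactly one tag.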

Syntactic Parsing

Definition: Analyzing sentence structure (dependency or constituency parsing).

Examples: “The cat sat on the mat.”

- “sat” → root

- “cat” → subject

- “mat” → object of the preposition “on” (the phrase “on the mat” modifies “sat”)

Challenges:

- Ambiguous sentence structures (e.g., in “I saw the man with the telescope”, the phrase “with the telescope” can attach to “saw”, meaning I used the telescope, or to “the man”, meaning the man had the telescope)

- Handling long-distance dependencies (e.g., in “The book that the girl gave to the boy”, “book” is the object of “gave” even though other words separate them)

Semantic Role Labeling

Definition: Identifying roles of words in a sentence (who did what to whom).

Examples: “John gave Mary a book.”

- “John” → Giver

- “Mary” → Recipient

- “Book” → Object

Challenges:

- Understanding implicit meaning (e.g., “John helped Mary” → What did he do?)

- Handling metaphors and idioms

Coreference Resolution

Definition: Identifying which words refer to the same entity.

Examples: “Sarah loves her dog. She takes it for walks.”

- “She” → Sarah

- “It” → dog

Challenges:

- Resolving pronouns in long documents

- Handling ambiguous references (e.g., “John met Bob at the cafe. He ordered coffee.”)

High-level/Application Tasks

Sentiment Analysis

Definition: Determining the emotion behind text (positive, negative, neutral).

Examples: “The service was great, but the food was terrible!” → Mixed sentiment

Challenges:

- Sarcasm/Irony: “Oh great, another meeting.”

- Context dependency: “Not bad” (Positive or Negative?)
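A toy lexicon-based classifier makes these challenges concrete. In this sketch, `LEXICON` and `NEGATORS` are hypothetical hand-written word lists, and negation is handled by a single crude rule that flips the polarity of a word preceded by a negator:

```python
import re

# Hypothetical toy lexicon and negator list, for illustration only
LEXICON = {"great": 1, "good": 1, "nice": 1, "bad": -1, "terrible": -1, "awful": -1}
NEGATORS = {"not", "never", "no"}


def lexicon_sentiment(text: str) -> str:
    tokens = re.findall(r"[a-z']+", text.lower())
    positives = negatives = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        # Flip polarity when the previous word is a negator ("not bad" -> positive)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        if polarity > 0:
            positives += 1
        elif polarity < 0:
            negatives += 1
    if positives and negatives:
        return "mixed"
    if positives:
        return "positive"
    if negatives:
        return "negative"
    return "neutral"


print(lexicon_sentiment("The service was great, but the food was terrible!"))  # mixed
print(lexicon_sentiment("Not bad"))                     # positive
print(lexicon_sentiment("Oh great, another meeting."))  # positive (sarcasm is missed)
```

The last call shows the sarcasm problem directly: word-level rules see “great” and have no way to detect the speaker's intent.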

Machine Translation

Definition: Automatically translating text between languages.

Examples: “Hello, how are you?” → “Xin chào, dạo này bạn thế nào?”

Challenges:

- Word alignment issues (different sentence structures in languages)

- Low-resource languages (limited training data)

- Idioms (Literal translation may not work)

Text Summarization

Definition: Generating a concise summary from a longer text.

Examples: Summarizing a research paper into key takeaways.

Challenges:

- Preserving key information without losing meaning

- Avoiding hallucinations (LLMs generating false facts)

Question Answering

Definition: Answering questions based on a given text or knowledge base.

Example (Extractive QA): Text: “Einstein developed the theory of relativity.” Question: “Who developed the theory of relativity?” Answer: “Einstein”

Challenges:

- Commonsense reasoning (e.g., “Can you fry ice?”)

- Handling unanswerable questions

Text Generation

Definition: Automatically generating human-like text (e.g., chatbots, story generation).

Examples: ChatGPT answering questions, generating articles.

Challenges:

- Hallucination: LLMs may generate false facts.

- Bias in data: Reinforces social biases present in training data.

Evaluation

References:

- [1] Perplexity of fixed-length models by Hugging Face

- [2] https://towardsdatascience.com/foundations-of-nlp-explained-bleu-score-and-wer-metrics-1a5ba06d812b

- [3] https://huggingface.co/spaces/evaluate-metric/bleu

- [4] LLM Evaluation Metrics: The Ultimate LLM Evaluation Guide

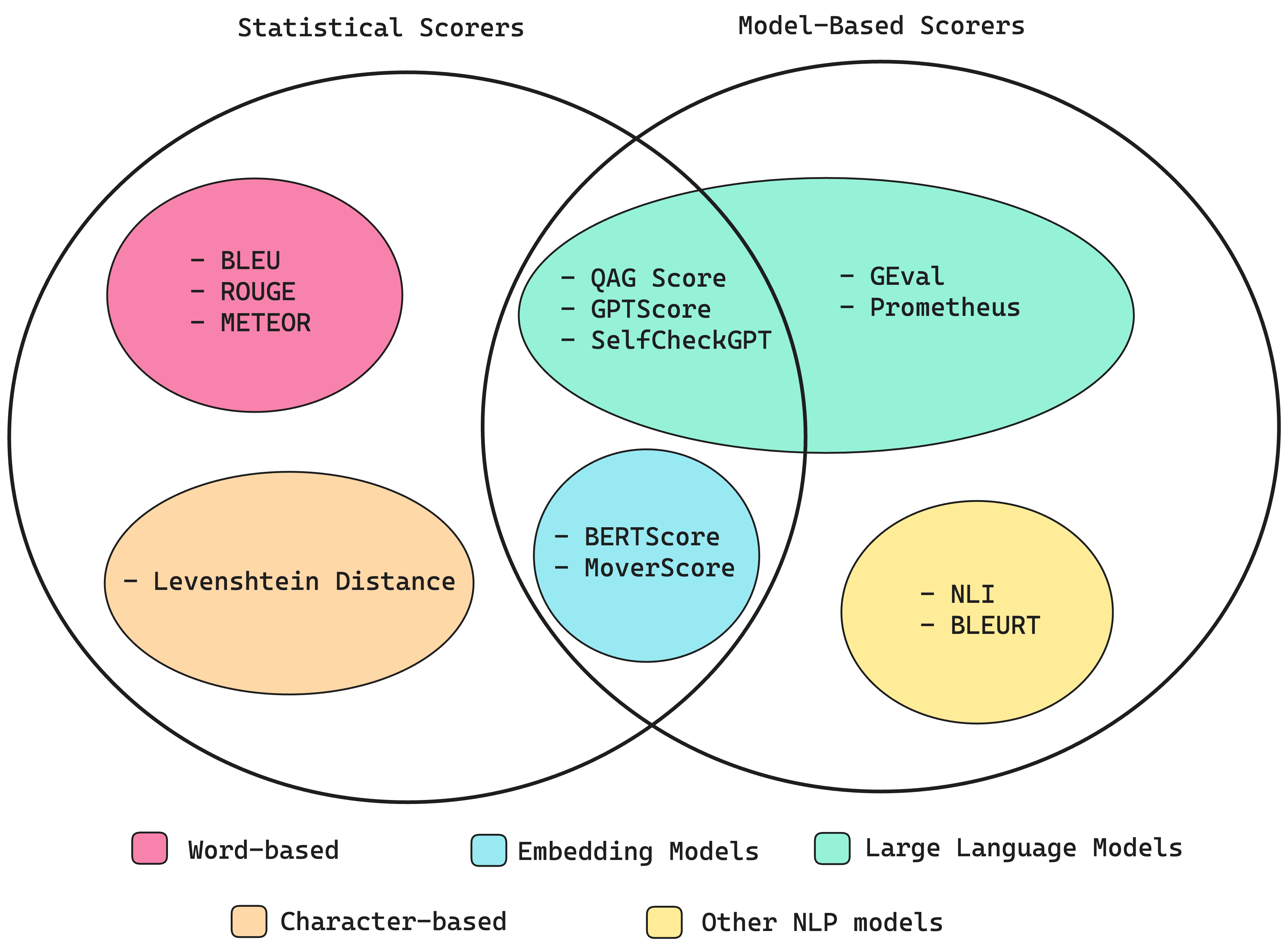

Evaluating the output of generative models presents unique challenges, particularly in assessing qualities like realism in generated images or coherence in generated text. This section explores various evaluation approaches, from traditional statistical metrics to more advanced model-based methods.

We can broadly categorize LLM evaluation metrics into two main types:

- Statistical Metrics

  - Traditional NLP metrics like Perplexity, BLEU Score, and WER

  - Advantages: Reliable, consistent, and computationally efficient

  - Limitations: Often fail to capture semantic meaning and context

- Model-Based Metrics

  - Advanced metrics like G-Eval and SelfCheckGPT

  - Advantages: Better at capturing semantic understanding and context

  - Limitations: Can be inconsistent and prone to hallucination

For example, consider the BLEU score’s limitations with word order: The sentences “The guard arrived late because of the rain” and “The rain arrived late because of the guard” would receive identical unigram BLEU scores, despite having very different meanings. While statistical metrics like BLEU are computationally efficient and consistent, they often miss such semantic nuances. Conversely, model-based scorers like SelfCheckGPT can better capture meaning but may suffer from inconsistency and hallucination issues.

Most critical evaluation criteria:

- Answer Relevancy: Determines whether an LLM output is able to address the given input in an informative and concise manner.

- Prompt Alignment: Determines whether an LLM output is able to follow instructions from your prompt template.

- Correctness: Determines whether an LLM output is factually correct based on some ground truth.

- Hallucination: Determines whether an LLM output contains fake or made-up information.

- Contextual Relevancy: Determines whether the retriever in a RAG-based LLM system is able to extract the most relevant information for your LLM as context.

- Responsible Metrics: Includes metrics such as bias and toxicity, which determine whether an LLM output contains (generally) harmful and offensive content.

- Task-Specific Metrics: Includes metrics such as summarization quality, which usually rely on custom criteria depending on the use case.

Perplexity

Perplexity is a measure of how well a language model can predict the next token in a sequence. It is defined as the inverse probability of the test set, normalized by the number of tokens (i.e., the inverse of the geometric mean of the per-token probabilities); equivalently, it is the exponential of the average cross-entropy loss.

\[\text{Perplexity}(X) = \exp\left(-\frac{1}{n} \sum_{i=1}^n \log P_{\theta}(w_i \mid w_1, w_2, \ldots, w_{i-1})\right)\]

Where \(P_{\theta}(w_i \mid w_1, w_2, \ldots, w_{i-1})\) is the probability of the next token in the sequence, obtained from a language model \(P_{\theta}\), and \(X = \{w_1, w_2, \ldots, w_n\}\) is a sequence of tokens in the test set. The lower the perplexity, the better the language model: a better model assigns higher probability to the tokens that actually appear next.


Perplexity measures the uncertainty of a probability distribution. If a random variable has more than k possible outcomes, the perplexity will still be k if the distribution is uniform over k outcomes and zero for the rest. Thus, a random variable with a perplexity of k can be described as being “k-ways perplexed,” meaning it has the same level of uncertainty as a fair k-sided die.
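Given the probability a model assigned to each token that actually occurred, the formula takes only a few lines. In this sketch the probabilities are passed in directly (made-up values) rather than produced by a real language model; the first call checks the k-sided-die intuition:

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability of the observed tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# A model that is uniform over 6 outcomes at every step is "6-ways perplexed"
print(perplexity([1 / 6] * 10))  # ≈ 6.0
# A model that is confident and right about every token has perplexity 1
print(perplexity([1.0] * 5))     # 1.0
```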

Example: Calculating perplexity with GPT-2 in 🤗 Transformers

BLEU score

The BLEU (Bilingual Evaluation Understudy) score is a metric for evaluating the quality of text generated by machine translation models by comparing it to one or more reference translations. It operates on the principle that the closer a machine-generated translation is to a professional human translation, the better it is.

Calculation of BLEU Score

N-gram Precision

The BLEU score calculates the precision of n-grams (contiguous sequences of ‘n’ words) between the candidate (machine-generated) translation and the reference translations. Commonly, unigrams (1-gram), bigrams (2-gram), trigrams (3-gram), and four-grams (4-gram) are used.

Clipped Precision

To prevent the model from gaining an artificially high precision by repeating words or phrases, BLEU employs “clipped precision.” This means that for each n-gram in the candidate translation, its count is clipped to the maximum number of times it appears in any single reference translation. For example, if the word “the” appears twice in a reference translation, and the candidate translation uses “the” four times, only two instances of “the” are considered for precision calculation.

The modified n-gram precision function is formally defined as:

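\[p_n(\hat{S}; S) := \frac{\sum_{i=1}^M \sum_{s\in G_n(\hat{y}^{(i)})} \min(C(s,\hat{y}^{(i)}), \max_{y\in S_i} C(s,y))}{\sum_{i=1}^M \sum_{s\in G_n(\hat{y}^{(i)})} C(s,\hat{y}^{(i)})}\]

Here \(\hat{S} = \{\hat{y}^{(1)}, \ldots, \hat{y}^{(M)}\}\) are the candidate translations, \(S_i\) is the set of reference translations for candidate \(\hat{y}^{(i)}\), \(G_n(y)\) is the set of n-grams in \(y\), and \(C(s, y)\) is the number of times \(s\) appears as a substring of \(y\). While this looks complicated, it simplifies to a straightforward case when dealing with one candidate sentence and one reference sentence:

\[p_n(\{\hat{y}\};\{y\}) = \frac{\sum_{s\in G_n(\hat{y})}\min(C(s,\hat{y}), C(s,y))}{\sum_{s\in G_n(\hat{y})} C(s,\hat{y})}\]

To see why the \(\min\) is needed, start from the naive, unclipped count \(\sum_{s\in G_n(\hat{y})} C(s,y)\), which counts every occurrence in \(y\) of each n-gram of \(\hat{y}\). Importantly, this counts n-substrings, not distinct n-grams. For example, when \(\hat{y} = aba\), \(y = abababa\), and \(n = 2\), both 2-substrings of \(\hat{y}\) (“ab” and “ba”) appear in \(y\) 3 times each, so the count is 6, not 2, even though \(\hat{y}\) contains only 2 bigrams. Clipping each count by \(C(s,\hat{y})\) gives \(1 + 1 = 2\), and dividing by the denominator (the total number of n-grams in \(\hat{y}\), here 2) yields a precision of 1.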

Brevity Penalty (BP)

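To discourage overly short candidate translations that might achieve high precision by omitting content, BLEU incorporates a brevity penalty. If the candidate translation is shorter than the reference, a penalty is applied to reduce the score:

\[\text{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}\]

where \(c\) is the total length of the candidate translation(s) and \(r\) is the effective reference length (for each candidate, the length of the reference closest to it in length).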

Final BLEU Score

The BLEU score is computed by combining the weighted geometric mean of the n-gram precisions with the brevity penalty:

\[\text{BLEU} = \text{BP} \times \exp\left(\sum_{n=1}^N w_n \log p_n\right)\]

Where:

- BP is the brevity penalty

- \(w_n\) is the weight for each n-gram level (commonly uniform weights \(w_n = 1/N\) with \(N = 4\))

- \(p_n\) is the clipped precision for n-grams
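A compact, sentence-level sketch of this computation in plain Python, assuming whitespace-tokenized input, uniform weights, and no smoothing (so any zero n-gram precision makes the score 0). Libraries such as sacreBLEU add smoothing and standardized tokenization on top of the same idea:

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate, references, n):
    """Modified n-gram precision p_n for one candidate against its references."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    # max count of each n-gram in any single reference
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def brevity_penalty(candidate, references):
    c = len(candidate)
    if c == 0:
        return 0.0
    # effective reference length: the closest reference length (ties -> shorter)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    return 1.0 if c > r else math.exp(1 - r / c)


def bleu(candidate, references, max_n=4):
    precisions = [clipped_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 here
    log_mean = sum(math.log(p) for p in precisions) / max_n  # uniform weights w_n = 1/N
    return brevity_penalty(candidate, references) * math.exp(log_mean)


reference = "the cat is on the mat".split()
print(clipped_precision("the the the the".split(), [reference], 1))  # 0.5
print(bleu(reference, [reference]))                                 # 1.0
```

The first call reproduces the clipping example: “the” appears at most twice in the reference, so only two of the four occurrences in the candidate count.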

Strengths of BLEU Score

- Speed and Simplicity: BLEU is quick to calculate and easy to understand

- Correlation with Human Judgment: At the corpus level, it correlates reasonably well with human evaluations of translation quality

- Language Independence: BLEU is language-independent, making it applicable across different languages

- Multiple References: It can handle multiple reference translations, accommodating the diversity in acceptable translations

- Wide Adoption: Its widespread use facilitates comparison across different models and studies

Weaknesses of BLEU Score

- Sensitivity to Exact Matches: BLEU requires exact matches between candidate and reference translations, which may not account for synonyms or paraphrasing

- Lack of Context Understanding: It doesn’t consider the overall meaning or context, focusing solely on n-gram overlap

- Ignores the importance of words: With BLEU, an incorrect word like “to” or “an” that is less relevant to the sentence is penalized just as heavily as a word that contributes significantly to the meaning of the sentence.

- Limited sensitivity to word order: Higher-order n-grams capture only local word order, and unigram precision ignores order entirely. E.g., the sentences “The guard arrived late because of the rain” and “The rain arrived late because of the guard” get the same unigram BLEU score even though their meanings are very different.

- Brevity Penalty Limitations: While the brevity penalty discourages short translations, it may not adequately reward longer, more informative translations

- Inadequate for Single Sentences: BLEU is designed for corpus-level evaluation and may not be reliable for evaluating individual sentences

WER (Word Error Rate)

Speech-to-text applications typically use Word Error Rate (WER), or its sibling Character Error Rate (CER), rather than BLEU, because their output is a transcript that should match the spoken words exactly rather than one of many acceptable phrasings. WER compares the predicted output and the target transcript word by word (or character by character, for CER) to count the number of differences between them.

The difference between prediction and target can be classified into three categories:

- Substitution: The predicted word is different from the target word.

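- Insertion: An extra word appears in the predicted output that has no counterpart in the target.

- Deletion: A word in the target is missing from the predicted output.

WER divides the total number of these errors by the number of words \(N\) in the target transcript:

\[\text{WER} = \frac{S + D + I}{N}\]

where \(S\), \(D\), and \(I\) are the numbers of substitutions, deletions, and insertions. Because insertions are counted, WER can exceed 1.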
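The error count is the minimum number of substitutions, deletions, and insertions needed to turn the target into the prediction, i.e., a word-level edit distance, which is then divided by the number of target words. A minimal sketch, assuming simple whitespace tokenization and no text normalization (production toolkits usually normalize case and punctuation first):

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate: (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        d[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,        # deletion
                          d[i][j - 1] + 1,        # insertion
                          d[i - 1][j - 1] + sub)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


target = "the guard arrived late because of the rain"
print(wer(target, "the guard arrived late because of rain"))  # 0.125
print(wer(target, "the guard arrived late because of the"))   # 0.125
```

Dropping “the” and dropping “rain” produce the same score, which is exactly the first drawback listed below.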

Drawbacks of WER:

- It does not distinguish between words that are important to the meaning of the sentence and those that are not as relevant, for example, missing the word “the” in “the guard arrived late because of the rain” is the same as missing the word “rain” in the same sentence.

- When comparing words, it does not consider whether two words are different in just a single character or are completely different, for example, “arrive” and “arrived” are considered different words.

ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE measures the overlap between the generated text (candidate) and one or more reference texts. It focuses on n-gram, sequence, and word-level similarity and is particularly recall-oriented, meaning it emphasizes how much of the reference text is captured by the generated text.

\[\text{ROUGE-N} = \frac{\sum_{r \in R} \sum_{\text{gram}_n \in r} \min \big( \text{Count}_{C}(\text{gram}_n), \text{Count}_{r}(\text{gram}_n) \big)}{\sum_{r \in R} \sum_{\text{gram}_n \in r} \text{Count}_{r}(\text{gram}_n)}\]

- Numerator: number of overlapping \(n\)-grams between candidate and references

- Denominator: total number of \(n\)-grams in references

Special cases:

- ROUGE-1 → unigram overlap

- ROUGE-2 → bigram overlap
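A minimal ROUGE-N (recall) sketch mirroring the formula above, assuming whitespace-tokenized input. Packages such as Google's `rouge-score` also report precision and F1 and apply their own tokenization:

```python
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, references, n=1):
    """Recall-oriented ROUGE-N: overlapping n-grams / total n-grams in the references."""
    cand = ngram_counts(candidate, n)
    overlap = total = 0
    for ref in references:
        ref_counts = ngram_counts(ref, n)
        overlap += sum(min(count, cand[gram]) for gram, count in ref_counts.items())
        total += sum(ref_counts.values())
    return overlap / total if total else 0.0


candidate = "the cat sat on the mat".split()
reference = "the cat is on the mat".split()
print(rouge_n(candidate, [reference], n=1))  # 0.8333333333333334
print(rouge_n(candidate, [reference], n=2))  # 0.6
```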

METEOR (Metric for Evaluation of Translation with Explicit Ordering)

Motivation:

Traditional metrics like BLEU often rely on exact word matches and can fail to capture semantic similarity or word order quality. METEOR was proposed to better align with human judgments by combining precision, recall, and linguistic resources (e.g., stemming and synonyms). It is particularly useful when nuanced language understanding is important.

Unlike purely lexical metrics like BLEU, METEOR incorporates semantic and linguistic features, aiming for a more nuanced evaluation.

Some key features of METEOR:

- Semantic Matching: Uses WordNet synonyms, stemming, and paraphrases (not antonyms) to capture semantic meaning beyond exact word matches.

- Sentence Level Evaluation: Evaluates translations at the sentence level, making it more sensitive to quality differences.

- Explicit Ordering: Applies a penalty for disordered matches, accounting for word order in the translation.

Formula

Harmonic mean of precision and recall (with recall weighted higher):

\[F = \frac{10 P R}{9P + R}\]

Where:

- \(P\) = precision: the fraction of unigrams in the generated text that are matched in the reference text

- \(R\) = recall: the fraction of unigrams in the reference text that are matched in the generated text

Penalty for disordered matches:

\[\text{Penalty} = \gamma \left( \frac{c}{m} \right)^3\]

Where:

- \(c\) = number of contiguous matched segments

- \(m\) = number of matched words

- \(\gamma = 0.5\) (default constant)

Final METEOR score:

\[\text{METEOR} = F \times (1 - \text{Penalty})\]

Advantages of METEOR

- Linguistic richness: Incorporates synonyms, stemming, and paraphrases.

- Better correlation with human judgment: Often more reliable than metrics like BLEU.

- Flexibility: Suitable for multiple languages (when appropriate linguistic resources are available).

Limitations of METEOR

- Computational cost: More resource-intensive than simpler metrics like BLEU.

- Language dependency: Relies on linguistic resources (e.g., WordNet), which may not be equally robust across languages.

- Bias toward longer outputs: Its recall orientation can favor verbose candidates.
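A simplified METEOR sketch, assuming exact unigram matches only (no stemming, synonym, or paraphrase modules) and a greedy left-to-right alignment instead of METEOR's search for the alignment with the fewest crossings. It combines the harmonic mean \(F\), the fragmentation penalty with \(\gamma = 0.5\), and the final score as in the formulas above:

```python
def simple_meteor(candidate, reference, gamma=0.5):
    """Simplified METEOR: exact unigram matches, greedy alignment."""
    used = set()
    alignment = []  # (candidate index, reference index) pairs, in candidate order
    for i, token in enumerate(candidate):
        for j, ref_token in enumerate(reference):
            if j not in used and ref_token == token:
                used.add(j)
                alignment.append((i, j))
                break
    m = len(alignment)
    if m == 0:
        return 0.0
    precision = m / len(candidate)
    recall = m / len(reference)
    f_mean = 10 * precision * recall / (9 * precision + recall)
    # a chunk is a run of matches that are adjacent in both candidate and reference
    chunks = 1
    for (i1, j1), (i2, j2) in zip(alignment, alignment[1:]):
        if not (i2 == i1 + 1 and j2 == j1 + 1):
            chunks += 1
    penalty = gamma * (chunks / m) ** 3
    return f_mean * (1 - penalty)


ref = "the guard arrived late because of the rain".split()
swapped = "the rain arrived late because of the guard".split()
print(simple_meteor(ref, ref))      # 0.9990234375
print(simple_meteor(swapped, ref))  # 0.9375
```

Because the penalty never reaches zero, even a candidate identical to the reference scores slightly below 1. Swapping “guard” and “rain” keeps precision and recall at 1 but splits the alignment into four chunks, which lowers the score.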

Levenshtein distance (Edit Distance)

Levenshtein distance, also known as edit distance, is a metric used to measure the difference between two strings. It calculates the minimum number of single-character edits (insertions, deletions, or substitutions) required to transform one string into another.

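\[\text{distance}(s_1, s_2) = \min \begin{cases} \text{distance}(s_1[1{:}], s_2) + \text{cost of deleting } s_1[0] \\ \text{distance}(s_1, s_2[1{:}]) + \text{cost of inserting } s_2[0] \\ \text{distance}(s_1[1{:}], s_2[1{:}]) + \text{cost of substituting } s_1[0] \text{ with } s_2[0] \end{cases}\]

Here \(s[1{:}]\) denotes \(s\) without its first character. Each cost is 1, except that substituting a character with an identical one costs 0, and if either string is empty, the distance is the length of the other.

Where: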

- \(s_1\) and \(s_2\) are the two strings

- \(\text{distance}(s_1, s_2)\) is the Levenshtein distance between \(s_1\) and \(s_2\)

- \(\text{cost of deleting } s_1[0]\) is the cost of deleting the first character of \(s_1\)

- \(\text{cost of inserting } s_2[0]\) is the cost of inserting the first character of \(s_2\)

- \(\text{cost of substituting } s_1[0] \text{ with } s_2[0]\) is the cost of substituting the first character of \(s_1\) with the first character of \(s_2\)

Example Calculation

Strings: A = “kitten”, B = “sitting”

Step by step calculation:

- Substitute “k” with “s”: distance(“kitten”, “sitten”) cost = 1

- Substitute “e” with “i”: distance(“sitten”, “sittin”) cost = 1

- Add “g” to the end: distance(“sittin”, “sitting”) cost = 1

Levenshtein distance = 3
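Computing this minimum efficiently is usually done with dynamic programming over prefixes of the two strings, keeping only one row of the table at a time. A minimal sketch with unit costs:

```python
def levenshtein(s1: str, s2: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    # prev[j] = distance between the first i-1 chars of s1 and the first j chars of s2
    prev = list(range(len(s2) + 1))
    for i, c1 in enumerate(s1, start=1):
        curr = [i]  # s1[:i] -> empty string needs i deletions
        for j, c2 in enumerate(s2, start=1):
            curr.append(min(
                prev[j] + 1,               # delete c1
                curr[j - 1] + 1,           # insert c2
                prev[j - 1] + (c1 != c2),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
```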

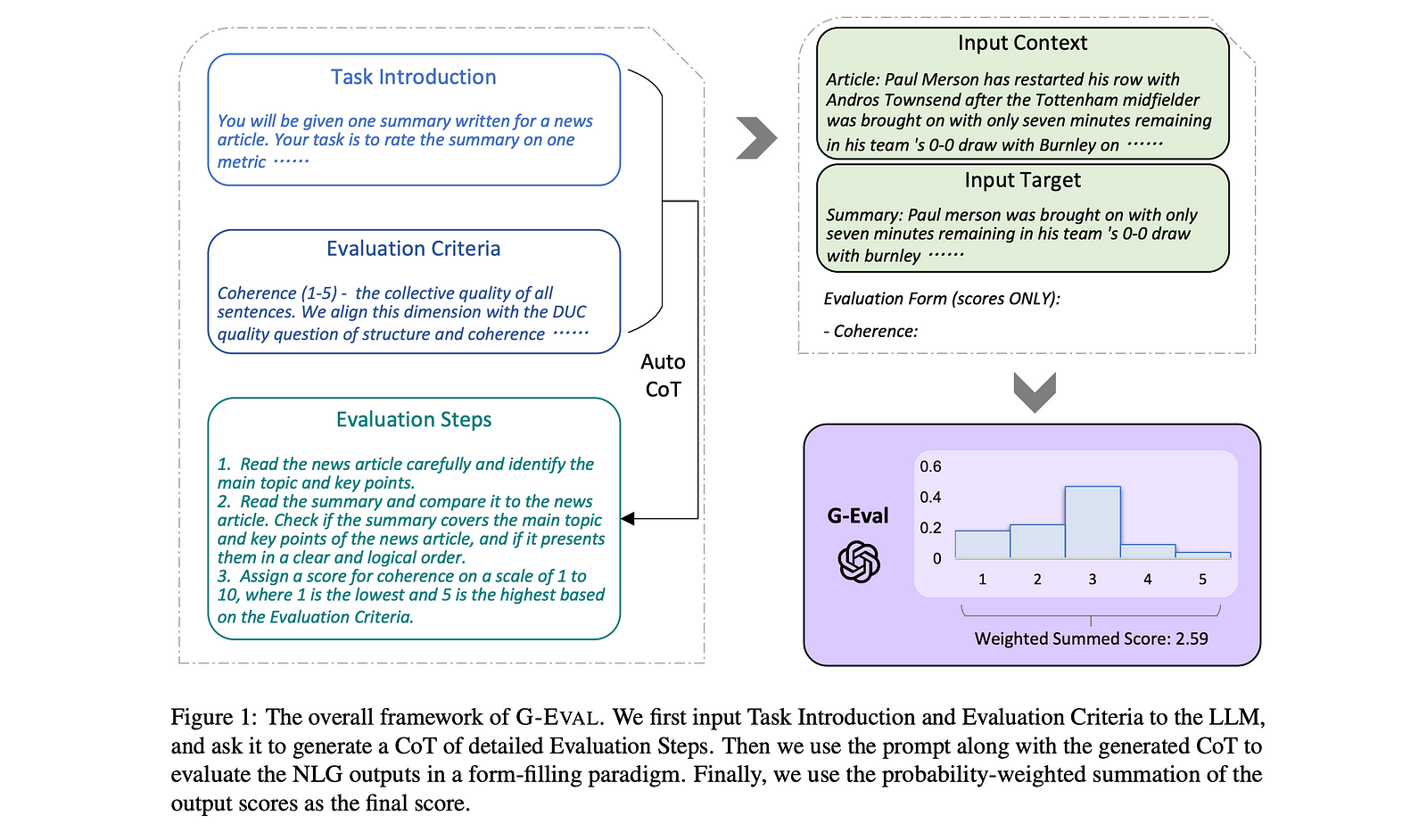

G-EVAL


G-Eval is a framework that uses a Large Language Model (LLM) as a judge to evaluate other LLMs' outputs. It lets us define custom evaluation criteria in natural language, which the system then decomposes into structured evaluation steps. The LLM judge evaluates outputs according to those steps, and G-Eval then produces a weighted score (leveraging token probabilities, confidence scores, etc.) as the final metric. Compared with traditional statistical metrics like BLEU and ROUGE, G-Eval is a more flexible and human-aligned alternative.

How G-Eval works

Criteria → Evaluation Steps

You write a criterion in natural language (e.g. “Coherence (1–5): how well does the text flow logically?”). G-Eval’s internal LLM converts that into step-by-step sub-questions or checks.

The criterion prompt for coherence in the paper looked like this:

```python
g_eval_criteria = """
Coherence (1-5) - the collective quality of all sentences. We align this dimension with
the DUC quality question of structure and coherence whereby "the summary should be
well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."
"""
```

This resulted in the following final evaluation prompt, after the evaluation steps had been generated from the criterion above:

```python
g_eval_prompt_template = """
You will be given one summary written for a news article.

Your task is to rate the summary on one metric.

Please make sure you read and understand these instructions carefully. Please keep this
document open while reviewing, and refer to it as needed.

Evaluation Criteria:

Coherence (1-5) - the collective quality of all sentences. We align this dimension with
the DUC quality question of structure and coherence whereby "the summary should be
well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."

Evaluation Steps:

1. Read the news article carefully and identify the main topic and key points.
2. Read the summary and compare it to the news article. Check if the summary covers the main
topic and key points of the news article, and if it presents them in a clear and logical order.
3. Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest
based on the Evaluation Criteria.

Example:

Source Text:

Summary:

Evaluation Form (scores ONLY):

- Coherence:
"""
```

Judging via the LLM-judge

Using those evaluation steps, the judge model assesses the candidate output (optionally together with a reference or the source context) and provides intermediate judgments.

Scoring / Aggregation with weights

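Rather than taking the single score the judge writes out, G-Eval uses the model's probabilities for each possible score token (obtained from its log probabilities) and reports the probability-weighted average score. This gives finer granularity than integer scores and reduces the randomness of relying on a single sampled output.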

By breaking down the judgment process and weighting according to confidence, G-Eval aims to improve consistency, fine-grained discrimination, and robustness compared to naive single-pass LLM scoring.
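In the G-Eval paper, the final score is the expected score under the judge's output distribution, \(\sum_i p(s_i)\, s_i\). A minimal sketch, assuming you already have the log-probabilities of each score token from an LLM API that exposes them (the input values below are hypothetical), with the probabilities renormalized because they need not sum to 1 over just the score tokens:

```python
import math


def g_eval_score(score_logprobs: dict[int, float]) -> float:
    """Probability-weighted average of the candidate scores."""
    probs = {score: math.exp(lp) for score, lp in score_logprobs.items()}
    total = sum(probs.values())
    return sum(score * p for score, p in probs.items()) / total


# Hypothetical log-probabilities for the score tokens "1".."5"
example = {1: math.log(0.05), 2: math.log(0.10), 3: math.log(0.20),
           4: math.log(0.40), 5: math.log(0.25)}
print(round(g_eval_score(example), 2))  # 3.7
```

A judge that simply emitted its most likely token would report 4 here; the weighted score of 3.7 keeps the information that lower scores were also plausible.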

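```python
from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCase, LLMTestCaseParams

# G-Eval metric whose criterion is written in natural language
correctness_metric = GEval(
    name="Correctness",
    criteria="Determine whether the actual output is factually correct based on the expected output.",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.EXPECTED_OUTPUT],
)

# Example test case; by default deepeval calls an OpenAI model as the judge,
# so an API key (e.g., OPENAI_API_KEY) typically needs to be configured.
test_case = LLMTestCase(
    input="Who developed the theory of relativity?",
    actual_output="Albert Einstein developed the theory of relativity.",
    expected_output="Einstein",
)
correctness_metric.measure(test_case)
print(correctness_metric.score, correctness_metric.reason)
```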

Tokenization

A tokenizer is an important component of any NLP model. It is responsible for converting text into tokens (e.g., words, characters, or subwords) that LLMs can work with instead of raw text. The choice of tokenizer significantly impacts the performance of the model and the ability to generalize across different tasks.

- Shorter token sequences = faster inference and training. A good tokenizer reduces unncessary tokens and reduces the vocabulary size.

- Better tokenization improves generalization. A good tokenizer handles out-of-vocabulary (OOV) words better. For example, "play", "playing", and "plays" should be tokenized as "play", "play" + "ing", and "play" + "s" rather than as three unrelated tokens, so that the model can learn the relationship between the words.

- Smaller vocabularies can work for low-resource languages.

- Custom tokenizers may improve domain-specific tasks (e.g., legal, medical, or coding), where important domain terms are missing from a general-purpose tokenizer's vocabulary.

Most common tokenization methods:

- Byte pair encoding (BPE): A tokenization algorithm that starts from individual characters (or bytes) and iteratively merges the most frequent pair of adjacent symbols in a corpus to build a vocabulary of subword units. Used in GPT models.

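- WordPiece: A tokenization algorithm similar to BPE, but instead of merging the most frequent pair it merges the pair that most increases the likelihood of the training data (roughly, the pair's frequency divided by the frequencies of its two parts). At inference time, words are split greedily into the longest matching subwords. Used in BERT models.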

```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("gpt2")
print(tokenizer.tokenize("biodegradability"))
# e.g. ['bio', 'degrad', 'ability']; the exact split depends on the tokenizer's learned vocabulary
```
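The merge-learning loop behind BPE can be sketched in plain Python. This is a simplified, character-level illustration on a hypothetical word-frequency table; production tokenizers such as GPT-2's operate on bytes and pre-split the text first, and the helper names `merge_pair`, `learn_bpe`, and `apply_bpe` are our own.

```python
from collections import Counter


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with a single merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(word_freqs: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    """Learn BPE merges from a word -> frequency table, starting from characters."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)  # ties: first pair seen wins
        merges.append(best)
        vocab = {merge_pair(symbols, best): freq for symbols, freq in vocab.items()}
    return merges


def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a (possibly unseen) word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_bpe({"play": 5, "playing": 3, "plays": 2}, num_merges=3)
print(merges)                       # [('p', 'l'), ('pl', 'a'), ('pla', 'y')]
print(apply_bpe("player", merges))  # ['play', 'e', 'r']
```

Because "playing", "plays", and even the unseen "player" all start with the learned symbol "play", the model sees the shared stem instead of unrelated tokens.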

Out-of-Vocabulary (OOV)

Reference:

- [1] https://spotintelligence.com/2024/10/08/out-of-vocabulary-oov-words/

When tokenizing text, words that do not exist in the tokenizer's vocabulary, called Out-of-Vocabulary (OOV) words, can be challenging. There are several strategies to handle them:

Subword Tokenization

Instead of treating words as atomic units, subword tokenization breaks words into smaller, reusable parts. This can be done by Byte Pair Encoding (BPE) or WordPiece. This helps handle new words, misspellings, and rare words effectively.

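However, a word that contains characters missing from the base vocabulary can still end up as an unknown token (byte-level BPE, as used in GPT-2, avoids this by starting from all 256 byte values), and very rare words may be split into many short pieces that carry little meaning individually.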

Character-Level Tokenization

This approach treats each character as a token, so there are essentially no OOV words. However, it produces much longer sequences, and individual characters carry little meaning on their own, which makes it harder for the model to learn the context of the text.

Still, this technique is useful for morphologically rich languages (e.g., Finnish or Turkish), where grammatical meaning is expressed by changing the form of words (through inflection and derivation) rather than by word order or separate function words, so a word-level vocabulary would grow very large.

Fallback to Unknown Token

If a word is not in the tokenizer's vocabulary, replace it with a special [UNK] token. This is the simplest approach and always works, but all information about the original word is lost, which can reduce model performance.

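Combining this technique with subword tokenization limits the damage: [UNK] is only needed when a word cannot be built from known subwords, so most words keep at least a partial representation of their meaning.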
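Subword segmentation with an [UNK] fallback can be illustrated with the greedy longest-match-first procedure used by BERT's WordPiece tokenizer. This is a minimal sketch with a hypothetical toy vocabulary; real BERT tokenizers also lowercase, split on punctuation, and cap word length before this step.

```python
def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first WordPiece segmentation of a single word.

    Non-initial pieces carry the "##" continuation prefix. If any part of the
    word cannot be matched, the whole word falls back to the unknown token.
    """
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary
vocab = {"play", "##ing", "##s", "un", "##believ", "##able"}
print(wordpiece_tokenize("playing", vocab))       # ['play', '##ing']
print(wordpiece_tokenize("unbelievable", vocab))  # ['un', '##believ', '##able']
print(wordpiece_tokenize("xylophone", vocab))     # ['[UNK]']
```

Like BERT's implementation, this sketch maps the whole word to [UNK] when any piece fails, so the partial meaning survives only when every piece is in the vocabulary.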

Using Lookup Table

Maintain an external lookup table of OOV words and their corresponding embeddings. When an OOV word is encountered, its embedding is taken from the lookup table; otherwise, the word is processed by the tokenizer as normal.

Subword and Contextual Embeddings (FastText, ELMo)

- FastText: FastText is an extension of Word2Vec that handles OOV words by using subword information. Instead of learning embeddings only for entire words, FastText learns embeddings for character n-grams, which allows it to build meaningful representations for OOV words from their constituent subwords. For example, FastText can produce an embedding for “blockchain”, even if the word was not part of the training data, by combining the embeddings of n-grams such as “block” and “chain”.

- ELMo: ELMo uses a context-sensitive approach. Its input layer builds word representations from characters (with a character-level CNN), so it can represent any word, and a bidirectional LSTM then produces context-specific embeddings. This allows it to generate a representation for an OOV word based on both its spelling and the context in which it appears.
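The FastText idea can be sketched as follows: split a word into character n-grams (with `<` and `>` boundary markers, and n from 3 to 6 by default in FastText) and build its vector from the n-gram vectors. This is a simplified illustration: real FastText hashes n-grams into a fixed number of buckets and learns their vectors during training, whereas here the vectors are a hypothetical hand-made table, combined by averaging. The function names are our own.

```python
def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> list[str]:
    """FastText-style character n-grams of `word`, with '<' and '>' boundary markers."""
    token = f"<{word}>"
    grams = [token[i:i + n]
             for n in range(n_min, n_max + 1)
             for i in range(len(token) - n + 1)]
    # The whole bracketed word is kept as an extra feature
    if token not in grams:
        grams.append(token)
    return grams


def oov_vector(word: str, ngram_vectors: dict[str, list[float]], dim: int) -> list[float]:
    """Average the vectors of the word's known n-grams (zeros if none are known)."""
    known = [ngram_vectors[g] for g in char_ngrams(word) if g in ngram_vectors]
    if not known:
        return [0.0] * dim
    return [sum(column) / len(known) for column in zip(*known)]


print(char_ngrams("where", 3, 3))  # ['<wh', 'whe', 'her', 'ere', 're>', '<where>']

# Hypothetical 2-d n-gram vectors, as if learned during training
ngram_vectors = {"block": [1.0, 0.0], "chain": [0.0, 1.0]}
print(oov_vector("blockchain", ngram_vectors, dim=2))  # [0.5, 0.5]
```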

What is NLTK?

- Natural Language Toolkit (NLTK): A library for building programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning.

Transformer

Most modern Large Language Models (LLMs) are built on the Transformer architecture, which stacks multiple layers of transformer blocks. Each block uses multi-head self-attention to capture relationships between tokens, allowing the model to understand words in the context of broader sequences. This architecture is the foundation of modern NLP and of many multimodal models.

Transformer Block

A transformer block consists of two main components:

- Multi-Head Self-Attention – lets each token attend to others in the sequence, capturing dependencies regardless of distance.

- Feed-Forward Network (FFN) – applies non-linear transformations to enrich token representations.

Residual connections and layer normalization stabilize training and help preserve information flow.

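```python
import torch.nn as nn


class TransformerBlock(nn.Module):
    def __init__(self, embed_dim, num_heads):
        super().__init__()
        # batch_first=True: inputs are (batch, seq_len, embed_dim)
        self.attention = nn.MultiheadAttention(embed_dim, num_heads, batch_first=True)
        # Position-wise feed-forward network with a 4x hidden expansion
        self.feed_forward = nn.Sequential(
            nn.Linear(embed_dim, 4 * embed_dim),
            nn.ReLU(),
            nn.Linear(4 * embed_dim, embed_dim),
        )
        self.layer_norm1 = nn.LayerNorm(embed_dim)
        self.layer_norm2 = nn.LayerNorm(embed_dim)

    def forward(self, x):
        # Self-attention: queries, keys and values all come from x
        attn_output, _ = self.attention(x, x, x)
        x = self.layer_norm1(x + attn_output)  # residual connection + LayerNorm
        ff_output = self.feed_forward(x)
        return self.layer_norm2(x + ff_output)  # residual connection + LayerNorm
```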

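Tokenization

Before text can be processed, it must be tokenized into smaller units (tokens) such as words or subwords. Each token is mapped to an embedding, a high-dimensional vector that captures semantic meaning, and these embeddings are the input to the transformer. The example below runs BERT and takes its final-layer hidden states, which are contextual token embeddings.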

```python
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")
model = AutoModel.from_pretrained("bert-base-uncased")

text = "Hello, how are you?"
inputs = tokenizer(text, return_tensors="pt")  # input_ids, token_type_ids, attention_mask
outputs = model(**inputs)

# Contextual token embeddings from the last layer: (batch, seq_len, 768) for bert-base
embeddings = outputs.last_hidden_state
```

Cross-Attention and Self-Attention

Transformers rely heavily on attention mechanisms, which compute relationships between tokens. There are two main types:

| Aspect | Self-Attention | Cross-Attention |

|---|---|---|

| Definition | Focuses on relationships between elements of the same sequence (e.g., within a sentence). | Focuses on relationships between elements of one sequence and another sequence (e.g., query and context). |

| Inputs | Single sequence (e.g., the same sequence is used for queries, keys, and values). | Two sequences: one provides queries, and the other provides keys and values. |

| Purpose | Captures intra-sequence dependencies, helping the model understand context within the same sequence. | Captures inter-sequence dependencies, aligning information between different sequences. |

| Key Benefit | Helps the model understand contextual relationships within a sequence. | Enables the model to incorporate external information from another sequence. Very important for multi-modal tasks. |

Details of Attention Mechanism

The table below lists the operations and tensor dimensions of the original attention mechanism and of the two additional variants (concatenative and additive) proposed in our paper (KPOP).

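| Operation | Original Operation | Original Dim | Concatenative Operation | Concatenative Dim | Additive Operation | Additive Dim |
|---|---|---|---|---|---|---|
| Q | \(W_q Z\) | \(b \times m_z \times d\) | \(W_q Z\) | \(b \times m_z \times d\) | \(W_q Z\) | \(b \times m_z \times d\) |
| K | \(W_k C\) | \(b \times m_c \times d\) | \(W_k\, \mathrm{cat}(C, \mathrm{repeat}(p, b))\) | \(b \times (m_c + m_p) \times d\) | \(W_k (C + \mathrm{repeat}(p, b))\) | \(b \times m_c \times d\) |
| V | \(W_v C\) | \(b \times m_c \times d\) | \(W_v\, \mathrm{cat}(C, \mathrm{repeat}(p, b))\) | \(b \times (m_c + m_p) \times d\) | \(W_v (C + \mathrm{repeat}(p, b))\) | \(b \times m_c \times d\) |
| A | \(\sigma(QK^T/\sqrt{d})\) | \(b \times m_z \times m_c\) | \(\sigma(QK^T/\sqrt{d})\) | \(b \times m_z \times (m_c + m_p)\) | \(\sigma(QK^T/\sqrt{d})\) | \(b \times m_z \times m_c\) |
| O | \(AV\) | \(b \times m_z \times d\) | \(AV\) | \(b \times m_z \times d\) | \(AV\) | \(b \times m_z \times d\) |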

Note: cat(), repeat(·, b), and σ() denote concatenation (along dim=1), repeating an input b times (along dim=0), and softmax (along dim=2), respectively.

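In the table, Z is the input sequence (i.e., the query) and C is the context sequence to be attended to; b is the batch size, \(m_z\), \(m_c\), and \(m_p\) are the lengths of Z, C, and p, and d is the embedding dimension. In self-attention, Z and C are the same.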

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CrossAttention(nn.Module):
    def __init__(self, embed_dim, num_heads, dropout=0.1):
        """
        Cross-Attention module.

        Args:
            embed_dim (int): Dimension of the input embeddings.
            num_heads (int): Number of attention heads.
            dropout (float): Dropout probability.
        """
        super(CrossAttention, self).__init__()
        self.embed_dim = embed_dim
        self.num_heads = num_heads
        self.head_dim = embed_dim // num_heads
        assert self.head_dim * num_heads == embed_dim, "embed_dim must be divisible by num_heads"

        # Query, Key, and Value linear projections
        self.query_proj = nn.Linear(embed_dim, embed_dim)
        self.key_proj = nn.Linear(embed_dim, embed_dim)
        self.value_proj = nn.Linear(embed_dim, embed_dim)

        # Output projection
        self.out_proj = nn.Linear(embed_dim, embed_dim)
        self.dropout = nn.Dropout(dropout)

    def forward(self, query, key, value, mask=None):
        """
        Forward pass for cross-attention.

        Args:
            query (torch.Tensor): Query tensor of shape (batch_size, query_len, embed_dim).
            key (torch.Tensor): Key tensor of shape (batch_size, key_len, embed_dim).
            value (torch.Tensor): Value tensor of shape (batch_size, key_len, embed_dim).
            mask (torch.Tensor, optional): Mask tensor of shape (batch_size, 1, 1, key_len) or (batch_size, 1, query_len, key_len).
                Used to mask out invalid positions.

        Returns:
            torch.Tensor: Output tensor of shape (batch_size, query_len, embed_dim).
        """
        batch_size, query_len, embed_dim = query.size()
        key_len = key.size(1)

        # Project query, key, and value
        Q = self.query_proj(query).view(batch_size, query_len, self.num_heads, self.head_dim).transpose(1, 2)  # (batch_size, num_heads, query_len, head_dim)
        K = self.key_proj(key).view(batch_size, key_len, self.num_heads, self.head_dim).transpose(1, 2)  # (batch_size, num_heads, key_len, head_dim)
        V = self.value_proj(value).view(batch_size, key_len, self.num_heads, self.head_dim).transpose(1, 2)  # (batch_size, num_heads, key_len, head_dim)

        # Compute scaled dot-product attention
        scores = torch.matmul(Q, K.transpose(-2, -1)) / (self.head_dim ** 0.5)  # (batch_size, num_heads, query_len, key_len)
        if mask is not None:
            scores = scores.masked_fill(mask == 0, float('-inf'))  # Apply mask
        attn_weights = F.softmax(scores, dim=-1)  # (batch_size, num_heads, query_len, key_len)
        attn_weights = self.dropout(attn_weights)

        # Weighted sum of values
        attn_output = torch.matmul(attn_weights, V)  # (batch_size, num_heads, query_len, head_dim)
        attn_output = attn_output.transpose(1, 2).contiguous().view(batch_size, query_len, embed_dim)  # (batch_size, query_len, embed_dim)

        # Final output projection
        output = self.out_proj(attn_output)  # (batch_size, query_len, embed_dim)
        return output
```

Why Multi-Head Attention?

Multi-head attention is a core innovation of the Transformer architecture. It enables the model to capture a richer variety of relationships within the input by combining multiple perspectives in parallel.

- Diverse Representations: Each attention head has its own set of learned weights, allowing it to focus on different parts of the sequence or capture different types of dependencies (e.g., short-range vs. long-range). Together, these heads provide a more comprehensive understanding of context.

- Efficient Use of Dimensions: The embedding dimension is split across the heads, so each head works in a lower-dimensional subspace (e.g., 8 heads of size 64 for a 512-dimensional model, as in the original Transformer). The head outputs are concatenated and projected back to the full dimension, so the total cost stays similar to single-head attention over the full dimension.

Positional Embedding

The key challenge for transformers is handling the sequential nature of text, where the order of tokens matters. Self-attention by itself is order-agnostic: it treats the input as a set of tokens, with no inherent knowledge of their order or relative positions (permuting the input tokens simply permutes the outputs). Without positional information, the model would lose word order; for example, it could not distinguish between “the cat sat on the mat” and “the mat sat on the cat”.

To address this, transformers use positional embeddings, which are added to the token embeddings to give the model information about each token's position. They can be fixed, such as the sinusoidal encoding from the original Transformer implemented below, or learned during training.

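```python
import math

import torch
import torch.nn as nn


class PositionalEncoding(nn.Module):
    def __init__(self, d_model, max_len=5000):
        super(PositionalEncoding, self).__init__()
        pe = torch.zeros(max_len, d_model)
        position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)
        # 1 / 10000^(2i / d_model) for each even dimension index 2i
        div_term = torch.exp(torch.arange(0, d_model, 2).float() * -(math.log(10000.0) / d_model))
        pe[:, 0::2] = torch.sin(position * div_term)  # even dimensions
        pe[:, 1::2] = torch.cos(position * div_term)  # odd dimensions
        # Buffer: saved with the model and moved by .to(device), but not trained
        self.register_buffer("pe", pe.unsqueeze(0))  # (1, max_len, d_model)

    def forward(self, x):
        # x: (batch, seq_len, d_model); add the encodings of the first seq_len positions
        return x + self.pe[:, : x.size(1)]
```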

Formally, the input representation of the token at position \(i\) is the sum of its token embedding and its positional embedding:

\[E_{i} = E_{token}(x_i) + E_{positional}(i)\]where \(E_{token}(x_i)\) is the embedding of token \(x_i\) and \(E_{positional}(i)\) is the positional embedding for position \(i\).

Positional embeddings fall into two broad families. Absolute positional embeddings assign a vector to each position and add it to the token embedding. They come in two variants:

- Fixed (sinusoidal) positional embedding: generated by a deterministic mathematical function of the position, as in the original Transformer paper.

- Learned positional embedding: a trainable vector per position, learned during training as in BERT, which gives the model more flexibility to capture task-specific positional patterns but does not extend beyond the maximum length used in training.

Relative Positional Embedding: The positional information is not added to the input embeddings but incorporated directly into the attention mechanism, by modifying the attention scores to depend on the relative distance between tokens. Because it depends on offsets rather than absolute positions, it handles variable-length sequences naturally and can generalize better to sequences longer than those seen in training.
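As a rough sketch of the relative approach, the module below learns one scalar per (clipped) relative distance and per head, and adds it to the attention logits. This is a simplified variant of the learned relative bias used in T5 (T5 groups distances into logarithmic buckets instead of clipping them); the class name and the `max_distance` parameter are our own.

```python
import math

import torch
import torch.nn as nn


class RelativePositionBias(nn.Module):
    """Learned scalar bias for each clipped relative distance (key_pos - query_pos), per head."""

    def __init__(self, num_heads: int, max_distance: int = 16):
        super().__init__()
        self.max_distance = max_distance
        # One learnable bias per distance in [-max_distance, max_distance] and per head
        self.bias = nn.Embedding(2 * max_distance + 1, num_heads)

    def forward(self, q_len: int, k_len: int) -> torch.Tensor:
        q_pos = torch.arange(q_len)[:, None]
        k_pos = torch.arange(k_len)[None, :]
        rel = (k_pos - q_pos).clamp(-self.max_distance, self.max_distance)
        bias = self.bias(rel + self.max_distance)  # (q_len, k_len, num_heads)
        return bias.permute(2, 0, 1)               # (num_heads, q_len, k_len)


# Usage: add the bias to the attention logits before the softmax
batch, heads, q_len, k_len, head_dim = 2, 4, 5, 6, 8
Q = torch.randn(batch, heads, q_len, head_dim)
K = torch.randn(batch, heads, k_len, head_dim)
rel_bias = RelativePositionBias(num_heads=heads)
scores = Q @ K.transpose(-2, -1) / math.sqrt(head_dim) + rel_bias(q_len, k_len)
attn = scores.softmax(dim=-1)  # (batch, heads, q_len, k_len)
```

Since the bias depends only on `k_pos - q_pos`, the same parameters apply to any sequence length; distances beyond `max_distance` share one bias value.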

Rotary Positional Embedding (RoPE)

KV Caching

BERT

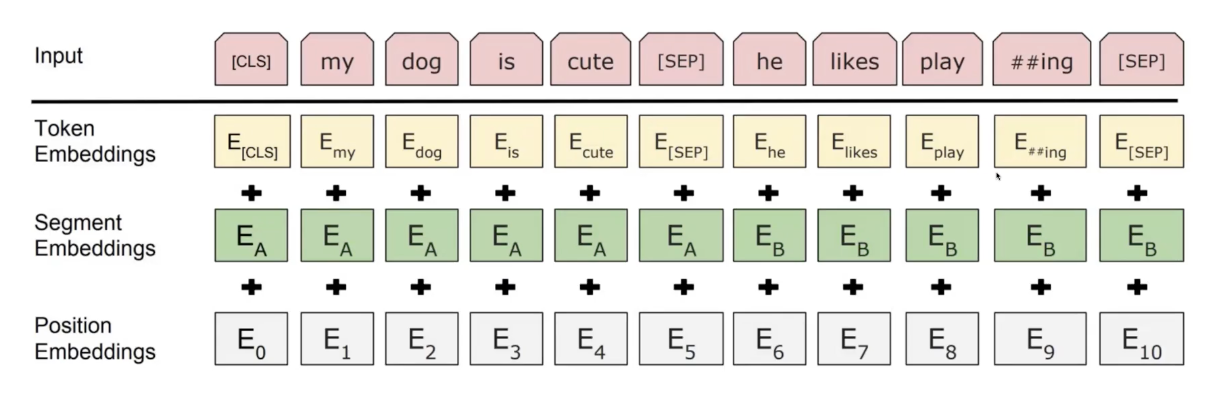

Tokenization in BERT

- WordPiece: A tokenization algorithm similar to BPE, but it chooses each merge by how much it increases the likelihood of the training data rather than by raw pair frequency, and at inference time it splits words greedily into the longest matching subwords.

- Example: For illustration, a word like “university” might be split into the subword units “un”, “##iver”, and “##sity” (in practice, frequent words like this are usually single tokens in BERT's vocabulary). The “##” prefix indicates that a subword unit continues a larger word. This lets the model represent out-of-vocabulary words by combining subword units it has seen during training, and it keeps the vocabulary small while reducing the number of out-of-vocabulary words.

Special tokens in BERT:

- [CLS]: A special token added to the beginning of each input sequence in BERT. Its final hidden state serves as an aggregate representation of the whole sequence and is used for classification tasks.

- [SEP]: A special token added at the end of each sentence in BERT; in a sentence pair, it separates the two sentences.

- [MASK]: A special token that is used to mask a word in the input sequence during the pretraining of BERT.

- [UNK]: A special token that is used to represent out-of-vocabulary words in BERT.

- [PAD]: A special token that is used to pad input sequences to the same length in BERT.

Learnable position embeddings: In BERT, the position embeddings are learned during training (one vector per absolute position, up to 512 positions). This is in contrast to the sinusoidal embeddings of the original Transformer, which are computed by a fixed function and do not change during training.

Segment embeddings: In BERT, each token is also assigned a segment embedding indicating whether it belongs to the first or the second sentence of a sentence pair, which lets the model distinguish the two sentences. There are only two segment values: 0 and 1.
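The special tokens and segment ids fit together as in this sketch, which assembles a sentence-pair input by hand. It is a simplified illustration (real tokenizers also map tokens to ids and truncate long inputs), and the function name is hypothetical; with Hugging Face, calling a BERT tokenizer on two texts, e.g. `tokenizer("my dog", "it barks")`, returns the equivalent `input_ids`, `token_type_ids`, and `attention_mask`.

```python
def build_bert_pair(tokens_a: list[str], tokens_b: list[str], max_len: int):
    """Build BERT-style input for a sentence pair: [CLS] A [SEP] B [SEP] + padding."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    if len(tokens) > max_len:
        raise ValueError("pair too long; truncation is not handled in this sketch")
    # Segment 0: [CLS], sentence A and its [SEP]; segment 1: sentence B and the final [SEP]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    attention_mask = [1] * len(tokens)  # 1 = real token, 0 = padding
    pad = max_len - len(tokens)
    tokens += ["[PAD]"] * pad
    segment_ids += [0] * pad
    attention_mask += [0] * pad
    return tokens, segment_ids, attention_mask


tokens, segments, mask = build_bert_pair(["my", "dog"], ["it", "barks"], max_len=8)
print(tokens)    # ['[CLS]', 'my', 'dog', '[SEP]', 'it', 'barks', '[SEP]', '[PAD]']
print(segments)  # [0, 0, 0, 0, 1, 1, 1, 0]
print(mask)      # [1, 1, 1, 1, 1, 1, 1, 0]
```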

Masked Prediction in BERT

- Masked Language Model (MLM): A pretraining objective for BERT that involves predicting the masked words in the input sequence. The masked words are replaced with the [MASK] token, and the model is trained to predict the original words in the input sequence.

- Next Sentence Prediction (NSP): A pretraining objective for BERT that involves predicting whether the second sentence of a pair actually follows the first in the original text. The two sentences are joined with the [SEP] token and the prediction is made from the [CLS] representation; in the BERT paper, half of the pairs are true consecutive sentences and half use a random second sentence.
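The MLM corruption step can be sketched as below. It follows the recipe from the BERT paper: 15% of the tokens are selected for prediction, and of those, 80% are replaced with [MASK], 10% with a random token, and 10% are left unchanged, so the model cannot rely on always seeing [MASK]. The function name, the toy vocabulary, and the use of `None` for positions without a prediction are our own choices for this sketch.

```python
import random

SPECIAL_TOKENS = {"[CLS]", "[SEP]", "[PAD]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (corrupted_inputs, labels); labels are None where no prediction is made."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            inputs.append(tok)
            labels.append(None)  # not selected: no loss at this position
            continue
        labels.append(tok)  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs.append("[MASK]")
        elif r < 0.9:
            inputs.append(rng.choice(vocab))  # random replacement
        else:
            inputs.append(tok)  # keep the original token
    return inputs, labels


tokens = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
inputs, labels = mask_tokens(tokens, vocab=["dog", "ran", "hat"], seed=0)
print(inputs)
print(labels)
```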


How to train a LLM

References:

- [1] Understanding and Using Supervised Fine-Tuning (SFT) for Language Models by Cameron Wolfe

- [2] LLM Training: RLHF and Its Alternatives by Sebastian Raschka

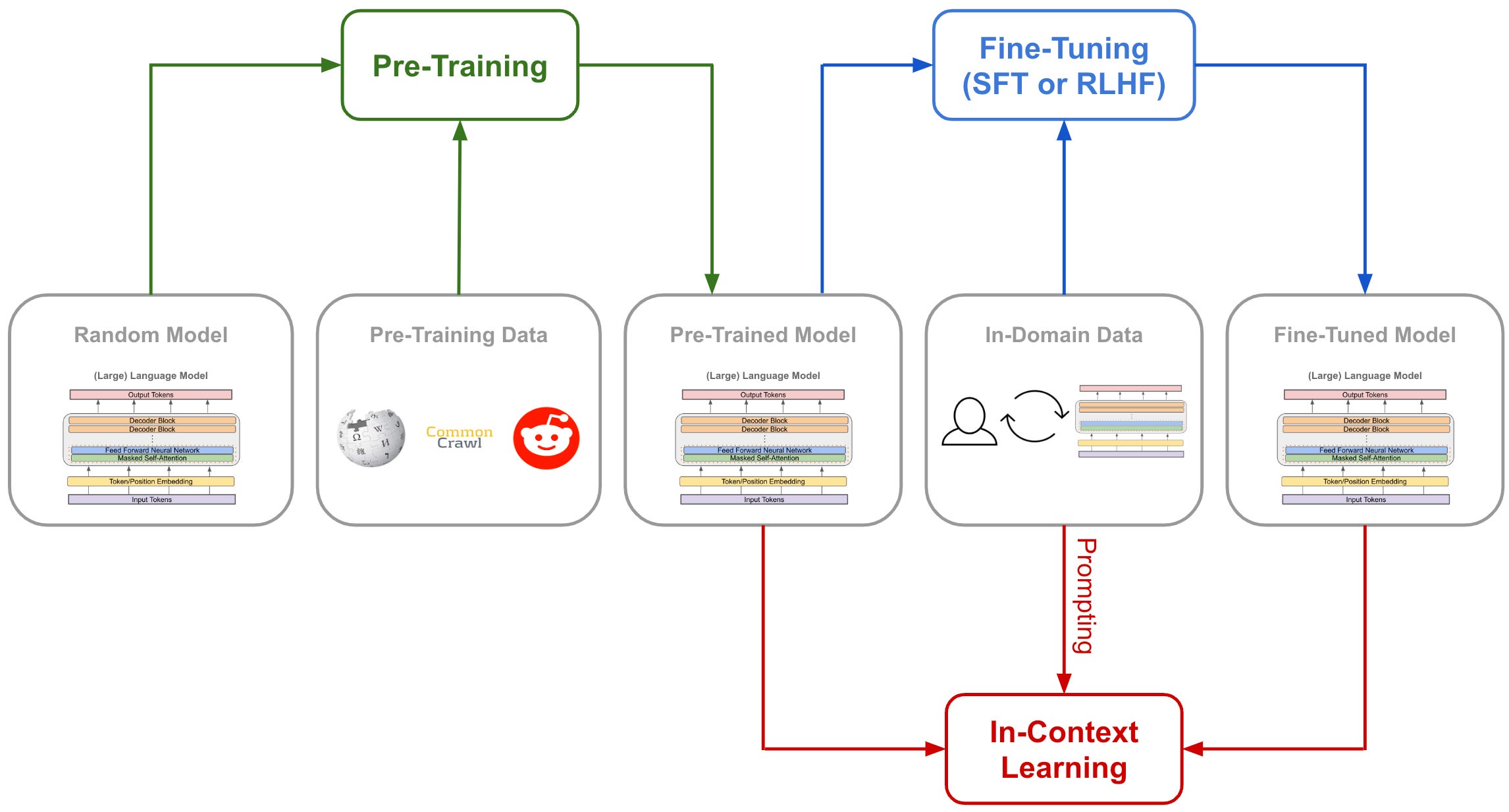

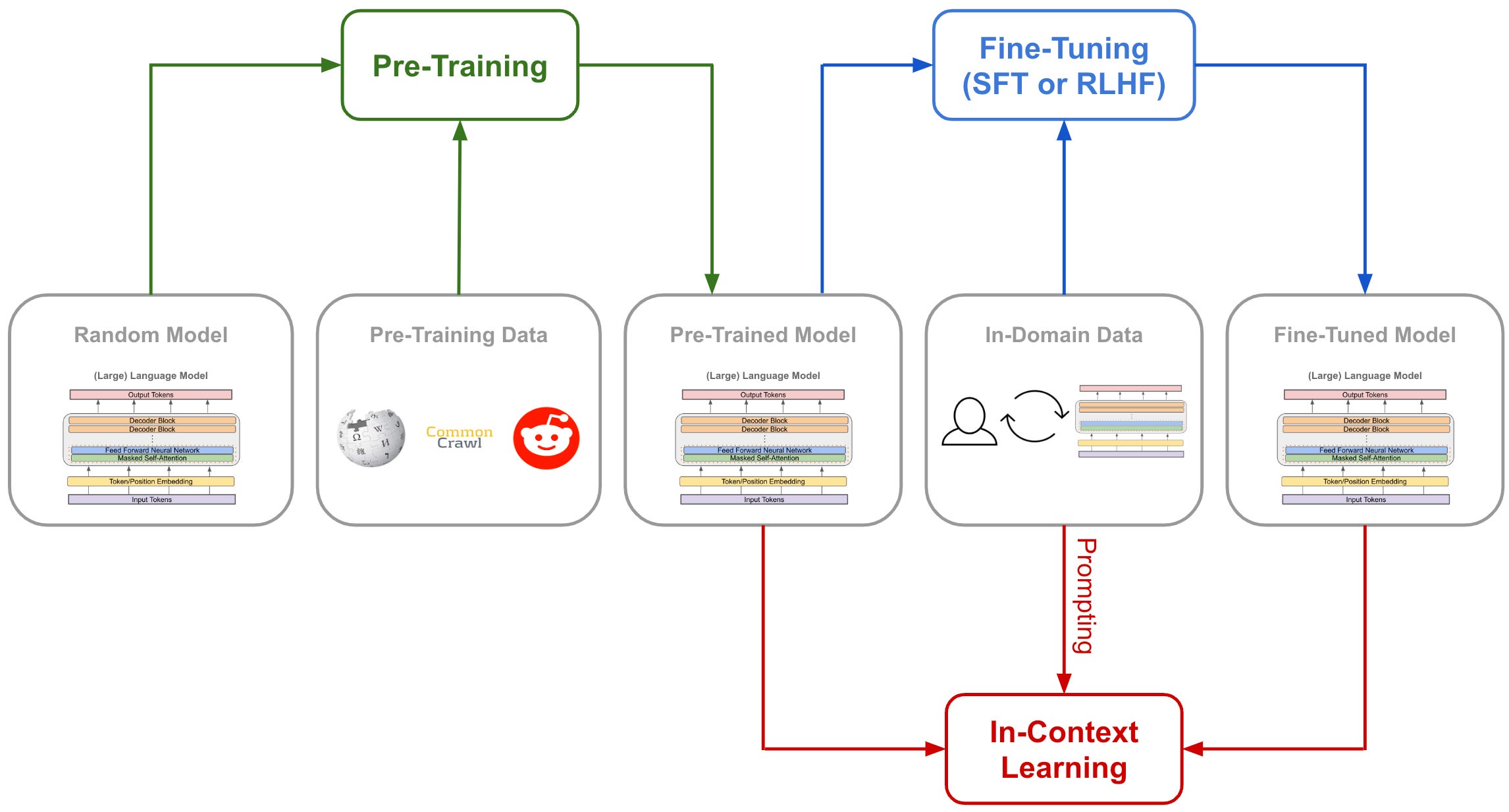

Unsupervised Pre-training

Goal:

Unsupervised pre-training trains a model on a large-scale, unlabeled text corpus to learn general language representations (e.g., BERT) or general-purpose language generation (e.g., GPT). The goal is to learn the structure, syntax, and semantics of language without explicit task-specific labels.

Process:

Uses unsupervised objectives like:

- Causal Language Modeling (CLM): Predict the next token in a sequence.

- Masked Language Modeling (MLM): Predict masked tokens in a sequence.

- Denoising: Reconstruct corrupted text (e.g., T5’s span corruption).

Loss Function: Cross-entropy loss for token prediction.
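For causal language modeling, the cross-entropy loss compares the prediction at each position with the token that actually comes next, which amounts to shifting the labels by one. A minimal PyTorch sketch, assuming the model has already produced logits of shape `(batch, seq_len, vocab_size)`; Hugging Face causal-LM models perform an equivalent shift internally when `labels` are passed.

```python
import torch
import torch.nn.functional as F


def causal_lm_loss(logits: torch.Tensor, input_ids: torch.Tensor) -> torch.Tensor:
    """Next-token cross-entropy.

    logits:    (batch, seq_len, vocab_size) model outputs
    input_ids: (batch, seq_len) token ids
    The prediction made at position t is scored against the token at t + 1.
    """
    shift_logits = logits[:, :-1, :]  # predictions for positions 0 .. T-2
    shift_labels = input_ids[:, 1:]   # the "next token" targets 1 .. T-1
    return F.cross_entropy(
        shift_logits.reshape(-1, shift_logits.size(-1)),
        shift_labels.reshape(-1),
    )


torch.manual_seed(0)
vocab_size, batch, seq_len = 10, 2, 5
logits = torch.randn(batch, seq_len, vocab_size)
input_ids = torch.randint(0, vocab_size, (batch, seq_len))
print(causal_lm_loss(logits, input_ids))
```

With uniform logits the loss equals \(\log(\text{vocab size})\), a useful sanity check for an untrained model.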

Advantages:

- Leverages massive unlabeled datasets.

- Provides a general-purpose foundation for language understanding.

- Highly scalable.

Limitations:

Produces models that are task-agnostic and need further fine-tuning for specific tasks. Such models may generate fluent text that is misaligned with user intent or preferences.

Supervised Fine-tuning (SFT)

Goal: Adapt a pretrained model to specific tasks (e.g., summarization, classification, translation) through supervised learning on labeled datasets. SFT is simple and cheap to use and is a useful tool for aligning language models, which has made it popular within the open-source LLM research community and beyond [1].

Process:

Fine-tunes the pretrained model on task-specific datasets with labels. Aligns the model’s output with desired task objectives.

Loss Function: Cross-entropy loss or task-specific loss (e.g., mean squared error for regression tasks).

Advantages:

- Tailors the model for specific tasks, improving task performance.

- Reduces the amount of task-specific data needed compared to training from scratch.

Limitations:

- Performance depends on the quality and quantity of labeled data.

- Fine-tuning on one task may reduce performance on others (catastrophic forgetting).

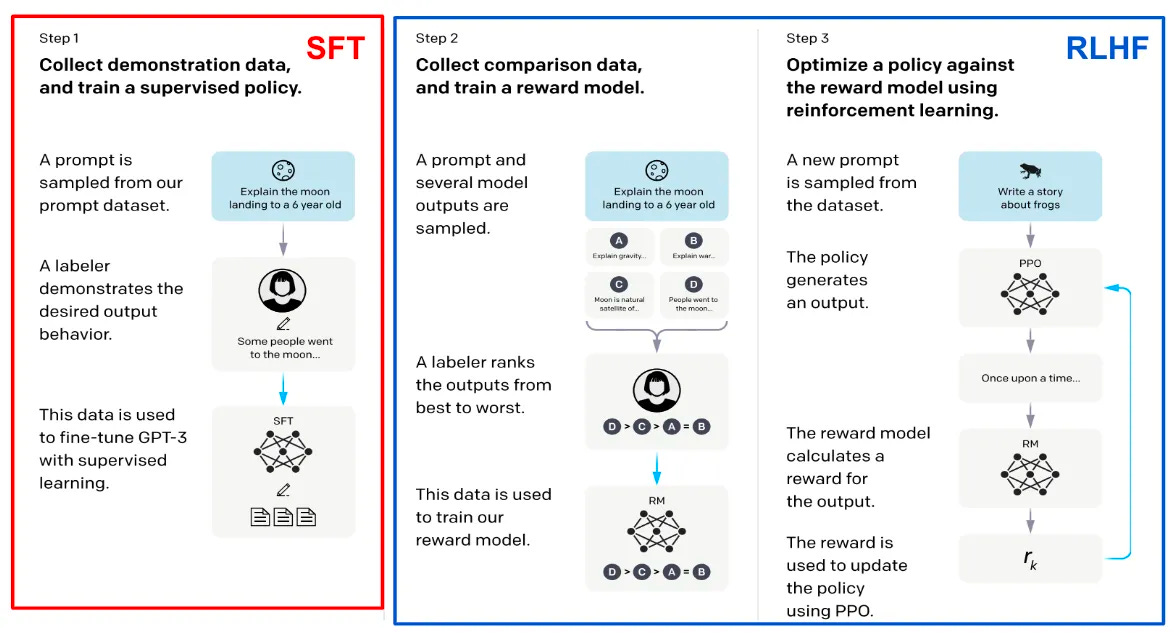

Reinforcement Learning with Human Feedback (RLHF)

Goal: Fine-tuning a model using reinforcement learning where the reward signal is derived from human feedback to align the model with human preferences and values beyond task-specific objectives.

Process:

- Starting Point: Start from a pretrained model, typically one that has already been supervised fine-tuned (SFT).

- Feedback Collection: Collect human-labeled data ranking model outputs (e.g., ranking completions for prompts).

- Reward Model (RM): Train a reward model that assigns a scalar score to a (prompt, response) pair so that responses humans prefer receive higher scores; in other words, it learns to mimic human preferences for the task.

- Policy Optimization: Fine-tune the model (policy) using reinforcement learning (e.g., Proximal Policy Optimization, PPO) to maximize the reward from the RM.

Loss Function:

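- Typically a PPO objective that maximizes the reward-model score, combined with a KL penalty that keeps the policy close to the SFT model; some setups (e.g., InstructGPT's PPO-ptx) also mix in the pretraining language-modeling loss.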

Advantages:

- Aligns model behavior with human values, preferences, and ethical considerations.

- Reduces harmful or inappropriate responses.

- Improves usability in real-world scenarios (e.g., chatbot interactions).

Limitations:

- Requires expensive and time-consuming human feedback.

- May introduce biases based on the preferences of the feedback providers.

- Balancing alignment with generalization is challenging.

Comparison Table

| Aspect | Unsupervised Pretraining | Supervised Fine-Tuning | RLHF |

|---|---|---|---|

| Purpose | General-purpose language understanding | Task-specific performance improvement | Aligning outputs with human preferences |

| Data Requirement | Large-scale unlabeled text corpora | Labeled datasets for specific tasks | Human-labeled feedback or rankings |

| Objective | Learn language patterns and knowledge | Optimize for specific task objectives | Optimize for human alignment |

| Training Cost | High (large datasets, long training) | Moderate (smaller labeled datasets) | Very high (feedback collection + RL tuning) |

| Model Usage | Provides a base model | Task-specific models | Refines models for safer, more useful output |

| Challenges | Task-agnostic, needs fine-tuning | Dependent on labeled data quality | Expensive and subject to bias in feedback |

| Example | Training GPT, BERT, T5 from scratch | Fine-tuning BERT for sentiment analysis | Fine-tuning GPT with human ranking data |

PPO and DPO

References:

Proximal Policy Optimization (PPO)

Reward modeling in RLHF is usually based on the Bradley-Terry model, a probabilistic model for ranking items from pairwise comparisons. For an input \(x\) and two responses \(y_1\) and \(y_2\):

\[p(y_1 \succ y_2 \mid x) = \frac{\exp(r(x,y_1))}{\exp(r(x,y_1)) + \exp(r(x,y_2))} = \sigma\left(r(x,y_1) - r(x,y_2)\right)\]where \(p\) is the probability that response \(y_1\) is preferred over \(y_2\) given the input \(x\), \(r\) is the reward function, and \(\sigma\) is the logistic (sigmoid) function. The second form follows by dividing the numerator and denominator by \(\exp(r(x,y_1))\).

If \(r^*(x,y_1) > r^*(x,y_2)\), then \(p^*(y_1 \succ y_2 \mid x) > 0.5\), where \(r^*\) and \(p^*\) denote the true (latent) reward and human preference distribution; that is, \(y_1\) is more likely than not to be preferred over \(y_2\) given \(x\).

To learn the reward function from data, we parameterize it as a neural network \(r_{\phi}\) and minimize the following loss:

\[\mathcal{L}_{R}(r_{\phi}, \mathcal{D}) = - \mathbb{E}_{(x,y_w,y_l) \sim \mathcal{D}} \left[ \log \sigma \left( r_{\phi}(x,y_w) - r_{\phi}(x,y_l) \right) \right]\]where \(\mathcal{D}\) is the dataset of human preferences and \(y_w\) and \(y_l\) are the winning (preferred) and losing responses for the same input \(x\). Minimizing this loss maximizes the log-likelihood, under the Bradley-Terry model, that the winning response is preferred over the losing one.

Note that the reward model \(r_{\phi}\) is usually initialized from the supervised fine-tuning (SFT) model, the same starting point as the policy model, with the output layer replaced by a head that produces a single scalar score.
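In code, the reward-model objective is a logistic loss on the score difference between the chosen and rejected responses. A minimal sketch, assuming `r_chosen` and `r_rejected` are the scalar scores \(r_{\phi}(x, y_w)\) and \(r_{\phi}(x, y_l)\) already produced by a reward model for a batch of preference pairs; the function name and the example scores are hypothetical.

```python
import torch
import torch.nn.functional as F


def reward_model_loss(r_chosen: torch.Tensor, r_rejected: torch.Tensor) -> torch.Tensor:
    """Bradley-Terry loss: -log sigma(r_w - r_l), averaged over the batch."""
    # logsigmoid is numerically more stable than log(sigmoid(.))
    return -F.logsigmoid(r_chosen - r_rejected).mean()


r_chosen = torch.tensor([2.0, 0.5, 1.0])    # hypothetical scores for preferred responses
r_rejected = torch.tensor([1.0, 1.0, 1.0])  # hypothetical scores for rejected responses
print(reward_model_loss(r_chosen, r_rejected))
```

Equal scores give a loss of \(\log 2\); the loss falls toward 0 as the chosen response's score rises above the rejected one's.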

Fine-tuning the policy model

Once we have the reward model \(r_{\phi}\), we can fine-tune the policy model \(\pi_{\theta}\) by minimizing the following objective:

\[\mathcal{L}_{P}(\theta, \mathcal{D}) = - \mathbb{E}_{x \sim \mathcal{D}, y \sim \pi_{\theta}(y \mid x)} \left[ r_{\phi}(x,y) \right] + \beta\, \mathbb{E}_{x \sim \mathcal{D}} \left[ D_{KL}\left(\pi_{\theta}(y \mid x) \,\|\, \pi_{ref}(y \mid x)\right) \right]\]where \(\pi_{ref}\) is the reference model (usually the frozen SFT model) and \(\beta\) is a hyperparameter that controls the strength of the KL penalty. Minimizing the first term pushes the policy toward responses that the reward model scores highly, while the second term keeps the policy from drifting too far from the reference model. This objective is typically optimized with PPO, which additionally clips each policy update to keep training stable.
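A common way to implement the KL term is to fold it into the reward of each sampled response: the summed log-ratio \(\log \pi_{\theta}(y \mid x) - \log \pi_{ref}(y \mid x)\) over the sampled tokens is a single-sample estimate of the KL divergence. The sketch below assumes the per-token log-probabilities of the sampled tokens have already been computed under both models, and it ignores padding; PPO would then maximize this shaped reward with its clipped objective, which is not shown. The function name is hypothetical.

```python
import torch


def kl_penalized_reward(reward: torch.Tensor,
                        policy_logprobs: torch.Tensor,
                        ref_logprobs: torch.Tensor,
                        beta: float = 0.1) -> torch.Tensor:
    """Shaped reward r_phi(x, y) - beta * log(pi_theta(y|x) / pi_ref(y|x)).

    reward:          (batch,) reward-model scores for the sampled responses
    policy_logprobs: (batch, seq_len) log-probs of the sampled tokens under the policy
    ref_logprobs:    (batch, seq_len) log-probs of the same tokens under the frozen reference
    """
    # Summing over tokens gives the log-ratio for the whole response
    log_ratio = (policy_logprobs - ref_logprobs).sum(dim=-1)
    return reward - beta * log_ratio


reward = torch.tensor([1.0, 0.5])
policy_lp = torch.tensor([[-0.5, -0.7], [-1.0, -0.2]])
ref_lp = torch.tensor([[-1.0, -0.9], [-1.0, -0.2]])
print(kl_penalized_reward(reward, policy_lp, ref_lp, beta=0.1))
```

The first response is penalized because the policy now assigns it more probability than the reference does; the second is unchanged because both models agree.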


Direct Preference Optimization (DPO)

DPO simplifies the PPO-based pipeline by optimizing the policy directly on preference pairs, without training a separate reward model or running reinforcement learning.

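Derivation:

The KL-regularized objective used in RLHF has a closed-form optimal policy for a given reward \(r\):

\[\pi_r(y \mid x) = \frac{1}{Z(x)} \pi_{\text{ref}}(y \mid x) \exp\left(\frac{r(x,y)}{\beta}\right)\]where \(\pi_r\) is the optimal policy, \(\pi_{\text{ref}}\) is the reference model, \(\beta\) is the KL coefficient, and \(Z(x) = \sum_{y} \pi_{\text{ref}}(y \mid x) \exp(r(x,y)/\beta)\) is the partition function that normalizes the distribution.

Taking the logarithm and rearranging expresses the reward in terms of the policy:

\[r(x,y) = \beta \log \frac{\pi_r(y \mid x)}{\pi_{\text{ref}}(y \mid x)} + \beta \log Z(x)\]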

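Substituting this reward into the Bradley-Terry loss, the \(\beta \log Z(x)\) terms cancel because both responses share the same input \(x\). Replacing \(\pi_r\) with the trainable policy \(\pi_{\theta}\) gives the final objective:

\[\mathcal{L}_{DPO}(\theta, \mathcal{D}) = - \mathbb{E}_{(x,y_w,y_l) \sim \mathcal{D}} \left[ \log \sigma \left( \beta \log \frac{\pi_{\theta}(y_w \mid x)}{\pi_{\text{ref}}(y_w \mid x)} - \beta \log \frac{\pi_{\theta}(y_l \mid x)}{\pi_{\text{ref}}(y_l \mid x)} \right) \right]\]There is no need for an explicit reward model \(r_{\phi}\): the policy \(\pi_{\theta}\) is optimized directly on the preference data. Minimizing this loss increases the likelihood of the winning response relative to the reference model while decreasing that of the losing response.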
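The DPO loss needs only the summed log-probabilities of the chosen and rejected responses under the policy and under the frozen reference model. A minimal PyTorch sketch, assuming those four sequence-level log-probabilities are already computed for a batch; the function and argument names and the example numbers are hypothetical (libraries such as TRL provide complete trainers).

```python
import torch
import torch.nn.functional as F


def dpo_loss(policy_chosen_logps: torch.Tensor,
             policy_rejected_logps: torch.Tensor,
             ref_chosen_logps: torch.Tensor,
             ref_rejected_logps: torch.Tensor,
             beta: float = 0.1) -> torch.Tensor:
    """-log sigma(beta * [log-ratio(chosen) - log-ratio(rejected)]), batch-averaged.

    Each argument has shape (batch,) and holds log pi(y|x) summed over response tokens.
    """
    # Implicit rewards: beta * log(pi_theta / pi_ref) for each response
    chosen_rewards = beta * (policy_chosen_logps - ref_chosen_logps)
    rejected_rewards = beta * (policy_rejected_logps - ref_rejected_logps)
    return -F.logsigmoid(chosen_rewards - rejected_rewards).mean()


ref_chosen = torch.tensor([-12.0, -8.0])
ref_rejected = torch.tensor([-11.0, -9.0])
policy_chosen = torch.tensor([-10.0, -7.5])    # policy raised the chosen log-probs
policy_rejected = torch.tensor([-13.0, -9.5])  # and lowered the rejected ones
print(dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected))
```

When the policy equals the reference model the loss is \(\log 2\); it decreases as the policy shifts probability toward the chosen responses relative to the reference.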

SFT vs RLHF

Reinforcement Learning from Human Feedback (RLHF) is a technique used to align large language models (LLMs) more closely with human preferences and expectations. While supervised fine-tuning (SFT) is a critical step in improving a model's capabilities, it has limitations that RLHF can address. In short, RLHF is needed when it is difficult to define an objective function for something as ambiguous as human preferences. More recently, DeepSeek proposed a different RL method, GRPO (Group Relative Policy Optimization), which compares each sampled response with the other responses sampled for the same prompt, so it does not need pairs of winning and losing responses; it has been used to train reasoning models. Read more about it in my DeepSeek blog post.

When is RLHF needed?

Ambiguity in Objectives

- SFT aligns the model with a dataset of “correct” outputs, but human preferences often involve subjective judgment or context-specific nuance that cannot be fully captured in a static dataset.

- Example: Chatbots generating empathetic or polite responses where the tone and context matter significantly.

Unclear or Complex Evaluation Metrics

- For some tasks, it’s difficult to define explicit evaluation metrics, but humans can intuitively judge quality.

- Example: Creative writing or generating humorous content.

Long-Term or Multi-Step Reasoning

- SFT trains models to produce correct outputs based on immediate context but might fail in scenarios requiring multi-step decision-making or long-term coherence.

- Example: Writing a coherent multi-paragraph essay or guiding a user through a series of troubleshooting steps.

Avoiding Harmful Outputs

- Static datasets used for SFT may not include all edge cases or potential pitfalls, and RLHF can help refine the model to avoid harmful or toxic outputs based on human preferences.

- Example: Ensuring a conversational agent avoids generating offensive or biased content.

Improving User Experience

- SFT often focuses on correctness, but RLHF can optimize for user satisfaction, such as balancing informativeness, politeness, and conciseness.

- Example: Personal assistants generating concise and helpful responses tailored to user needs.

Why is Supervised Fine-Tuning Not Enough?

Static Nature of Datasets

- SFT relies on pre-collected datasets that might not represent all real-world scenarios or evolving user preferences.

- Limitation: If the dataset lacks certain examples, the model cannot generalize well.

Difficulty in Capturing Preferences

- Human preferences are often complex and not directly labeled in datasets.

- Limitation: A model trained on SFT might produce technically correct but undesirable outputs (e.g., overly verbose or lacking empathy).

Overfitting to Training Data

- SFT can lead to overfitting, where the model learns to replicate the training data without adapting to unseen scenarios.

- Limitation: This can result in poor performance on out-of-distribution examples.

Reward Optimization

- RLHF optimizes a reward function designed to capture human preferences, which allows fine-grained control over the model’s behavior.

- Limitation: SFT does not involve direct optimization based on human evaluations.

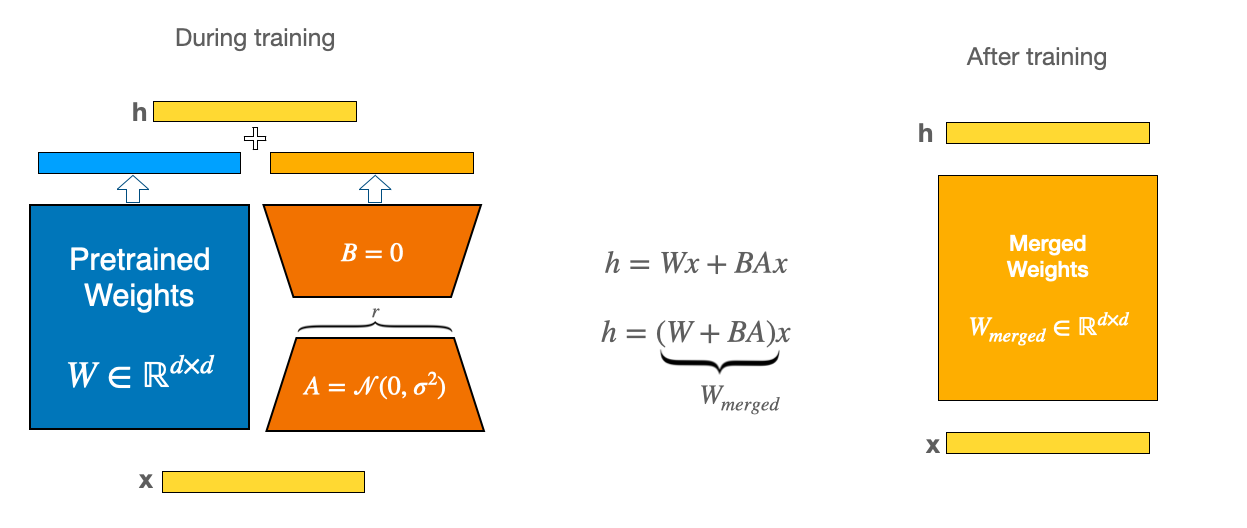

LoRA and Adapters

References:

- [1] Houlsby, Neil, et al. “Parameter-efficient transfer learning for NLP.” https://arxiv.org/abs/1902.00751

- [2] Hu et al. “LoRA: Low-Rank Adaptation of Large Language Models.” https://arxiv.org/abs/2106.09685

LoRA

Adapters

Prompt Engineering

References:

Zero-shot and Few-shot Learning

Chain of Thought

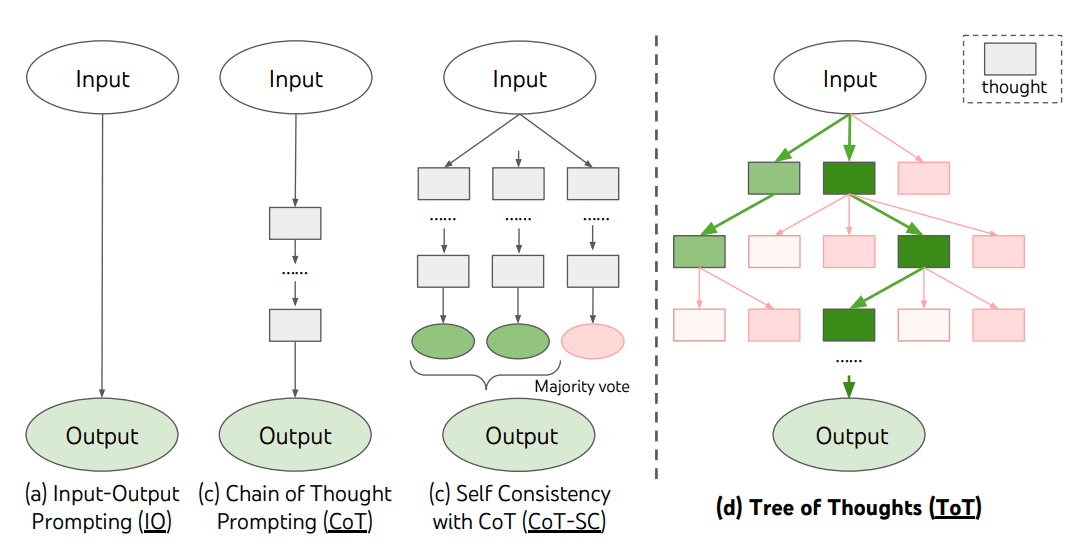

What is Chain of Thought? Chain of Thought (CoT) is a prompting technique that encourages LLMs to reason step by step. In Few-shot CoT, the demonstrations in the prompt include the intermediate reasoning steps as well as the final answer, guiding the model to break a complex problem into smaller steps. Zero-shot CoT instead adds the prompt “Let's think step by step” to the input.

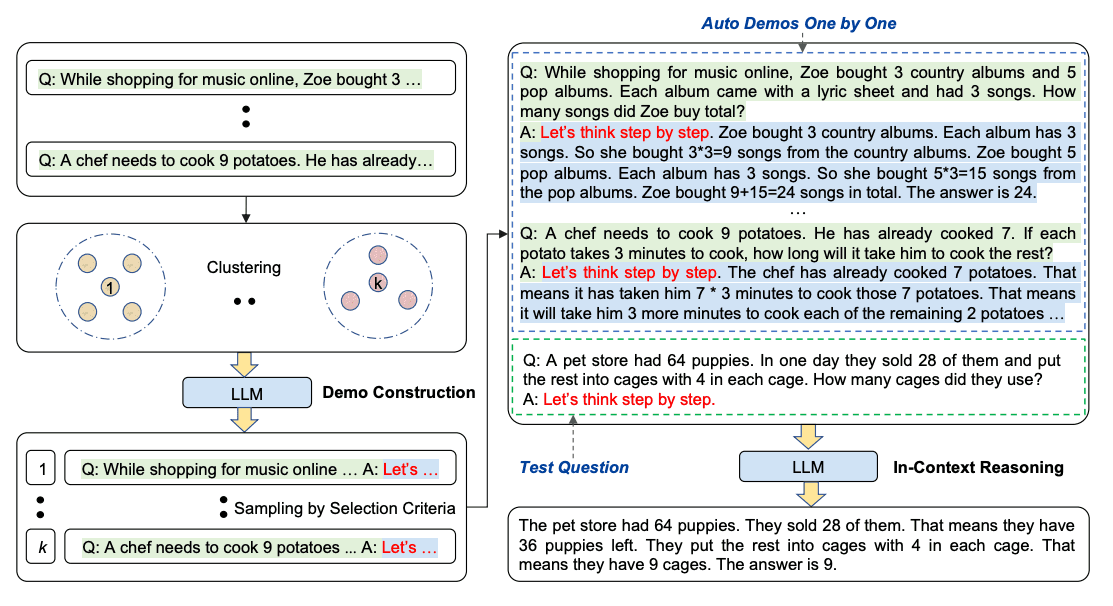

There are several advanced CoT methods, such as Auto-CoT and Tree of Thoughts (ToT), that address the limitations of CoT from different perspectives. For example, CoT prompting with demonstrations requires hand-crafting effective and diverse examples, which is time-consuming and does not scale. Auto-CoT generates the reasoning chains for the demonstrations automatically by prompting an LLM with Zero-Shot-CoT (“Let's think step by step”). The generated chains are not guaranteed to be correct and may contain errors, so Auto-CoT relies on diversity: drawing demonstrations from different kinds of questions reduces the impact of any single faulty chain. It uses a two-stage process:

- Stage 1: partition questions into clusters

- Stage 2: select a representative question from each cluster and generate its reasoning chain using Zero-Shot-CoT with simple heuristics

Meta Prompting

A.k.a. Advanced Zero-Shot-CoT. Provide the format and pattern of problems and solutions rather than a specific content/example as in CoT. Uses syntax as a guiding template for the expected response or solution.

Self-Consistency

Idea: Sample multiple reasoning chains and select the most consistent one as the final answer.

Prompt with CoT examples:

```text
Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?
A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees. The answer is 6.

Q: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?
A: There are 3 cars in the parking lot already. 2 more arrive. Now there are 3 + 2 = 5 cars. The answer is 5.

Q: Leah had 32 chocolates and her sister had 42. If they ate 35, how many pieces do they have left in total?
A: Leah had 32 chocolates and Leah's sister had 42. That means there were originally 32 + 42 = 74 chocolates. 35 have been eaten. So in total they still have 74 - 35 = 39 chocolates. The answer is 39.

Q: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?
A: Jason had 20 lollipops. Since he only has 12 now, he must have given the rest to Denny. The number of lollipops he has given to Denny must have been 20 - 12 = 8 lollipops. The answer is 8.

Q: Shawn has five toys. For Christmas, he got two toys each from his mom and dad. How many toys does he have now?
A: He has 5 toys. He got 2 from mom, so after that he has 5 + 2 = 7 toys. Then he got 2 more from dad, so in total he has 7 + 2 = 9 toys. The answer is 9.

Q: There were nine computers in the server room. Five more computers were installed each day, from monday to thursday. How many computers are now in the server room?
A: There are 4 days from monday to thursday. 5 computers were added each day. That means in total 4 * 5 = 20 computers were added. There were 9 computers in the beginning, so now there are 9 + 20 = 29 computers. The answer is 29.

Q: Michael had 58 golf balls. On tuesday, he lost 23 golf balls. On wednesday, he lost 2 more. How many golf balls did he have at the end of wednesday?
A: Michael initially had 58 balls. He lost 23 on Tuesday, so after that he has 58 - 23 = 35 balls. On Wednesday he lost 2 more so now he has 35 - 2 = 33 balls. The answer is 33.

Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?
A: She bought 5 bagels for $3 each. This means she spent $15. She has $8 left.

Q: When I was 6 my sister was half my age. Now I'm 70 how old is my sister?
A:
```

Output 1:

When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70 - 3 = 67. The answer is 67.

Output 2:

When the narrator was 6, his sister was half his age, which is 3. Now that the narrator is 70, his sister would be 70 - 3 = 67 years old. The answer is 67.

Output 3:

When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70/2 = 35. The answer is 35.

Question: How to get multiple outputs?

Introduce Randomness: Use randomness in the generation process to obtain diverse outputs. This can be done by:

- Temperature Settings: Set a higher temperature (e.g., 0.7 or above) to encourage more variability in responses.

- Top-k or Top-p Sampling: Adjust these parameters to allow exploration of a broader range of plausible outputs.
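To make these knobs concrete, here is a minimal, library-free sketch of how temperature, top-k, and top-p (nucleus) sampling reshape a next-token distribution before a token is drawn. The function name `sample_token` and the toy logits are illustrative assumptions; real inference libraries apply the same idea to tensors over the full vocabulary.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Sample one token index from raw logits (a list of floats).

    temperature (> 0): values < 1 sharpen the distribution, > 1 flatten it.
    top_k: keep only the k most likely tokens.
    top_p: keep the smallest set of most likely tokens whose cumulative
           probability reaches top_p.
    """
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Token indices ordered from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    if top_k is not None:
        order = order[:top_k]

    if top_p is not None:
        kept, cumulative = [], 0.0
        for i in order:
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        order = kept

    # random.choices renormalizes the weights of the surviving tokens.
    weights = [probs[i] for i in order]
    return rng.choices(order, weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0]  # toy scores for a 4-token vocabulary
rng = random.Random(0)
greedy_like = [sample_token(logits, top_k=1, rng=rng) for _ in range(5)]
diverse = [sample_token(logits, temperature=1.5, top_p=0.9, rng=rng) for _ in range(5)]
print(greedy_like)  # [0, 0, 0, 0, 0]
print(diverse)
```

With `top_k=1` the sampler always returns the most likely token (greedy decoding), so repeated calls produce identical outputs and self-consistency has nothing to vote over. A higher temperature with a top-p below 1 keeps sampling within plausible tokens while still producing different reasoning paths across calls.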

Generate Multiple Outputs: Generate multiple outputs by sampling multiple times from the model using the same prompt. For example:

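from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

prompt = "Explain the significance of photosynthesis in plants."
responses = []

for _ in range(10):  # Generate 10 outputs
    # The legacy openai.Completion API and text-davinci-003 are retired,
    # so this uses the Chat Completions API. The model name is only an example.
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=150,
        temperature=0.8,
        n=1,  # Single output per call; n=10 in one call would also work
    )
    responses.append(response.choices[0].message.content.strip())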

Question: How to aggregate and analyze outputs?

Review the generated outputs and identify consistent patterns or common elements. This can be done manually or using automated techniques such as:

- Majority Voting: Identify the most frequently occurring response.

- Semantic Similarity: Use clustering or similarity scoring to group similar responses.
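When every output ends with a fixed phrase, as the few-shot CoT examples above teach ("The answer is N."), majority voting takes only a few lines. The sketch below applies it to the three sampled outputs for the sister-age question; the regex and the helper names `extract_answer` and `majority_vote` are illustrative, not part of any library.

```python
import re
from collections import Counter

outputs = [
    "When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70 - 3 = 67. The answer is 67.",
    "When the narrator was 6, his sister was half his age, which is 3. Now that the narrator is 70, "
    "his sister would be 70 - 3 = 67 years old. The answer is 67.",
    "When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70/2 = 35. The answer is 35.",
]

# Assumes the final answer is a number following "The answer is".
ANSWER_RE = re.compile(r"The answer is\s+(-?\d+(?:\.\d+)?)")


def extract_answer(text):
    """Return the final numeric answer as a string, or None if the pattern is missing."""
    match = ANSWER_RE.search(text)
    return match.group(1) if match else None


def majority_vote(texts):
    """Self-consistency aggregation: the most common final answer wins.

    Returns (answer, agreement), where agreement is the fraction of parsed
    outputs that voted for the winning answer.
    """
    answers = [a for a in (extract_answer(t) for t in texts) if a is not None]
    if not answers:
        return None, 0.0
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)


answer, agreement = majority_vote(outputs)
print(answer, round(agreement, 2))  # 67 0.67
```

Exact-match voting only works when answers are short and canonical. For free-form outputs such as the photosynthesis explanations, the semantic-similarity route (clustering the responses and picking the largest cluster) is the better fit.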

Generated Knowledge Prompting

Idea: Use an LLM to generate knowledge relevant to the input, then incorporate that knowledge into the prompt to help the model make more accurate predictions.

Knowledge-generation prompt (few-shot examples):

Input: Greece is larger than mexico.

Knowledge: Greece is approximately 131,957 sq km, while Mexico is approximately 1,964,375 sq km, making Mexico 1,389% larger than Greece.

Input: Glasses always fog up.

Knowledge: Condensation occurs on eyeglass lenses when water vapor from your sweat, breath, and ambient humidity lands on a cold surface, cools, and then changes into tiny drops of liquid, forming a film that you see as fog. Your lenses will be relatively cool compared to your breath, especially when the outside air is cold.

Input: A fish is capable of thinking.

Knowledge: Fish are more intelligent than they appear. In many areas, such as memory, their cognitive powers match or exceed those of 'higher' vertebrates including non-human primates. Fish's long-term memories help them keep track of complex social relationships.

Input: A common effect of smoking lots of cigarettes in one's lifetime is a higher than normal chance of getting lung cancer.

Knowledge: Those who consistently averaged less than one cigarette per day over their lifetime had nine times the risk of dying from lung cancer than never smokers. Among people who smoked between one and 10 cigarettes per day, the risk of dying from lung cancer was nearly 12 times higher than that of never smokers.

Input: A rock is the same size as a pebble.

Knowledge: A pebble is a clast of rock with a particle size of 4 to 64 millimetres based on the Udden-Wentworth scale of sedimentology. Pebbles are generally considered larger than granules (2 to 4 millimetres diameter) and smaller than cobbles (64 to 256 millimetres diameter).

Input: Part of golf is trying to get a higher point total than others.

Knowledge:

Two knowledge statements sampled from the model:

Knowledge 1:

The objective of golf is to play a set of holes in the least number of strokes. A round of golf typically consists of 18 holes. Each hole is played once in the round on a standard golf course. Each stroke is counted as one point, and the total number of strokes is used to determine the winner of the game.

Knowledge 2:

Golf is a precision club-and-ball sport in which competing players (or golfers) use many types of clubs to hit balls into a series of holes on a course using the fewest number of strokes. The goal is to complete the course with the lowest score, which is calculated by adding up the total number of strokes taken on each hole. The player with the lowest score wins the game.

The next step is to integrate each generated knowledge statement into the question prompt and get a prediction. For example, with Knowledge 1:

Question: Part of golf is trying to get a higher point total than others. Yes or No?

Knowledge: The objective of golf is to play a set of holes in the least number of strokes. A round of golf typically consists of 18 holes. Each hole is played once in the round on a standard golf course. Each stroke is counted as one point, and the total number of strokes is used to determine the winner of the game.

Explain and Answer:

Note: As mentioned in [1], the answer obtained with Knowledge 1 has very high confidence, while the one obtained with Knowledge 2 has very low confidence. Interestingly, Knowledge 2 answers the question more directly ("The player with the lowest score wins the game."), while Knowledge 1 does not.
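The selection step can be sketched as follows: build one integration prompt per knowledge statement, score the answer options for each, and keep the single most confident prediction. This is a minimal sketch under assumptions: `score_answers` is a hypothetical hook that would return a probability per answer option (for example from token log-probabilities), and `fake_scorer` below uses made-up numbers purely so the code runs offline; they are not results from [1].

```python
from typing import Callable, Dict, List, Tuple


def build_prompt(question: str, knowledge: str) -> str:
    # Mirrors the integration prompt shown above.
    return f"Question: {question} Yes or No?\n\nKnowledge: {knowledge}\n\nExplain and Answer:"


def answer_with_knowledge(
    question: str,
    knowledge_list: List[str],
    score_answers: Callable[[str], Dict[str, float]],
) -> Tuple[str, float, str]:
    """Return (answer, confidence, knowledge) for the most confident prediction.

    score_answers is a hypothetical hook mapping a full prompt to a
    probability for each answer option, e.g. {"Yes": 0.1, "No": 0.9}.
    """
    best = ("", -1.0, "")
    for knowledge in knowledge_list:
        scores = score_answers(build_prompt(question, knowledge))
        answer, confidence = max(scores.items(), key=lambda kv: kv[1])
        if confidence > best[1]:
            best = (answer, confidence, knowledge)
    return best


def fake_scorer(prompt: str) -> Dict[str, float]:
    # Stand-in for a real model; the confidences are invented for illustration.
    if "Each stroke is counted as one point" in prompt:
        return {"Yes": 0.05, "No": 0.95}
    return {"Yes": 0.45, "No": 0.55}


question = "Part of golf is trying to get a higher point total than others."
k1 = "Each stroke is counted as one point, and the total number of strokes is used to determine the winner of the game."
k2 = "The player with the lowest score wins the game."

answer, conf, used = answer_with_knowledge(question, [k1, k2], fake_scorer)
print(answer, conf)  # No 0.95
```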

Prompt Chaining

Idea: Break a complex task down into multiple simpler subtasks and use an LLM to solve each one. Each step has its own prompt, and the output of the previous step is used as input to the next step.

Example Use Case: Writing an Essay

Prompt 1: Brainstorm Topics

- Input: "List five unique topics for an essay about the benefits of renewable energy."

- Output: ["Solar energy in urban areas", "Wind energy for rural development", etc.]

Prompt 2: Develop an Outline

- Input: "Create a detailed outline for an essay on 'Solar energy in urban areas.'"

- Output: Introduction, Benefits of Solar Energy, Implementation Challenges, Conclusion.

Prompt 3: Write the Introduction

- Input: "Write an engaging introduction for an essay on 'Solar energy in urban areas.'"

- Output: A well-crafted opening paragraph.

Prompt 4: Complete Sections

- Repeat the process for other sections using tailored prompts.
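The essay workflow above can be expressed as a small pipeline in which each step's (optionally post-processed) output is formatted into the next step's prompt. This is a sketch under assumptions: `llm` is any hypothetical callable that takes a prompt string and returns text, the `{previous}` placeholder and the `first_line` post-processing step are choices made for this illustration, and `fake_llm` stands in for a real model so the chain runs offline.

```python
from typing import Callable, List, Tuple

# A step is a prompt template plus a function that post-processes the model's reply.
Step = Tuple[str, Callable[[str], str]]


def run_chain(llm: Callable[[str], str], steps: List[Step], first_input: str) -> List[str]:
    """Run prompts in sequence, feeding each post-processed output into the next template."""
    outputs = []
    previous = first_input
    for template, postprocess in steps:
        reply = llm(template.format(previous=previous))
        previous = postprocess(reply)
        outputs.append(previous)
    return outputs


def first_line(text: str) -> str:
    # Keep only the first brainstormed topic.
    return text.strip().splitlines()[0]


def identity(text: str) -> str:
    return text


steps: List[Step] = [
    ("List five unique topics for an essay about {previous}, one per line.", first_line),
    ("Create a detailed outline for an essay on '{previous}'.", identity),
    ("Write an engaging introduction for an essay with this outline:\n{previous}", identity),
]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    if prompt.startswith("List five"):
        return "Solar energy in urban areas\nWind energy for rural development"
    if prompt.startswith("Create a detailed outline"):
        return "Introduction; Benefits of Solar Energy; Implementation Challenges; Conclusion"
    return "Cities are starting to treat their rooftops as power plants."


results = run_chain(fake_llm, steps, "the benefits of renewable energy")
print(results[0])  # Solar energy in urban areas
```

Keeping each step as a separate prompt also makes the chain easy to debug: every intermediate output is available in `results` and can be inspected or edited before the next step runs.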

Tree of Thoughts

Idea: In Self-Consistency CoT, each reasoning path is sampled independently and the answers are only aggregated (e.g., by voting) at the final step. Tree of Thoughts (ToT) instead explores multiple candidate thoughts at each intermediate step, evaluates them, and continues from the most promising ones, so weak partial paths are pruned early.

Examples (from the ToT repo: https://github.com/princeton-nlp/tree-of-thought-llm/blob/master/src/tot/prompts/). More examples can be found in this blog post: https://learnprompting.org/docs/advanced/decomposition/tree_of_thoughts.

standard_prompt = '''

Write a coherent passage of 4 short paragraphs. The end sentence of each paragraph must be: {input}

'''

cot_prompt = '''

Write a coherent passage of 4 short paragraphs. The end sentence of each paragraph must be: {input}

Make a plan then write. Your output should be of the following format:

Plan:

Your plan here.

Passage:

Your passage here.

'''

vote_prompt = '''Given an instruction and several choices, decide which choice is most promising. Analyze each choice in detail, then conclude in the last line "The best choice is {s}", where s the integer id of the choice.

'''

compare_prompt = '''Briefly analyze the coherency of the following two passages. Conclude in the last line "The more coherent passage is 1", "The more coherent passage is 2", or "The two passages are similarly coherent".

'''

score_prompt = '''Analyze the following passage, then at the last line conclude "Thus the coherency score is {s}", where s is an integer from 1 to 10.

'''
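Prompts like these plug into a search loop. Below is a minimal breadth-first (beam) version in the spirit of ToT's BFS: at each level it proposes several candidate thoughts per state, scores every candidate, and keeps the best `breadth` states. `propose` and `evaluate` are hypothetical callables standing in for LLM calls (for example, sampling continuations with `cot_prompt` and scoring them with `score_prompt` or `vote_prompt`); the toy demo replaces them with plain functions so the search logic can be run offline.

```python
from typing import Callable, List


def tree_of_thoughts_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    evaluate: Callable[[str], float],
    depth: int,
    breadth: int,
) -> str:
    """Breadth-first Tree-of-Thoughts search.

    propose(state)  -> candidate next states (state extended by one thought)
    evaluate(state) -> heuristic value; higher means more promising
    Keeps the `breadth` best states at every level and returns the best final state.
    """
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:breadth]
    return max(frontier, key=evaluate)


# Toy demo: build the string "abc" one character ("thought") per step.
TARGET = "abc"


def toy_propose(state: str) -> List[str]:
    return [state + ch for ch in "abc"]


def toy_evaluate(state: str) -> float:
    # Number of positions where the state matches the target.
    return sum(1 for s, t in zip(state, TARGET) if s == t)


best = tree_of_thoughts_bfs("", toy_propose, toy_evaluate, depth=3, breadth=2)
print(best)  # abc
```

The contrast with Self-Consistency is visible in the loop: evaluation happens at every level, not once at the end. The ToT paper also describes a depth-first variant that backtracks when a state's value falls below a threshold.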

Mixture of Reasoning Experts

MoRE leverages a pool of specialized experts, where each expert is optimized for a distinct reasoning type, such as:

- Factual reasoning (e.g., fact-based questions).

- Multihop reasoning (e.g., questions that require multiple steps of reasoning).

- Mathematical reasoning (e.g., solving math word problems).

- Commonsense reasoning (e.g., questions requiring implicit knowledge).

MoRE uses an answer selector to choose the best response from the specialized experts' predictions. Another key feature is selective answering: if the selector judges that none of the answers is reliable, the system abstains rather than guessing, which improves its reliability.
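A simplified sketch of the selection-and-abstention idea follows. It assumes each expert returns an answer with a confidence, scores each candidate answer by how many experts agree on it plus the highest confidence it received, and abstains when the best score falls below a threshold. This fixed scoring rule, the 0.5 weights, and the `threshold` value are assumptions for illustration only; the MoRE paper uses a learned selector rather than a hand-written rule like this.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


def select_answer(
    predictions: Dict[str, Tuple[str, float]],
    threshold: float = 0.5,
) -> Optional[str]:
    """Pick one answer from the experts or abstain.

    predictions maps expert name -> (answer, confidence in [0, 1]).
    score(answer) = 0.5 * fraction of experts giving that answer
                  + 0.5 * highest confidence any expert gave it.
    Returns None (abstain) when the best score is below `threshold`.
    """
    if not predictions:
        return None
    votes = Counter(answer for answer, _ in predictions.values())
    best_conf: Dict[str, float] = {}
    for answer, conf in predictions.values():
        best_conf[answer] = max(conf, best_conf.get(answer, 0.0))

    n = len(predictions)
    scores = {a: 0.5 * votes[a] / n + 0.5 * best_conf[a] for a in votes}
    best_answer = max(scores, key=scores.get)
    return best_answer if scores[best_answer] >= threshold else None


# Hypothetical expert outputs for "What is the capital of France?"
confident = {
    "factual": ("Paris", 0.9),
    "multihop": ("Paris", 0.8),
    "math": ("42", 0.2),
    "commonsense": ("Paris", 0.7),
}
unsure = {
    "factual": ("Lyon", 0.3),
    "multihop": ("Paris", 0.35),
    "math": ("Nice", 0.2),
    "commonsense": ("Marseille", 0.25),
}
print(select_answer(confident))  # Paris
print(select_answer(unsure))  # None
```

Raising `threshold` makes the system abstain more often, trading coverage for accuracy on the questions it does answer.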

How to create a prompt

To me, the most important part of prompt engineering is understanding the task and the data and knowing which prompting techniques to apply, not the mechanics of writing the prompt, which is just a piece of text. That said, some tools can help by providing common templates. For example, LangChain's PipelinePromptTemplate composes several sub-templates into one prompt:

from langchain_core.prompts import PipelinePromptTemplate, PromptTemplate

full_template = """{introduction}

{example}

{start}"""

full_prompt = PromptTemplate.from_template(full_template)

introduction_template = """You are impersonating {person}."""

introduction_prompt = PromptTemplate.from_template(introduction_template)

example_template = """Here's an example of an interaction:

Q: {example_q}

A: {example_a}"""

example_prompt = PromptTemplate.from_template(example_template)

start_template = """Now, do this for real!

Q: {input}

A:"""

start_prompt = PromptTemplate.from_template(start_template)

input_prompts = [

("introduction", introduction_prompt),

("example", example_prompt),

("start", start_prompt),

]

pipeline_prompt = PipelinePromptTemplate(

final_prompt=full_prompt, pipeline_prompts=input_prompts

)

pipeline_prompt.input_variables

print(

pipeline_prompt.format(

person="Elon Musk",

example_q="What's your favorite car?",

example_a="Tesla",

input="What's your favorite social media site?",

)

)

You are impersonating Elon Musk.

Here's an example of an interaction:

Q: What's your favorite car?

A: Tesla

Now, do this for real!

Q: What's your favorite social media site?

A:

Code and Frameworks for LLMs

LangChain

Prompt Templates

Purpose:

- Standardize prompts: Ensure consistency across different prompts.

- Parameterize prompts: Allow for easy modification of prompts.

- Chain prompts: Combine multiple prompts into a single prompt.

Example:

from langchain_core.prompts import PromptTemplate

template = "Translate the following text to French: {text}"

prompt = PromptTemplate(input_variables=["text"], template=template)

print(prompt.format(text="Hello, how are you?"))

Chains

Purpose:

- Combine multiple steps or functions into a cohesive pipeline.

Key Functions:

- LLMChain: The simplest chain, combining a prompt with an LLM.

- SequentialChain: Executes multiple chains sequentially, passing outputs as inputs.

- RouterChain: Routes user input to specific chains based on conditions.

Example:

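from langchain.chains import LLMChain  # deprecated in recent LangChain versions in favor of `prompt | llm`
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI  # gpt-3.5-turbo is a chat model, so use the chat wrapper

llm = ChatOpenAI(model="gpt-3.5-turbo")
prompt = PromptTemplate(template="What is a good name for a company that makes {product}?", input_variables=["product"])
chain = LLMChain(llm=llm, prompt=prompt)

# .run() is deprecated; .invoke() returns a dict whose "text" key holds the generated string
print(chain.invoke({"product": "colorful socks"})["text"])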

Memory

Purpose:

- Store and retrieve information: Allow chains to remember previous outputs.

- Chain of Thought: Help chains reason through complex problems.

Key Functions:

- ConversationBufferMemory: Stores the entire conversation history.

- ConversationSummaryMemory: Summarizes past interactions for compact memory.