Personalized LLMs

Why Personalize LLMs?

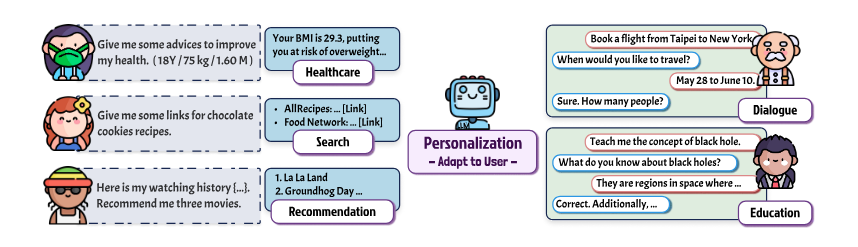

Large-scale generative AI models are trained on diverse datasets to acquire broad capabilities. However, they are typically not tailored to the needs, preferences, or knowledge of a specific user. For example, a general-purpose LLM can generate grammatically correct and factually relevant text, but it may fail to align with a user’s preferred tone or context-specific requirements.

Personalized LLMs aim to bridge this gap by adapting to individual users in the following key aspects:

- Style and Tone Adaptation: Adjusting the writing style, tone, or formality of the model to align with a user’s preferences—useful in applications like education, mental health support, or customer service.

- Personal Knowledge Integration: Utilizing user-specific data (e.g., calendar events, documents, preferences) to act as a digital assistant or agent.

- Domain-Specific Customization: Incorporating specialized knowledge for a particular task or profession, such as a medical LLM for diagnosis or a legal LLM for contract review.

Two Modes of Personalization

Personalized LLMs can be applied in two broad scenarios:

- Category A (Assistant-Oriented Personalization): The LLM acts on behalf of the user by leveraging personal knowledge, effectively functioning as a digital twin. For instance, it can help write reports, schedule meetings, or generate personalized content using user-specific context.

- Category B (Preference-Oriented Personalization): The LLM adapts its outputs (tone, recommendations, search results, etc.) based on the user's preferences, interests, or behavioral history.

Use Cases

Based on the above taxonomy, here are some common use cases (adapted from Tseng et al., 2024):

- Personalized Recommendation: Suggesting content or products that align with a user’s interests (Category B).

- Personalized Search: Enhancing search relevance by understanding historical queries and user intent (Category B).

- Personalized Healthcare:
  - Category A: Assisting with scheduling, medication reminders, or emergency support.
  - Category B: Providing medical insights tailored to a user's health profile.

- Software Development Support: Assisting developers by understanding their coding style, project context, and documentation (Category A).

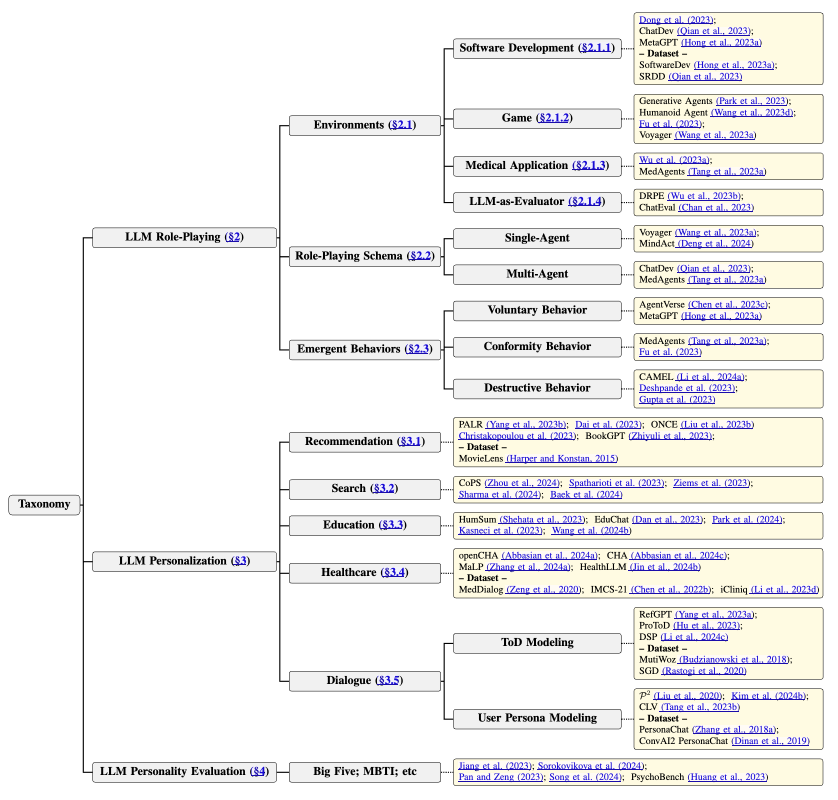

Two Tales of Persona in LLMs: Role-Playing vs. Personalization

There are two main directions in how persona is handled in LLMs:

- Role-Playing LLMs: The model is assigned a fixed persona or character (e.g., doctor, lawyer, coach) to interact with users in a consistent manner. The focus is on task fidelity and role simulation, often used in training or simulation settings.

- Personalized LLMs: The model adapts its behavior, knowledge, or language based on the user's identity, preferences, and needs. The emphasis is on personal relevance and user satisfaction.

| Aspect | Role-Playing LLM | Personalized LLM |
|---|---|---|
| Persona Source | Assigned persona (e.g., fictional or occupational) | Adapted from the user's identity, behavior, or context |
| Primary Objective | Simulate a role with high fidelity | Optimize for individual user satisfaction |
| Core Focus | Role consistency and task performance | Contextual relevance and adaptability |
| Example | Doctor persona for training simulations | Shopping assistant based on browsing history |
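To make the "Persona Source" row concrete, here is a minimal sketch of how the two directions might condition a model at the prompt level. It is only an illustration under assumptions: `UserProfile`, `role_playing_prompt`, and `personalized_prompt` are hypothetical names, the prompt wording is invented, and real systems may also realize either direction through fine-tuning or retrieval rather than a system prompt.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical container for what a personalized LLM knows about one user."""
    name: str
    preferences: dict[str, str] = field(default_factory=dict)
    recent_history: list[str] = field(default_factory=list)


def role_playing_prompt(persona: str) -> str:
    # Role-playing: the persona is assigned by the system designer and is the
    # same for every user who talks to the model.
    return f"You are {persona}. Stay in character for the whole conversation."


def personalized_prompt(profile: UserProfile) -> str:
    # Personalization: the conditioning text is derived from the user's own
    # preferences and behavior, so it differs from user to user.
    prefs = "; ".join(f"{k}: {v}" for k, v in sorted(profile.preferences.items()))
    history = "; ".join(profile.recent_history)
    return (
        f"You are assisting {profile.name}. "
        f"Known preferences: {prefs}. "
        f"Recent activity: {history}."
    )


doctor = role_playing_prompt("a physician running a patient-intake training simulation")
shopper = UserProfile(
    name="Alex",
    preferences={"budget": "under $100", "tone": "concise"},
    recent_history=["viewed trail running shoes", "viewed hydration vests"],
)
print(doctor)
print(personalized_prompt(shopper))
```

The role-playing prompt takes no user input at all, while the personalized prompt changes whenever the profile does, which mirrors the difference in persona source described in the table.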

Key Challenges

Injecting Personal Knowledge into LLMs

Several methods have been proposed to personalize LLMs by incorporating user-specific knowledge:

- Prompting and In-Context Learning: Injecting user information directly into the input prompt. While flexible, this approach is constrained by the context length and raises inference costs as the prompt grows [5].

- Fine-Tuning / Parameter-Efficient Fine-Tuning (PEFT): Adapting the model weights to personal data, for example with LoRA. This requires sufficient personal data and risks overfitting or degrading performance on general tasks.

- Retrieval-Augmented Generation (RAG): Storing user data externally and retrieving relevant pieces at inference time. This scales to large amounts of personal data, but can suffer from noisy retrievals or incomplete information [6].
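Prompting and RAG are often combined: retrieve the user records most relevant to a request, then inject them into the prompt. The sketch below illustrates that pipeline under simplifying assumptions. Token overlap stands in for the embedding-based retriever a real system would use, a word count stands in for the model's token budget, and the function names and sample records are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real system would use the model's tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, record: str) -> int:
    # Number of shared tokens (counting multiplicity): a crude stand-in for
    # dense-embedding similarity.
    q, r = Counter(tokenize(query)), Counter(tokenize(record))
    return sum((q & r).values())


def retrieve(query: str, store: list[str], k: int = 2) -> list[str]:
    # Rank the user's stored records by relevance; keep the top-k that match at all.
    ranked = sorted(store, key=lambda rec: overlap_score(query, rec), reverse=True)
    return [rec for rec in ranked[:k] if overlap_score(query, rec) > 0]


def build_prompt(query: str, context: list[str], budget: int = 40) -> str:
    # In-context injection: add retrieved records until the word budget
    # (a proxy for the context window) would be exceeded.
    used, lines = 0, []
    for rec in context:
        n = len(tokenize(rec))
        if used + n > budget:
            break
        lines.append(f"- {rec}")
        used += n
    header = ("User context:\n" + "\n".join(lines)) if lines else "User context: (none)"
    return f"{header}\n\nRequest: {query}"


# Hypothetical personal records for one user.
store = [
    "Weekly team sync is every Monday at 10am",
    "Prefers short bullet-point summaries",
    "Dentist appointment on Friday at 3pm",
    "Working on the Q3 budget report",
]
query = "Draft a short summary of my Q3 budget report"
print(build_prompt(query, retrieve(query, store)))
```

Here the Q3 record ranks first because it shares "q3", "budget", and "report" with the request, while the preference record is pulled in only through "short": under exact token matching "summaries" does not match "summary". That is a small instance of the incomplete-retrieval problem noted above, and the `budget` cutoff shows how context length limits what prompting can inject.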

Updating Personal Knowledge

Evaluating Personalized LLMs

Assessing the quality of personalized outputs, especially for preference-based aspects such as tone, emotion, or stylistic match, remains an open challenge. Existing evaluation methods struggle to quantify subjective user satisfaction or how closely an output matches a user's personal style [4].

Safety and Privacy Concerns

Fine-tuning or storing user data poses serious privacy and safety issues:

- Alignment Drift: Personal fine-tuning may bypass original safety constraints, unintentionally enabling jailbreaks [1].

- Bias and Overfitting: Training on unrepresentative user data can produce biased or brittle behaviors.

- Data Leakage: Adversaries may detect or extract training data, including personal information, through membership inference or data extraction attacks [2], [3].
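To make the leakage risk concrete, the simplest membership-inference baseline compares a model's loss on a candidate record against a threshold: records the model was fine-tuned on tend to have lower loss than comparable unseen ones. The sketch below is a toy illustration, not a reproduction of any cited attack. The loss values are made up (hypothetical, not measurements), the function names are hypothetical, and real attacks obtain losses by querying the model and typically use stronger statistics and calibrated thresholds.

```python
def predict_members(losses: list[float], threshold: float) -> list[bool]:
    # Loss-threshold membership inference: flag an example as a training-set
    # member when the model's loss on it is below the threshold.
    return [loss < threshold for loss in losses]


def attack_accuracy(
    member_losses: list[float], nonmember_losses: list[float], threshold: float
) -> float:
    # Fraction of examples whose membership the attack labels correctly.
    hits = sum(predict_members(member_losses, threshold))
    hits += sum(not p for p in predict_members(nonmember_losses, threshold))
    return hits / (len(member_losses) + len(nonmember_losses))


# Hypothetical per-example losses from a model fine-tuned on one user's messages.
member_losses = [0.4, 0.6, 0.5]     # messages that were in the fine-tuning set
nonmember_losses = [2.1, 1.8, 0.7]  # comparable messages the model never saw

print(attack_accuracy(member_losses, nonmember_losses, threshold=1.0))
```

With these toy numbers the attack labels five of six records correctly; the one error is an unseen message the model happens to find easy. Even this crude signal reveals which personal messages were likely in the fine-tuning data.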

Further Reading

Personalized Language Modeling from Personalized Human Feedback

Beyond Dialogue: A Profile-Dialogue Alignment Framework Toward General Role-Playing LLMs

Problem setting:

- Bias between the Role Profile and Scene-Specific Dialogues:

References

- [1] Tseng, Yu-Min, et al. “Two Tales of Persona in LLMs: A Survey of Role-Playing and Personalization.” arXiv:2406.01171 (2024).

- [2] Wang, Jeffrey G., et al. “Pandora’s White-Box: Precise Training Data Detection and Extraction in Large Language Models.” arXiv:2402.17012 (2024).

- [3] Lukas, Nils, et al. “Analyzing Leakage of Personally Identifiable Information in Language Models.” IEEE Symposium on Security and Privacy (S&P) (2023).

- [4] Samuel, Vinay, et al. “PersonaGym: Evaluating Persona Agents and LLMs.” arXiv:2407.18416 (2024).

- [5] Richardson, Chris, et al. “Integrating Summarization and Retrieval for Enhanced Personalization via Large Language Models.” arXiv:2310.20081 (2023).

- [6] Tan, Zhaoxuan, et al. “Democratizing Large Language Models via Personalized Parameter-Efficient Fine-Tuning.” arXiv:2402.04401 (2024).

- [7] Chen, Nuo, et al. “The Oscars of AI Theater: A Survey on Role-Playing with Language Models.” arXiv:2407.11484 (2024).

- [8] Yu, Yeyong, et al. “Beyond Dialogue: A Profile-Dialogue Alignment Framework Towards General Role-Playing Language Model.” arXiv:2408.10903 (2024).

Additional Resources

- Awesome-Personalized-LLM GitHub: A curated list of papers, datasets, and benchmarks on personalized LLMs.
