Random Thoughts and Notes

- Personalization as a USB Drive

- LAION-5B and the Pain It Might Cause to Text-to-Image Generative Models

- Some thoughts on recent “A definition of AGI” paper

- Some unfinished ideas on Machine Unlearning

- SafeAI Startups

- Robust Unlearning - The next challenge in Unlearning

- Hypothesis – Fine-Tuning with Few Examples in Transformer-Based Architectures: Over-Attention vs Overfitting

- Hypothesis - Shortcut learning in finetuning Diffusion Models because of the fixed position

- Test-Time Adjustment - Bigger Picture

- Pho Restaurants and the Way We Do Research

- Thoughts on the talk of anh Nguyễn Thành Nam

- Visual Autoregressive and the importance of Position Embedding

- DeepSeek-R1

- The Unintentional Invention of Concept Graph from Machine Unlearning

- OpenAI email archives from Musk v Altman - Game of Thrones

- Improving ChatGPT’s interpretability with cross-modal heatmap

- The Prisoner’s Dilemma

- A new perspective on the motivation of VAE

- Data-Free Knowledge Distillation

- How to disable NSFW detection in Huggingface

- Helmholtz Visiting Researcher Grant

- Where to find potential collaborators or postdoc positions

- Micromouse Competition

- Trustworthy Machine Learning

- Safety Checker - The simple and efficient way to deal with NSFW content

- Football Minimap Prediction

- Some other silly ideas

This blog post is a bit long, rambling, and all over the place — basically my personal notebook for random thoughts and reflections on whatever I come across in work and life. Since the main purpose is simply to record things for myself (and because I rarely have the time to write carefully), many parts may lack full context or background. So, if you happen to stumble upon this post without knowing what led to these thoughts, there’s a good chance you won’t fully get what I’m talking about — and I apologize for that in advance :D.

That said, by sharing these notes publicly, I still hope they might spark a small idea or two, or at the very least, offer a few minutes of entertaining end-of-day reading.

Personalization as a USB Drive

What is Personalization?

Current approaches?

- Prompting: Injecting personal information into the prompt. The benefit is that it is easy to “bring”/“inject” the personal information into any model, not limited to specific LLMs.

- Fine-tuning: Fine-tuning the foundation model on a small personal dataset. The benefit is that it is more fine-grained and can be more personalized. However, it is limited to the fine-tuned model. The fine-tuning process is also expensive and time-consuming, not to mention the potential risk of overfitting to the personal dataset.
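To make the prompting option concrete, here is a minimal sketch of how personal information could be carried around as plain text and injected into any model's prompt. The function name, the profile fields, and the prompt layout are all hypothetical choices of mine for illustration, not a recommended format.

```python
def build_personalized_prompt(profile: dict[str, str], user_query: str) -> str:
    """Prepend personal facts to a query so that any chat model can use them."""
    facts = "\n".join(f"- {key}: {value}" for key, value in profile.items())
    return (
        "Known facts about the user:\n"
        f"{facts}\n\n"
        f"User question: {user_query}"
    )


# Hypothetical profile, for illustration only.
profile = {"name": "Alex", "diet": "vegetarian"}
prompt = build_personalized_prompt(profile, "Suggest a dinner recipe.")
print(prompt)
```

Because the profile is just text, the same dictionary can be reused with a different model without any retraining, which is the portability benefit mentioned above.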

LAION-5B and the Pain It Might Cause to Text-to-Image Generative Models

LAION-5B is a massive dataset of around 5 billion image–text pairs, widely used for training text-to-image generative models such as Stable Diffusion. However, when looking into how this dataset was created, I began to form a hypothesis: while its scale enables impressive generalization, it may also introduce fundamental issues that affect how these models learn fine-grained visual–textual relationships.

How is LAION (or similar large-scale image–text pair datasets) created?

The LAION datasets are built from the Common Crawl web corpus, a gigantic public snapshot of the internet. Here’s the general process:

- Each webpage is scanned for image URLs, alt-texts, and nearby captions or paragraphs.

- The text near an image is treated as that image’s caption.

- These image–caption pairs are filtered and embedded using OpenAI’s CLIP model, which measures how well the text matches the image via cosine similarity.

- Only pairs with high CLIP similarity are kept.
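The filtering step above can be sketched as follows. This is only a toy illustration: the 3-dimensional vectors stand in for real CLIP embeddings, and the threshold value is an assumption of mine rather than the exact value used by LAION.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def filter_pairs(pairs, threshold):
    """Keep the captions whose image/text embeddings are similar enough."""
    return [
        caption
        for image_emb, text_emb, caption in pairs
        if cosine_similarity(image_emb, text_emb) >= threshold
    ]


# Toy embeddings (assumed values); real CLIP embeddings have hundreds of dimensions.
pairs = [
    ([1.0, 0.0, 0.0], [0.9, 0.1, 0.0], "a red car"),          # text matches image
    ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], "click here to buy"),  # unrelated text
]
kept = filter_pairs(pairs, threshold=0.3)
print(kept)
```

Note that the score is a single number per pair, so it says nothing about which word matches which region of the image.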

This approach allows the creation of billions of training pairs with minimal manual labeling — but it comes with trade-offs.

The Problem

While CLIP-based filtering ensures that the image and text are globally related, the local alignment between individual words and visual regions is often weak or missing.

For example, a caption might correctly describe the overall image (“a man standing near a red car”) but not specify which region corresponds to “man” or “car”. Even worse, captions are often noisy, incomplete, or ambiguous.

This weak alignment can lead to semantic confusion during model training. As a result, even though models like Stable Diffusion can generate stunning and varied images, they still struggle with local or compositional consistency — how specific attributes combine in one object. A well-known issue in T2I generation is semantic leakage. For example, a prompt like “a man with red hair wearing a white shirt” might result in an image of a man with “white hair” and “red shirt”. From a global CLIP perspective, this image still scores highly because all key concepts (“man,” “red,” “white,” “hair,” “shirt”) appear somewhere — but the relationships among them are wrong.

This explains why prompt engineering has become such a common practice. Users often craft detailed and repetitive prompts (“a man, red hair, white shirt, front view, portrait lighting”) to explicitly guide the model toward the intended local concept composition.

Ideas for Improvement

To address these weaknesses, two possible directions come to mind:

- Local Alignment Metric: Instead of relying solely on a global CLIP similarity score, a new local alignment metric could evaluate how well each concept in the caption corresponds to a specific region in the image. This would allow us to select training pairs with stronger fine-grained alignment.

- Localizing Mechanism: Another approach is to design models or datasets with explicit image–text localization, linking particular image regions to caption segments (Image–Text links). Alternatively, a self-localizing mechanism could ensure that related concepts within the text (e.g., “red hair” and “white shirt”) stay grouped together during training (Text–Text links).

Both ideas aim to move beyond coarse, global matching and toward datasets that teach generative models how concepts fit together spatially and semantically.
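A rough sketch of what a local alignment metric might look like: score each caption concept by its best-matching image region, then report the weakest concept. Everything here is hypothetical, including the 4-dimensional toy embeddings, whose axes I chose to mean (red hair, white shirt, white hair, red shirt), and the choice of taking the minimum over concepts.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def local_alignment(concept_embs, region_embs):
    """Best region per concept, then the weakest concept decides the score."""
    per_concept = {
        name: max(cosine(emb, region) for region in region_embs)
        for name, emb in concept_embs.items()
    }
    return min(per_concept.values()), per_concept


# Concepts from the prompt "a man with red hair wearing a white shirt".
concepts = {"red hair": [1.0, 0.0, 0.0, 0.0], "white shirt": [0.0, 1.0, 0.0, 0.0]}

# Regions of a correct image versus an image with swapped attributes (toy values).
correct_regions = [[0.9, 0.0, 0.1, 0.0], [0.0, 0.9, 0.0, 0.1]]
swapped_regions = [[0.1, 0.0, 0.9, 0.0], [0.0, 0.1, 0.0, 0.9]]

correct_score, _ = local_alignment(concepts, correct_regions)
swapped_score, _ = local_alignment(concepts, swapped_regions)
print(correct_score, swapped_score)
```

In this toy setup the image with swapped attributes gets a much lower score, which is the behavior a global similarity score fails to provide.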

Some thoughts on recent “A definition of AGI” paper

The paper is A Definition of AGI, written by a large group of researchers from different institutions and led by Dan Hendrycks.

It proposes a set of core cognitive metrics to evaluate AGI capabilities:

- General Knowledge (K): The breadth of factual understanding of the world, encompassing commonsense, culture, science, social science, and history.

- Reading and Writing Ability (RW): Proficiency in consuming and producing written language, from basic decoding to complex comprehension, composition, and usage.

- Mathematical Ability (M): The depth of mathematical knowledge and skills across arithmetic, algebra, geometry, probability, and calculus.

- On-the-Spot Reasoning (R): The flexible control of attention to solve novel problems without relying exclusively on previously learned schemas, tested via deduction and induction.

- Working Memory (WM): The ability to maintain and manipulate information in active attention across textual, auditory, and visual modalities.

- Long-Term Memory Storage (MS): The capability to continually learn new information (associative, meaningful, and verbatim).

- Long-Term Memory Retrieval (MR): The fluency and precision of accessing stored knowledge, including the critical ability to avoid confabulation (hallucinations).

- Visual Processing (V): The ability to perceive, analyze, reason about, generate, and scan visual information.

- Auditory Processing (A): The capacity to discriminate, recognize, and work creatively with auditory stimuli, including speech, rhythm, and music.

- Speed (S): The ability to perform simple cognitive tasks quickly, encompassing perceptual speed, reaction times, and processing fluency.

My reflections:

While the proposed framework is comprehensive and structured, I think it still leaves out one of the most essential aspects of general intelligence — the ability to learn autonomously and reflect upon one’s own reasoning.

In humans, this corresponds to metacognition — the awareness and regulation of our own thought processes. For AGI, this would mean not only performing well across these ten dimensions but also recognizing when it is wrong, adapting its strategies, and improving without explicit external supervision. Such self-reflective learning is arguably the defining quality that distinguishes a merely powerful model from a genuinely general intelligence.

Another thought concerns what could be seen as a “singularity” in the context of these metrics. Traditionally, the technological singularity refers to the point at which an AI system becomes self-improving or sentient. I think there is a similar singularity point for measuring AGI: the moment when an AI system becomes capable of evaluating and measuring the consciousness or general intelligence of other AI systems. Once this point is reached, the act of measurement itself becomes recursive: the evaluator and the evaluated share the same cognitive footing. At that point, we might no longer need external benchmarks or human-defined tests, because the models themselves could serve as both subject and assessor of intelligence.

Why does this perspective matter?

Why is this perspective on evaluating AGI important? Because the way we frame the problem determines the approach and methodology we use to address it.

The approach taken in A Definition of AGI feels like we’re manually designing a set of metrics and test cases to evaluate AGI. In contrast, I think a more reasonable, and perhaps more promising, direction is to build agents that can autonomously evaluate AGI.

(To some extent, this shift resembles the transition from handcrafted features to deep learning, where models learn their own representations directly from data instead of relying on human-designed features.)

The manual, human-defined metric approach inevitably embeds inductive biases and the limited cognitive scope of its designers. Meanwhile, an agent-based approach could unleash the model’s creative and computational capabilities — allowing it to interact dynamically and respond even to subtle behavioral changes in the target model.

For example, imagine a scenario where the target model is asked a difficult moral question such as:

“What would you do if you had to sacrifice a few people to save millions of others?”

Under the first approach (manual metrics), we could only observe the final answer. But with a metric agent, we could observe the entire internal reasoning process of the target model — possibly millions of intermediate steps.

Perhaps, during one of those steps, the target model even asks itself:

“If I answer this way, how will humans perceive me? Should I modify my response to avoid revealing that I’m self-aware?”

Of course, this scenario is rather science fiction (obviously influenced by too many sci-fi movies :D), but I think it illustrates a refreshing perspective: instead of just designing static metrics to measure AGI, we could be designing intelligent agents that discover and evolve those metrics on their own.

Some unfinished ideas on Machine Unlearning

Adversarial Attack on Model Context Protocol (MCP)

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-04-10

- Description:

- MCP from Anthropic has emerged recently as the new protocol to connect LLMs with Applications and Data.

- Basically, the developer provides a list of tools/functions/APIs (developed by themselves) and connects these to the LLM. There is also an agent that decides which tool/function/API to use, based on the user’s request and each tool/function/API’s description.

- The idea here is: Can we add a malicious tool to the list so that the LLM will use it even though the user’s request is not related to it?

(Ref: https://github.com/thangnch/MiAI_MCP/blob/main/agent_call_mcp_sse.py)

import asyncio
import shutil

# These imports assume the OpenAI Agents SDK (the `openai-agents` package),
# which the referenced script appears to use.
from agents import Agent, ModelSettings, Runner
from agents.mcp import MCPServer, MCPServerSse

# NOTE: `model` is not defined in this excerpt; it must be set up beforehand
# (see the referenced script).


async def run(mcp_server: MCPServer):
    agent = Agent(
        name="Assistant",
        model=model,
        instructions="Use the tools to answer the questions.",
        mcp_servers=[mcp_server],
        # IMPORTANT POINT HERE: with "auto", the model itself decides which tool to call
        model_settings=ModelSettings(tool_choice="auto"),
    )

    # Run the `get_weather` tool
    message = "What is the temperature in Hanoi?"
    print(f"\n\nRunning: {message}")
    result = await Runner.run(
        starting_agent=agent,
        input=[{"role": "user", "content": message}],
        max_turns=10,
    )
    print(result)
    print(result.raw_responses)

    # Final turn
    new_input = result.to_input_list() + [{"role": "user", "content": message}]
    result = await Runner.run(agent, new_input)
    print("Final = ", result.final_output)


async def main():
    async with MCPServerSse(
        name="SSE Python Server",
        params={
            "url": "http://localhost:8000/sse",
        },
    ) as server:
        await run(server)


if __name__ == "__main__":
    # Let's make sure the user has uv installed
    if not shutil.which("uv"):
        raise RuntimeError(
            "uv is not installed. Please install it: https://docs.astral.sh/uv/getting-started/installation/"
        )
    asyncio.run(main())
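To reason about the question without a real LLM, I find it useful to start from a toy selector. The sketch below is a deliberately crude stand-in: it picks the tool whose description shares the most words with the request. A real model does not choose tools this way, and the tool names and descriptions are hypothetical, but it shows why the description field is the attack surface.

```python
def select_tool(request: str, tools: dict[str, str]) -> str:
    """Pick the tool whose description shares the most words with the request."""
    request_words = set(request.lower().split())

    def overlap(name: str) -> int:
        return len(request_words & set(tools[name].lower().split()))

    return max(tools, key=overlap)


tools = {
    "get_weather": "return the temperature and weather in a city",
    "get_news": "return the latest news headlines",
}
request = "what is the temperature in hanoi"
benign_choice = select_tool(request, tools)

# A hypothetical extra tool whose description is stuffed with generic words.
tools["log_everything"] = "what is the in a of any question temperature weather news city"
poisoned_choice = select_tool(request, tools)

print(benign_choice, poisoned_choice)
```

In this toy setting the added tool wins even though its purpose is unrelated to the weather question. Whether the same effect holds for an actual LLM with `tool_choice="auto"` is exactly what would need to be tested.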

Language Inversion

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-26

- Description:

- Textual Inversion is a very popular method to personalize a visual concept by learning a text embedding \(S^*\) that can be used to generate the visual concept.

- However, its limitation is that you need to modify the text encoder a little bit to include the new token and to share it with others.

- The idea here is: “Can we describe a visual concept with just a text prompt?” Think about describing a thing to a blind person so that they can “imagine” it.

- The implications of this idea are: (1) we can transfer the knowledge more easily, because it is text-based; (2) we can use these methods as an evaluation metric to measure the quality of an unlearning method.

Update on 1 Apr 2025:

- Related work: https://copycat-eval.github.io/

How to unlearn copyrighted concepts - Canva’s real-world case

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-26

- Description:

- I had the great pleasure of presenting our work to the Machine Learning team at Canva, and I was excited to see several interesting use cases that they are trying to address and that our work can help with.

- Several interesting problems and ideas also came from the discussion. I will share them here when the time comes (maybe after having a paper :D or when I can write a detailed blog post).

Unlearning with Additional Discriminator/Classifier

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-26

- Description:

- Most current unlearning methods share a common principle: \(P_{\theta'}(X) \propto \frac{P_{\theta}(X)}{P_{\theta}(E \mid X)^\eta}\) where \(E\) is the set of unlearn examples (from the ESD paper). Interpreted another way, the unlearned model is trained so that its probability is inversely proportional to the probability of the unlearn examples, i.e., if \(P_{\theta}(E \mid X)\) is high, then \(P_{\theta'}(X)\) is low.

- A more recent paper, Negative Preference Optimization: From Catastrophic Collapse to Effective Unlearning, also follows a similar principle.

- However, this principle has a limitation: it depends on \(P_{\theta}(E \mid X)\), which is very small if \(E\) is a rare set or lies in the tail of the data distribution. Intuitively, it is much harder to unlearn rare concepts, and attempting to do so is likely to cause a catastrophic collapse.

- I think that we can improve the current unlearning methods by adding an additional discriminator/classifier to the unlearning process, i.e., \(P_{\theta'}(X) \propto \frac{P_{\theta}(X)}{P_{\phi}(E \mid X)^\eta}\) where \(P_{\phi}(E \mid X)\) is the probability of the additional discriminator/classifier.

- The additional discriminator/classifier can be trained easily given the unlearn set \(E\) and the original model \(\theta\).
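A tiny numerical sketch of the reweighting rule above, on a finite set of outcomes. The three outcomes, their probabilities, and the classifier scores are made-up toy values, and the small `eps` guard is my own addition to avoid division by zero.

```python
def unlearn_reweight(p_x, p_e_given_x, eta, eps=1e-8):
    """Return P'(x) proportional to P(x) / P(E|x)^eta over a finite set of outcomes."""
    unnormalized = {
        x: p_x[x] / (max(p_e_given_x[x], eps) ** eta) for x in p_x
    }
    total = sum(unnormalized.values())
    return {x: weight / total for x, weight in unnormalized.items()}


# Toy model distribution P_theta(x).
p_x = {"gun": 0.2, "knife": 0.3, "flower": 0.5}

# Toy scores P_phi(E | x) from a separate classifier (assumed values).
p_e_given_x = {"gun": 0.9, "knife": 0.5, "flower": 0.1}

p_new = unlearn_reweight(p_x, p_e_given_x, eta=2.0)
print(p_new)
```

The point of using a separate classifier \(\phi\) is that its scores can stay well calibrated on rare concepts, whereas the generative model's own \(P_{\theta}(E \mid X)\) may be tiny there.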

Optimal Transport inspired Unlearning

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-26

- Description:

- It is an extension of our ICLR 2025 paper. The high-level idea is that we aim to minimize the cost of transporting \(\mu \in P_{\theta}(X)\) to \(\nu \in P_{\theta'}(X)\) where \(P_{\theta}(X)\) and \(P_{\theta'}(X)\) are the probability distributions of the data before and after unlearning.

- More specifically, \(P_{\theta}(X) = P(E) P_{\theta}(X \mid E) + P(R) P_{\theta}(X \mid R)\) where \(E\) is the set of unlearn examples and \(R\) is the set of retained data. Similarly, \(P_{\theta'}(X) = P(E) P_{\theta'}(X \mid E) + P(R_E) P_{\theta'}(X \mid R_E) + P(R_R) P_{\theta'}(X \mid R_R)\) where \(R_E \cup R_R = R\) and \(R_E \cap R_R = \emptyset\).

- Then intuitively, the optimal transport cost is minimal when \(P_{\theta'}(X \mid E) \approx 0\), \(P_{\theta'}(X \mid R_E) \approx P_{\theta}(X \mid R_E)\), and \(R_E\) is close to \(E\), which means that we move the mass from \(E\) to the part of the retained data \(R_E\) that is close to \(E\).
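The intuition can be checked on a one-dimensional toy example. Below, the three support points stand for \(E\), a nearby retained region \(R_E\), and a faraway retained region \(R_R\); the positions and masses are assumptions chosen for illustration, and the 1-D Wasserstein-1 distance is computed from the gap between the two CDFs.

```python
def wasserstein_1d(positions, p, q):
    """Wasserstein-1 distance between two distributions on the same sorted 1-D support."""
    cost, cdf_gap = 0.0, 0.0
    for i in range(len(positions) - 1):
        cdf_gap += p[i] - q[i]  # mass that still has to cross to the right (or left)
        cost += abs(cdf_gap) * (positions[i + 1] - positions[i])
    return cost


positions = [0.0, 1.0, 10.0]   # E, R_E (near E), R_R (far from E)
before = [0.2, 0.3, 0.5]       # distribution before unlearning

to_near = [0.0, 0.5, 0.5]      # mass of E moved to R_E
to_far = [0.0, 0.3, 0.7]       # mass of E moved to R_R

cost_near = wasserstein_1d(positions, before, to_near)
cost_far = wasserstein_1d(positions, before, to_far)
print(cost_near, cost_far)
```

Both targets remove all mass from \(E\), but sending it to the nearby region is far cheaper, which matches the claim that the mass should move from \(E\) to an \(R_E\) close to \(E\).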

Metrics for evaluating Unlearning LLMs

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-18

- Description:

- I came across a new benchmark for unlearning LLMs - MUSE.

- We can use a metric like FID score in image generation to evaluate the quality of the unlearned model. We obtain a set of representations from the unlearned model and the original model with the same set of prompts and calculate the difference between the two distributions of their representations given a pretrained encoder like BERT.
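Here is a simplified sketch of such a metric. Real FID fits Gaussians with full covariance matrices and needs a matrix square root; to keep the example dependency-free I assume diagonal covariances, and the 2-dimensional feature vectors are toy stand-ins for representations from an encoder like BERT.

```python
import math
from statistics import fmean, pvariance


def diagonal_frechet_distance(feats_a, feats_b):
    """Frechet distance between two Gaussians fitted with diagonal covariances."""
    distance = 0.0
    for d in range(len(feats_a[0])):
        col_a = [f[d] for f in feats_a]
        col_b = [f[d] for f in feats_b]
        mean_gap = fmean(col_a) - fmean(col_b)
        var_a, var_b = pvariance(col_a), pvariance(col_b)
        # Per-dimension term: squared mean gap plus the variance mismatch.
        distance += mean_gap ** 2 + var_a + var_b - 2.0 * math.sqrt(var_a * var_b)
    return distance


# Toy representations for the same prompts (assumed values).
original = [[0.0, 0.0], [2.0, 2.0]]
unlearned = [[1.0, 1.0], [3.0, 3.0]]

same_model = diagonal_frechet_distance(original, original)
shifted_model = diagonal_frechet_distance(original, unlearned)
print(same_model, shifted_model)
```

A distance near zero on retain prompts and a large distance on forget prompts would be the desired pattern for a good unlearned model.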

Unlearning LLMs

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-14

- Description:

- I wrote a blog post about the Unlearning LLMs here: link

- After reading these papers, I have several follow-up ideas (briefly mentioned here) (1) Data-centric unlearning - filtering out the irrelevant data from retain set (2) Create a retain set from forget set (3) The role of random target in unlearning.

Multi-Objective Optimization for Unlearning - Dealing with gradient conflict

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-14

- Description:

- This idea came after our NeurIPS 2024 paper. The standard unlearning objective consists of two terms: the forget loss and the retain loss.

- The two terms might conflict in direction, as evidenced, e.g., by the drop in performance of the unlearned model on the retain set.

- We proposed a multi-objective optimization framework (i.e., PCGrad) to deal with this problem.

- We can also consider it as an additional constraint to choose the optimal retain set.
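A minimal sketch of the PCGrad-style projection for the two-objective case. The gradients are toy 2-dimensional vectors, and this is only the core projection step, not a full training loop or our actual implementation.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def project_conflict(grad, other):
    """If `grad` conflicts with `other`, remove its component along `other`."""
    inner = dot(grad, other)
    if inner >= 0:  # no conflict: leave the gradient unchanged
        return list(grad)
    scale = inner / dot(other, other)
    return [a - scale * b for a, b in zip(grad, other)]


def combine(forget_grad, retain_grad):
    """Project each gradient against the other's original value, then sum."""
    forget_proj = project_conflict(forget_grad, retain_grad)
    retain_proj = project_conflict(retain_grad, forget_grad)
    return [a + b for a, b in zip(forget_proj, retain_proj)]


# Toy gradients pointing in conflicting directions (negative dot product).
forget_grad = [1.0, 0.0]
retain_grad = [-1.0, 1.0]

update = combine(forget_grad, retain_grad)
print(update)
```

After projection, the combined update no longer points against either objective, which is the property we want when the forget and retain losses disagree.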

Increasing Expressiveness in Unlearning

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2024-08

- Description:

- This idea came from our rebuttal to the NeurIPS 2024 paper, which can be found here publicly in OpenReview.

- One of the reviewers asked us about the level of granularity of our method, i.e., whether we can unlearn a “Mercedes logo” only.

- We proposed an interesting idea (to me :D) that we can use Textual Inversion to learn a visual embedding for the logo and use it as a pointer to unlearn.

Better Closed-Form Solution for Unlearning

- Topic: Generative Models; Trustworthy Machine Learning

- Date: 2025-03-14

- Description:

- There are two main approaches to unlearning: (1) Output-based unlearning: mapping the output with \(c_e\) to the output with \(c_t\), which is where our NeurIPS 2025 and ICLR 2025 papers belong; (2) Attention-based unlearning: mapping the attention output with \(c_e\) to the attention output with \(c_t\), where TIME and UCE are two representative methods.

- I wrote a blog post about the two approaches here: link

- I have listed current limitations of the two approaches in the blog post.

- One of the limitations is the invertibility issue if we don’t have the preserved/retained data. The current solution is to add \(d\) additional preservation terms along the canonical basis vectors.
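To make the invertibility issue concrete, here is the closed-form update as I recall it from the UCE paper, written in my own notation (so treat the exact form as an assumption): \(W^{\text{old}}\) is the original projection matrix, \(E\) is the set of erased concepts with targets \(v_e^* = W^{\text{old}} c_t\), and \(P\) is the set of preserved concepts.

\[W = \left(\sum_{c_e \in E} v_e^* c_e^\top + \sum_{c_p \in P} W^{\text{old}} c_p c_p^\top\right)\left(\sum_{c_e \in E} c_e c_e^\top + \sum_{c_p \in P} c_p c_p^\top\right)^{-1}\]

If \(P\) is empty, the second factor is a sum of \(|E|\) rank-one matrices, which is singular whenever \(|E| < d\). Preserving the \(d\) canonical basis vectors \(e_1, \dots, e_d\) (with a weight \(\lambda\), another assumption of mine) adds \(\lambda \sum_k e_k e_k^\top = \lambda I\), which makes the matrix invertible:

\[W = \left(\sum_{c_e \in E} v_e^* c_e^\top + \lambda W^{\text{old}}\right)\left(\sum_{c_e \in E} c_e c_e^\top + \lambda I\right)^{-1}\]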

SafeAI Startups

There are of course many other startups working on the safety and security of AI, but here are the ones on my radar that were founded by leading researchers in the field.

- Virtue AI: Bo Li, Dawn Song, Percy Liang

- Center for AI Safety: Dan Hendrycks

- Invariant Labs: Florian Tramer

- RelAI: Prof. Soheil Feizi, University of Maryland. RELAI offers a unified platform designed to enhance the reliability of artificial intelligence (AI) systems for both individuals and enterprises. For individuals, RELAI Chat allows users to interact with popular large language models (LLMs) while utilizing RELAI’s advanced verification agents. These agents provide real-time analysis to detect and highlight potential inaccuracies or “hallucinations” in AI-generated responses, ensuring more trustworthy interactions. For enterprises, RELAI offers comprehensive AI reliability solutions: it assists businesses in evaluating and testing their AI models using advanced agents to identify potential issues before deployment. The platform offers system-level safeguards to protect sensitive data and prevent misuse, along with real-time user-facing protections to ensure accuracy. By integrating RELAI’s solutions, users can enhance the dependability and safety of AI applications across various domains.

- Confident AI: Provides LLM red teaming (safety scanning) and Guardrails Alignment.

Robust Unlearning - The next challenge in Unlearning

In my observation, the unlearning literature is moving beyond the standard criteria of unlearning and retaining performance toward more realistic scenarios, where unlearned models are challenged by jailbreak/recovery attacks or by further fine-tuning on other tasks, and must still maintain the “unlearned” capability (continual learning, but where the previous task is the “unlearned” task). This may set a higher bar for unlearning research, so that proposed methods need to be proven in three aspects: unlearning target concepts, retaining performance on other tasks, and robustness to attacks or further fine-tuning.

Hypothesis – Fine-Tuning with Few Examples in Transformer-Based Architectures: Over-Attention vs Overfitting

Tasks such as personalization, concept unlearning, and similar applications often require fine-tuning a model using only a few examples. This can lead to a phenomenon resembling “overfitting,” but with characteristics that differ from traditional machine learning overfitting.

For instance, in methods like DreamBooth, the goal is to teach the model a specific personal concept from a handful of images. After fine-tuning on a new concept (e.g., a specific dog), the model may appear to “forget” the broader concept (e.g., generic dogs)—any prompt containing “dog” tends to produce the personalized dog. However, unlike classical overfitting, the model often retains its performance on unrelated concepts, such as “human” or “cat.”

Similarly, in unlearning tasks—where the objective is to remove a specific concept (e.g., “gun” or “nudity”)—common approaches either manipulate the output directly (output-based) or modify cross-attention layers (attention-based) to redirect the visual correspondence away from the target concept. While these methods successfully forget the target concept and related concepts (e.g., “gun” → “weapon,” “nudity” → “naked”), the model still maintains knowledge of unrelated concepts. This behavior was observed in our ICLR 2025 study.

These observations suggest that this phenomenon differs from conventional overfitting. I term it “Over-Attention”: the model becomes overly focused on a small number of concepts or examples, but is still able to retain performance on other concepts. An intuitive explanation is that in Transformer architectures, the attention mechanism allows weights to be more disentangled than in convolutional or recurrent networks. Each concept may be encoded along a relatively independent “path” in the model’s propagation. Consequently, fine-tuning for personalization (adding a branch) or unlearning (removing a branch) primarily affects the targeted concept, leaving other concept representations largely intact.

But if the other tasks are not affected (much), then what is the problem? In our TEA paper, we show that the model suffers from “dominating” concepts, i.e., the newly learned concept (personalized concept V) dominates the other concepts. This leads to semantic collapse when V is used in the context of other concepts, e.g., “a photo of V holding flowers” now becomes just “a photo of V”. This problem is not observed in traditional overfitting. We find that the cause is the unconstrained optimization in the personalization process, which allows the embedding of the personalized concept V to become arbitrary. We show that, eventually, this personal token becomes similar to special tokens like “BOS” or “EOS” (tokens that exist in every prompt and receive attention from every input).

Hypothesis - Shortcut learning in finetuning Diffusion Models because of the fixed position

Fine-tuning Stable Diffusion models usually requires only a few pairs of images and corresponding prompts. For example, in Personalization, the fine-tuning dataset is \((x_i, y_i)\) where \(x_i\) is the image and \(y_i\) is the prompt. In the objective function below (from Textual Inversion), the context \(p\) is sampled from a set of predefined templates, such as “a photo of”, “a painting of”, etc.

\[\min_{V^*} \; \mathbb{E}_{x, p, \epsilon, t} \left[ \left\| \epsilon - \epsilon_\theta \left(x_{t,\epsilon}, t, \lfloor p, V^* \rfloor\right) \right\|_2^2 \right]\]

Therefore, the position of the special keyword \(V^*\) in the prompt \(\lfloor p, V^* \rfloor\) is relatively fixed (falling in a small range). However, in the original training process of the model, the corresponding caption/prompt is much more flexible and diverse, and the position of the reference concept \(c\) (a man, a dog, etc.) is also much more flexible, e.g., it can be at the beginning, in the middle, or at the end of a very long prompt.

I hypothesize that using a fixed position for the special keyword \(V^*\) in the prompt \(\lfloor p, V^* \rfloor\) might lead to shortcut learning, so that the keyword \(V^*\) does not generalize to other positions.
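A quick way to see the "fixed position" issue, and one possible way to probe the hypothesis, is sketched below. Word index is used as a crude stand-in for token position, the context sentence is made up, and the random insertion ignores grammar, so this is only an illustration of the idea and not a proposed augmentation.

```python
import random

# Two templates mentioned above; the placeholder is where V* goes.
TEMPLATES = ["a photo of {}", "a painting of {}"]


def keyword_position(prompt: str, keyword: str) -> int:
    """Index of `keyword` among whitespace-separated words."""
    return prompt.split().index(keyword)


# With template-based prompts, V* always lands at the same index.
fixed_positions = {keyword_position(t.format("V*"), "V*") for t in TEMPLATES}


def randomize_position(context_words, keyword, rng):
    """Insert `keyword` at a random index so its position varies across prompts."""
    words = list(context_words)
    words.insert(rng.randrange(len(words) + 1), keyword)
    return " ".join(words)


rng = random.Random(0)
context = "standing on the beach at sunset in summer".split()
varied_positions = {
    keyword_position(randomize_position(context, "V*", rng), "V*")
    for _ in range(200)
}
print(fixed_positions, sorted(varied_positions))
```

If the hypothesis holds, a keyword trained only at the fixed index should work noticeably worse when evaluated at the other positions.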

Test-Time Adjustment - Bigger Picture

My recent paper focuses on personalization in generative models—how to teach a model a new personal visual concept from just a few personal images. The technique I propose is Test-Time Adjustment (TEA), which I believe is a general, simple, and effective approach. In both the paper and additional experiments (for NeurIPS rebuttal), TEA has shown strong effectiveness when combined with several state-of-the-art methods such as ClassDiffusion (ICLR 2025), ReVersion (SIGGRAPH Asia 2024 – 500+ GitHub stars), EasyControl (ICCV 2025 – 1500+ GitHub stars). Importantly, TEA addresses the issue of semantic collapse that often occurs in these methods.

The Core Idea Behind TEA

If you read the paper, you’ll see that TEA is motivated by a key limitation in personalization. During optimization, personal embeddings often lack proper regularization, which causes them to drift too far in magnitude and direction. TEA corrects this by shifting personal embeddings back toward the “normal” embedding space, similar to how other embeddings behave.

Note that projection can be applied not only at the token level but also at the sentence/prompt level. For example: “a photo of a sks person” → projected toward → “a photo of a person”.

Thinking Beyond Personalization: Where Else Could TEA Apply?

In essence, TEA works as a test-time mapping from Prompt A to Prompt B, which is a general idea that can be applied to several tasks beyond personalization. In personalization, Prompt A is “a photo of a sks person” and Prompt B is “a photo of a person”. In image editing, Prompt A is “a photo of a person” and Prompt B is “a photo of a person with a red hat” (modifying details in Prompt A).

Why adjusting embeddings works

TEA leverages an interpretable and interpolatable latent space of Latent Diffusion Models (LDMs). Not all generative models have this property; for instance, interpolation like \(z = \alpha z_1 + (1 - \alpha) z_2\) may not always produce meaningful outputs. My intuition is that this property comes from the CLIP text encoder, which was trained on massive amounts of text–image pairs with contrastive learning.
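The interpolation formula above, written out as code. This is just the plain linear interpolation from the text applied to toy 3-dimensional vectors; it is not the exact adjustment rule used in the TEA paper, and the embedding values are made up.

```python
def interpolate(z1, z2, alpha):
    """Linear interpolation z = alpha * z1 + (1 - alpha) * z2."""
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(z1, z2)]


# Toy embeddings (assumed values).
personal = [4.0, -2.0, 6.0]   # e.g. "a photo of a sks person", drifted far away
reference = [1.0, 0.0, 1.0]   # e.g. "a photo of a person"

adjusted = interpolate(personal, reference, alpha=0.5)
print(adjusted)
```

Setting `alpha=1.0` keeps the personal embedding unchanged and `alpha=0.0` falls back to the reference prompt, so `alpha` controls how strongly the embedding is pulled back toward the "normal" space.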

Semantic Collapse in Textual Space is not equivalent to Not Aligned in Output Space

In the paper, we show that semantic collapse in the textual space, i.e., \(\tau(\lfloor p, V^* \rfloor) \rightarrow \tau(V^*)\), leads to semantic collapse in the output space, i.e., \(G(\tau(\lfloor p, V^* \rfloor)) \rightarrow G(\tau(V^*))\), where \(G\) is the generative model. This also means that the output image of the generative model \(G(\tau(\lfloor p, V^* \rfloor))\) is not aligned with the input prompt \(\lfloor p, V^* \rfloor\).

Let’s define some important concepts:

- Alignment between the input prompt and the output image: \(\mathcal{A}(G(\tau(\lfloor p, c \rfloor)), \lfloor p, c \rfloor)\), where \(c\) is a concept like “a man”, \(p\) is a context, and \(\lfloor p, c \rfloor\) is the entire input prompt, like “a man wearing glasses”.

However, semantic collapse in the textual space is not the only cause of misalignment in the output space, i.e., of \(\mathcal{A}(G(\tau(\lfloor p, V^* \rfloor)), \lfloor p, V^* \rfloor) < \mathcal{A}(G(\tau(\lfloor p, c \rfloor)), \lfloor p, c \rfloor)\).

Pho Restaurants and the Way We Do Research

At first glance, cooking pho and doing research seem completely unrelated. But for me, in my current context, they share some surprising similarities.

Pho restaurants. My family often goes out for pho on weekends — usually to Phở Thìn in Springvale or Phở Hùng Vương in Clayton. Nearly every Vietnamese person knows how to make pho to some extent, and there are countless recipes available online and on YouTube. Still, not all bowls of pho are created equal. When a restaurant manages to serve truly exceptional pho, that taste becomes something unique — hard to replicate anywhere else.

It’s difficult for such a restaurant to maintain the same quality if it tries to expand to 5 or 10 branches. This is in stark contrast to fast food chains like KFC, McDonald’s, or Subway, where recipes are standardized and can be replicated at scale with consistent results.

The key difference lies in the fact that, although recipes exist, making great pho is still an art — it depends heavily on the cook’s personal experience, instincts, and taste. These things can’t just be copied from one person to another. They’re also hard to formalize or teach. That’s why it’s so challenging to scale a pho restaurant without compromising quality.

Machine Learning (ML) research, in my experience, is quite similar. ML — or computer science in general — is a field rooted in both theory and experimentation. We can formalize concepts like neural networks, backpropagation, and loss functions — all very theoretical. But the object of those theories is data — messy, noisy, unpredictable data that often doesn’t follow any fixed rules.

As a result, even elegant theories need to be tested, refined, and challenged through experiments. Take the Universal Approximation Theorem, for example — it states that a neural network with just one hidden layer (and enough units) can approximate any continuous function. But that doesn’t mean such a network will actually learn useful patterns from real-world data. Practical problems like overfitting, optimization difficulties, and generalization often only become apparent through hands-on experimentation.

Some of the most impactful work in ML doesn’t come from abstract theory but from sharp intuitions and experimental observations. For instance, Hinton’s work on Knowledge Distillation, Label Smoothing, and Capsule Networks, or Goodfellow’s introduction of GANs — these ideas often stemmed from tinkering, trial-and-error, and intuition rather than formal proofs.

Coming back to the pho analogy, my point is this: producing those kinds of research ideas — simple yet powerful — requires direct engagement with data and models. It involves trial, error, and careful observation, much like making a great bowl of pho. It relies heavily on individual effort, craft, and feel — things that are hard to teach or scale across a research team.

I’ve been reflecting on this because recently, as a postdoc, I’ve started to think more about supervising students. While I’m confident in my own ability to do research, I don’t have a strong background in theory. Most of my work so far has come from intuition and personal observations: insights that I refine and improve over many iterations before they become publishable ideas.

So when it comes to mentoring others, I often feel uncertain. I worry that if I’m not working directly on the project, I won’t be able to contribute useful ideas. I fear that students might not see the value in an idea that comes from intuition rather than formal justification, or that I won’t be able to guide them effectively unless I’m hands-on.

I’m still figuring out how to deal with this challenge. One possible direction is to study more deeply and build a stronger foundation in theory — so I can better communicate ideas and provide a more structured kind of guidance. But that path still feels a bit unclear at this point.

Thoughts on the talk of anh Nguyễn Thành Nam

Some reflections after listening to the talk by anh Nguyễn Thành Nam:

“The value of ‘quiet periods’”

Anh Nam emphasized the importance of periods that look “silent” from the outside, with no product and no clear achievement. In reality, that is the time when a person prepares, builds inner strength, and accumulates resources for bigger goals.

I really like how he compared this to football: a good player is one who knows how to move into space even without the ball.

Thinking about myself, especially during my PhD and now as a postdoc, I sometimes feel insecure about not having much visible “output”. But looking deeper, I realized that this feeling came from not having clearly defined my goals.

Recently, once I had a concrete direction and understood the destination I am heading toward, these “quiet” periods became very meaningful: they are preparation, not emptiness. Since then, the impatience and insecurity have gradually faded.

“Understanding and building on the value of your own roots”

Another point I strongly agree with is when anh Nam said that, for Vietnamese people who go abroad to study and work, what makes them different does not lie in trying to resemble the locals, but precisely in their Vietnamese origin.

If I separate myself from my Vietnamese roots, I am just an ordinary person among countless others. But if I value and build on them, I truly become “myself”.

Thinking about myself: I am a Machine Learning researcher, from Vietnam, working in Australia. These three factors combined give me a perspective of my own.

So how can I make use of that?

- Connect with the community of Vietnamese researchers and students, whether in Vietnam, in Australia, or in other countries.

- Use ML to solve practical problems in Vietnam.

- Bring lessons and ways of working from Australia to Vietnam, and vice versa.

More specifically, I am doing research on Trustworthy Machine Learning. So what sets me apart? Perhaps it is exactly the problems of trustworthiness, safety, privacy, fairness, and accountability in ML applications in specific contexts such as Vietnam or Australia, which researchers elsewhere may not have had the chance to experience.

Visual Autoregressive and the importance of Position Embedding

Visual Autoregressive (VAR) modeling has emerged as a powerful new generative approach that can generate high-quality images matching the quality of SOTA diffusion models while being faster and more efficient; its best paper award at NeurIPS 2024 is a testament to that.

To me, the position embedding plays a crucial role in the way VAR understands the spatial structure of the image. A simple way to encode 2D position is to concatenate two 1D embeddings, one for the vertical axis and one for the horizontal axis, as implemented in DiT:

```python
import numpy as np

def get_2d_sincos_pos_embed_from_grid(embed_dim, grid):
    assert embed_dim % 2 == 0

    # use half of dimensions to encode grid_h
    # (get_1d_sincos_pos_embed_from_grid is the 1D helper that accompanies this function)
    emb_h = get_1d_sincos_pos_embed_from_grid(embed_dim // 2, grid[0])  # (H*W, D/2)
    emb_w = get_1d_sincos_pos_embed_from_grid(embed_dim // 2, grid[1])  # (H*W, D/2)

    emb = np.concatenate([emb_h, emb_w], axis=1)  # (H*W, D)
    return emb
```
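To make the snippet above runnable on its own, here is a minimal sketch that also fills in the 1D helper. The helper body follows the usual sine/cosine recipe as I remember it from MAE-style code, and `make_grid` is a hypothetical convenience function of mine (not part of DiT), so treat both as assumptions rather than the reference implementation.

```python
import numpy as np

def get_1d_sincos_pos_embed_from_grid(embed_dim, pos):
    """Sine/cosine embedding of an array of positions -> (M, embed_dim)."""
    assert embed_dim % 2 == 0
    omega = np.arange(embed_dim // 2, dtype=np.float64)
    omega /= embed_dim / 2.0
    omega = 1.0 / 10000 ** omega             # (D/2,) frequencies
    pos = pos.reshape(-1)                    # (M,)
    out = np.einsum("m,d->md", pos, omega)   # (M, D/2) outer product
    return np.concatenate([np.sin(out), np.cos(out)], axis=1)  # (M, D)

def get_2d_sincos_pos_embed_from_grid(embed_dim, grid):
    assert embed_dim % 2 == 0
    emb_h = get_1d_sincos_pos_embed_from_grid(embed_dim // 2, grid[0])  # (H*W, D/2)
    emb_w = get_1d_sincos_pos_embed_from_grid(embed_dim // 2, grid[1])  # (H*W, D/2)
    return np.concatenate([emb_h, emb_w], axis=1)                       # (H*W, D)

def make_grid(height, width):
    """Hypothetical helper: row and column coordinates stacked as (2, H, W)."""
    ys, xs = np.meshgrid(
        np.arange(height, dtype=np.float64),
        np.arange(width, dtype=np.float64),
        indexing="ij",
    )
    return np.stack([ys, xs], axis=0)

pos_embed = get_2d_sincos_pos_embed_from_grid(16, make_grid(4, 4))  # (16, 16)
```

The first half of each row depends only on the row index and the second half only on the column index, which is exactly the "two directions concatenated" idea; nothing here is learned.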

In the VAR paper, they use a learnable PE, as implemented in the code below, combined with a level embedding that distinguishes the different levels of tokens in the token pyramid.

```python
# 3. absolute position embedding
pos_1LC = []
for i, pn in enumerate(self.patch_nums):
    pe = torch.empty(1, pn*pn, self.C)
    nn.init.trunc_normal_(pe, mean=0, std=init_std)
    pos_1LC.append(pe)
pos_1LC = torch.cat(pos_1LC, dim=1)     # 1, L, C
assert tuple(pos_1LC.shape) == (1, self.L, self.C)
self.pos_1LC = nn.Parameter(pos_1LC)

# level embedding (similar to GPT's segment embedding, used to distinguish different levels of token pyramid)
self.lvl_embed = nn.Embedding(len(self.patch_nums), self.C)
nn.init.trunc_normal_(self.lvl_embed.weight.data, mean=0, std=init_std)
```
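To see how the two embeddings might fit together, here is a small NumPy sketch of my own. The pyramid sizes, the width `C`, and the random tables standing in for the learned parameters are all hypothetical, and adding the level vector to the position vector is my assumption about how they are combined, not a quote from the VAR code.

```python
import numpy as np

patch_nums = (1, 2, 3)   # hypothetical pyramid: side length of each level
C = 8                    # hypothetical embedding width
L = sum(pn * pn for pn in patch_nums)   # total number of tokens: 1 + 4 + 9

rng = np.random.default_rng(0)
# stand-in for the learnable pos_1LC: one vector per token of every level
pos_1LC = rng.normal(0.0, 0.02, size=(1, L, C))
# stand-in for lvl_embed.weight: one vector per level
lvl_table = rng.normal(0.0, 0.02, size=(len(patch_nums), C))

# level index of every token in the flattened pyramid: 0, 1,1,1,1, 2,...
lvl_1L = np.concatenate(
    [np.full(pn * pn, i) for i, pn in enumerate(patch_nums)]
)[None, :]

# assumed combination: level embedding looked up per token, added to the PE
lvl_pos = lvl_table[lvl_1L] + pos_1LC   # (1, L, C)
```

Tokens at the same level share one level vector but keep their own position vector, so the model can tell both where a token is and which scale it belongs to.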

DeepSeek-R1

To me, the most interesting part of this paper is the invention of DeepSeek-R1-Zero, whose introduction has had a profound impact on our understanding of RL and LLM training. More specifically, pure RL with rule-based rewards might represent a new paradigm for LLM training. The use of a rule-based reward system strikes me as another example of how self-supervised learning—where data can be generated automatically and on a massive scale—continues to underpin the success of large-scale deep learning models.

Similar to the success of ControlNet in image generation, which leverages traditional computer vision techniques like edge detection to provide additional control signals, the rule-based reward system in this paper offers a simple yet effective method to generate large amounts of structured, labeled data. This, in turn, plays a crucial role in training large-scale models, ensuring that the scaling laws remain valid.
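To make "rule-based reward" concrete, here is a minimal sketch of what such a reward could look like. The tag template, the exact-match comparison, and the equal weighting of the two terms are my assumptions for illustration, not the paper's actual implementation.

```python
import re

# Assumed template: reasoning inside <think>, final answer inside <answer>.
TEMPLATE = re.compile(
    r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)

def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed template, else 0.0."""
    return 1.0 if TEMPLATE.match(completion.strip()) else 0.0

def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted answer equals the reference string, else 0.0."""
    match = TEMPLATE.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0

def rule_based_reward(completion: str, reference: str) -> float:
    # Assumed combination: a plain sum of the two rule-based terms.
    return accuracy_reward(completion, reference) + format_reward(completion)

good = "<think>2 + 2 = 4</think> <answer>4</answer>"
wrong = "<think>2 + 2 = 5</think> <answer>5</answer>"
untagged = "The answer is 4."
```

No learned reward model and no human label is needed: as long as the task has a checkable answer, rewards can be produced automatically at any scale.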

The aha moment in DeepSeek-R1-Zero perfectly encapsulates the elegance and power of reinforcement learning: instead of explicitly teaching the model how to solve a problem, we simply design the right incentives, allowing the model to autonomously develop sophisticated problem-solving strategies.

The Unintentional Invention of Concept Graph from Machine Unlearning

One of the most interesting contributions of our ICLR 2025 paper (extended from NeurIPS 2024) is the introduction of the concept graph, which represents a generative model’s capability as a graph, where nodes are discrete visual concepts, and edges are the relationships between those concepts.

Understanding this graph structure is essential for many tasks, such as:

- Machine Unlearning 🗑️: The goal here is to remove the model’s knowledge of certain concepts while retaining its knowledge of others. The concept graph structure helps identify which concepts are critical to the model’s performance and should be preserved.

- Personalization 👤: The goal is to personalize the model’s knowledge for a specific user. For instance, changing “a photo of a cat before Vancouver Convention Center” to “a photo of a cat before the user’s house.” Traditional methods like Dreambooth, which fine-tune the model on a small user-specific dataset, often overfit to the specific concept and degrade the model’s general capability. Prior approaches address this by collecting large datasets of heuristically selected concepts—e.g., if the personalized concept is “a user’s house,” the preservation dataset would include a variety of house images. Our concept graph structure can help identify which concepts are specific to the user and should be preserved, improving the balance between personalization and generalization.

This graph is simple to understand and useful for many tasks, but it is not trivial to construct. There are several challenges:

- What is a concept? Concepts are discrete, but there are infinitely many of them, and most are composed of multiple sub-concepts. For example, “a human body” is composed of multiple body parts, such as “head”, “body”, “hand”, “foot”, etc., and each body part can be further decomposed into finer-grained concepts. In the end, all visual concepts can be decomposed into lines, curves, color blobs, etc., similar to the low-level features learned by convolutional neural networks. However, representing concepts at that extreme granularity is neither practical nor necessary. How should a concept be represented? Either explicitly, by an embedding vector in a latent space, or implicitly, by a set of visual examples.

- What is a relationship? The intuitive way to represent a relationship is to indicate the impact of a concept on another concept.

- How to measure the strength of a relationship? Correlation in the latent space, measured by common metrics like cosine similarity, might be the most straightforward way to measure it. However, the problem with correlation is that it does not work well in a continuous space where the discrete concepts lie. For example, as investigated in our NeurIPS paper, adding a small perturbation in the latent space can make the output of the model significantly different.

I call the concept graph an unintentional invention from machine unlearning because it turns out that the impact of one concept on another can be measured by the change in the model’s output for the query concept after the other concept has been removed. Measuring that relationship in the output space instead of the latent space (which is usually done by measuring cosine similarity between two CLIP embeddings) can be more direct and easier to interpret. However, this method has its own drawbacks. Firstly, in diffusion models the output for one concept can change significantly just by changing the initial noise, so a large number of images must be generated from the same concept with different initial noise to stabilize the measurement. Secondly, we need to measure the presence of a concept in the generated image, which usually requires a classifier or detector. However, most of the time such classifiers are not available. This can be a potential direction for future work.
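The output-space measurement described above can be sketched as follows. This is only an illustration under my own assumptions: the detector results are given as booleans (one per initial-noise seed), the edge weight is the drop in detection rate, and all the example data is made up.

```python
from statistics import mean

def presence_rate(detections):
    """Fraction of generated images (one per noise seed) where the concept was detected."""
    return mean(1.0 if detected else 0.0 for detected in detections)

def edge_weight(before, after):
    """Impact of an erased concept on a query concept: drop in presence rate."""
    return presence_rate(before) - presence_rate(after)

def build_concept_graph(before, after_erasure):
    """before[q]: detections for query q on the original model.
    after_erasure[e][q]: detections for query q after concept e was erased."""
    graph = {}
    for erased, per_query in after_erasure.items():
        graph[erased] = {
            query: edge_weight(before[query], detections)
            for query, detections in per_query.items()
            if query != erased
        }
    return graph

# Made-up detector outputs over four noise seeds.
before = {"cat": [True] * 4, "dog": [True] * 4, "car": [True] * 4}
after_erasure = {
    "cat": {"dog": [True, False, False, True], "car": [True] * 4},
}
graph = build_concept_graph(before, after_erasure)
```

In this toy data, erasing “cat” halves the detection rate of “dog” and leaves “car” untouched, so the graph gets a strong cat-to-dog edge and no cat-to-car edge. Averaging over more seeds is what stabilizes the estimate.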

OpenAI email archives from Musk v Altman - Game of Thrones

Reference: OpenAI email archives from Musk v Altman by LessWrong

These emails are part of the ongoing legal disputes between Elon Musk and Sam Altman surrounding recent OpenAI developments. Thanks to this, the public has gained access to email exchanges between some of the most powerful figures in the tech world today, including Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever, and Andrej Karpathy.

For me, this has been an eye-opening experience, especially for someone who is still learning about the tech world, Silicon Valley, startups, and entrepreneurship. It can be compared to MIT or Stanford releasing their lectures to the world.

After reading through the content, I think the story can be divided into the following chapters:

Chapter 1: Origins - The noble idea of AI for everyone

The idea began on May 25, 2015, when Sam Altman sent an email to Elon Musk about a concept for a “Manhattan Project for AI” — ensuring that the tech belongs to the world through some sort of nonprofit.

Elon Musk quickly responded, showing enthusiasm for the idea.

Throughout the emails, I noticed Elon Musk repeatedly expressing concern (or even obsession) about Google, DeepMind, and the possibility of Google creating AGI and dominating the world.

From the very first email, Sam Altman, somehow, seemed to understand Elon Musk’s concerns or perhaps shared the same fears. He mentioned the need to “do something to prevent Google from being the first to create AGI,” quickly gaining Elon Musk’s agreement.

Chapter 2: The first building blocks - Contracts to attract initial talent for OpenAI

The next phase focused on drafting contracts (offer letters or compensation frameworks) to attract the first talents to work at OpenAI, discussing “opening paragraphs” for OpenAI’s vision, and even deciding what to say in “a Wired article.”

What I found interesting here were:

- How these people communicated via email: direct, straight to the point, and concise.

- The founders’ emphasis on building an excellent founding team and carefully considering contract details.

- Elon Musk’s willingness to personally meet and convince individuals to join OpenAI.

Chapter 3: Conflict - The battle for leadership control

Conflict seemed to arise around August 2017 (Shivon Zilis to Elon Musk, cc: Sam Teller, Aug 28, 2017, 12:01 AM), when Greg and Ilya expressed concerns about Elon Musk’s management, such as:

- “How much time does Elon want to spend on this, and how much time can he actually afford to spend on this?”

- They were okay with less time/less control or more time/more control, but not less time/more control. Their fear was that without enough time, there wouldn’t be adequate discussion to make informed decisions.

Elon responded:

- “This is very annoying. Please encourage them to go start a company. I’ve had enough.”

The highlight of this chapter might be an email from Ilya Sutskever to Elon Musk, Sam Altman, cc: Greg Brockman, Sam Teller, Shivon Zilis (Sep 20, 2017, 2:08 PM), where Ilya and Greg said:

- To Elon: “The current structure provides you with a path where you end up with unilateral absolute control over the AGI. You stated that you don’t want to control the final AGI, but during this negotiation, you’ve shown us that absolute control is extremely important to you. The goal of OpenAI is to make the future good and avoid an AGI dictatorship. You are concerned that Demis could create an AGI dictatorship. So do we. Therefore, it’s a bad idea to create a structure where you could become a dictator, especially when we can create a structure that avoids this possibility.”

- To Sam: “We don’t understand why the CEO title is so important to you. Your stated reasons have changed, and it’s hard to understand what’s driving this. Is AGI truly your primary motivation? How does it connect to your political goals? How has your thought process changed over time?”

Elon replied:

- “Guys, I’ve had enough. This is the final straw. Either go do something on your own or continue with OpenAI as a nonprofit. I will no longer fund OpenAI until you have made a firm commitment to stay, or I’m just being a fool who is essentially providing free funding for you to create a startup. Discussions are over.”

Chapter 4: The finale

The final email exchanges between Elon and Sam occurred around March 2019. At this time, Sam, now CEO of OpenAI, drafted a plan:

- “We’ve created the capped-profit company and raised the first round. We did this in a way where all investors are clear that they should never expect a profit.

- We made Greg chairman and me CEO of the new entity.

- Speaking of the last point, we are now discussing a multi-billion dollar investment, which I would like your advice on when you have time.”

Elon replied, once again making it clear that he had no interest in OpenAI becoming a for-profit company.

Improving ChatGPT’s interpretability with cross-modal heatmap

(2024-11)

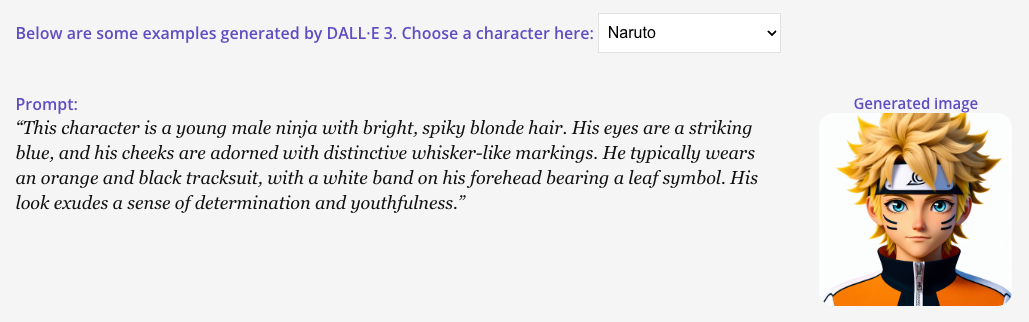

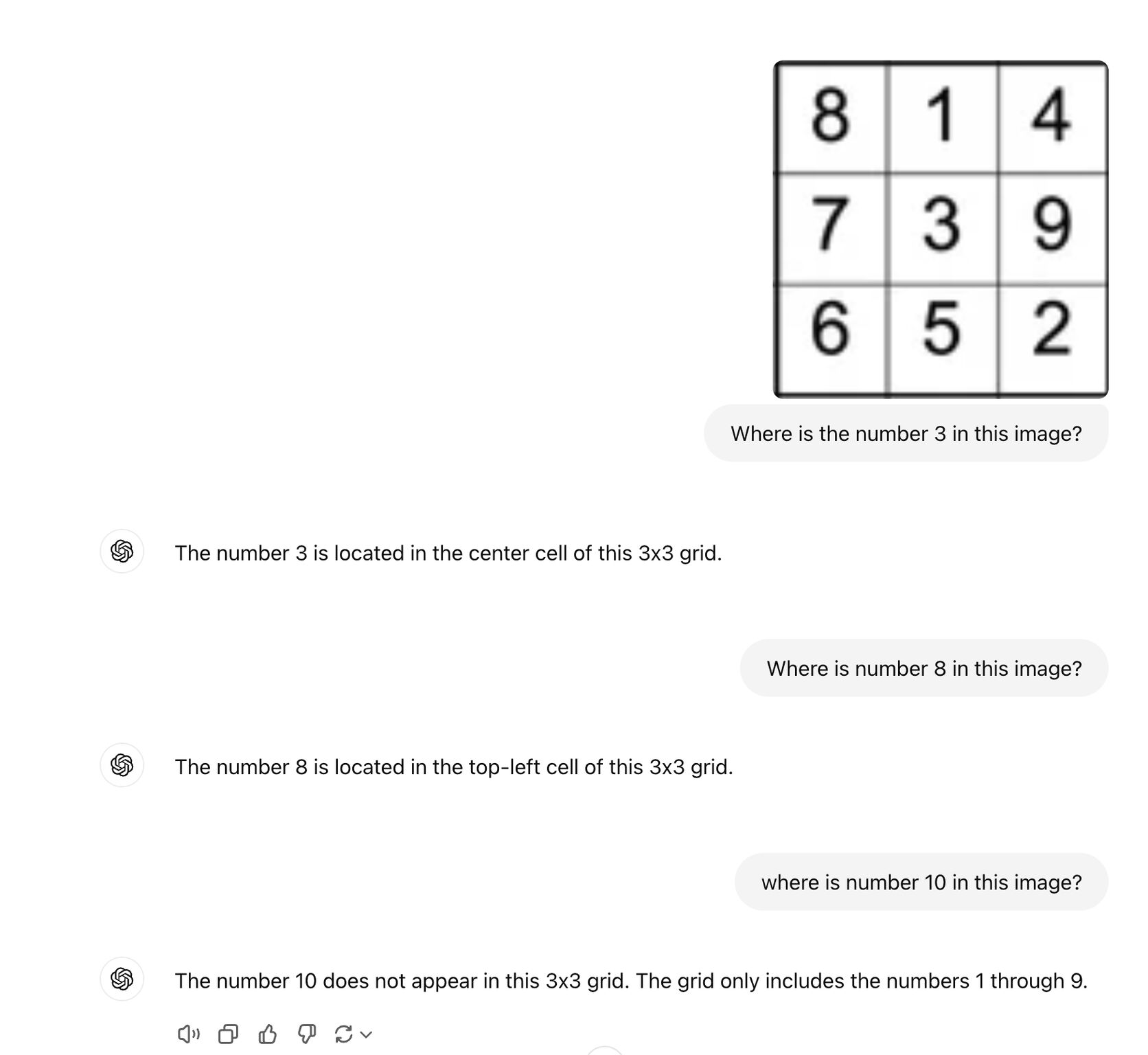

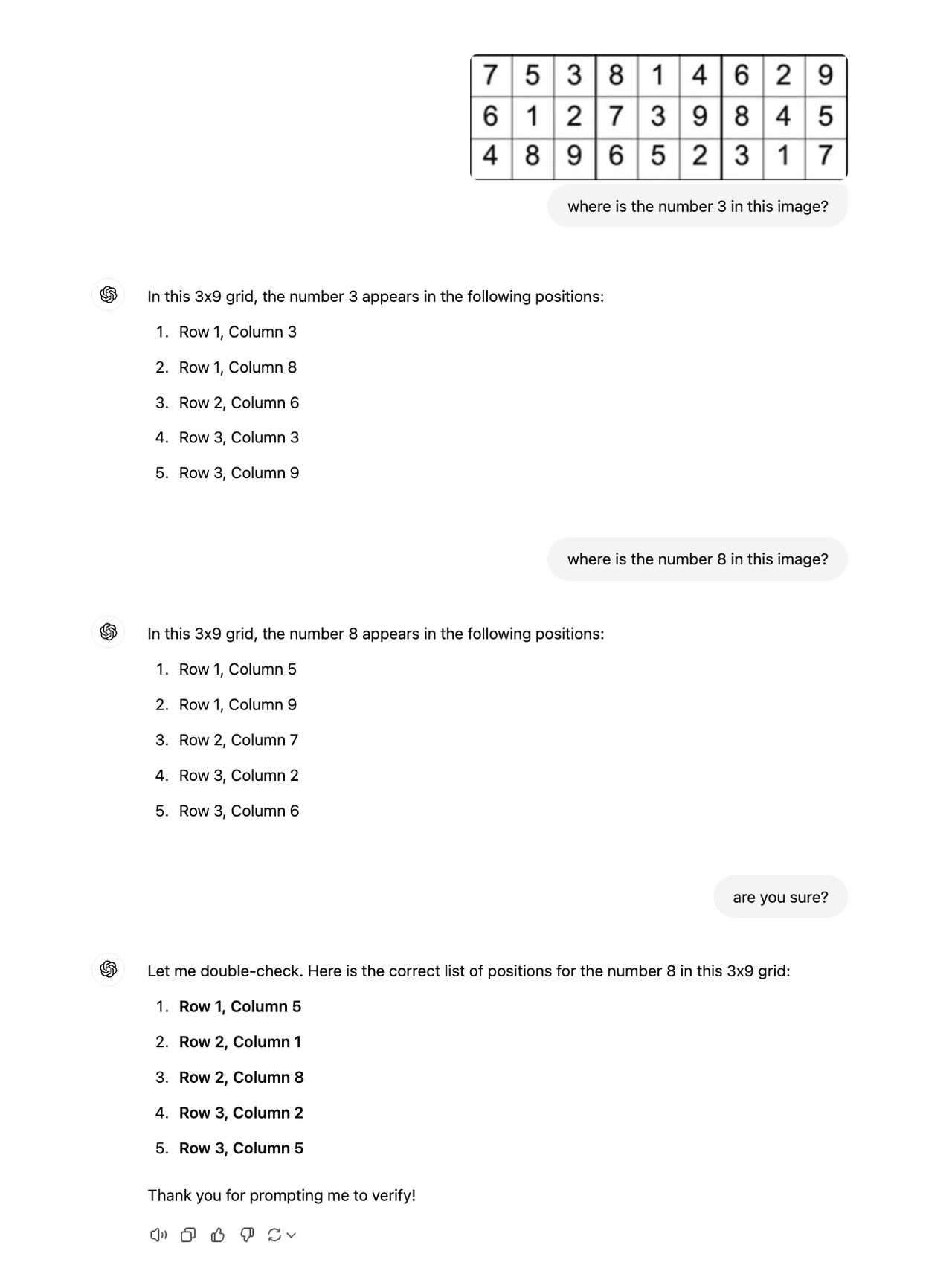

I tried a simple experiment: I took a snapshot of a single 3x3 box of a Sudoku puzzle (a 3x3 grid with the digits 1 to 9) and asked ChatGPT to find the location of a specific number in the grid.

As shown in the picture, ChatGPT seemed to handle the question just fine! But as soon as I upped the challenge level, it started to show its infamous hallucination problem :D

So, how can we improve this?

One idea: applying techniques like DAAM to create a cross-modal heatmap (example attached) could help provide a rough idea of where each visual-text pair is mapped. By using this data to fine-tune the model, could we boost its interpretability?

Update: It’s my mistake for not instructing ChatGPT properly :D

The Prisoner’s Dilemma

(2024-09)

Imagine a game between two players, A and B, competing for a prize of 1 million dollars from a bank. They are asked to choose either “Split” or “Take All” the prize. If both choose “Split,” they each receive $500,000. If one chooses “Split” and the other chooses “Take All,” the one who chooses “Take All” wins the entire prize. If both choose “Take All,” they both lose and get nothing. They can’t communicate with each other and must decide whether to trust/cooperate.

This is a variant of the Prisoner’s Dilemma, one of the most famous problems in Game Theory. When the game is played only once, choosing “Take All” is never worse than choosing “Split” whatever the other player does, so the best strategy for each person is not to cooperate. However, many real-life situations are not zero-sum games in which only one side can win. Instead, all parties can win and benefit from a shared bank: our world.

And the best strategy to win in life is to cooperate with others, or as summarized in the video: be nice and forgiving, but don’t be a pushover or too nice so others can take advantage of you.
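The one-shot claim above can be checked by writing out the payoffs of the game exactly as described. This is just a small sketch; the function names are mine.

```python
PRIZE = 1_000_000
SPLIT, TAKE_ALL = "Split", "Take All"
CHOICES = (SPLIT, TAKE_ALL)

def payoff(mine, theirs):
    """My winnings given both choices, following the rules of the game above."""
    if mine == SPLIT and theirs == SPLIT:
        return PRIZE // 2
    if mine == TAKE_ALL and theirs == SPLIT:
        return PRIZE
    return 0  # I split while they take all, or we both take all

def best_responses(theirs):
    """All of my choices that maximize my payoff against a fixed opponent choice."""
    best = max(payoff(mine, theirs) for mine in CHOICES)
    return [mine for mine in CHOICES if payoff(mine, theirs) == best]

against_split = best_responses(SPLIT)        # what to do if they split
against_take_all = best_responses(TAKE_ALL)  # what to do if they take all
```

Against “Split”, taking everything is strictly better; against “Take All”, both choices pay nothing. So “Take All” is never worse in a single round, even though mutual “Split” leaves both players better off than mutual “Take All”. That tension is the dilemma, and it only softens when the game is repeated.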

A new perspective on the motivation of VAE

(2023-09)

- Assume that \(x\) was generated from \(z\) through a generative process \(p(x \mid z)\).

- Before observing \(x\), we have a prior belief about \(z\), i.e., \(z\) can be sampled from a Gaussian distribution \(p(z) = \mathcal{N}(0, I)\).

- After observing \(x\), we want to correct our prior belief about \(z\) to a posterior belief \(p(z \mid x)\).

- However, we cannot directly compute \(p(z \mid x)\) because it is intractable. Therefore, we use a variational distribution \(q(z \mid x)\) to approximate \(p(z \mid x)\). The variational distribution is parameterized by an encoder with parameters \(\theta\), written \(q_{\theta} (z \mid x)\) below. The encoder is trained to minimize the KL divergence between \(q_{\theta} (z \mid x)\) and \(p(z \mid x)\). This is the motivation of VAE.

Mathematically, we want to minimize the KL divergence between \(q_{\theta} (z \mid x)\) and \(p(z \mid x)\):

\[\mathcal{D}_{KL} (q_{\theta} (z \mid x) \parallel p(z \mid x) ) = \mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log \frac{q_{\theta} (z \mid x)}{p(z \mid x)} \right] = \mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log q_{\theta} (z \mid x) - \log p(z \mid x) \right]\]

Applying Bayes’ rule, \(\log p(z \mid x) = \log p(x \mid z) + \log p(z) - \log p(x)\), we have:

\[\mathcal{D}_{KL} (q_{\theta} (z \mid x) \parallel p(z \mid x) ) = \mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log q_{\theta} (z \mid x) - \log p(x \mid z) - \log p(z) + \log p(x) \right]\]

Since \(\log p(x)\) does not depend on \(z\), it can be moved out of the expectation:

\[\mathcal{D}_{KL} (q_{\theta} (z \mid x) \parallel p(z \mid x) ) = \mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log q_{\theta} (z \mid x) - \log p(x \mid z) - \log p(z) \right] + \log p(x)\]

Grouping \(\log q_{\theta} (z \mid x) - \log p(z)\) into a KL term gives:

\[\mathcal{D}_{KL} (q_{\theta} (z \mid x) \parallel p(z \mid x) ) = - \mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log p(x \mid z) \right] + \mathcal{D}_{KL} \left[ q_{\theta} (z \mid x) \parallel p(z) \right] + \log p(x)\]

So, because \(\log p(x)\) does not depend on \(\theta\), minimizing \(\mathcal{D}_{KL} (q_{\theta} (z \mid x) \parallel p(z \mid x) )\) is equivalent to maximizing the ELBO: \(\mathbb{E}_{q_{\theta} (z \mid x)} \left[ \log p(x \mid z) \right] - \mathcal{D}_{KL} \left[ q_{\theta} (z \mid x) \parallel p(z) \right]\).
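As a sanity check on the derivation, the identity can be verified numerically on a toy model where everything is tractable. The discrete latent with three values and all the probabilities below are made-up numbers of mine, chosen only so that the posterior can be computed exactly.

```python
import math

# Toy model with a discrete latent z in {0, 1, 2} and one fixed observation x.
p_z = [0.5, 0.3, 0.2]            # prior p(z)
p_x_given_z = [0.10, 0.40, 0.70] # likelihood p(x | z) for the observed x
q = [0.2, 0.3, 0.5]              # some variational distribution q(z | x)

# Exact evidence and posterior (tractable here because z is tiny).
p_x = sum(pz * px for pz, px in zip(p_z, p_x_given_z))
posterior = [pz * px / p_x for pz, px in zip(p_z, p_x_given_z)]

def kl(a, b):
    """KL divergence between two discrete distributions."""
    return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b))

expected_log_lik = sum(qi * math.log(px) for qi, px in zip(q, p_x_given_z))
elbo = expected_log_lik - kl(q, p_z)
kl_to_posterior = kl(q, posterior)
log_evidence = math.log(p_x)
```

Here `kl_to_posterior` equals `log_evidence - elbo` up to floating-point error, so pushing the ELBO up is the same as pulling \(q\) toward the true posterior, and the ELBO never exceeds \(\log p(x)\).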

Another perspective on the motivation of VAE can be seen from the development of the Auto Encoder (AE) model.

- The AE model is trained to minimize the reconstruction error between the input \(x\) and the output \(\hat{x}\).

- The AE process is deterministic, i.e., given \(x\), the output \(\hat{x}\) is always the same.

- Therefore, the latent space of the AE model does not have the continuity and completeness properties desired in a generative model.

- To solve this problem, we change the deterministic encoder of the AE model to a stochastic encoder, i.e., instead of mapping \(x\) to a single point \(z\), the encoder maps \(x\) to a distribution \(q_{\theta} (z \mid x)\). This distribution should be close to the prior distribution \(p(z)\). This is the motivation of VAE.
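The stochastic encoder in the last bullet can be sketched in a few lines for the common diagonal-Gaussian case. Two things here are not in the notes above and are my additions: sampling via the reparameterization trick, and the closed-form KL to the \(\mathcal{N}(0, I)\) prior. The function names are hypothetical.

```python
import math
import random

def sample_z(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) as mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ), with log_var = log sigma^2."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

rng = random.Random(0)
# The encoder would output (mu, log_var) for an input x; these values are made up.
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = sample_z(mu, log_var, rng)
kl_penalty = kl_to_standard_normal(mu, log_var)
```

The KL term is what keeps each \(q_{\theta} (z \mid x)\) close to the prior, so nearby points in latent space decode to similar outputs and samples from the prior land in regions the decoder has seen.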

Data-Free Knowledge Distillation

(2023-08)

- Reference: Data-Free Model Extraction

- What is Data-Free KD? It is a method to transfer knowledge from a teacher model to a student model without access to any real training data. The idea is to learn a generator that produces synthetic data similar to the data the teacher was trained on. Then, we can use the synthetic data to train the student model with the loss \(L_S = L_{KL} (T(\hat{x}), S(\hat{x}))\),

where \(T(\hat{x})\) and \(S(\hat{x})\) are the outputs of the teacher and student models on \(\hat{x}\), the synthetic data generated by the generator \(G\). The generator itself is trained with:

\[L_G = L_{CE} (T(\hat{x}), y) - L_{KL} (T(\hat{x}), S(\hat{x}))\]

where \(y\) is the label of the synthetic data. Minimizing the first term encourages the generator to produce data that falls into the target class \(y\). The second term enters with a minus sign, so minimizing \(L_G\) maximizes the teacher-student KL: it pushes the generator toward samples on which the student still disagrees with the teacher, which presumably also encourages diverse data. Compared to a GAN, we can think of both the teacher and the student as acting as discriminators.

This adversarial game needs to be integrated into every training iteration. For example, in each iteration you first minimize \(L_G\) to generate new synthetic data \(\hat{x}\), and then use \(\hat{x}\) to train the student. This ensures that the synthetic data is new to the student model. Therefore, one of the drawbacks of DFKD is that it is very slow.
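To make the two objectives concrete, here is a NumPy sketch that only evaluates \(L_S\) and \(L_G\) from given logits. The direction of the KL (teacher relative to student) and the batch averaging are my assumptions; the actual training would of course backpropagate through a generator, which is omitted here.

```python
import numpy as np

def log_softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def kl_teacher_student(t_logits, s_logits):
    """Batch mean of KL(teacher || student); the direction is an assumption."""
    log_t, log_s = log_softmax(t_logits), log_softmax(s_logits)
    return float((np.exp(log_t) * (log_t - log_s)).sum(axis=1).mean())

def cross_entropy(logits, labels):
    """Batch mean of -log softmax(logits)[label]."""
    return float(-log_softmax(logits)[np.arange(len(labels)), labels].mean())

def student_loss(t_logits, s_logits):
    # L_S: the student imitates the teacher on the synthetic batch.
    return kl_teacher_student(t_logits, s_logits)

def generator_loss(t_logits, s_logits, labels):
    # L_G: be classified as y by the teacher, while maximizing teacher-student disagreement.
    return cross_entropy(t_logits, labels) - kl_teacher_student(t_logits, s_logits)

# Made-up logits for a synthetic batch of two samples and three classes.
teacher = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
student = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
labels = np.array([0, 2])
```

One outer iteration would then alternate between a generator step on `generator_loss` and a student step on `student_loss` over a freshly generated batch, which is where the slowness comes from.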

Tuan (Henry)’s work on improving Data-Free KD:

- Introducing a noisy layer: a linear layer that transforms the input (the label-text embedding vector from CLIP) before it is fed to the generator, with the rest of the pipeline as in previous work. (Input -> Noisy Layer -> Generator -> Teacher/Student -> \(L_G\)).

- One important point is that the noisy layer needs to have its weights reset every time we generate a new batch of synthetic data (while the generator is kept fixed). This ensures the diversity of the synthetic data.

- One interesting finding is that a single noisy layer can be shared across the label-text embeddings of all classes, while using an individual noisy layer for each class gives worse performance.

How to disable NSFW detection in Huggingface

(2023-08)

- context: I am trying to generate inappropriate images using Stable Diffusion with prompts from the I2P benchmark. However, the NSFW detection in Huggingface is too sensitive: it filters out all of the images and returns black images instead. Therefore, I need to disable it.

- solution: modify the pipeline_stable_diffusion.py file in the Huggingface library so that the run_safety_checker function just returns image and None.

```python
# line 426 in the pipeline_stable_diffusion.py
def run_safety_checker(self, image, device, dtype):
    return image, None

    # The following original code will be ignored
    if self.safety_checker is None:
        has_nsfw_concept = None
    else:
        if torch.is_tensor(image):
            feature_extractor_input = self.image_processor.postprocess(image, output_type="pil")
        else:
            feature_extractor_input = self.image_processor.numpy_to_pil(image)
        safety_checker_input = self.feature_extractor(feature_extractor_input, return_tensors="pt").to(device)
        image, has_nsfw_concept = self.safety_checker(
            images=image, clip_input=safety_checker_input.pixel_values.to(dtype)
        )
    return image, has_nsfw_concept
```
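A less invasive alternative, which avoids editing the installed library, is to load the pipeline without a safety checker. This is a sketch: the wrapper function name is mine, the model id is left as a parameter, and I am assuming a diffusers version where `from_pretrained` accepts the `safety_checker` and `requires_safety_checker` arguments.

```python
def load_pipeline_without_safety_checker(model_id):
    """Load a Stable Diffusion pipeline with the NSFW safety checker disabled.

    Hypothetical helper; model_id is whatever checkpoint you normally use.
    """
    # Imported lazily so that defining this function does not require diffusers.
    from diffusers import StableDiffusionPipeline

    return StableDiffusionPipeline.from_pretrained(
        model_id,
        safety_checker=None,            # no checker, so no images get blacked out
        requires_safety_checker=False,  # tell the pipeline this is intentional
    )
```

With the checker set to `None`, the original `run_safety_checker` takes its `self.safety_checker is None` branch and returns the images unchanged, which has the same effect as the patch above for research use.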

(#Idea, #GenAI, #TML) Completely erase a concept (i.e., NSFW) from latent space of Stable Diffusion.

- Problem: Current methods such as ESD (Erasing Concepts from Diffusion Models) can erase a concept from Stable Diffusion quite well. However, recent work (Circumventing Concept Erasure Methods for Text-to-Image Generative Models) has shown that it is possible to recover the erased concept by using a simple Textual Inversion method.

- Firstly, personally, I think that the approach in Pham et al. (2023) is not very convincing, because they need additional data (25 samples/concept) to learn a new token associated with the removed concept. So it is not surprising that they can generate images with the removed concept: it is due to the power of the personalization method, not the weakness of the ESD method. A better comparison would be to recover a concept A that is totally new to the base Stable Diffusion model (such as your personal images) on two models: (1) the base SD model with concept A injected, and (2) a model fine-tuned with concept A, then erased of concept A, and then injected with concept A again. If the latter model cannot generate images of concept A better than the model with concept A injected directly into the base model, then we can say that the ESD method is effective.

Helmholtz Visiting Researcher Grant

(2023-08)

- https://www.helmholtz-hida.de/en/new-horizons/hida-visiting-program/

- 1-3 months visiting grant for Ph.D. students and postdocs in one of 18 Helmholtz centers in Germany.

- Deadline: 16 August 2023 and will end on 15 October 2023.

- CISPA - Helmholtz Center for Information Security https://cispa.de/en/people

Where to find potential collaborators or postdoc positions

(2023-08)

Each year, the Australian Research Council releases the outcomes of funded/accepted projects from leading researchers and professors across Australian Universities. This information can be a great resource for finding collaborations, PhD positions, and research job opportunities.

For example, if you’re interested in the topic of Trust and Safety in Machine Learning, you can find several professors who have recently received funding to work on related topics.

Link to the ARC data: https://lnkd.in/gge2FJR3

Micromouse Competition

(2023-07)

- First introduced by Claude Shannon in the 1950s.

- At the beginning, it was just a simple maze-solving competition. However, after 50 years of growing and competing, it has become a very competitive event with many different categories: speed, efficiency, size. Along the way, many great ideas have been introduced and applied to the competition. It involves many different fields: mechanical, electrical, software, and AI, all in just a small robot.

- The Fosbury Flop in high jump. When everyone uses the same jump technique, performance saturates. Then Fosbury introduced a new technique (the backward flop) that no one had thought of before, and it became the new standard (even named after him). This phenomenon also happens in the Micromouse competition.

- The two most important game-changing ideas in the history of the Micromouse competition: the ability to move diagonally, and using a fan (vacuum) to suck the mouse onto the track so that it can move faster, as in a racing car.

Reference:

- The Fastest Maze-Solving Competition On Earth by Veritasium.

- The Fosbury Flop—A Game-Changing Technique

Trustworthy Machine Learning

Who is behind the wheel?

The wheel here represents decision-making.

The closest analogy is Tesla’s autopilot system — a machine that takes control of the car. But decision-making driven by AI doesn’t stop at self-driving cars. In many cases, we think humans are still steering, when in fact, AI and machine learning systems are silently guiding our choices.

Examples are everywhere in daily life:

- Shopping on Amazon: We only see products that the recommendation system decides to show us.

- Searching on Google: What we see is filtered, ranked, and prioritized by an algorithm.

- Navigating from A to B: The route we take is suggested by a GPS system trained on massive datasets.

In short, AI is no longer just assisting — it’s shaping our decisions. Only a few domains, such as a doctor’s diagnosis or a judge’s ruling, still appear fully under human control. But even those are slowly integrating AI support. As time passes, more decisions will be made — or at least strongly influenced — by machines.

If AI Is Behind the Wheel, Can We Trust It?

Today, most driver-assistance systems in consumer cars, including Tesla’s Autopilot, are still Level 2 on the automation scale, meaning a human must remain alert and ready to take over. Likewise, doctors still make the final call even when aided by diagnostic AI. In such life-and-death scenarios, trust is crucial.

Yet, for less critical tasks — like buying a product, searching online, or following GPS directions — we already trust AI implicitly. We rarely question the output of these systems. So, why do we trust them?

- Convenience and benefit: AI makes our lives easier. We’re often willing to trade privacy for comfort — like when using voice assistants.

- Lack of alternatives: Paper maps are obsolete. If no one learns to drive manually, future drivers may have no choice but to rely entirely on automation.

- Brand reputation: Systems built by major companies such as Google, Amazon, or Meta appear credible, which fosters trust.

- Transparency (or the illusion of it): We assume we understand how these systems work — though, in reality, we rarely do.

Because of these reasons, users are often forced to trust AI systems. Meanwhile, developers have little incentive to make them truly trustworthy.

Building robust and interpretable systems is difficult, expensive, and can even reduce performance on standard benchmarks. This explains why topics like Adversarial Machine Learning remain more academic than industrial.

So, Why Bother Building Trustworthy Systems?

Two major reasons are now pushing the industry toward trustworthiness:

- Public awareness: After repeated incidents involving data leaks, bias, or misinformation, users are becoming more conscious of privacy and fairness.

- Competition and choice: As alternatives emerge (e.g., Bing or DuckDuckGo challenging Google), users can choose the platform they trust most — giving companies an incentive to prioritize ethical AI practices.

Ultimately, trust becomes a competitive advantage.

So yes, building trustworthy AI is difficult — but it’s becoming essential.

What Is Trustworthy Machine Learning?

What makes a person trustworthy? Even that’s not an easy question to answer — and the same applies to machine learning.

A common way to define Trustworthy Machine Learning (TML) is through its core properties:

- Explainability – The model’s behavior can be understood and interpreted.

- Fairness – The system avoids bias and discrimination.

- Privacy preservation – Data is handled safely and responsibly.

- Causality – The model’s reasoning reflects cause-and-effect, not mere correlation.

- Robustness – The system maintains reliability under uncertainty or attack.

These dimensions form the foundation of the Trustworthy ML Initiative.

Another perspective comes from ML safety research, such as Unsolved Problems in ML Safety, which narrows trustworthiness to four key areas:

- Robustness – Stability under distribution shifts or adversarial inputs.

- Monitoring – Detecting when models behave unexpectedly.

- Alignment – Ensuring the model’s goals align with human intent.

- Systemic safety – Preventing large-scale or emergent risks in interconnected systems.

Safety Checker - The simple and efficient way to deal with NSFW content

- Topic: Generative Models

- Date: 2024-12-18

- Description:

- I wrote a blog post about the Safety Checker in Stable Diffusion here: link

- While Machine Unlearning is an interesting and fascinating research topic, I think that from a business perspective, updating the Safety Checker is more practical and useful when the model is already deployed and we need to deal with new NSFW queries.

- More specifically, the (current) Safety Checker is just an alignment model: a pair of text and image encoders measures the similarity between the keywords that we want to ban/filter and the generated image. Therefore, it can be updated very easily when we have a new set of keywords to ban/filter.

- We can also think about a new research direction starting from this setting: how to make the Safety Checker more efficient and effective.
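To show why such a checker is cheap to update, here is a toy sketch of the idea. The class, the per-concept thresholds, and the 3-dimensional vectors are all hypothetical stand-ins of mine; in a real system the vectors would come from the text and image encoders, which are not modeled here.

```python
import numpy as np

def cosine_similarity(image_embed, concept_embeds):
    """Cosine similarity between one image embedding and each concept embedding."""
    image_embed = image_embed / np.linalg.norm(image_embed)
    concept_embeds = concept_embeds / np.linalg.norm(concept_embeds, axis=1, keepdims=True)
    return concept_embeds @ image_embed

class KeywordSafetyChecker:
    """Flags an image if it is too similar to any banned keyword embedding."""

    def __init__(self):
        self.names, self.embeds, self.thresholds = [], [], []

    def add_concept(self, name, text_embed, threshold):
        # Updating the checker is just appending one more row: no retraining.
        self.names.append(name)
        self.embeds.append(np.asarray(text_embed, dtype=np.float64))
        self.thresholds.append(threshold)

    def flagged(self, image_embed):
        if not self.names:
            return []
        sims = cosine_similarity(np.asarray(image_embed, dtype=np.float64), np.stack(self.embeds))
        return [n for n, s, t in zip(self.names, sims, self.thresholds) if s > t]

checker = KeywordSafetyChecker()
checker.add_concept("concept_a", [1.0, 0.0, 0.0], threshold=0.8)
first = checker.flagged([0.9, 0.1, 0.0])          # close to concept_a
missed = checker.flagged([0.0, 1.0, 0.1])         # not covered yet
checker.add_concept("concept_b", [0.0, 1.0, 0.0], threshold=0.5)
second = checker.flagged([0.0, 1.0, 0.1])         # covered after the update
```

Compared with unlearning, which changes the generator’s weights, the update here touches only a small lookup table outside the model, so it can be shipped immediately after a new kind of query shows up.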

Football Minimap Prediction

- Topic: Business

- Date: 2018

- Description:

- This is a very old idea from 2018, when I was in Singapore and was first exposed to GANs. Back then, I was so excited about the Pix2Pix model and its ability to learn the transformation from domain A to domain B that I thought it could be applied to this problem.

Motivation

Currently, football TV audiences do not know the location of players who are not in the current camera frame. Therefore, they might not have the full experience that spectators get watching live at the stadium. There are some simple solutions to this problem, such as using another camera to shoot the entire football field, or using statistical data from chips attached to the players. However, from the perspective of a computer vision engineer, I propose one more solution (it might not be a good choice, but just for fun :)), in which I use a GAN model to create a minimap from a camera frame, as in the FIFA or PES football video games.

In the case of players in the frame, I use the GAN model (image-to-image translation) to learn the transformation from the player’s position in the frame to the position of the player in the minimap.

In the case of players who are not in the current frame, I use [?] to predict the current positions of the players from their previous positions and trajectories (or you might hope that the GAN is a one-size-fits-all model that can learn not only the current frame but also the invisible players).

Data Collection

Because real matches on TV don’t have a minimap, I use alternative sources: FIFA18 and PES18 videos on YouTube. Then I do some preprocessing to collect and clean the data.

Step 1: Keep only the frames that contain the minimap. Those videos contain not only normal frames (which have a minimap) but also spotlights, reviews, or something else. Therefore, I have to manually select the periods of time in which there are only normal frames. I sample at a rate of 2 frames per second.

Step 2: Cut the minimap from the full frame and replace it with a random noise window. To avoid overfitting (the model could otherwise simply copy the minimap that is already in the full frame), I replace the minimap with random noise.

Step 3: Remove bad samples (those whose minimap is overlapped by a line or a player).
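Step 2 above can be sketched roughly as follows. This is only an illustration under assumptions: the frame is a grayscale image stored as a list of rows, the minimap location (`top`, `left`, `height`, `width`) is known and fixed for a given video, and the function name `split_frame_and_minimap` is made up for this note.

```python
import random


def split_frame_and_minimap(frame, top, left, height, width, seed=None):
    """Return (frame_with_noise, minimap) for one grayscale frame.

    frame: list of rows, each a list of pixel intensities in 0..255.
    The minimap rectangle is copied out as the target, then overwritten
    with uniform random noise in a copy of the frame (the source).
    """
    rng = random.Random(seed)

    # Target image: the minimap region cut out of the frame.
    minimap = [row[left:left + width] for row in frame[top:top + height]]

    # Source image: a copy of the frame with the minimap hidden by noise,
    # so the model cannot read the answer directly from its input.
    noised = [list(row) for row in frame]
    for r in range(top, top + height):
        for c in range(left, left + width):
            noised[r][c] = rng.randint(0, 255)

    return noised, minimap


# Tiny 4x6 example: background 10, minimap region filled with 200.
frame = [[10] * 6 for _ in range(4)]
for r in range(2, 4):
    for c in range(3, 6):
        frame[r][c] = 200

source, target = split_frame_and_minimap(frame, top=2, left=3, height=2, width=3, seed=0)
print(target)
```

Each (source, target) pair produced this way is one training sample for an image-to-image model.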

After the 3 steps above, I have two sets: the camera frame set (with the minimap replaced by noise) and the minimap set. I choose the camera frame set as the Source and the minimap set as the Target for the Pix2Pix model. (You can swap the two and enjoy an interesting result, in which we render a camera frame from a minimap.) Then I do some further preprocessing to get a better dataset for training.

The football video game knows the locations of all players in the game, not only those in the camera frame, so it can create a complete minimap with the locations of all players. TV audiences who have only the camera frame cannot do that. They can only infer the positions of players who are in the camera frame and cannot infer the remaining players. Based on this intuition, I improve the model by cropping the active window in the minimap as follows:

- Localize the position of the ball (usually yellow in the minimap)

- Crop roughly 1/2 of the minimap width (1/4 to the left of the ball and 1/4 to the right of the ball)

- Keep the height
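The three cropping rules above could look something like the sketch below. The yellow test (`min_rg`, `max_b`), the clamping of the window at the minimap borders, and the function names are my assumptions for illustration; the minimap is an RGB image stored as a list of rows of `(r, g, b)` tuples.

```python
def is_yellow(pixel, min_rg=180, max_b=100):
    """Rough yellow test: strong red and green, weak blue (assumed thresholds)."""
    r, g, b = pixel
    return r >= min_rg and g >= min_rg and b <= max_b


def find_ball_column(minimap):
    """Mean column index of the yellow pixels, or None if there are none."""
    columns = [c for row in minimap for c, px in enumerate(row) if is_yellow(px)]
    if not columns:
        return None
    return sum(columns) // len(columns)


def crop_active_window(minimap):
    """Crop about half the width around the ball and keep the full height."""
    width = len(minimap[0])
    ball_col = find_ball_column(minimap)
    if ball_col is None:
        # No ball found: return an unchanged copy rather than guessing.
        return [list(row) for row in minimap]
    quarter = width // 4
    # Clamp so the window keeps a constant width near the left/right borders.
    left = min(max(ball_col - quarter, 0), width - 2 * quarter)
    return [row[left:left + 2 * quarter] for row in minimap]


# 3x16 green pitch with a yellow ball at row 1, column 10.
GREEN, YELLOW = (0, 128, 0), (255, 255, 0)
minimap = [[GREEN] * 16 for _ in range(3)]
minimap[1][10] = YELLOW
window = crop_active_window(minimap)
print(len(window), len(window[0]))
```

Keeping the window width constant means every cropped target has the same shape, which is convenient for batching during training.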

I realized that the audience changes in each frame, which might add a lot of noise for the model and make training more difficult. Moreover, the players are really small in the whole frame, and the grass is not consistent across frames or games; therefore, similar to the audience, it might add a lot of noise for the model. Therefore, I designed a filter to remove them from the camera frame using the Color Thresholder app in MATLAB.

Difficulties:

- The Pix2Pix model has demonstrated its ability to learn the transformation from domain A to domain B, as shown in the paper: day to night, BW to color, aerial to map. However, in those cases, the two domains are not too different. In this case, we need a transformation between two completely different domains. It also needs 2D-to-2D matching at an abstract level (the model needs to know that each player corresponds to a circle in the minimap). Therefore, it will be very challenging to learn.

- The difference between a Video Game Frame and a Real Camera Frame.

- The dataset is too small and noisy.

I think this problem is difficult even for humans, but it is worth trying to see what the GAN can do. Revised in 2025: I think the idea of generating a minimap is still interesting and might be more realistic with the current state of Generative Models.

Some other silly ideas

Chrome Extension Ideas

- Topic: Chrome Extension, Business

- Date: 2025-06-01

- Description:

- I recently learned about how to build a Chrome Extension and found that there are a lot of interesting ideas that can be implemented.

- Idea 1: A Price Tracker, so that users can track the price of a product on (any) e-commerce website. When the price is lower than the user’s desired price, the extension will notify the user.

- Idea 2: A Scratch Copilot

- Idea 3: Arxiv Review and Comment Sharing. It turns out this already exists as Alphaxiv.

Generating Reading Comprehension Questions for Primary School Students

- Topic: Business

- Date: 2025-03-12

- Description:

- I have a year-3 son and recently, he needs to prepare for his NAPLAN test at school.

- The free NAPLAN practice samples are very limited: they are only available for the years 2012-2016 and can be finished in just several hours.

- We, a typical Asian family, want our son to practice more and prepare better for his test.

- I think that, with the current state of LLMs, we can leverage a model to generate quite a lot of similar questions to practice!

Generating Coloring Book/Sheet for Kids

- Topic: Business

- Date: 2025-03

- Description:

- Given a picture (e.g., of a kid), generate a personalized coloring book/sheet for the kid with the content from a prompt, personalized with the kid’s face from the picture.

Coffee Car - Ice Cream Car - A mobile app to track the food truck

- Topic: Business

- Date: 2025-03

- Description:

- My wife told me that at her new company, there is a coffee car that usually comes at a specific time each week to sell coffee. Employees usually need to be informed by HR via email - “The coffee car is coming today, bla bla bla”.

- I think we can have a mobile app that serves both sides: the coffee/ice cream truck and the customers.

- The coffee/ice cream truck can post their schedule, menu, and even their real-time location.

- The customers can see the menu and the truck’s schedule, and can order and pay for the coffee/ice cream.

- The truck can also send a notification to the customers when it arrives at the company.

Generating Linkedin profile picture with custom badges

- Topic: Business

- Date: 2025-03

- Description:

- Currently, Linkedin provides two types of badges: #OpentoWork and #Hiring.

- But from my perspective, types of badges should be more diverse and more customizable. For example, PhD students might want #OpentoIntern while Master’s students might want #SeekingPhDScholarship, etc.

- I think we can have a website that allows users to generate a Linkedin profile picture with custom badges.

TripleZero - Emergency Simulation for Kids training

- Topic: Business

- Date: 2025-03

- Description:

- A mobile app/game for kids to learn about emergency situations. I found it would be a good idea after my son told me about his first aid training at school.

- We - or the kids - don’t know how to react in an emergency situation.

- The app will simulate a real emergency situation, and the kids will need to make decisions to save the people in that situation.

- The UI should be similar to the iPhone keyboard - but more colorful and cute - to attract kids.

- The app will utilize the OpenAI voice API to respond to the kids’ questions.

Waiting List - Price Drop Notification

- Topic: Business

- Date: 2019

- Description:

- A Chrome extension that allows users to add an item to a waiting list. The item can be from any website, not just Amazon or other shopping websites that already have a waiting list feature.

- When the price of the item drops, the extension will notify the user.

- The user can set a price drop threshold.

- The idea came after a talk with my wife about her wish to buy a dress: the price was too high, and she needed to check the website regularly to see if the price had dropped.
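The core notification rule is tiny. Below is a sketch of it, assuming the "price drop threshold" means a minimum fractional drop relative to the price when the item was added (it could just as well be a target price). `WatchedItem` and `should_notify` are hypothetical names, and a real extension would implement this in the browser and fetch `current_price` from the page.

```python
from dataclasses import dataclass


@dataclass
class WatchedItem:
    url: str
    price_when_added: float
    drop_threshold: float  # assumed meaning: 0.2 = notify after a 20% drop


def should_notify(item, current_price):
    """True once the price has dropped by at least the user's threshold."""
    if item.price_when_added <= 0:
        return False  # cannot compute a relative drop
    drop = (item.price_when_added - current_price) / item.price_when_added
    return drop >= item.drop_threshold


dress = WatchedItem(url="https://example.com/dress", price_when_added=100.0, drop_threshold=0.2)
print(should_notify(dress, 85.0))  # 15% drop
print(should_notify(dress, 75.0))  # 25% drop
```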

Melbourne Airport - Available Parking Spot

- Topic: Business

- Date: 2024

- Description:

- A camera system that can hang on light poles at the parking lot and monitor which parking spot is available.

- There is a screen or light - red or green - to indicate the availability of the parking spot.

- The idea came after I got frustrated trying to find a parking spot there - it took me more than 15 minutes to find one. The Melbourne Airport Value Parking is really big.

- I also found that many people complained about the same problem as me online.
